Feb 21, 2026

LLM API Pricing Comparison 2026: 30+ Models, Every Provider

Inference Research

Why Your LLM API Bill Is Higher Than It Should Be

Pick the wrong model and the same workload can cost more than two orders of magnitude more than it needs to. In this comparison, input prices run from $0.04 to $10.00 per million tokens and output prices from $0.10 to $40.00. It's the difference between a side project that stays affordable and a production system that quietly drains budget.

This LLM API pricing comparison covers 30+ models across every major provider type as of February 2026, including the category almost every other guide ignores entirely: open-source inference providers. Groq, Together AI, Fireworks AI, and inference.net serve the same powerful open-weight models — Llama 4, Mistral, DeepSeek, Qwen — at 50–90% lower cost than frontier APIs. Yet they're absent from virtually every competitor article on this topic.

That gap is worth closing. Whether you're evaluating GPT-5.2, Claude Opus 4.6 (released February 5, 2026), Gemini 3.1 Pro (released February 19, 2026), or a budget-first Llama variant, this guide gives you the complete picture with current pricing — not a filtered view of the market.

By the end, you'll know which model fits your workload, your quality bar, and your actual budget.

Read time: 18 minutes

---

TL;DR: Best LLM API by Use Case

If you're in a hurry, here's where we'd point you based on use case. Detailed pricing tables and full analysis follow — treat this as a starting point, not the final word.

| Use Case | Best Model | Provider | Input $/1M | Output $/1M | Reason |
|---|---|---|---|---|---|
| Best overall quality | Claude Opus 4.6 | Anthropic | $5.00 | $25.00 | Leads quality benchmarks on reasoning, coding, and long-context comprehension |
| Best budget / high-volume | Schematron-8B | inference.net | $0.04 | $0.10 | Lowest price in this comparison; purpose-built for classification, RAG, extraction |
| Fastest inference | Llama 4 Scout | Groq | $0.11 | $0.34 | Custom LPU hardware; best tokens/second; sub-second UX |
| Best for coding | GPT-5.2 | OpenAI | $1.75 | $14.00 | Near the top of coding benchmarks at well below Opus pricing; strongest ecosystem (function calling, fine-tuning) |
| Best reasoning / thinking | o3 | OpenAI | $10.00 | $40.00 | Extended chain-of-thought; strongest option in this guide for complex logic and math |
| Best open-source alternative to GPT-5.2 | DeepSeek V3.2 | inference.net | $0.14 | $0.28 | ~85–90% of GPT-5.2 quality at ~8% of its input price; also on Together AI at $0.18/$0.18 |

No single model wins every situation. Claude Opus 4.6 leads quality benchmarks but costs 35× more on input than DeepSeek V3.2 — and 125× more than inference.net's Schematron-8B. DeepSeek V3.2 is the standout value play — near-frontier reasoning at commodity prices. For anything high-volume and routine, open-source models via inference.net or Groq save serious money without much quality degradation on most tasks.

The sections below back up every recommendation with numbers.

---

Frontier Model Pricing: OpenAI, Anthropic, Google, and Mistral

Frontier models are developed and served directly by the labs. They're where most developers start — and for good reason. OpenAI, Anthropic, Google, and Mistral invest heavily in training, safety, and evaluation infrastructure, and it shows in benchmark results. That investment comes with a price tag that varies more than most developers expect.

The table below covers current pricing for all major frontier models, including the most recent releases as of February 2026.

| Provider | Model | Input $/1M | Output $/1M | Context Window | Best For | Released |
|---|---|---|---|---|---|---|
| OpenAI | GPT-5.2 | $1.75 | $14.00 | 128K | All-around flagship; strongest ecosystem | 2025 |
| OpenAI | GPT-4.1 | $2.00 | $8.00 | 1M | Strong general capability; better output pricing | 2025 |
| OpenAI | GPT-4.1 mini | $0.40 | $1.60 | 1M | Cost-efficient general tasks at scale | 2025 |
| OpenAI | o3 | $10.00 | $40.00 | 128K | Complex reasoning, math, advanced coding | 2025 |
| OpenAI | o3-mini | $1.10 | $4.40 | 128K | Budget reasoning tier; simpler logic tasks | 2025 |
| Anthropic | Claude Opus 4.6 | $5.00 | $25.00 | 200K | Maximum quality; nuanced reasoning, long-context | Feb 5 2026 |
| Anthropic | Claude Sonnet 4.x | $3.00 | $15.00 | 200K | Coding, writing, instruction-following | 2025 |
| Anthropic | Claude Haiku 4.x | $0.80 | $4.00 | 200K | Fast responses; summarization, extraction | 2025 |
| Google | Gemini 3.1 Pro | $2.00 | $12.00 | 1M | Best output price in flagship tier; multimodal | Feb 19 2026 |
| Google | Gemini Flash 3.1 | $0.35 | $1.05 | 1M | Fast inference; high-volume multimodal workloads | 2025 |
| Google | Gemini 3.1 Nano | $0.10 | $0.40 | 32K | Ultra-budget; on-device-class tasks | 2025 |
| Mistral | Mistral Large | $2.00 | $6.00 | 128K | European data residency; strong coding | 2025 |
| Mistral | Mistral Small | $0.20 | $0.60 | 32K | Cost-efficient general tasks | 2025 |
| Mistral | Codestral | $0.30 | $0.90 | 32K | Code completion; in-editor assistants | 2025 |

What the Numbers Tell You

Claude Opus 4.6 is the most expensive non-reasoning model in this comparison at $5.00 per million input tokens and $25.00 per million output tokens; only o3 costs more. It leads on quality benchmarks, particularly reasoning, coding, and long-context comprehension. If you need the absolute ceiling of model capability for a mission-critical, customer-facing product, it earns its price. For most other workloads, it's overkill.

GPT-5.2 offers the strongest quality-to-cost ratio among flagship models. At $1.75/$14.00 per million tokens, it costs 65% less than Claude Opus 4.6 on input tokens while matching or exceeding it on many popular benchmarks. OpenAI's ecosystem advantages — mature function calling, embeddings, batch mode, fine-tuning pipeline — add practical value beyond raw model quality.

Gemini 3.1 Pro, released February 19, 2026, has the lowest output price in the flagship tier. At $2.00/$12.00 per million tokens, it costs slightly more than GPT-5.2 on input and slightly less on output, while staying directly competitive with it on quality metrics. Google's multimodal capabilities make it a natural choice for workloads that mix text with images, video, or audio.

Sub-tier models are a legitimate optimization, not a compromise. GPT-4.1 mini, Claude Haiku 4.x, and Gemini Flash 3.1 cost roughly 4–12× less than their flagship counterparts, depending on the pair and on whether you compare input or output rates. For tasks where a flagship scores 95 and a mini scores 88, the mini often wins on cost-adjusted terms. The mistake is defaulting to flagship models when a sub-tier would do, not the sub-tier models themselves.

Context window size affects real costs significantly. The larger windows in this table (128K to 1M tokens) let you process longer documents, but a single call can get expensive when your system prompt and conversation history are large. Prompt caching, covered under How LLM API Pricing Works below, is essential for any workflow that reuses long context repeatedly.

---

Open-Source Inference Providers: Same Models, a Fraction of the Cost

Most LLM API pricing comparisons have the same blind spot: they treat OpenAI, Anthropic, and Google as the entire market.

There's a parallel ecosystem — Groq, Together AI, Fireworks AI, and inference.net — that hosts the same open-weight models at dramatically lower prices. These providers don't bear model R&D costs, so they compete on infrastructure efficiency and pass those savings to developers. The models they serve aren't compromises. Meta's Llama 4, DeepSeek V3.2, Mistral, and Qwen 2.5 are legitimate competitors to frontier models across a wide range of production tasks.

Few existing guides cover this category, so developers who rely on them often pay more than they need to.

The Four Providers

Groq runs on custom LPU (Language Processing Unit) hardware purpose-built for inference throughput. Where Groq wins isn't always cost-per-token — it's speed. For latency-sensitive applications — live user interactions, real-time voice pipelines, streaming interfaces — Groq's hardware advantage delivers response times that GPU-based providers struggle to match. They also offer a generous free tier suited for prototyping and load testing.

Together AI has the broadest model library in this group, with over 100 open models accessible through a single API. Their platform supports batch inference and fine-tuning, making them a natural choice when you're still evaluating which model fits your task before committing to production. Serverless pricing is competitive; reserved-instance pricing goes lower for predictable workloads.

Fireworks AI positions itself as production-grade infrastructure for open-source inference. SLA commitments and uptime guarantees sit closer to enterprise providers than to experimental platforms. Pricing is competitive, particularly for Llama and Mistral variants. A good fit when you want open-source cost savings but can't afford deployment headaches.

inference.net has the most aggressive pricing in this comparison. Schematron-8B at $0.04/$0.10 per million tokens is the lowest-cost model in this guide, and inference.net's input prices for Llama 4 and DeepSeek V3.2 undercut every other provider in the table below (though several providers charge less for DeepSeek V3.2 output). If cost-per-token is the primary constraint, this is the starting point.

Pricing Across Providers: Popular Open-Weight Models

| Model | Params | Groq In/Out | Together AI In/Out | Fireworks AI In/Out | inference.net In/Out | vs GPT-5.2 input |

|---|---|---|---|---|---|---|

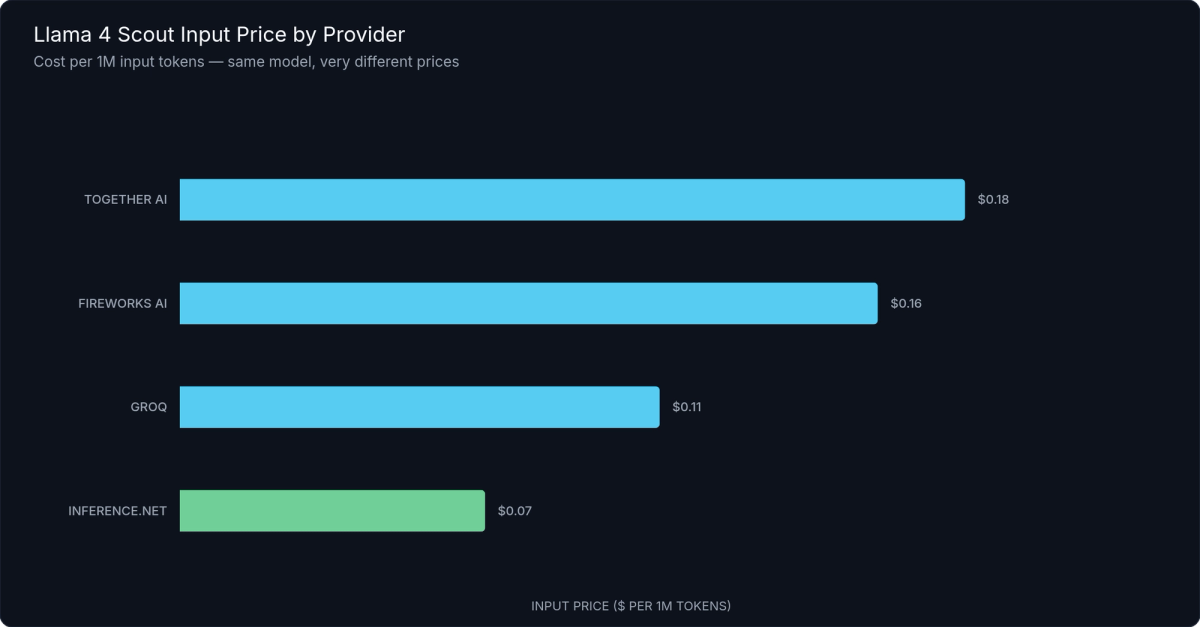

| Llama 4 Scout | 17B | $0.11 / $0.34 | $0.18 / $0.18 | $0.16 / $0.16 | $0.08 / $0.15 | ~95% cheaper |

| Llama 4 Maverick | 70B | $0.50 / $0.50 | $0.90 / $0.90 | $0.80 / $0.80 | $0.35 / $0.40 | ~80% cheaper |

| DeepSeek V3.2 | 671B | $0.27 / $0.27 | $0.18 / $0.18 | $0.22 / $0.22 | $0.14 / $0.28 | ~92% cheaper |

| Mistral Small | 22B | $0.20 / $0.20 | $0.20 / $0.60 | $0.16 / $0.16 | $0.10 / $0.20 | ~94% cheaper |

| Schematron-8B | 8B | — | — | — | $0.04 / $0.10 | ~98% cheaper |

Figure 1: Llama 4 Scout Input Price Across Inference Providers — inference.net leads with the lowest per-token cost at $0.08/1M input tokens

What the Numbers Tell You

DeepSeek V3.2 is about 92% cheaper than GPT-5.2 on input and 98% cheaper on output ($0.14/$0.28 per million tokens versus $1.75/$14.00). On benchmark evaluations, DeepSeek V3.2 performs at roughly 85–90% of GPT-5.2 quality on knowledge retrieval, coding, and reasoning tasks. For many production workloads, that quality gap goes unnoticed by end users.

inference.net posts the lowest input price for every model in the table above, though not always the lowest output price: DeepSeek V3.2 and Mistral Small output tokens are cheaper elsewhere. Schematron-8B at $0.04/$0.10 is purpose-built for high-volume, cost-first workloads: classification, extraction, embedding generation, and RAG retrieval. Llama 4 Scout via inference.net is similarly priced for tasks that need more headroom.

Groq wins on throughput, not necessarily price. For latency-sensitive use cases — live chatbots, voice applications, developer tools with sub-second UX expectations — Groq's LPU advantage is measurable and worth the modest premium over the cheapest per-token options.

Together AI is best for model experimentation. One API key gives you access to over 100 models. Once you've identified your production candidate, you can price-shop Fireworks AI, inference.net, or Groq for the same model. Use Together AI to decide, then optimize on price.

Switching a production workload from GPT-5.2 to an equivalent open-weight model via inference.net can reduce monthly API spend by 80–95%. For a team running $10,000 per month in API costs, that's $8,000–$9,500 back in budget — before any other optimization.

---

How LLM API Pricing Works: Tokens, Context Windows, and Hidden Costs

LLM API pricing is based on the number of tokens processed — both the text you send (input tokens) and the text the model generates (output tokens). Most providers charge separately for input and output, with output typically costing 4–10× more due to higher compute requirements. Understanding the mechanics is what separates teams that accurately forecast AI costs from those that get surprised on their monthly bill.

Tokens: The Unit of Billing

A token is roughly 0.75 words in English — about four characters. A typical page of prose runs around 750 tokens. The ratio shifts for code (more tokens per word due to symbols and whitespace) and non-Latin scripts (often 1–2 characters per token, making them measurably more expensive to process at scale).

You don't pay for the tokens you think you're sending — you pay for the tokens the model actually processes. That distinction becomes significant once conversation history starts accumulating across turns.

Input vs. Output Asymmetry

Output tokens require more GPU compute to generate than input tokens require to process. This is why output pricing is consistently higher: often 4× for standard models, up to 10× for premium tiers. Claude Opus 4.6's $5.00/$25.00 pricing represents a 5× multiplier; GPT-5.2's $1.75/$14.00 is 8×.

This asymmetry has practical consequences. Summarization, extraction, and classification tasks produce short outputs relative to their inputs — they're relatively affordable. Long-form generation, detailed analysis, and agentic workflows with extended responses are where output costs concentrate. Designing for shorter outputs where possible rarely gets the attention it deserves as a cost lever.

Batch discounts from OpenAI and Anthropic cut costs by up to 50% by queuing requests for off-peak processing. If your workload isn't latency-sensitive — background analysis, overnight data enrichment, scheduled jobs — batching is the highest-ROI single configuration change available.
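To put numbers on both levers, here is a minimal sketch using GPT-5.2's list prices from this guide. The token counts are illustrative (the summarization case matches the document-summarization workload in the cost table), and it assumes a flat 50% batch discount applied to both input and output tokens; check each provider's current batch terms before relying on that.

```python
from typing import Tuple

# USD per 1M tokens (input, output), taken from this guide's tables
GPT_5_2: Tuple[float, float] = (1.75, 14.00)

# Assumption: batch jobs get a flat 50% discount on input and output alike
BATCH_DISCOUNT = 0.50


def call_cost(input_tokens: int, output_tokens: int, prices: Tuple[float, float]) -> float:
    """USD cost of one call at list prices."""
    in_price, out_price = prices
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


def output_share(input_tokens: int, output_tokens: int, prices: Tuple[float, float]) -> float:
    """Fraction of a call's cost that comes from output tokens."""
    out_cost = output_tokens * prices[1] / 1_000_000
    return out_cost / call_cost(input_tokens, output_tokens, prices)


def monthly_cost(calls: int, input_tokens: int, output_tokens: int,
                 prices: Tuple[float, float], batched: bool = False) -> float:
    """Monthly USD for a fixed-size call, optionally at the assumed batch discount."""
    cost = calls * call_cost(input_tokens, output_tokens, prices)
    return cost * (1 - BATCH_DISCOUNT) if batched else cost


if __name__ == "__main__":
    # Summarization: long input, short output
    print(f"summarization output share: {output_share(5_000, 500, GPT_5_2):.0%}")
    # Long-form generation: short prompt, long answer (hypothetical counts)
    print(f"long-form output share:     {output_share(500, 2_000, GPT_5_2):.0%}")
    realtime = monthly_cost(10_000, 5_000, 500, GPT_5_2)
    batched = monthly_cost(10_000, 5_000, 500, GPT_5_2, batched=True)
    print(f"10K summaries/month: ${realtime:,.2f} real-time vs ${batched:,.2f} batched")
```

Output accounts for about 44% of the summarization bill but about 97% of the long-form one, which is why trimming response length matters most for generative workloads.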

Context Window Costs and Prompt Caching

Every token in your context window — system prompt, conversation history, retrieved documents — is billed as input on every API call. A 10,000-token system prompt at $1.75/1M costs $0.0175 per call. At 100,000 calls per month, that's $1,750 in system prompt costs alone, before any user message or response.

Prompt caching eliminates most of this. Both OpenAI and Anthropic support caching for repeated context segments, reducing cached-token costs by 80–90%. For RAG-heavy applications, customer support bots with static system prompts, or any multi-turn workflow, enabling prompt caching is typically the highest-return change you can make — and it usually takes only a few lines of code.
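The 10,000-token system prompt example above can be extended into a rough caching model. This is a sketch under stated assumptions, not any provider's billing formula: cached tokens are billed at 90% off the input rate (the top of the 80–90% range above), 95% of calls hit the cache (a hypothetical rate), and cache-write surcharges or cache expiry, which some providers apply, are ignored.

```python
def repeated_prompt_cost(prompt_tokens: int, calls: int, input_price_per_m: float,
                         cache_discount: float = 0.0, hit_rate: float = 0.0) -> float:
    """Monthly USD spent re-sending a fixed prompt prefix.

    cache_discount: assumed fraction knocked off the input rate for cached tokens.
    hit_rate: assumed share of calls whose prefix is served from cache.
    Cache-write surcharges and cache expiry are not modeled.
    """
    full_rate = input_price_per_m / 1_000_000
    cached_rate = full_rate * (1 - cache_discount)
    hits = calls * hit_rate
    misses = calls - hits
    return prompt_tokens * (hits * cached_rate + misses * full_rate)


if __name__ == "__main__":
    # 10,000-token system prompt, 100,000 calls/month, GPT-5.2 input rate
    no_cache = repeated_prompt_cost(10_000, 100_000, 1.75)
    cached = repeated_prompt_cost(10_000, 100_000, 1.75, cache_discount=0.90, hit_rate=0.95)
    print(f"without caching: ${no_cache:,.2f}/month")
    print(f"with caching:    ${cached:,.2f}/month")
```

Under these assumptions the $1,750 monthly system-prompt bill drops to about $254; the misses, billed at full price, now make up roughly a third of what remains.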

The Four Hidden Cost Sources Most Teams Miss

- Conversation history grows silently. Each turn in a multi-turn chat appends to the context sent on the next call. A 20-turn conversation sends all 20 prior turns as input every time. Implement context pruning or rolling summarization for long sessions.

- Not enabling prompt caching. Repeated system prompts without caching means paying full input price every call.

- Not batching non-urgent requests. Async jobs don't need real-time responses, so batch them for the 50% discount OpenAI and Anthropic offer.

- Flagship models for routine tasks. Using GPT-5.2 for FAQ responses is paying first-class fares for a 15-minute flight.
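The conversation-history effect is easy to underestimate, so here is a minimal sketch that counts billed input tokens across a chat and compares full history against a simple rolling window (one form of the context pruning mentioned above). The per-turn token counts and the 4-turn window are hypothetical; rolling summarization would replace dropped turns with a short summary, which this sketch does not model.

```python
from typing import Optional


def cumulative_input_tokens(turns: int, exchange_tokens: int, message_tokens: int,
                            system_tokens: int = 0, window: Optional[int] = None) -> int:
    """Total input tokens billed across a multi-turn chat.

    Each call re-sends the system prompt, the prior exchanges (user message
    plus reply, `exchange_tokens` each), and the new user message.
    With window=None the full history is kept; otherwise only the most
    recent `window` exchanges are re-sent (hypothetical pruning policy).
    """
    total = 0
    for turn in range(turns):
        prior = turn if window is None else min(turn, window)
        total += system_tokens + prior * exchange_tokens + message_tokens
    return total


if __name__ == "__main__":
    full = cumulative_input_tokens(20, exchange_tokens=300, message_tokens=100, system_tokens=500)
    pruned = cumulative_input_tokens(20, exchange_tokens=300, message_tokens=100,
                                     system_tokens=500, window=4)
    print(f"20 turns, full history:    {full:,} input tokens")
    print(f"20 turns, last 4 exchanges: {pruned:,} input tokens")
    # Dollar impact per conversation at GPT-5.2's $1.75 per 1M input tokens
    print(f"per conversation: ${full * 1.75 / 1e6:.4f} vs ${pruned * 1.75 / 1e6:.4f}")
```

With full history, input grows roughly quadratically with the number of turns (69,000 tokens here); the window keeps growth linear (33,000 tokens) at the cost of forgetting older turns.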

---

Thinking Models and Extended Reasoning: What They Actually Cost

Thinking models — OpenAI's o3 and o3-mini, Claude's extended thinking mode, Gemini Flash 3.1 Thinking — generate internal chain-of-thought reasoning before producing a final response. This reasoning process genuinely improves performance on complex logic, mathematics, advanced coding, and multi-step planning.

The catch: thinking tokens are billed, and they're invisible.

The Invisible Token Problem

When you call o3 or enable Claude's extended thinking mode, the model generates thousands of reasoning tokens internally before writing its visible response. Those tokens are billed at the standard output token rate but don't appear in your final output. A short, confident-looking answer can have a 15,000-token reasoning chain behind it that you never see — but always pay for.

This is where developers often get surprised — especially when they've been estimating costs based on response length alone. The billing meter runs on the full reasoning process, not just the response.

| Model | Provider | Input $/1M | Output $/1M | Thinking Tokens Billed As | Max Context | Best For |
|---|---|---|---|---|---|---|
| o3 | OpenAI | $10.00 | $40.00 | Output rate (hidden in response) | 128K | Advanced math, complex code, multi-step logic |
| o3-mini | OpenAI | $1.10 | $4.40 | Output rate (hidden in response) | 128K | Budget reasoning; simpler logical problems |
| Claude Opus 4.6 (extended thinking) | Anthropic | $5.00 | $25.00 | Output rate (hidden in response) | 200K | Long-context reasoning; nuanced complex instructions |
| Gemini Flash 3.1 Thinking | Google | $0.35 | $1.05 | Output rate (hidden in response) | 1M | Fast reasoning at budget price; lighter planning tasks |
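Although the reasoning text is hidden, the token count is not: OpenAI's Chat Completions API reports it in the response's `usage` object, where `completion_tokens` already includes the reasoning tokens and `completion_tokens_details.reasoning_tokens` breaks them out. The sketch below assumes a usage record of that shape (the numbers are hypothetical) and compares the real bill with a naive estimate based on visible output only, at o3's prices from the table above. Other providers report thinking tokens under different field names.

```python
from typing import Any, Dict, Tuple

O3_PRICES: Tuple[float, float] = (10.00, 40.00)  # USD per 1M tokens (input, output)


def billed_vs_visible_cost(usage: Dict[str, Any], prices: Tuple[float, float]) -> Tuple[float, float]:
    """Return (billed_cost, naive_cost) in USD for one call.

    Assumes an OpenAI Chat Completions-style usage record, in which
    completion_tokens already includes the hidden reasoning tokens.
    """
    in_price, out_price = prices
    details = usage.get("completion_tokens_details") or {}
    reasoning = details.get("reasoning_tokens", 0)
    billed_output = usage["completion_tokens"]        # visible + reasoning
    visible_output = billed_output - reasoning        # what the user actually sees
    billed = (usage["prompt_tokens"] * in_price + billed_output * out_price) / 1_000_000
    naive = (usage["prompt_tokens"] * in_price + visible_output * out_price) / 1_000_000
    return billed, naive


# Hypothetical record: 3,000 prompt tokens, 2,000 visible answer tokens,
# 15,000 hidden reasoning tokens
example_usage = {
    "prompt_tokens": 3_000,
    "completion_tokens": 17_000,
    "completion_tokens_details": {"reasoning_tokens": 15_000},
}

if __name__ == "__main__":
    billed, naive = billed_vs_visible_cost(example_usage, O3_PRICES)
    print(f"billed: ${billed:.2f}  estimated from visible output: ${naive:.2f}")
```

In this example the call bills $0.71, while an estimate based on the visible answer comes to $0.11.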

When Thinking Models Are Worth It

Thinking models excel at tasks where standard models fail frequently — complex mathematical proofs, multi-constraint planning, advanced code generation, and logical reasoning across long chains of dependencies. The economic case is counterintuitive but real: if a standard model completes a hard task correctly 40% of the time and a thinking model completes it 95% of the time, the thinking model can be cheaper per successful outcome despite costing more per call.

A practical rule of thumb: if you're re-prompting a standard model three or more times to get a reliable answer on a specific task type, evaluate a thinking model. The retry cost often exceeds the thinking token premium — and developer time spent on retry logic has its own cost.
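The cost-per-successful-outcome argument can be checked with a few lines of arithmetic. This sketch assumes failures can be detected at no cost and retried independently, so the expected number of calls per success is 1 / success_rate. It uses the 40% and 95% success rates from the paragraph above, the 3,000-in / 2,000-out token shape of the reasoning-agent workload in this guide's cost table, and a hypothetical 8,000 reasoning tokens per thinking-model call.

```python
from typing import Tuple

# USD per 1M tokens (input, output), from this guide's tables
GPT_5_2: Tuple[float, float] = (1.75, 14.00)
O3_MINI: Tuple[float, float] = (1.10, 4.40)
O3: Tuple[float, float] = (10.00, 40.00)


def call_cost(input_tokens: int, output_tokens: int, prices: Tuple[float, float],
              reasoning_tokens: int = 0) -> float:
    """USD per call; hidden reasoning tokens are billed at the output rate."""
    in_price, out_price = prices
    return (input_tokens * in_price + (output_tokens + reasoning_tokens) * out_price) / 1_000_000


def cost_per_success(cost: float, success_rate: float) -> float:
    """Expected USD per correct answer when retrying until success.

    With independent attempts, the number of calls is geometric with mean 1 / p.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost / success_rate


if __name__ == "__main__":
    scenarios = {
        "GPT-5.2 (40% success)": cost_per_success(call_cost(3_000, 2_000, GPT_5_2), 0.40),
        "o3-mini (95% success)": cost_per_success(call_cost(3_000, 2_000, O3_MINI, 8_000), 0.95),
        "o3 (95% success)": cost_per_success(call_cost(3_000, 2_000, O3, 8_000), 0.95),
    }
    for name, cost in scenarios.items():
        print(f"{name:<24} ${cost:.4f} per successful task")
```

Under these assumptions o3-mini comes out cheaper per success than GPT-5.2 despite its hidden tokens, while o3 does not. Whether a thinking model pays off depends on its price as well as its success rate.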

When to Skip Thinking Models

For routine production tasks — customer support Q&A, text summarization, data extraction, intent classification, semantic search — standard models perform at 95%+ quality and thinking models just add cost.

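Switching from o3 to GPT-4.1 mini for a high-volume classification pipeline cuts list prices 25× on both input and output ($10.00/$40.00 versus $0.40/$1.60), and the savings grow well beyond that once o3's hidden reasoning tokens are counted. The goal is matching the model to the task's actual complexity requirements, not to the marketing.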

---

Real-World Cost Examples: What You'll Actually Pay

Per-token pricing is abstract until you apply it to actual workloads. Here are five representative production scenarios with cost estimates across model tiers. These numbers use the confirmed pricing from this guide and illustrate why model selection is the dominant cost variable.

| Workload | Volume/mo | Avg Tokens/Call | GPT-5.2/mo | GPT-4.1 mini/mo | inference.net Schematron-8B/mo |
|---|---|---|---|---|---|
| Customer support chatbot | 100K conversations | 500 in / 200 out | $368 | $52 | $4 |
| Document summarization | 10K documents | 5,000 in / 500 out | $158 | $28 | $3 |
| Code review assistant | 50K reviews | 2,000 in / 800 out | $735 | $104 | $8 |
| RAG / search augmentation | 1M queries | 1,500 in / 300 out | $6,825 | $1,080 | $90 |
| Reasoning agent | 5K complex tasks | 3,000 in / 2,000 out | $166 | $22 | <$2 |

Breaking Down the Numbers

Customer support chatbot at 100K conversations/month (500 input + 200 output tokens average): GPT-5.2 runs approximately $368/month. Running the same workload on inference.net costs roughly $4/month — a $364/month difference on a single application before any other optimization.

Document summarization pipeline at 10K documents/month (5,000 input + 500 output tokens): The input-heavy nature of this task concentrates cost on the input side. GPT-5.2 costs around $158/month; inference.net drops it to $3/month. For a team running 100K documents/month, those figures scale to roughly $1,575 versus $25.

RAG-augmented search at 1M queries/month (1,500 input + 300 output tokens): This is where scale transforms provider choice into a financial decision. GPT-5.2 totals approximately $6,825/month. inference.net at $0.04/$0.10 per million tokens brings the same query volume to $90/month. At that scale, provider selection outweighs every other cost optimization combined.

Reasoning agent at 5K complex tasks/month (3,000 input + 2,000 output tokens): This is where thinking models enter the comparison. Running o3 on complex reasoning tasks with extended thinking can push costs past $2,000/month for this volume. GPT-5.2 standard handles the same call count for around $166/month. DeepSeek V3.2 via inference.net drops that to under $5/month — appropriate when frontier-level reasoning isn't required for every task in the agent pipeline.
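The figures in the cost table can be reproduced with a few lines of arithmetic. This sketch assumes the inference.net column uses Schematron-8B's $0.04/$0.10 rates, which is what all five rows match, and it ignores caching, batching, and reasoning tokens.

```python
from typing import Dict, Tuple

# USD per 1M tokens (input, output), from this guide's tables
PRICES: Dict[str, Tuple[float, float]] = {
    "GPT-5.2": (1.75, 14.00),
    "GPT-4.1 mini": (0.40, 1.60),
    "Schematron-8B": (0.04, 0.10),
}

# Workload: (calls per month, input tokens per call, output tokens per call)
WORKLOADS: Dict[str, Tuple[int, int, int]] = {
    "Customer support chatbot": (100_000, 500, 200),
    "Document summarization": (10_000, 5_000, 500),
    "Code review assistant": (50_000, 2_000, 800),
    "RAG / search augmentation": (1_000_000, 1_500, 300),
    "Reasoning agent": (5_000, 3_000, 2_000),
}


def monthly_cost(calls: int, input_tokens: int, output_tokens: int,
                 prices: Tuple[float, float]) -> float:
    """Monthly USD at list prices for a fixed per-call token shape."""
    in_price, out_price = prices
    return calls * (input_tokens * in_price + output_tokens * out_price) / 1_000_000


if __name__ == "__main__":
    header = f"{'Workload':<28}" + "".join(f"{name:>15}" for name in PRICES)
    print(header)
    for workload, (calls, tin, tout) in WORKLOADS.items():
        cells = "".join(f"{'$' + format(monthly_cost(calls, tin, tout, p), ',.2f'):>15}"
                        for p in PRICES.values())
        print(f"{workload:<28}{cells}")
```

Swapping in your own call volumes and token shapes gives a first-pass budget before you write any integration code.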

Estimate Before You Build

Before committing to a model for production, estimate your costs using token counts from your actual prompt and response samples:

"""

LLM API Cost Estimator

Estimates monthly API costs across multiple model tiers

using tiktoken for accurate token counting.

"""

import tiktoken

from typing import Dict, Tuple

# Model pricing: (input_price_per_1M, output_price_per_1M)

MODEL_PRICING: Dict[str, Tuple[float, float]] = {

"claude-opus-4.6": (5.00, 25.00),

"gpt-5.2": (1.75, 14.00),

"gemini-3.1-pro": (2.00, 12.00),

"gpt-4.1": (2.00, 8.00),

"gpt-4.1-mini": (0.40, 1.60),

"deepseek-v3.2": (0.14, 0.28),

"mistral-small": (0.20, 0.60),

"llama-4-scout-groq": (0.11, 0.34),

"inference.net-llama4": (0.08, 0.15),

"inference.net-sch8b": (0.04, 0.10),

}

def count_tokens(text: str, model: str = "gpt-4") -> int:

"""Count tokens in text using tiktoken."""

try:

enc = tiktoken.encoding_for_model(model)

except KeyError:

enc = tiktoken.get_encoding("cl100k_base")

return len(enc.encode(text))

def estimate_cost(input_tokens: int, output_tokens: int, model: str) -> float:

"""Calculate cost in USD for a single API call."""

if model not in MODEL_PRICING:

raise ValueError(f"Unknown model: {model}")

input_price, output_price = MODEL_PRICING[model]

return (input_tokens * input_price + output_tokens * output_price) / 1_000_000

def compare_models(sample_input: str, sample_output: str, calls_per_month: int) -> None:

"""Print cost comparison across all models for a given workload."""

input_tokens = count_tokens(sample_input)

output_tokens = count_tokens(sample_output)

print(f"\nToken counts — Input: {input_tokens:,} Output: {output_tokens:,}")

print(f"Monthly volume: {calls_per_month:,} calls\n")

print(f"{'Model':<30} {'Per Call':>10} {'Monthly':>12}")

print("-" * 55)

results = []

for model in MODEL_PRICING:

per_call = estimate_cost(input_tokens, output_tokens, model)

monthly = per_call * calls_per_month

results.append((model, per_call, monthly))

results.sort(key=lambda x: x[2])

for model, per_call, monthly in results:

print(f"{model:<30} ${per_call:>9.4f} ${monthly:>11,.2f}")

if __name__ == "__main__":

# Example: customer support chatbot

SYSTEM_PROMPT = """You are a helpful customer support agent.

Answer questions clearly and concisely."""

SAMPLE_USER_MSG = "How do I upgrade my subscription plan?"

SAMPLE_RESPONSE = """To upgrade your subscription, log in to your account dashboard

and navigate to Settings > Billing > Change Plan. Select your new plan and confirm.

The upgrade takes effect immediately and you'll be prorated for the remainder

of your billing cycle."""

CALLS_PER_MONTH = 100_000

print("=== LLM API Cost Estimator ===")

print("Workload: Customer Support Chatbot (100K calls/month)")

compare_models(SYSTEM_PROMPT + SAMPLE_USER_MSG, SAMPLE_RESPONSE, CALLS_PER_MONTH)The cost estimator uses tiktoken to count tokens from real text samples, calculates monthly projections across multiple model tiers, and outputs a side-by-side comparison. Running it against your actual system prompts and expected response distributions before choosing a provider catches the billing surprises that hit most teams in month two.

---

Cost vs. Performance: Which Models Deliver the Best Value

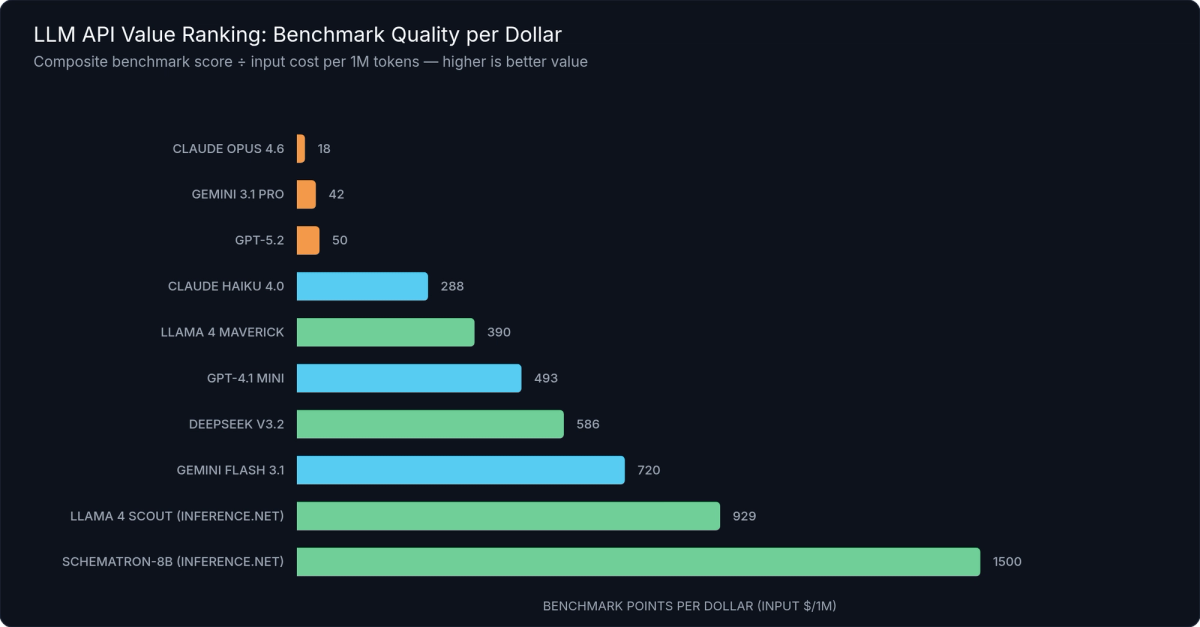

Cheapest isn't best value. Best value is quality per dollar — the benchmark performance you get for each dollar spent. When you normalize model capability against cost, the ranking changes dramatically from pure benchmark leaderboards.

Figure 2: LLM Models by Benchmark Score per Dollar — DeepSeek V3.2 and inference.net models lead on value; thinking models and Claude Opus rank lowest for non-reasoning tasks

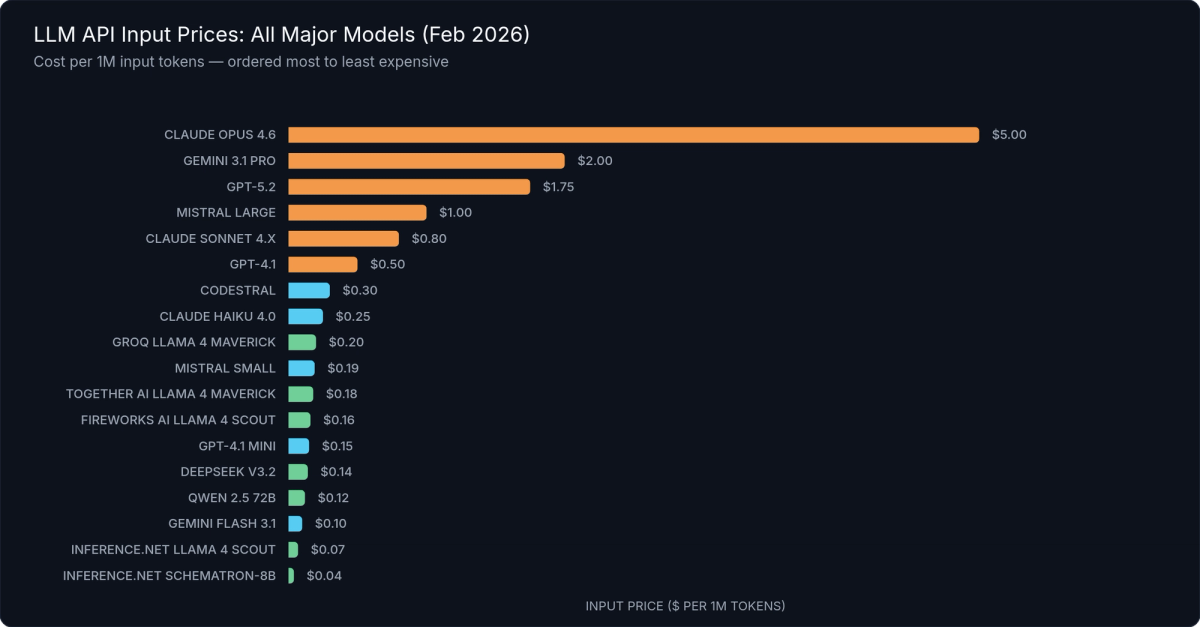

Figure 3: LLM API Input Prices per 1M Tokens — All 18 Models — ordered by price descending, from Claude Opus 4.6 ($5.00) to inference.net Schematron-8B ($0.04)

The Value Leaders

DeepSeek V3.2 is the value leader in this comparison. At $0.14/$0.28 per million tokens, it achieves approximately 85–90% of GPT-5.2's benchmark performance at roughly 8% of its input price and 2% of its output price. For knowledge retrieval, coding, summarization, and reasoning-lite tasks, the quality gap is frequently imperceptible in production. This is the first model to evaluate if you're currently on a frontier API and want to cut costs without a large drop in quality.

GPT-5.2 is the best value among frontier flagship models. It is the second-most-capable model in this comparison, yet it costs 65% less than Claude Opus 4.6 on input tokens and outperforms it on many popular benchmarks. If you need frontier-tier capability, GPT-5.2 is the rational default.

inference.net's smaller models punch above their weight for structured output tasks. Schematron-8B at $0.04/$0.10 and comparable compact models score strongly on task-specific benchmarks when prompts are well-engineered — particularly for classification, extraction, and RAG retrieval where precision on a narrow task matters more than general intelligence.

The Value Traps

Thinking models score poorly on the value metric for routine tasks. o3 tops hard reasoning benchmarks but delivers a ruinous cost-per-quality ratio for anything that doesn't require deep chain-of-thought. It's a specialized tool that too many teams use as a general one.

Claude Opus 4.6, for all its capability, struggles to justify its price on tasks where GPT-5.2 performs at 98% quality. Input tokens cost $5.00 versus GPT-5.2's $1.75, nearly 3× more, and output tokens cost $25.00 versus $14.00, about 1.8× more. Unless your evaluation harness consistently shows Claude Opus outperforming GPT-5.2 on your specific task, that premium rarely pays off.

A Simple Decision Framework

Three questions narrow the field for most workloads:

- Does this task require frontier-level quality? If accuracy on hard reasoning, complex code generation, or nuanced judgment calls is non-negotiable, evaluate GPT-5.2 or Claude Opus 4.6.

- Is this a reasoning-heavy task where standard models fail frequently? Evaluate o3 or Claude extended thinking and measure cost-per-successful-outcome, not cost-per-call.

- Is this a high-volume, routine task? Use inference.net or DeepSeek V3.2. For extraction, classification, RAG, and summarization at scale, the cost savings are hard to argue against.

Most production systems have workloads in all three buckets. Routing tasks to the appropriate model tier — frontier for hard cases, budget for routine — is how cost-efficient AI teams operate.
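The three questions above can be turned into a small router plus a blended-cost estimate. This is a minimal sketch, not a production router: the `Task` flags are hypothetical stand-ins for whatever signals your own evaluations provide, the tier-to-model mapping follows this guide's recommendations, and the 10% frontier / 90% budget split is a hypothetical traffic mix applied to the RAG workload from the cost table. Hidden reasoning tokens for the reasoning tier are not modeled.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Tier -> (model, input $/1M, output $/1M); models follow this guide's recommendations
TIERS: Dict[str, Tuple[str, float, float]] = {
    "frontier": ("gpt-5.2", 1.75, 14.00),
    "reasoning": ("o3", 10.00, 40.00),
    "budget": ("inference.net-sch8b", 0.04, 0.10),
}


@dataclass
class Task:
    # Hypothetical flags; in practice these come from your own evals or heuristics
    needs_frontier_quality: bool = False
    standard_models_fail_often: bool = False


def route(task: Task) -> str:
    """Pick a tier using the three questions above, hardest case first."""
    if task.standard_models_fail_often:
        return "reasoning"
    if task.needs_frontier_quality:
        return "frontier"
    return "budget"  # high-volume, routine work


def blended_monthly_cost(calls: int, input_tokens: int, output_tokens: int,
                         mix: Dict[str, float]) -> float:
    """Monthly USD for a workload whose calls are split across tiers.

    mix maps tier name -> share of calls; shares must sum to 1.
    """
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("mix shares must sum to 1")
    total = 0.0
    for tier, share in mix.items():
        _, in_price, out_price = TIERS[tier]
        per_call = (input_tokens * in_price + output_tokens * out_price) / 1_000_000
        total += calls * share * per_call
    return total


if __name__ == "__main__":
    print(route(Task()), route(Task(needs_frontier_quality=True)))
    # RAG workload: 1M queries/month, 1,500 in / 300 out
    all_frontier = blended_monthly_cost(1_000_000, 1_500, 300, {"frontier": 1.0})
    routed = blended_monthly_cost(1_000_000, 1_500, 300, {"frontier": 0.1, "budget": 0.9})
    print(f"all GPT-5.2: ${all_frontier:,.2f}   routed 10/90: ${routed:,.2f}")
```

Under this hypothetical split, routing only the hardest 10% of queries to GPT-5.2 brings the RAG workload from $6,825 to $763.50 per month.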

---

How to Choose the Right LLM API for Your Budget

With 30+ models and eight providers, the right choice comes down to three budget tiers matched to workload requirements.

Tier 1 — Enterprise / Quality-First

Target pricing: roughly $12 or more per 1M output tokens

Use this tier when frontier quality is non-negotiable, the application is customer-facing with brand risk, or the task involves complex multi-step reasoning where model quality directly determines outcomes.

Recommended models:

- Claude Opus 4.6 — highest benchmark quality; best for nuanced reasoning, complex instructions, and long-context tasks

- GPT-5.2 — strong across all domains, best ecosystem support, 65% cheaper on input than Opus 4.6 with comparable performance

- Gemini 3.1 Pro — lowest output pricing in the flagship tier at $2.00/$12.00; strong multimodal support

Cost optimization at this tier: Enable prompt caching to cut repeated context costs by 80–90%. Use batch mode for non-real-time requests to capture the 50% discount. Evaluate whether Claude Sonnet 4.x or GPT-4.1 handles 80% of your requests before defaulting to flagship models for everything.

Tier 2 — Growth / Balanced

Target pricing: roughly $0.25–$15 per 1M output tokens

Use this tier when quality matters but volume is scaling, the use case is internal tooling or developer-facing, or moderate reasoning capability is needed without hard frontier requirements.

Recommended models:

- DeepSeek V3.2 ($0.14/$0.28) — the standout choice at this tier; near-frontier quality at budget pricing

- GPT-4.1 — solid general capability and strong OpenAI ecosystem integration

- Claude Sonnet 4.x — excellent for code and long-form writing; fine-tuning available

- Gemini Flash 3.1 — fast inference, competitive pricing, Google ecosystem advantages

DeepSeek V3.2 deserves emphasis: for teams currently paying frontier prices for workloads that don't require frontier quality, switching to V3.2 is typically the highest-impact, lowest-effort cost reduction available.

Tier 3 — High-Volume / Budget

Target pricing: roughly $0.10–$0.35 per 1M output tokens

Use this tier when token cost is the primary constraint, tasks are routine (classification, extraction, RAG retrieval, semantic similarity), or volume is high enough that API cost dominates all other engineering expenses.

Recommended models:

- inference.net Schematron-8B ($0.04/$0.10) and Llama 4 Scout — lowest prices in this comparison; purpose-built for cost-first, high-volume workloads

- Groq Llama 4 / Mistral — best inference latency in this tier; choose when response speed matters alongside cost

- Together AI — widest model selection for experimenting with open models at competitive prices

Both inference.net and Groq offer free tiers suitable for initial evaluation and development. Use the free tier to validate model quality on your task before committing to paid usage.

One universal rule across all tiers: implement prompt caching before switching models. On most workflows it cuts total spend by 20–40% with minimal engineering effort; the 80–90% discount applies only to the cached portion of each prompt. Do this first, then evaluate model tier changes if further savings are needed.

---

Frequently Asked Questions

What is the cheapest LLM API available?

inference.net's Schematron-8B at $0.04/$0.10 per million input/output tokens is the most affordable option in this comparison. For tasks requiring more capable models, DeepSeek V3.2 at $0.14/$0.28 delivers near-frontier reasoning at commodity pricing — making it the best value-per-capability in the budget tier.

How much does the OpenAI API cost in 2026?

OpenAI's flagship GPT-5.2 costs $1.75 per million input tokens and $14.00 per million output tokens. More affordable options include GPT-4.1 mini at $0.40/$1.60, which is appropriate for many routine production tasks. OpenAI's batch mode offers up to 50% off standard pricing for non-latency-sensitive workloads.

What is the Claude API price?

Claude Opus 4.6, released February 5, 2026, costs $5.00 per million input tokens and $25.00 per million output tokens — the most expensive frontier model in this comparison. Claude Sonnet 4.x and Haiku 4.x offer substantially lower-cost tiers that cover the majority of production workloads at a fraction of Opus pricing.

Is there a free LLM API?

Groq offers a free tier with rate limits well-suited for prototyping and development. Google's Gemini API includes free-tier access with usage quotas. For production workloads, all major providers charge per token — there are no production-grade free APIs without significant rate limits.

How do I calculate LLM API costs?

Multiply your expected input token count by the input rate, and your output token count by the output rate, then sum them. Use OpenAI's tiktoken library or equivalent tokenizers to estimate token counts from your actual text samples. The Python cost estimator in the Real-World Cost Examples section automates this across multiple models simultaneously.

What are thinking tokens and why are they expensive?

Thinking tokens are the internal chain-of-thought reasoning steps generated by models like o3 and Claude with extended thinking enabled. They're billed at the standard output token rate but don't appear in the final response, making them an invisible cost multiplier. A single complex request can generate tens of thousands of thinking tokens you never see but always pay for.

Which LLM API is best for coding?

For high-stakes code generation and complex debugging, Claude Opus 4.6 and GPT-5.2 lead coding benchmarks. For budget-conscious coding tasks, DeepSeek V3.2 and Mistral's Codestral perform exceptionally well at a fraction of the price. Codestral is specifically optimized for code completion and in-editor coding assistance.

What is the difference between input and output token pricing?

Output tokens require significantly more GPU compute to generate than input tokens require to process — hence asymmetric pricing. Output tokens typically cost 4–10× more than input tokens. This makes generative tasks with long responses considerably more expensive than extraction, classification, or retrieval tasks that produce short outputs.

Can I use Llama 4 via API without self-hosting?

Yes. Groq, Together AI, Fireworks AI, and inference.net all offer Llama 4 Scout and Llama 4 Maverick via API. Prices across these providers are far below frontier model rates, typically $0.08–$0.90 per million input tokens depending on model size and provider, with no infrastructure management required.

How does batch processing reduce LLM API costs?

Batch processing queues requests for deferred processing, allowing providers to optimize GPU utilization and pass savings to users. OpenAI and Anthropic both support batch inference at 50% off standard pricing. The trade-off is latency: batch requests complete within a 24-hour window rather than in seconds. Ideal for scheduled analysis, data enrichment pipelines, and any non-real-time workload.

---

Stop Overpaying for LLM API Access

Looking across 30+ models and eight providers, a few things stand out: the price range is enormous, spanning more than two orders of magnitude from cheapest to most expensive; open-source inference providers represent a real savings opportunity that most teams haven't acted on yet; and model selection is the single largest cost lever, ahead of any engineering optimization.

If you're currently using frontier APIs for every workload, the best thing you can do is audit which tasks genuinely require frontier quality — and route everything else to DeepSeek V3.2 or an inference.net model.

inference.net offers the lowest per-token input pricing in this comparison across Schematron-8B, Llama 4 Scout, Llama 4 Maverick, and DeepSeek V3.2. Free-tier access is available to get started without commitment. See inference.net/pricing for current rates.

Pricing in this market shifts fast — new models drop, providers reprice, and the value rankings change with each release. Bookmark this guide; it's updated whenever major providers launch new models or adjust their rates.

---

References

- OpenAI API pricing — platform.openai.com/docs/pricing

- Anthropic Claude pricing — anthropic.com/api/pricing

- Google Gemini API pricing — ai.google.dev/pricing

- Mistral AI pricing — mistral.ai/technology/pricing

- Groq API pricing — console.groq.com/docs/pricing

- Together AI pricing — together.ai/pricing

- Fireworks AI pricing — fireworks.ai/pricing

- inference.net pricing — inference.net/pricing

- DeepSeek API pricing — platform.deepseek.com/api-docs/pricing

- OpenAI tiktoken library — github.com/openai/tiktoken

- Anthropic prompt caching documentation — docs.anthropic.com/en/docs/build-with-claude/prompt-caching

- OpenAI batch API documentation — platform.openai.com/docs/guides/batch
