Content

Explore our latest articles, guides, and insights on AI and machine learning.

Jan 31, 2026

vLLM Docker Deployment: Production-Ready Setup Guide (2026)

Complete guide to deploying vLLM in Docker containers. Covers multi-GPU setup, Docker Compose, Kubernetes, monitoring with Prometheus, and production tuning.

Jan 26, 2026

SGLang: The Complete Guide to High-Performance LLM Inference

Learn SGLang from installation to production. Covers RadixAttention architecture, vLLM benchmarks, Docker/Kubernetes deployment, and troubleshooting.

Jan 24, 2026

LLM Cost Optimization: How to Reduce Your AI Spend by 80%

Learn practical strategies to reduce LLM costs by 80%. Compare OpenAI, Claude, and open-source pricing. Includes calculator, prompt caching tips, and model selection guide.

Jan 21, 2026

Azure OpenAI Pricing Explained (2026) | Hidden Costs + Alternatives

Complete breakdown of Azure OpenAI pricing by model, PTU vs pay-as-you-go comparison, hidden costs that add 15-40%, and cheaper alternatives. Updated for 2026.

Sep 1, 2025

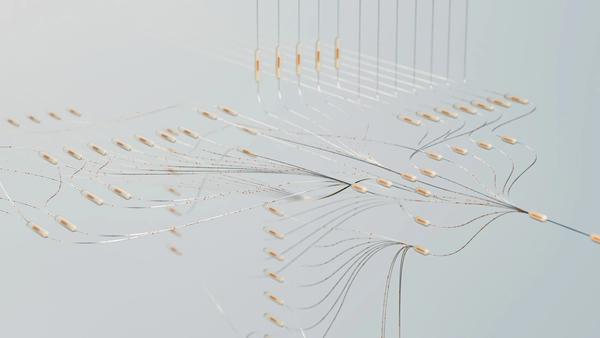

What is Distributed Inference & How to Add It to Your Tech Stack

Learn about distributed inference and how it can scale your AI models. Reduce latency & boost efficiency in your tech stack.

Aug 31, 2025

The Definitive Guide to Continuous Batching LLM for AI Inference

Learn how continuous batching for LLMs improves inference speed, memory use, and flexibility compared with static batching, helping scale AI applications efficiently.

Aug 30, 2025

Step-By-Step PyTorch Inference Tutorial for Beginners

Learn the fundamentals of PyTorch inference with our easy-to-follow guide. Get your model ready for real-world predictions.

Aug 29, 2025

Top 14 Inference Optimization Techniques to Reduce Latency and Costs

Speed up your AI. Explore 14 powerful inference optimization techniques to accelerate model inference without sacrificing accuracy.

Aug 28, 2025

The Ultimate LLM Benchmark Comparison Guide (2025 Edition)

Navigate the LLM landscape with our ultimate guide. Get a comprehensive LLM benchmark comparison for all top models in 2025.

Aug 27, 2025

What Is Inference Latency & How Can You Optimize It?

Reduce AI response time. Learn what inference latency is and discover powerful optimization techniques to boost your model's speed.