Intro

Today we're introducing Schematron, a family of specialized models that transform messy HTML into clean, structured JSON.

Schematron-8B and Schematron-3B deliver frontier-level extraction quality at 1-2% of the cost of large, general-purpose LLMs, with 10x+ faster inference. Because they specialize in HTML-to-JSON, they handle malformed HTML and complex JSON schemas, and perform well across a wide range of context lengths.

These models unlock workloads that would previously have been economically infeasible, and make web agents faster and more accurate.

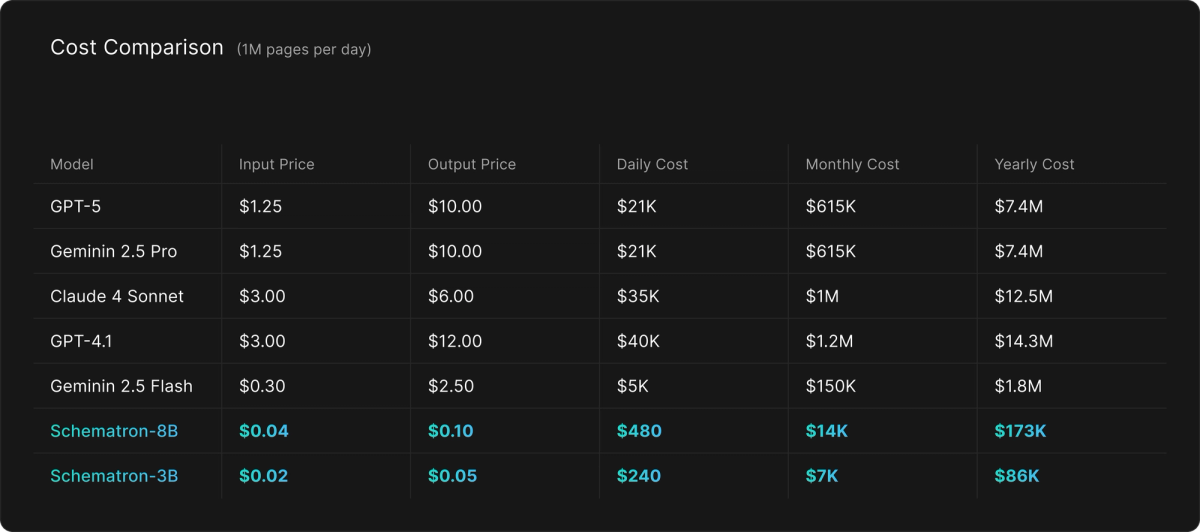

Here's what processing 1 million pages daily looks like (assuming 10,000 input and 8,000 output tokens per page):
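The daily figures follow from simple arithmetic. The sketch below computes the token volume for this workload and turns it into a daily bill. The per-million-token prices are placeholder arguments you supply. They are not published rates for any model.

```python
# Workload assumed above: 1M pages/day, ~10,000 input + ~8,000 output tokens per page.
PAGES_PER_DAY = 1_000_000
INPUT_TOKENS_PER_PAGE = 10_000
OUTPUT_TOKENS_PER_PAGE = 8_000


def daily_tokens() -> tuple[int, int]:
    """(input, output) tokens processed per day for this workload."""
    return (PAGES_PER_DAY * INPUT_TOKENS_PER_PAGE,
            PAGES_PER_DAY * OUTPUT_TOKENS_PER_PAGE)


def daily_cost(input_usd_per_m: float, output_usd_per_m: float) -> float:
    """Daily spend in USD, given prices per 1M input and per 1M output tokens.

    The prices are caller-supplied placeholders, not any provider's actual rates.
    """
    input_tokens, output_tokens = daily_tokens()
    return (input_tokens / 1_000_000 * input_usd_per_m
            + output_tokens / 1_000_000 * output_usd_per_m)
```

Take a hypothetical price of $1 per million tokens for both input and output. At that price, `daily_cost(1.0, 1.0)` comes to $18,000 a day, because the workload is 10 billion input tokens plus 8 billion output tokens.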

Both Schematron-3B and Schematron-8B are open source and available today on Hugging Face and through our serverless API.

Code examples are available in our docs and samples repo.

The Challenge of Structured Web Data

While today's strongest LLMs are extremely effective at extracting data from messy HTML, they're often impractical for real-world scraping workflows, as processing millions of pages with acceptable accuracy costs tens of thousands of dollars.

Our team experienced this firsthand while building dozens of scrapers and crawlers, and felt acutely the need for a more affordable HTML parsing model that doesn’t sacrifice extraction quality.

We built Schematron to deliver frontier-model accuracy at prices that make sense for production workloads. Monitoring competitor prices, aggregating product catalogs, and building research agents all require extracting clean, typed data from messy HTML. Schematron handles that task at a price point low enough to unlock new use cases.

Meet the Schematron Family

We trained two versions of Schematron: a highly accurate Schematron-8B and a marginally less accurate but much faster Schematron-3B.

Schematron-8B excels at complex extraction tasks, handling intricate nested schemas and very long documents with remarkable accuracy. It's your go-to choice when accuracy matters most.

Schematron-3B offers exceptional value for simpler extraction tasks, processing standard web pages in hundreds of milliseconds while maintaining impressive accuracy for common use cases.

Both models share core capabilities:

- 128K token context window: for processing long pages

- Strict JSON mode: guaranteeing parseable, schema-compliant output with 100% adherence

These models match frontier models in extraction quality while being substantially smaller and faster. We used Gemini 2.5 Pro to grade each extraction on a 1-5 scale, where 5 is a perfect extraction. We chose Gemini 2.5 Pro as the grader for its strong long-context capabilities.
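The sketch below shows one way to summarize a judge model's 1-5 grades per model. It reports two numbers for each model: the mean grade and the share of perfect scores. Those two statistics are an illustrative choice. The grades in the usage line are toy values, not benchmark results.

```python
from statistics import mean


def summarize_grades(grades: dict[str, list[int]]) -> dict[str, tuple[float, float]]:
    """Map each model name to (mean grade, fraction of perfect 5/5 grades)."""
    summary = {}
    for model, scores in grades.items():
        # Reject anything outside the judge's 1-5 scale before aggregating.
        if not scores or not all(1 <= s <= 5 for s in scores):
            raise ValueError(f"grades for {model} must be non-empty and on the 1-5 scale")
        perfect_share = sum(s == 5 for s in scores) / len(scores)
        summary[model] = (mean(scores), perfect_share)
    return summary
```

For example, `summarize_grades({"model-a": [5, 4, 5, 3]})` returns `{"model-a": (4.25, 0.5)}`.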

Best-in-class Speed and Cost

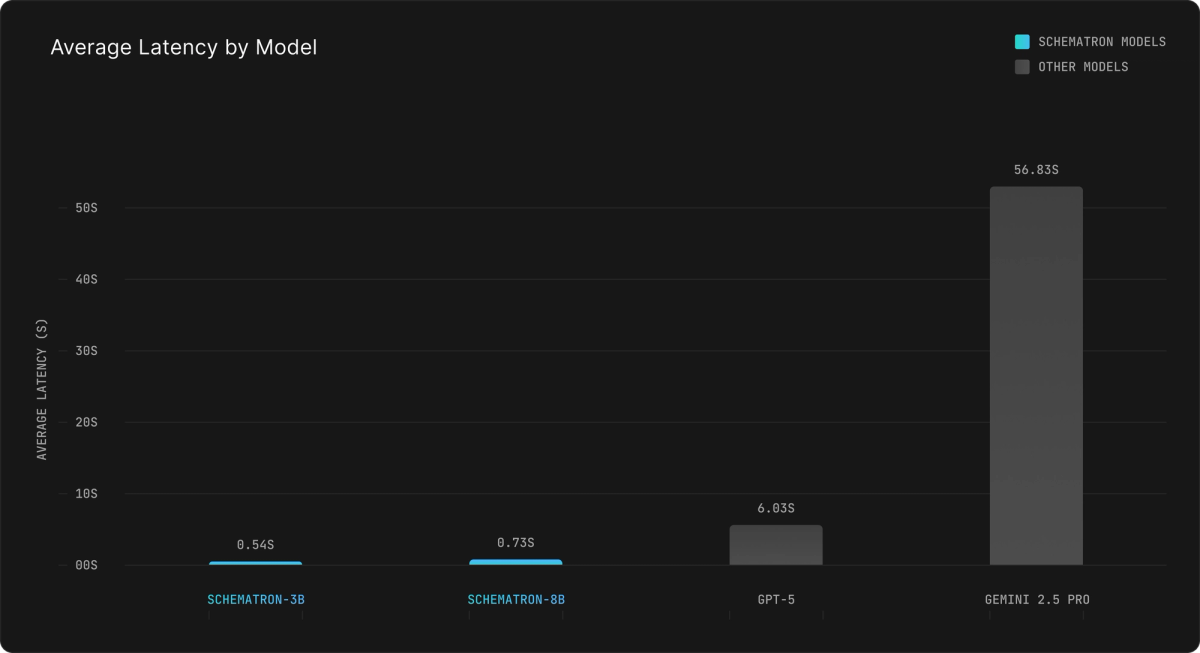

Schematron's speed unlocks new agentic use cases, letting LLMs navigate and parse the web an order of magnitude faster. Its 10x lower latency compared to frontier models also saves developer time and server resources, which is essential for large crawling and scraping jobs.

Some scraping and parsing tasks are extremely time-sensitive, such as parsing financial documents for live trading. For these use cases, a fast model like Schematron can give you a significant advantage.

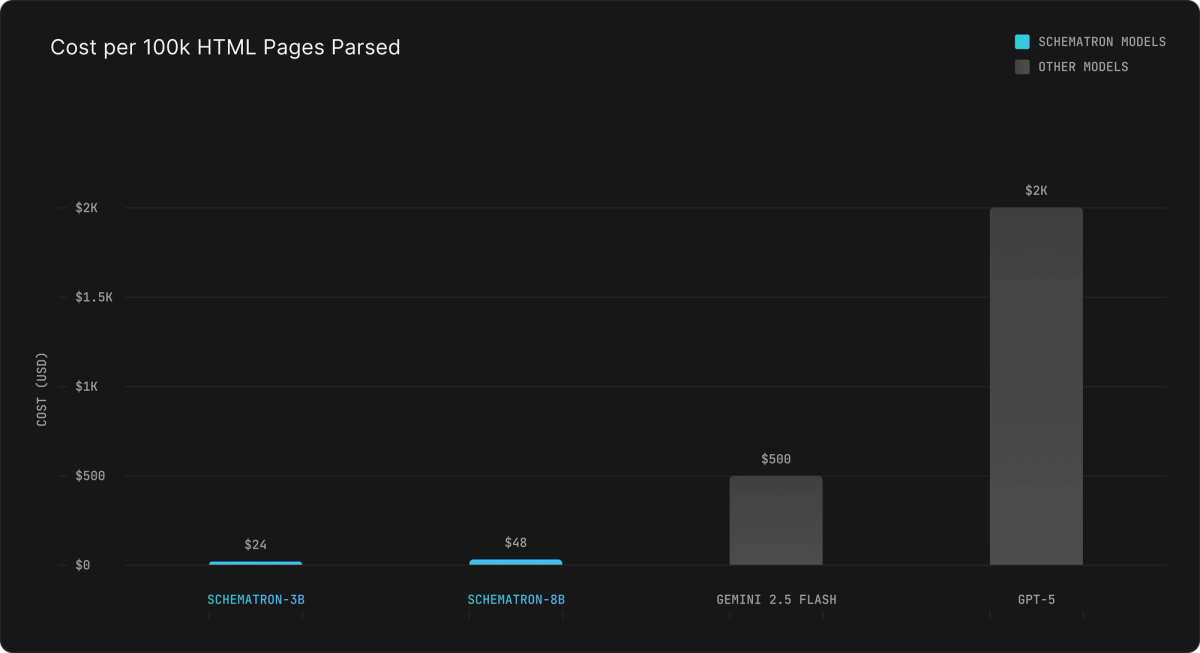

Perhaps more importantly, Schematron is significantly cheaper than a frontier model: Schematron-8B and Schematron-3B are 40x and 80x cheaper than GPT-5, respectively. This unlocks internet-scale scraping jobs that previously would have been out of reach for all but the best-funded teams. It also makes it cost-effective to monitor large sets of pages for real-time data drawn from external sites with diverse page structures.

Why We Built Schematron

Crawling/Scraping the Web Is a Token-Heavy Activity

We built Schematron because turning an HTML page into JSON with an LLM, a task executed billions of times a day, is far too expensive. Raw HTML is massive: even after cleaning, a page averages about 10k tokens, and many pages run far longer.
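Raw pages often carry scripts, styles, and comments that add tokens without adding extractable content. The sketch below is a pre-cleaning pass built only on Python's standard library `html.parser`:

- It drops comments and `script`/`style`-type subtrees.
- It keeps the remaining markup, entities included.
- It estimates token counts with a rough four-characters-per-token heuristic.

The tag list and the heuristic are assumptions. This is not Schematron's own preprocessing.

```python
import re
from html.parser import HTMLParser

# Tags whose contents rarely matter for extraction (an assumption; tune per site).
DROP_TAGS = {"script", "style", "noscript", "svg"}


class _Cleaner(HTMLParser):
    """Re-emits markup while dropping comments and DROP_TAGS subtrees."""

    def __init__(self) -> None:
        super().__init__(convert_charrefs=False)  # keep entities like &amp; as-is
        self.parts: list[str] = []
        self.skip_depth = 0  # > 0 while inside a dropped subtree

    def handle_starttag(self, tag, attrs):
        if tag in DROP_TAGS:
            self.skip_depth += 1
        elif not self.skip_depth:
            self.parts.append(self.get_starttag_text())

    def handle_startendtag(self, tag, attrs):  # self-closing tags such as <br/>
        if not self.skip_depth and tag not in DROP_TAGS:
            self.parts.append(self.get_starttag_text())

    def handle_endtag(self, tag):
        if tag in DROP_TAGS:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif not self.skip_depth:
            self.parts.append(f"</{tag}>")

    def handle_data(self, data):
        if not self.skip_depth:
            self.parts.append(data)

    def handle_entityref(self, name):
        if not self.skip_depth:
            self.parts.append(f"&{name};")

    def handle_charref(self, name):
        if not self.skip_depth:
            self.parts.append(f"&#{name};")

    # Comments and doctypes fall through to HTMLParser's no-op default handlers.


def clean_html(html: str) -> str:
    parser = _Cleaner()
    parser.feed(html)
    parser.close()
    # Collapse whitespace runs (note: this also flattens <pre> blocks).
    return re.sub(r"\s+", " ", "".join(parser.parts)).strip()


def rough_token_count(text: str) -> int:
    # ~4 characters per token is a common rule of thumb for English text with
    # BPE tokenizers; it is not Schematron's actual tokenizer.
    return (len(text) + 3) // 4
```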

Before Schematron, teams either drained their budgets quickly on large models or accepted poor parsing accuracy. We hope Schematron enables more ambitious web crawling and parsing projects, since a model this cheap warrants a fresh look at which of them are viable.

Web Search Is Particularly Inefficient

Grounding an LLM with web search can retrieve anywhere from 5 to 100 relevant pages. Passing all of these pages into context would be prohibitively expensive. Yet grounding is necessary: adding web context makes LLMs significantly more factual than relying on their base knowledge alone.
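The scale of the problem is easy to estimate from the ~10k-token average page cited above. The sketch compares the tokens a primary LLM would read per query from raw pages against compact JSON extracts. The 300-token extract size is a hypothetical figure chosen for illustration.

```python
AVG_PAGE_TOKENS = 10_000  # average cleaned page size cited above


def grounding_tokens(num_pages: int, extract_tokens: int) -> tuple[int, int]:
    """(raw-page tokens, extract tokens) the primary LLM would read per query.

    extract_tokens is a hypothetical size for one page's JSON extract.
    """
    return num_pages * AVG_PAGE_TOKENS, num_pages * extract_tokens


for pages in (5, 100):
    raw, extracted = grounding_tokens(pages, extract_tokens=300)
    print(f"{pages:>3} pages: {raw:,} raw-page tokens vs {extracted:,} extract tokens")
```

At 5 to 100 pages, raw pages alone cost 50,000 to 1,000,000 tokens per query. The extraction step still reads the full pages, but it does so with a small, cheap model rather than the primary LLM.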

Small Models Excel at Specialized Tasks

For JSON schema extraction, a smaller model fine-tuned specifically for the task works far better than a large general-purpose one. We no longer pay for intelligence we don’t need. Instead, we focus all of a small model's parameters on this one task. As a result, Schematron-8B is as accurate as Gemini 2.5 Flash while being faster and 5x cheaper.

Enhancing LLM Factuality With Schematron and Web Search

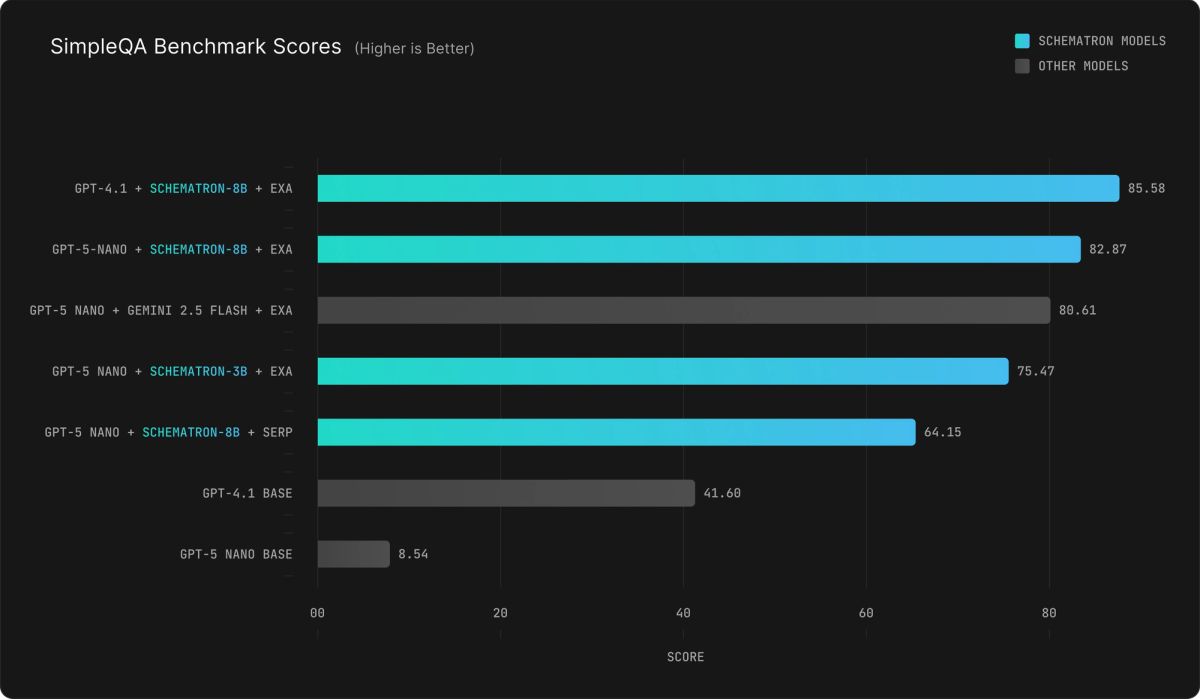

We evaluated Schematron's ability to improve web search accuracy using SimpleQA, a benchmark that tests factual question-answering capabilities.

Methodology

Our testing pipeline consisted of four stages:

- Query Generation: A primary LLM (GPT-5 Nano or GPT-4.1) generates search queries and defines a data extraction schema

- Web Search: A search provider (SERP or Exa) executes the queries and retrieves relevant pages

- Structured Extraction: Schematron-8B extracts structured JSON data from the retrieved pages using the schema

- Answer Synthesis: The primary LLM produces the final answer using the extracted structured data
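The four stages compose naturally as plain function calls. The sketch below wires them together, passing each stage in as a callable. The names `Plan` and `answer_with_search` and the stage signatures are hypothetical. A real pipeline would call the primary LLM, the search provider, and Schematron inside those callables.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Plan:
    queries: list[str]  # search queries proposed by the primary LLM
    schema: dict        # JSON Schema describing what to extract


def answer_with_search(
    question: str,
    plan_fn: Callable[[str], Plan],               # 1) query generation (primary LLM)
    search_fn: Callable[[str], list[str]],        # 2) web search, returns page HTML
    extract_fn: Callable[[str, dict], dict],      # 3) structured extraction (Schematron)
    answer_fn: Callable[[str, list[dict]], str],  # 4) answer synthesis (primary LLM)
) -> str:
    plan = plan_fn(question)
    pages = [html for query in plan.queries for html in search_fn(query)]
    # Only these compact extracts, not the raw pages, reach the primary LLM.
    extracts = [extract_fn(html, plan.schema) for html in pages]
    return answer_fn(question, extracts)
```

Passing the stages as callables keeps the orchestration testable with fakes. It also makes swapping components a one-argument change, for example SERP for Exa or Schematron-8B for Schematron-3B.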

Results

Without any search augmentation, both GPT-5 Nano and GPT-4.1 struggled to answer most factual questions correctly.

Incorporating web search improved the results dramatically. When we added SERP with Schematron-8B extraction, GPT-5 Nano could answer the majority of questions that had previously stumped it. The combination of Exa and Schematron-8B pushed performance even further, enabling GPT-5 Nano to correctly answer more than four out of five questions.

With GPT-4.1 as the base model, the same Exa-Schematron pipeline achieved near-expert performance, showing that these gains scale with base model quality.

Key Findings

Used as the extraction model in direct comparison, Schematron-8B consistently outperformed Gemini 2.5 Flash. Even the smaller Schematron-3B paired with Exa proved highly effective, demonstrating that structured extraction delivers strong factuality improvements even with a small extraction model.

These findings show how structured extraction changes the economics of web search for LLMs. The primary LLM reads compact structured extracts instead of full web pages, so each query avoids tens of thousands of tokens. Schematron keeps that extraction step cheap enough for web-augmented factuality to be economically viable.

How We Built Schematron

To create models that truly understand both HTML structure and JSON schemas, we started by assembling a massive corpus of 1M real-world web pages from Common Crawl. We then built a synthetic dataset of schemas by clustering these pages and using groups of page summaries to generate schemas that could plausibly apply to them, essentially simulating search in reverse.

This approach mirrors real-world usage, where you define a schema once and use it across multiple similar pages. Using frontier models as teachers, we created hundreds of thousands of extraction examples across diverse domains.
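The clustering step can be made concrete with a deliberately simple stand-in: greedy grouping of page summaries by word overlap (Jaccard similarity). This is not the method used to build Schematron's dataset, and the threshold and helper names are illustrative assumptions. Each resulting group of summaries could then be handed to a teacher model to propose a shared schema.

```python
import re


def words(text: str) -> set[str]:
    """Lowercased alphanumeric word set for a summary."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def greedy_cluster(summaries: list[str], threshold: float = 0.3) -> list[list[int]]:
    """Put each summary in the first cluster whose seed is similar enough,
    otherwise start a new cluster seeded by that summary."""
    clusters: list[list[int]] = []
    seeds: list[set[str]] = []
    for i, summary in enumerate(summaries):
        ws = words(summary)
        for members, seed in zip(clusters, seeds):
            if jaccard(ws, seed) >= threshold:
                members.append(i)
                break
        else:
            clusters.append([i])
            seeds.append(ws)
    return clusters
```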

We used curriculum learning, training the model on progressively longer contexts and exposing it to increasingly complex documents as training went on. This kept performance stable even at the 128K-token limit. The result is a pair of models that truly understand the relationship between schemas and HTML structure, even for long documents.
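One common way to implement such a curriculum is a staged length cap. The sketch below assumes a doubling schedule from 8K to 128K tokens over evenly spaced stages. Each batch is sampled only from examples that fit under the current cap. The stage lengths and helper names are hypothetical, not Schematron's actual training recipe.

```python
import math
import random


def context_cap(step: int, total_steps: int,
                start: int = 8_192, end: int = 131_072) -> int:
    """Max example length allowed at `step`: 8K, 16K, 32K, 64K, then 128K."""
    num_stages = int(math.log2(end // start)) + 1  # 5 stages for 8K..128K
    stage = min(step * num_stages // total_steps, num_stages - 1)
    return start * 2 ** stage


def sample_batch(examples: list[dict], step: int, total_steps: int,
                 batch_size: int, rng: random.Random) -> list[dict]:
    """Draw a batch only from examples whose length fits under the current cap."""
    cap = context_cap(step, total_steps)
    eligible = [ex for ex in examples if ex["num_tokens"] <= cap]
    return rng.sample(eligible, min(batch_size, len(eligible)))
```

Shorter examples stay eligible at every stage. As a result, the model keeps seeing short pages while longer ones are phased in.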

Built for Developers

To use Schematron, define your schema with Pydantic, Zod, or JSON Schema. Then pass in raw HTML and get back validated, type-safe data that's ready to use in your application.

Here's how simple it is to extract product information from any e-commerce page:

```python
import os

from openai import OpenAI
from pydantic import BaseModel, Field


# 1) Define your schema (nested data and lists are supported)
class Product(BaseModel):
    name: str
    price: float = Field(..., description="Primary price of the product.")
    specs: dict = Field(
        default_factory=dict,
        description="Specs of the product.",
    )
    tags: list[str] = Field(
        default_factory=list,
        description="Tags assigned to the product.",
    )


# 2) Messy HTML (could be the full page; trim to the relevant region when possible)
html = """
<div id="item">
  <h2 class="title">MacBook Pro M3</h2>
  <p>Price: <b>$2,499.99</b> USD</p>
  <ul info>
    <li>RAM: 16GB</li>
    <li>Storage: 512GB SSD</li>
  </ul>
  <span class="tag">laptop</span>
  <span class="tag">professional</span>
  <span class="tag">macbook</span>
  <span class="tag">apple</span>
</div>
"""

# 3) Client setup (the OpenAI SDK pointed at the Inference.net endpoint)
client = OpenAI(
    base_url="https://api.inference.net/v1",
    api_key=os.environ.get("INFERENCE_API_KEY"),
)

# 4) Chat Completions extraction with typed parsing into the Product model
response = client.chat.completions.parse(
    model="inference-net/schematron-8b",
    messages=[
        {"role": "user", "content": html},
    ],
    response_format=Product,
)

# .parsed holds a validated Product instance (.content holds the raw JSON string)
product = response.choices[0].message.parsed
print(product)
```
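If you're not using Pydantic, the same schema can be written directly as JSON Schema. This is a minimal sketch, assuming the endpoint accepts the OpenAI-style `json_schema` response format on `client.chat.completions.create`. Check the docs for the exact shape it expects. `PRODUCT_SCHEMA`, `build_request`, and `parse_product` are hypothetical helpers.

```python
import json

# Hand-written JSON Schema mirroring the Product model (illustrative).
PRODUCT_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "price": {"type": "number", "description": "Primary price of the product."},
        "specs": {"type": "object", "description": "Specs of the product."},
        "tags": {"type": "array", "items": {"type": "string"},
                 "description": "Tags assigned to the product."},
    },
    "required": ["name", "price"],
}


def build_request(html: str) -> dict:
    """Keyword arguments for client.chat.completions.create(...).

    Assumes the endpoint accepts the OpenAI-style json_schema response_format.
    """
    return {
        "model": "inference-net/schematron-8b",
        "messages": [{"role": "user", "content": html}],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "product", "schema": PRODUCT_SCHEMA},
        },
    }


def parse_product(content: str) -> dict:
    """Decode the model's JSON reply and check that required keys are present."""
    data = json.loads(content)
    missing = [key for key in PRODUCT_SCHEMA["required"] if key not in data]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    return data
```

To use it, `client.chat.completions.create(**build_request(html))` sends the request. Then `parse_product(response.choices[0].message.content)` decodes the reply into a plain dict.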

Open Source and API Access

We believe specialized models like Schematron represent the future: AI applications built on carefully trained small models that excel at specific tasks, rather than on expensive generalists trying to do everything.

That's why we're making Schematron available through multiple channels:

- Inference.net API: Start extracting immediately with our hosted API. Pay only for what you use with no upfront costs.

- Open Source: Both Schematron-3B and Schematron-8B are available on Hugging Face under permissive licenses. Deploy them on your own infrastructure, fine-tune for your specific domain, or integrate directly into your applications.

- Batch Processing: Our Batch and Async APIs deliver significant savings on large-scale extraction.

For crawling, we recommend a webhook workflow. Submit an async request, and the result is posted to your webhook (typically within seconds), where you can upsert it into your database. This keeps the same batch-level savings.
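This is a minimal sketch of the receiving end, using only Python's standard library. The payload field names (`request_id`, `output`), the table layout, and the port are hypothetical. Consult the Async API docs for the actual webhook payload.

```python
import json
import sqlite3
from http.server import BaseHTTPRequestHandler, HTTPServer


def init_db(conn: sqlite3.Connection) -> None:
    with conn:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS extractions ("
            "request_id TEXT PRIMARY KEY, data TEXT NOT NULL)"
        )


def upsert_result(conn: sqlite3.Connection, payload: dict) -> None:
    # Hypothetical payload shape: {"request_id": "...", "output": {...}}
    with conn:  # commit on success, roll back on error
        conn.execute(
            "INSERT INTO extractions (request_id, data) VALUES (?, ?) "
            "ON CONFLICT(request_id) DO UPDATE SET data = excluded.data",
            (payload["request_id"], json.dumps(payload["output"])),
        )


class WebhookHandler(BaseHTTPRequestHandler):
    conn: sqlite3.Connection  # assigned in serve()

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            upsert_result(self.conn, json.loads(self.rfile.read(length)))
        except (ValueError, KeyError, TypeError):
            self.send_response(400)  # malformed or unexpected payload
        else:
            self.send_response(200)
        self.end_headers()


def serve(db_path: str = "extractions.db", port: int = 8000) -> None:
    WebhookHandler.conn = sqlite3.connect(db_path)
    init_db(WebhookHandler.conn)
    # HTTPServer handles one request at a time, so a single connection is safe.
    HTTPServer(("", port), WebhookHandler).serve_forever()
```

Keying the upsert on the request id makes the handler idempotent. A redelivered or retried webhook overwrites the same row instead of creating a duplicate.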

Start Extracting

We'd love to hear what you'd like to build with this model. Schedule a meeting with us or get started with these resources:

- Try the Inference.net API with $10 in free credits

- Download models from Hugging Face

- Read the documentation

We can't wait to see what you'll build with truly affordable, high-quality extraction.
