Feb 8, 2026

AI Readiness Assessment: 6-Dimension Framework & Scoring

Inference Research

Why most organizations overestimate their AI readiness

There's a stubborn gap between how ready companies think they are for AI and how ready they actually are. Deloitte's 2026 State of AI survey found that 42% of organizations rate their AI strategy as "highly prepared," yet those same companies report much lower confidence in their infrastructure, data, risk management, and talent. Accenture puts an even finer point on it: while 97% of executives say AI will transform their industry, only 9% have fully deployed a single AI use case.

That's the preparedness paradox. Strategy decks say "AI-first." Execution says otherwise.

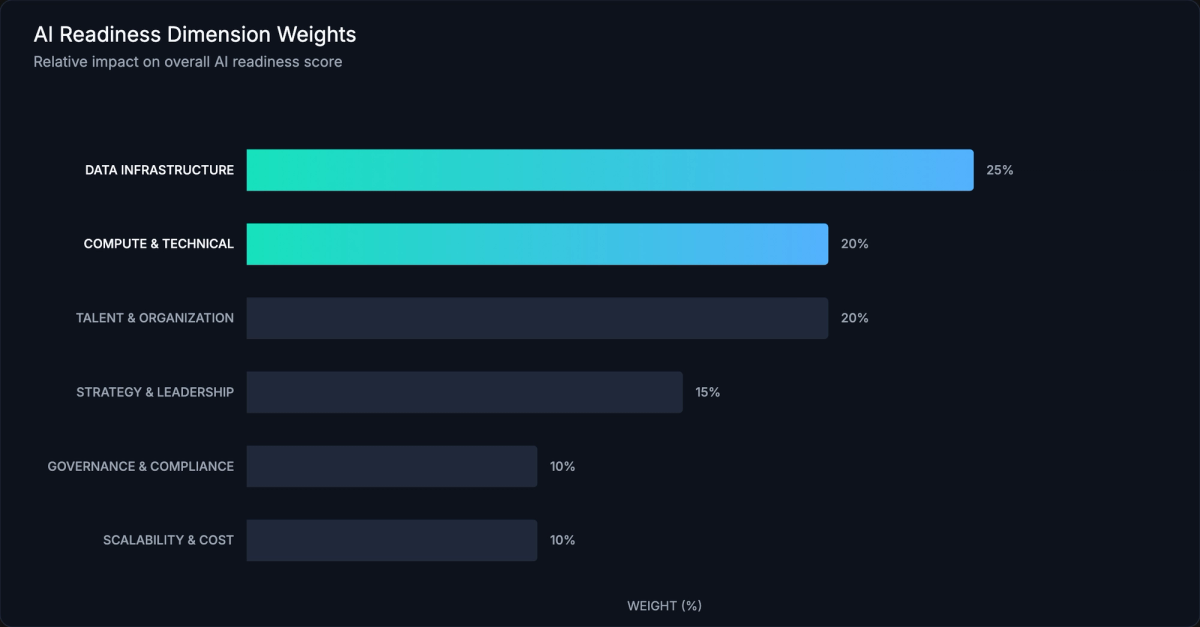

An AI readiness assessment is a structured evaluation of how prepared your organization is to adopt and scale AI. It measures capabilities across six dimensions, from data infrastructure and compute capacity to talent, strategy, governance, and scalability, producing a readiness score with specific recommendations for closing gaps.

This guide gives you a concrete AI readiness framework you can apply today. You'll get a six-dimension model grounded in research from Gartner, McKinsey, Deloitte, and others. You'll get a transparent scoring methodology with weighted dimensions. And you'll walk away with a self-assessment you can run against your own organization in under twenty minutes.

Read time: 12 minutes

The 6 dimensions of AI readiness

Every credible AI readiness assessment breaks organizational preparedness into distinct capability areas and evaluates each one separately. Our AI readiness framework pulls together the most widely cited models from Gartner, McKinsey, Deloitte, and PwC into six dimensions:

- Data Infrastructure — the quality, accessibility, and pipeline maturity of your data

- Compute & Technical Infrastructure — GPU capacity, MLOps tooling, and platform readiness

- Talent & Organization — AI skills density, operating model, and cross-functional collaboration

- Strategy & Leadership — executive sponsorship, AI-business alignment, and dedicated budget

- Governance & Compliance — risk management, responsible AI policies, and regulatory readiness

- Scalability & Cost Optimization — the ability to move from pilot to production without cost blowouts

These dimensions aren't independent. A strong strategy means nothing if your data is locked in silos. World-class talent can't deliver without GPU infrastructure to train on. The radar chart above shows a typical mid-maturity profile: strong on strategy and data, weak on compute and governance. That unevenness is normal. Identifying it is the whole point.

1. Data infrastructure

Data readiness is the foundation. Without accessible, high-quality data, every other AI investment stalls. This dimension evaluates data quality (completeness, accuracy, consistency), pipeline maturity (batch vs. real-time), governance practices, and whether you have centralized catalogs or feature stores. Accenture reports that 47% of CXOs cite data readiness as the top challenge in applying generative AI. That number has barely moved in two years, which tells me this is a structural problem, not a temporary one.
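Of the quality attributes above, completeness is the easiest to measure directly: the share of records that actually carry a value for each field. The sketch below assumes records arrive as Python dicts and treats `None` or an empty string as missing; the function name and the customer records are hypothetical. Accuracy and consistency usually need reference data or business rules, so they are not covered here.

```python
from typing import Any, Iterable, Mapping


def column_completeness(rows: Iterable[Mapping[str, Any]], columns: list[str]) -> dict[str, float]:
    """Share of rows holding a non-missing value per column (None or "" count as missing)."""
    rows = list(rows)  # materialize so we can iterate once per column
    if not rows:
        return {col: 0.0 for col in columns}
    completeness = {}
    for col in columns:
        present = sum(1 for row in rows if row.get(col) not in (None, ""))
        completeness[col] = present / len(rows)
    return completeness


# Hypothetical customer records; the last one lacks a "region" key entirely.
customers = [
    {"id": 1, "email": "a@example.com", "region": "EU"},
    {"id": 2, "email": None, "region": "US"},
    {"id": 3, "email": "c@example.com", "region": ""},
    {"id": 4, "email": "d@example.com"},
]
print(column_completeness(customers, ["id", "email", "region"]))
# {'id': 1.0, 'email': 0.75, 'region': 0.5}
```

Tracking a number like this per key dataset over time is one concrete way to move from "data quality unknown" toward "data quality monitored for key datasets" in the scoring criteria.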

2. Compute & technical infrastructure

This is the dimension most AI readiness frameworks skip entirely, and in my experience it's the one that separates organizations that ship models from those that stay in notebooks. Compute readiness covers GPU capacity (do you have access to H100 or equivalent hardware?), cloud infrastructure maturity, MLOps tooling, and model management.

Training a large language model can require 8 to 64 GPUs running for days. Inference at production scale demands dedicated GPU cloud instances with low-latency networking. If your team is queuing for shared VMs, you're not compute-ready.
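To make "8 to 64 GPUs running for days" concrete for capacity planning, it helps to convert it into GPU-hours and then ask how long the same job takes on whatever GPUs you can actually get. This is a minimal sketch: the 3-day run length and the 8-GPU shared pool are assumptions, and `days_on_pool` assumes the job scales perfectly with GPU count, which real training jobs only approximate.

```python
def gpu_hours(num_gpus: int, days: float) -> float:
    """GPU-hours consumed when num_gpus run around the clock for the given number of days."""
    return num_gpus * days * 24


def days_on_pool(total_gpu_hours: float, available_gpus: int) -> float:
    """Wall-clock days to finish a job using only available_gpus (assumes ideal linear scaling)."""
    return total_gpu_hours / (available_gpus * 24)


# Both ends of the 8-to-64 GPU range, over an assumed 3-day training run.
for gpus in (8, 64):
    print(f"{gpus} GPUs x 3 days = {gpu_hours(gpus, 3):,} GPU-hours")

# The 64-GPU job squeezed onto a hypothetical shared pool with only 8 free GPUs.
print(f"On 8 shared GPUs: {days_on_pool(gpu_hours(64, 3), 8):.0f} days")
```

Under these assumptions, a job sized for 64 GPUs over 3 days (4,608 GPU-hours) stretches to 24 days on an 8-GPU shared pool, before counting any time spent waiting in the queue.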

3. Talent & organization

Deloitte's 2026 survey ranks insufficient worker skills as the number-one barrier to AI integration. This dimension measures AI talent density (data scientists, ML engineers, MLOps engineers), upskilling programs, and the operating model connecting AI teams to the business. McKinsey's "Rewired" research identifies the hub-and-spoke model, a central AI center of excellence paired with distributed domain teams, as the structure most correlated with successful AI scaling.

4. Strategy & leadership

PwC's 2026 analysis found that technology delivers only about 20% of AI value. The remaining 80% comes from redesigning workflows and processes. This dimension evaluates executive sponsorship at the C-suite level, strategy alignment with measurable business objectives, dedicated AI budget, and a multi-year roadmap. Leaders who treat AI as a technology project rather than a business transformation consistently underinvest in the change management that drives actual returns.

5. Governance & compliance

Only 1 in 5 companies has a mature governance model for autonomous AI agents (Deloitte 2026). As the EU AI Act takes effect and NIST's AI Risk Management Framework gains adoption, governance moves from "nice to have" to deployment blocker. This dimension evaluates risk tiering, human-in-the-loop requirements, model validation protocols, and regulatory compliance readiness. PwC found that 60% of executives say responsible AI practices improve ROI. Well-designed governance actually speeds up deployment rather than slowing it down.

6. Scalability & cost optimization

The gap between a working pilot and a production system is where most AI initiatives die. This dimension evaluates your ability to scale workloads from proof-of-concept to enterprise deployment, manage compute costs across that transition, and operate across multiple cloud providers.

Organizations that rely solely on on-demand GPU pricing will find costs unsustainable at scale. Reserved GPU instances can cut compute costs by 30-60%, but only if capacity planning is part of your AI readiness posture from the start.
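The capacity-planning caveat comes down to simple arithmetic. A reserved commitment is typically billed for every committed hour whether or not you use it, while on-demand capacity is billed only for hours used, so a discount of d beats on-demand only when utilization exceeds 1 - d. The sketch below assumes that billing model and a hypothetical $2.50/GPU-hour on-demand rate (not a quoted price); only the 30-60% range comes from the text.

```python
def gpu_cost(gpu_hours: float, hourly_rate: float, discount: float = 0.0) -> float:
    """Cost of gpu_hours billed at hourly_rate, less a fractional discount (0.30 = 30% off)."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return gpu_hours * hourly_rate * (1.0 - discount)


def breakeven_utilization(discount: float) -> float:
    """Utilization above which a reserved commitment beats on-demand.

    Reserved capacity is billed for every committed hour and on-demand only for hours
    used, so reserved is cheaper when utilization > 1 - discount.
    """
    return 1.0 - discount


ON_DEMAND_RATE = 2.50          # hypothetical $/GPU-hour, not a quoted price
committed_hours = 8 * 24 * 30  # 8 GPUs for a 30-day month = 5,760 GPU-hours

# On-demand cost if every one of those hours is actually used.
on_demand = gpu_cost(committed_hours, ON_DEMAND_RATE)
for discount in (0.30, 0.60):  # ends of the 30-60% range cited in the text
    reserved = gpu_cost(committed_hours, ON_DEMAND_RATE, discount)
    print(
        f"{discount:.0%} discount: ${reserved:,.0f} reserved vs ${on_demand:,.0f} on-demand; "
        f"pays off above {breakeven_utilization(discount):.0%} utilization"
    )
```

At a 30% discount, reserved capacity that sits idle more than 30% of the time costs more than paying on-demand, which is why the savings depend on forecasting utilization, not just on signing a commitment.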

Score your AI readiness: the self-assessment

The framework above tells you what to measure. This section tells you how to score it. Each dimension is rated on a 1-5 maturity scale, weighted by its relative importance, and combined into a composite readiness score. The whole thing takes about 15-20 minutes.

Maturity levels

| Level | Name | Description | Characteristics |
|---|---|---|---|
| 1 | Exploring | No formal AI initiatives exist. AI is on the radar but hasn't moved beyond conversations. | Limited organizational awareness of AI potential. No dedicated AI budget. Ad hoc data practices with siloed storage. No AI-specific talent hired. Technology decisions made without AI considerations. |
| 2 | Experimenting | Pilot projects are underway but nothing has reached production deployment. | 1-2 active AI pilots in isolated departments. Informal governance with no standardized review. Basic cloud usage for experimentation. Some AI talent hired or contracted. Initial executive interest but no formal sponsorship. |
| 3 | Operationalizing | Initial AI use cases are moving from pilot to production. Repeatable processes are forming. | Defined MLOps processes for model deployment. Basic governance framework with compliance checks. Dedicated compute resources for training and inference. Small-to-mid-sized ML team with defined roles. Documented AI strategy with executive sponsor. |
| 4 | Scaling | Multiple AI systems are running in production across business units. | Solid GPU infrastructure with reserved capacity. Mature governance with risk tiering and audit trails. Cross-functional AI teams using hub-and-spoke model. Reserved GPU instances for cost optimization. AI budget integrated into business unit planning. |
| 5 | Transforming | AI is embedded in the organization's strategy, culture, and daily operations. | Continuous model optimization with automated retraining. Industry-leading responsible AI practices. AI-first culture with organization-wide literacy programs. Optimized multi-cloud cost model with automated scaling. Board-level AI oversight and quarterly readiness reassessment. |

Use this table as your reference when scoring each dimension below. Be honest. The value of an AI readiness assessment comes from accuracy, not optimism.

Dimension scoring criteria

For each of the six dimensions, rate your organization on the 1-5 scale. The table below gives you the bookends, what a Level 1 looks like versus a Level 5, so you can place yourself accurately.

| Dimension | Weight | Level 1 (Exploring) | Level 3 (Operationalizing) | Level 5 (Transforming) |
|---|---|---|---|---|
| Data Infrastructure | 25% | Siloed data across departments. Manual ETL processes. No data catalog or metadata management. Data quality unknown or inconsistent. No feature store. | Central data warehouse operational. Basic automated pipelines (batch). Data catalog exists with partial coverage. Data quality monitored for key datasets. Basic feature engineering. | AI-ready data products with SLAs. Fully automated quality monitoring. Real-time feature stores. Data catalog with lineage tracking. Self-serve data access for ML teams. |
| Compute & Technical | 20% | No dedicated GPU resources. Shared cloud VMs for all workloads. No MLOps tooling. Models trained in notebooks without versioning. No model registry. | Dedicated GPU instances for training. Basic MLOps pipeline with model versioning. Model registry operational. CI/CD for model deployment exists but is partially manual. Monitoring for model drift in place. | Multi-cluster GPU infrastructure with automated scaling. Full MLOps pipeline (train, test, deploy, monitor). Automated model retraining triggers. Multi-cloud or hybrid deployment. Sub-second inference latency at scale. |
| Talent & Organization | 20% | No AI specialists on staff. No AI training or upskilling programs. AI initiatives driven by individual enthusiasts without formal support. No defined AI roles. | Small dedicated ML team (3-8 people). Some cross-functional collaboration between AI and business teams. Basic AI training available. Defined roles for data scientists and ML engineers. | AI Center of Excellence established. Hub-and-spoke operating model connecting central AI team to business units. Organization-wide AI literacy program. AI product managers bridging technical and business teams. |
| Strategy & Leadership | 15% | No documented AI strategy. No executive sponsor for AI. AI budget is ad hoc or nonexistent. Use cases discussed but not formally prioritized. | Documented AI strategy aligned to business objectives. C-suite executive sponsor identified. Dedicated AI budget allocated. Portfolio of use cases prioritized with ROI estimates. | AI-first strategy integrated into business planning. Board-level AI oversight committee. AI embedded in annual planning for every business unit. Multi-year roadmap with quarterly milestones. |
| Governance & Compliance | 10% | No AI-specific policies. Risk reviews are ad hoc and inconsistent. No awareness of AI regulatory requirements. No bias testing or model validation process. | Basic AI governance framework documented. Some compliance checks for high-risk applications. Risk tiering system in development. Initial responsible AI guidelines published. | Mature governance with automated compliance monitoring. Full risk tiering with human-in-the-loop requirements for high-risk decisions. EU AI Act and NIST AI RMF compliant. Responsible AI operationalized with continuous bias testing and explainability. |
| Scalability & Cost | 10% | No scaling plan beyond current pilots. Fully on-demand cloud pricing. No cost tracking for AI workloads. No capacity planning process. | Some reserved compute capacity for predictable workloads. Basic cost tracking and attribution for AI projects. Initial capacity planning for next quarter. Multi-cloud evaluation underway. | Optimized multi-cloud strategy with reserved GPU instances. Automated cost management and workload scheduling. Capacity planning integrated into business forecasting. 30-60% cost savings achieved through reserved pricing and optimization. |

Calculating your composite score

Multiply each dimension score by its weight, then sum the results. This gives you a weighted composite out of 5. To convert to a percentage, divide by 5 and multiply by 100.

Rate each dimension on a 1-5 scale using the scoring criteria table, then multiply by the weight to get your weighted score.

| Dimension | Weight | Your Score (1-5) | Weighted Score |
|---|---|---|---|
| Data Infrastructure | 0.25 | ___ | ___ |
| Compute & Technical | 0.20 | ___ | ___ |
| Talent & Organization | 0.20 | ___ | ___ |
| Strategy & Leadership | 0.15 | ___ | ___ |
| Governance & Compliance | 0.10 | ___ | ___ |
| Scalability & Cost | 0.10 | ___ | ___ |
| Total | 1.00 | | ___ |

Formula: Composite Score (%) = (Total Weighted Score / 5) x 100

Example Calculation:

| Dimension | Weight | Score | Weighted Score |
|---|---|---|---|
| Data Infrastructure | 0.25 | 4 | 1.00 |
| Compute & Technical | 0.20 | 2 | 0.40 |
| Talent & Organization | 0.20 | 3 | 0.60 |
| Strategy & Leadership | 0.15 | 4 | 0.60 |
| Governance & Compliance | 0.10 | 2 | 0.20 |
| Scalability & Cost | 0.10 | 2 | 0.20 |
| Total | 1.00 | | 3.00 |

Example Composite Score: (3.00 / 5) x 100 = 60% — Operationalizing stage
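The formula and worked example above translate directly into a short function. This is a minimal sketch: the dictionary keys mirror the dimension names in the scoring table, and the input validation (every dimension scored exactly once, integer scores from 1 to 5) is our own assumption about how a self-assessment should be checked, not part of the published methodology.

```python
WEIGHTS = {
    "Data Infrastructure": 0.25,
    "Compute & Technical": 0.20,
    "Talent & Organization": 0.20,
    "Strategy & Leadership": 0.15,
    "Governance & Compliance": 0.10,
    "Scalability & Cost": 0.10,
}
assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9  # weights must sum to 1.00


def composite_score(scores: dict[str, int]) -> tuple[float, float]:
    """Return (weighted total out of 5, composite percentage) for 1-5 dimension scores."""
    if set(scores) != set(WEIGHTS):
        raise ValueError("score every dimension exactly once")
    for name, score in scores.items():
        if score not in range(1, 6):
            raise ValueError(f"{name}: score must be an integer from 1 to 5")
    total = sum(WEIGHTS[name] * score for name, score in scores.items())
    # Composite Score (%) = (Total Weighted Score / 5) x 100; rounding hides float noise.
    return round(total, 2), round(total / 5 * 100, 1)


# The worked example from the table above.
example = {
    "Data Infrastructure": 4,
    "Compute & Technical": 2,
    "Talent & Organization": 3,
    "Strategy & Leadership": 4,
    "Governance & Compliance": 2,
    "Scalability & Cost": 2,
}
print(composite_score(example))  # (3.0, 60.0)
```

Rounding the outputs is a deliberate choice: binary floating point turns weights like 0.15 into values such as 0.6000000000000001, and without rounding a score that should read exactly 60% could land a hair above a stage boundary.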

Data (25%) and compute (20%, tied with talent) carry the heaviest weights because they're the most common blockers. Organizations with strong strategy but weak data and infrastructure consistently stall at the pilot stage.

Want your score calculated automatically? Take the interactive assessment below for a personalized readiness report with dimension-by-dimension recommendations.

What your score means

A composite number is useful, but interpretation is what drives action. The table below maps your score to a maturity stage, a plain-language description of where you stand, and the highest-priority actions for that stage.

| Score Range | Maturity Stage | Description | Recommended Actions |
|---|---|---|---|
| 0-20% | Exploring | AI is on the radar but no structured approach exists. Conversations are happening but nothing is formalized. The organization lacks foundational capabilities across most dimensions. | 1. Define an AI strategy tied to specific business outcomes with executive sponsorship. 2. Audit existing data assets for quality, accessibility, and gaps. 3. Identify a single high-value use case for a first pilot. Don't try to boil the ocean. |
| 21-40% | Experimenting | Pilots are underway in isolated pockets, but nothing has reached production. Some foundational investments have been made, but there are still real gaps in infrastructure and governance. | 1. Invest in data infrastructure: build a central warehouse, automate key pipelines, start a data catalog. 2. Hire or contract ML engineering talent to complement data science. 3. Establish basic AI governance policies and a risk review process. |
| 41-60% | Operationalizing | Initial use cases are moving from pilot to production. Repeatable processes are forming but infrastructure is becoming the bottleneck. This is the stage where most organizations stall. | 1. Scale MLOps tooling: model registry, automated testing, CI/CD for models, production monitoring. 2. Invest in dedicated GPU infrastructure for training and inference workloads. 3. Formalize governance with risk tiering and human-in-the-loop requirements for high-risk decisions. |
| 61-80% | Scaling | Multiple AI systems are running in production across business units. The challenge shifts from building to optimizing: managing costs, maturing governance, and scaling teams. | 1. Optimize compute costs with reserved GPU instances, which typically save 30-60% over on-demand. 2. Mature governance for autonomous AI agents with continuous model audits and bias assessments. 3. Build an AI Center of Excellence with a hub-and-spoke model connecting to business units. |
| 81-100% | Transforming | AI is embedded across the organization's strategy and daily operations. The focus is continuous optimization, industry benchmarking, and staying competitive. | 1. Benchmark against industry leaders quarterly using frameworks from Gartner, McKinsey, and Deloitte. 2. Keep innovating on new model architectures, fine-tuning approaches, and emerging use cases. 3. Optimize your cost model at scale across multi-cloud with automated workload scheduling and capacity planning. |
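Mapping a composite to its stage is a lookup against the table's upper bounds. A minimal sketch, with inclusive upper bounds taken from the score ranges above. One property worth knowing: because every weight is a multiple of 0.05, integer scores always produce a whole-number percentage, so the ranges leave no gaps; and because the lowest possible score is 1, the lowest possible composite is 20%, meaning the Exploring band is reached only when every dimension scores 1.

```python
STAGES = [  # (inclusive upper bound in %, stage), from the score-range table
    (20, "Exploring"),
    (40, "Experimenting"),
    (60, "Operationalizing"),
    (80, "Scaling"),
    (100, "Transforming"),
]


def maturity_stage(composite_pct: float) -> str:
    """Map a composite percentage (0-100) to its maturity stage."""
    pct = round(composite_pct, 6)  # absorb float noise such as 60.00000000000001
    if not 0 <= pct <= 100:
        raise ValueError("composite must be between 0 and 100")
    for upper, stage in STAGES:
        if pct <= upper:
            return stage
    raise AssertionError("unreachable: the last stage ends at 100")


for pct in (20, 45, 60, 61, 95):
    print(f"{pct}% -> {maturity_stage(pct)}")
```

The rounding step matters at the boundaries: a weighted sum that should be exactly 60% can come out as 60.00000000000001 in floating point, which would otherwise be misfiled as Scaling.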

Dimension gaps matter more than the overall score

A composite score of 60% can mean very different things. The worked example above (4s in data and strategy, a 3 in talent, 2s in compute, governance, and scalability) lands at exactly 60%, and so does an organization scoring 3s everywhere, yet the two face very different challenges. A single low-scoring dimension can block progress across the board. Poor data quality undermines every model, regardless of how strong your strategy or talent might be.
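A quick way to see why the composite alone hides this is to score two profiles side by side and look at the spread between the highest and lowest dimension. In this sketch, the uneven profile is the worked example from the scoring section and the flat profile scores 3 everywhere; the function and variable names are illustrative.

```python
WEIGHTS = {
    "Data Infrastructure": 0.25,
    "Compute & Technical": 0.20,
    "Talent & Organization": 0.20,
    "Strategy & Leadership": 0.15,
    "Governance & Compliance": 0.10,
    "Scalability & Cost": 0.10,
}


def composite_pct(scores: dict[str, int]) -> float:
    """Weighted composite as a percentage, rounded to absorb floating-point noise."""
    return round(sum(WEIGHTS[d] * s for d, s in scores.items()) / 5 * 100, 1)


uneven = {
    "Data Infrastructure": 4,
    "Compute & Technical": 2,
    "Talent & Organization": 3,
    "Strategy & Leadership": 4,
    "Governance & Compliance": 2,
    "Scalability & Cost": 2,
}
flat = {dim: 3 for dim in WEIGHTS}

for label, profile in (("uneven", uneven), ("flat", flat)):
    spread = max(profile.values()) - min(profile.values())
    print(f"{label}: {composite_pct(profile)}% composite, lowest score {min(profile.values())}, spread {spread}")
```

Both profiles report 60.0%, but the uneven one has three dimensions stuck at 2 and a spread of 2, while the flat one has no dimension below 3. Reporting the lowest score or the spread next to the composite keeps that difference visible.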

Rank your dimensions from lowest to highest. Your lowest-scoring dimension is almost always your highest-priority investment.
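Ranking is a sort on score, but ties are common on a 1-5 scale. A minimal sketch, assuming ties should go to the dimension with the higher weight, since a point gained there moves the composite further; that tie-break rule is our assumption, not part of the framework. The scores are the worked example from the scoring section.

```python
WEIGHTS = {
    "Data Infrastructure": 0.25,
    "Compute & Technical": 0.20,
    "Talent & Organization": 0.20,
    "Strategy & Leadership": 0.15,
    "Governance & Compliance": 0.10,
    "Scalability & Cost": 0.10,
}


def priority_order(scores: dict[str, int], weights: dict[str, float]) -> list[str]:
    """Dimensions from lowest to highest score; ties go to the heavier-weighted dimension first."""
    # Python's sort is stable, so full ties keep the order in which dimensions were listed.
    return sorted(scores, key=lambda dim: (scores[dim], -weights[dim]))


scores = {
    "Data Infrastructure": 4,
    "Compute & Technical": 2,
    "Talent & Organization": 3,
    "Strategy & Leadership": 4,
    "Governance & Compliance": 2,
    "Scalability & Cost": 2,
}
for rank, dim in enumerate(priority_order(scores, WEIGHTS), start=1):
    print(f"{rank}. {dim} (score {scores[dim]})")
```

For the example profile, Compute & Technical comes first: it shares the lowest score with governance and scalability but carries twice their weight.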

The chart above shows each dimension's weight in the composite score. Use it to prioritize: a one-point improvement in data infrastructure (25% weight) has 2.5x the impact of a one-point improvement in governance (10% weight). When resources are tight, invest where the leverage is highest.
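The leverage figure follows from the composite formula: raising one dimension by one level adds its weight to the weighted total, and dividing by 5 and multiplying by 100 turns that into percentage points. This sketch simply tabulates that per dimension; it uses the weights from the scoring table and nothing else.

```python
WEIGHTS = {
    "Data Infrastructure": 0.25,
    "Compute & Technical": 0.20,
    "Talent & Organization": 0.20,
    "Strategy & Leadership": 0.15,
    "Governance & Compliance": 0.10,
    "Scalability & Cost": 0.10,
}


def point_gain(weight: float) -> float:
    """Composite percentage points gained by raising one dimension's score by one level."""
    # One level adds `weight` to the weighted total (out of 5); scale that to a percentage.
    return weight / 5 * 100


for name, weight in sorted(WEIGHTS.items(), key=lambda item: -item[1]):
    print(f"{name}: +{point_gain(weight):.1f} points per level")

ratio = point_gain(WEIGHTS["Data Infrastructure"]) / point_gain(WEIGHTS["Governance & Compliance"])
print(f"Data vs. governance leverage: {ratio:.1f}x")
```

A one-level gain in data infrastructure adds 5 points to the composite versus 2 points for governance, the 2.5x ratio described above. Leverage is only half the picture, though: a dimension at 1 can block deployment outright, whatever its weight.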

Reassess quarterly. AI maturity shifts fast. Deloitte's research shows worker access to AI rose 50% in 2025 alone, which means demands on infrastructure, governance, and talent are changing faster than annual planning cycles can keep up. Treat the assessment as a recurring diagnostic, not a one-time exercise.
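Treating the assessment as a recurring diagnostic means comparing each quarter's scores against the last, not just recomputing the composite. A minimal sketch with hypothetical quarterly scores, in which compute improves while governance slips; the function name and the data are illustrative.

```python
def score_changes(previous: dict[str, int], current: dict[str, int]) -> dict[str, int]:
    """Per-dimension change in score between two assessments of the same dimensions."""
    return {dim: current[dim] - previous[dim] for dim in previous}


# Hypothetical assessments from consecutive quarters.
q1 = {
    "Data Infrastructure": 4,
    "Compute & Technical": 2,
    "Talent & Organization": 3,
    "Strategy & Leadership": 4,
    "Governance & Compliance": 2,
    "Scalability & Cost": 2,
}
q2 = {**q1, "Compute & Technical": 3, "Governance & Compliance": 1}

changes = score_changes(q1, q2)
improvements = [dim for dim, delta in changes.items() if delta > 0]
regressions = [dim for dim, delta in changes.items() if delta < 0]
print("Improved:", improvements)
print("Regressed:", regressions)
```

Here the composite barely moves (the +1 in compute outweighs the -1 in governance), so a regression that could become a deployment blocker only shows up in the per-dimension diff.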

Closing the gaps: recommendations by maturity stage

Knowing your score is step one. Acting on it is what actually matters. The recommendations below are organized into three tiers based on your composite score. Each tier has a different strategic priority. Trying to do everything at once is the most common mistake. Sequence matters.
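The three tiers use different cut points (40% and 70%) from the five maturity stages (20/40/60/80%), so it helps to map a composite to its tier explicitly. A minimal sketch with inclusive upper bounds taken from the tier headings; the function name is illustrative.

```python
TIERS = [  # (inclusive upper bound in %, tier), from the tier headings
    (40, "Early stage"),
    (70, "Mid stage"),
    (100, "Advanced stage"),
]


def recommendation_tier(composite_pct: float) -> str:
    """Map a composite percentage to one of the three recommendation tiers."""
    pct = round(composite_pct, 6)  # absorb float noise such as 60.00000000000001
    if not 0 <= pct <= 100:
        raise ValueError("composite must be between 0 and 100")
    for upper, tier in TIERS:
        if pct <= upper:
            return tier
    raise AssertionError("unreachable: the last tier ends at 100")


for pct in (35, 60, 65, 75):
    print(f"{pct}% -> {recommendation_tier(pct)}")
```

Because the boundaries differ, a 65% composite sits in the Scaling stage of the score table but in the Mid stage tier here, so an organization in that band should read both sets of recommendations.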

Early stage (0-40%)

The priority at this stage is building foundations, not scaling AI. Resist the temptation to purchase expensive infrastructure before you have a clear use case and clean data to support it.

- Define a business-aligned AI strategy with executive sponsorship. Pick one use case with measurable ROI, not five.

- Audit your data estate. Map data sources, assess quality, and identify the gaps between what you have and what your target use case requires.

- Hire your first ML engineer or data scientist. A single skilled practitioner will accomplish more than a committee at this stage.

- Start with managed cloud services for initial experimentation. On-demand GPU access is fine when workloads are exploratory and intermittent.

Mid stage (41-70%)

This is the stage where infrastructure becomes the bottleneck. You have proven use cases, but moving them to production requires real compute capacity, repeatable MLOps, and formal governance.

- Scale your MLOps pipeline. Model registry, automated testing, CI/CD for models, and monitoring are no longer optional.

- Invest in dedicated GPU infrastructure. Production training and inference workloads need consistent, reserved capacity. Reserved GPU instances deliver 30-60% cost savings over on-demand and eliminate queuing delays.

- Formalize AI governance. Implement risk tiering for AI applications, establish human-in-the-loop requirements for high-risk decisions, and begin EU AI Act compliance planning.

- Build cross-functional teams that pair domain experts with ML engineers. The hub-and-spoke model works: centralize standards and reusable components, distribute execution to business units.

Advanced stage (71-100%)

At this stage, the focus shifts from building to optimizing. You have multiple AI systems in production. The question is whether you can run them efficiently and scale further without costs spiraling.

- Optimize compute costs through reserved GPU cloud instances, workload scheduling, and multi-cloud capacity planning. At scale, the typical 30-60% gap between on-demand and reserved pricing adds up to substantial savings.

- Mature your governance for autonomous agents. As AI systems gain more autonomy, governance needs to keep pace. Model audits, bias assessments, and incident response plans should run continuously.

- Benchmark against industry peers quarterly. Your readiness score is most meaningful in context. Use industry data from Deloitte, Gartner, and McKinsey to calibrate.

- Invest in AI literacy organization-wide. PwC's research shows 80% of AI value comes from process redesign. That redesign only happens when non-technical leaders understand what AI can and cannot do.

Take the full assessment

This guide gives you the framework. The interactive assessment gives you the score.

The full AI readiness assessment questionnaire walks you through 3-5 questions per dimension, calculates your weighted composite automatically, and generates a personalized readiness report. You get a dimension-by-dimension radar chart, comparison against enterprise benchmarks, and specific infrastructure recommendations tailored to your maturity stage.

[Take the AI Readiness Assessment](https://inference.net/ai-readiness-assessment)


Frequently asked questions

What is an AI readiness assessment?

An AI readiness assessment is a structured evaluation that measures how prepared an organization is to adopt, deploy, and scale AI. It typically scores capabilities across multiple dimensions, including data, compute, talent, strategy, governance, and scalability, to identify gaps and prioritize investments.

How long does an AI readiness assessment take?

A self-assessment using a framework like the one in this guide takes 15-20 minutes. A full organizational assessment with stakeholder interviews, data audits, and expert validation typically takes 2-4 weeks.

What are the pillars of AI readiness?

Different frameworks use between four and seven pillars. This AI readiness framework uses six: data infrastructure, compute and technical infrastructure, talent and organization, strategy and leadership, governance and compliance, and scalability and cost optimization. What makes it different is that compute infrastructure gets its own dimension rather than being treated as an afterthought.

How is AI readiness different from data readiness?

Data readiness is one dimension within broader AI readiness. An organization can have excellent data infrastructure and still score poorly on a readiness assessment if it lacks compute capacity, governance frameworks, AI talent, or executive strategy alignment.

How often should you reassess AI readiness?

Quarterly. AI capabilities, regulatory requirements, and infrastructure options evolve fast. A dimension that scored well six months ago, particularly compute and governance, may become a bottleneck as workloads scale and regulations tighten.
