Introduction

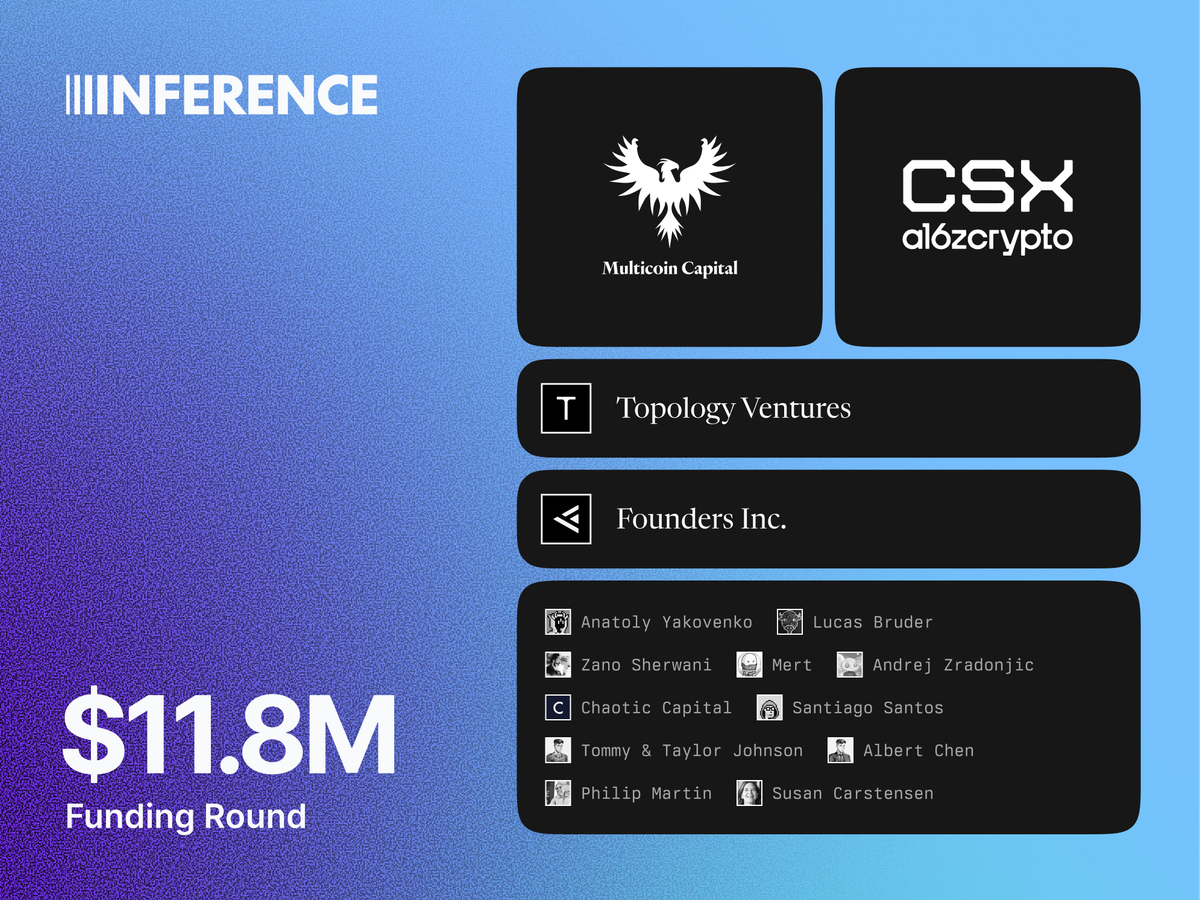

We’re excited to announce that we have raised $11.8M in Series Seed funding, led by Multicoin Capital and a16z CSX, with participation from Topology Ventures, Founders, Inc., and an exceptional group of angel investors.

Inference.net enables companies to train and deploy custom AI models that outperform general-purpose alternatives at a fraction of the cost. This capital will accelerate our mission to help businesses take control of their AI destiny.

A fork in the road

Every company building with AI faces a critical challenge: pay unsustainable prices to OpenAI, Anthropic, and Google for general-purpose models, or compromise on quality with cheaper alternatives. This dependency on frontier labs creates three fundamental risks. First, spiraling costs limit scale: as usage grows from thousands to billions of requests, API costs can consume entire budgets. Second, companies lack control over core business infrastructure, leaving them vulnerable to price changes, model deprecations, and service disruptions. Third, when everyone uses the same models, true differentiation becomes impossible.

Companies shouldn’t have to choose between quality and cost. They shouldn’t be forced to send sensitive customer data to third-party servers. And they shouldn’t build their competitive advantage on infrastructure they don’t control.

Where we stand

Over the past year, we’ve trained and deployed custom language models for some of the fastest-growing AI-native companies in the world. Our approach is straightforward: we identify the specific, repeatable tasks that businesses run millions of times and train purpose-built models that excel at exactly those tasks. Whether extracting data from documents, captioning images, or classifying content, our models deliver superior results for their specialized domains.

The results speak for themselves. Custom models match or exceed frontier model performance while running 2-3x faster and costing up to 90% less. These models, up to 100x smaller than GPT-5-class systems, show that optimizing for specific tasks beats general capability on cost-to-performance.

Specialized models transform the economics of using AI at scale. Companies spending millions annually on API calls can reduce costs by up to 90%. Applications previously constrained by latency can now serve real-time use cases. Businesses concerned about data privacy can run models on their own infrastructure. Most importantly, companies gain full control of the AI models powering their core products.

Beyond economics, custom models provide lasting competitive advantage. When every company has access to the same frontier models, differentiation disappears. Custom models trained on proprietary data and optimized for specific workflows become a moat that competitors cannot replicate. Your AI becomes yours, and yours alone.

Moving forward

The next decade will witness two parallel tracks in AI development. Frontier labs will continue pushing the boundaries with massive, general-purpose models for open-ended tasks like coding, creative writing, and complex reasoning. These models will remain expensive but essential for exploratory use cases.

Simultaneously, a new ecosystem of specialized models will power the repetitive, high-volume tasks that constitute the majority of business AI usage. Companies will rely on frontier labs for cutting-edge capabilities while owning and operating custom models for core operations.

As companies scale from prototypes to production, the cost of relying on frontier labs becomes untenable. Meanwhile, the open-source ecosystem has matured dramatically, and new post-training techniques make it possible to match frontier capabilities on specific tasks with far fewer parameters.

This funding enables us to expand our research and development efforts into new frontiers of model and infrastructure performance while scaling our ability to serve more companies.

Join us

The transition from renting to owning intelligence has begun. We aim to accelerate this process. If you’re spending more than $50,000 per month on closed-source AI providers, we can help you cut costs and improve performance in as little as 4 weeks. Book a call with our research team to learn more.

Own your model. Scale with confidence.

Schedule a call with our research team to learn more about custom training. We’ll propose a plan that beats your current SLA and unit cost.