Introduction

We introduce LOGIC, a practical method for verifying inference in decentralized GPU networks. LOGIC utilizes compressed token-level log-probabilities and statistical testing to detect dishonest GPU operators with high accuracy, while adding minimal overhead and seamlessly integrating with existing inference engines, such as vLLM and SGLang.

The system is resistant to decode-time spoofing attacks, a critical property for permissionless networks where economic incentives might encourage GPU operators to use smaller or quantized models during generation. With payload sizes of only ~250-313 KB per 10k tokens and verification requiring just 10 single-token recomputations, LOGIC provides a production-ready and battle-tested solution for trustless inference.

Crucially, LOGIC requires no modifications to underlying inference engines and integrates naturally with standard OpenAI-style APIs, making it immediately deployable across most production environments.

Contributors: Amarjot Singh, Francesco Virga, Sean Smith, Sam Hogan

Code: https://github.com/context-labs/logic

Paper: [Coming Soon]

Trust in permissionless inference networks

For the last 18 months, Inference.net has operated a globally decentralized AI inference network with up to 10,000 heterogeneous GPUs, ranging from consumer graphics cards in a laptop to datacenter hardware in Tier 3 facilities operated by public companies. The network is permissionless by design: any GPU owner can download our client software and immediately start serving inference requests.

This openness creates a fundamental challenge: How do we verify that GPU operators are indeed running the models they claim to be running?

In a permissionless environment with economic incentives, malicious actors have multiple avenues for dishonest behavior:

- Model substitution: Running a smaller, cheaper model (e.g., swapping a 70B model for an 8B model)

- Quantization spoofing: Using lower-precision quantized models to reduce compute costs

- Speculative decoding attacks: Prefilling with the claimed model but decoding with a smaller one to save on generation costs

- Returning fraudulent text: Simply fabricating responses without running inference at all

Traditional verification mechanisms fall short. Recreating responses verbatim is expensive and often fails due to non-determinism and temperature settings. Cryptographic approaches like zero-knowledge proofs are orders of magnitude too slow and expensive for modern LLM inference. Activation-based methods require heavy instrumentation of inference engines and are sensitive to hardware non-determinism.

Most critically, many existing verification methods only validate the prefill phase. In permissionless networks where participants are economically motivated to minimize costs, decode-time spoofing becomes the primary attack vector. An operator could prefill honestly with the correct model but secretly switch to a cheaper model for generation, where computational costs are highest.

Verification through log-prob distributions

LOGIC takes a fundamentally different approach: instead of trying to recreate exact activations or token sequences, we verify that the statistical distribution of the model's outputs matches what we expect from the claimed model.

The core insight is that every language model produces a unique "fingerprint" in its token-level log-probability distributions. Different models, quantization schemes, or architectural changes all produce measurably different distributions over the vocabulary at each position.

Here's how it works:

During Inference (Operator Side)

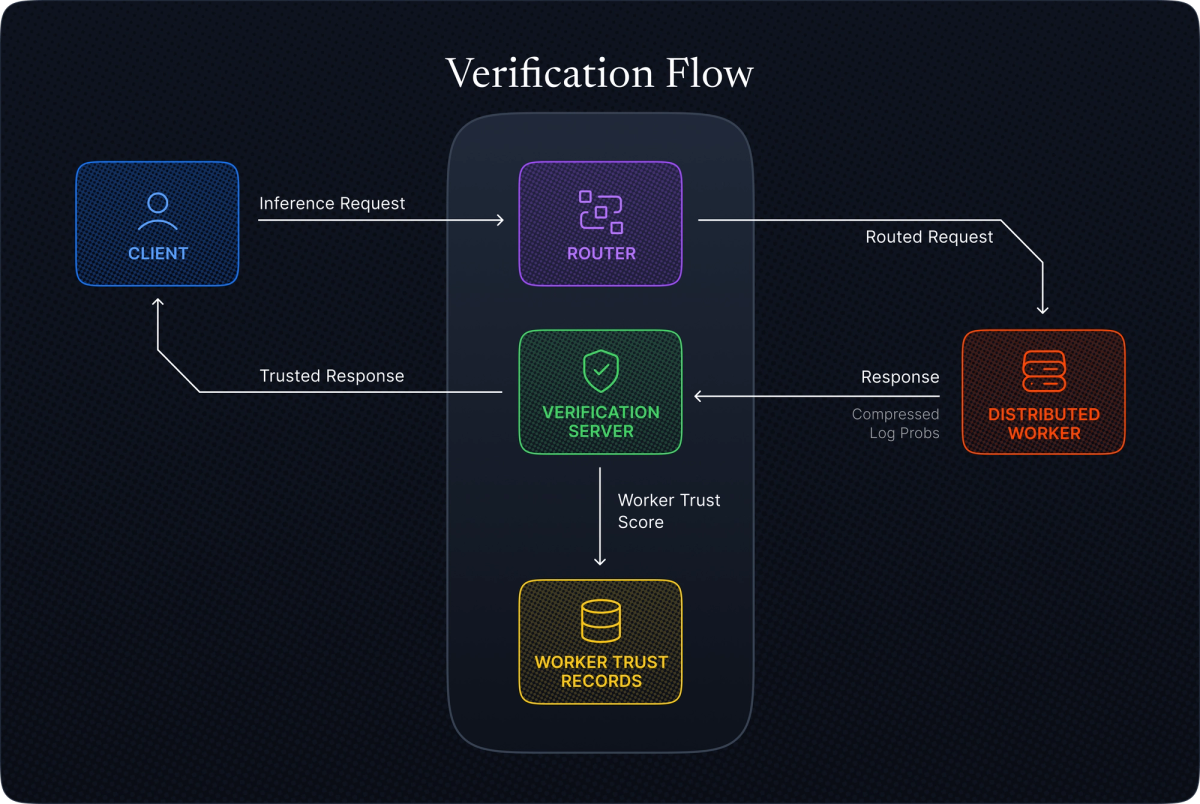

For each generated token, the untrusted GPU computes the top-k token log-probabilities—the most likely next tokens and their probabilities. These are compressed and returned alongside the generated text.

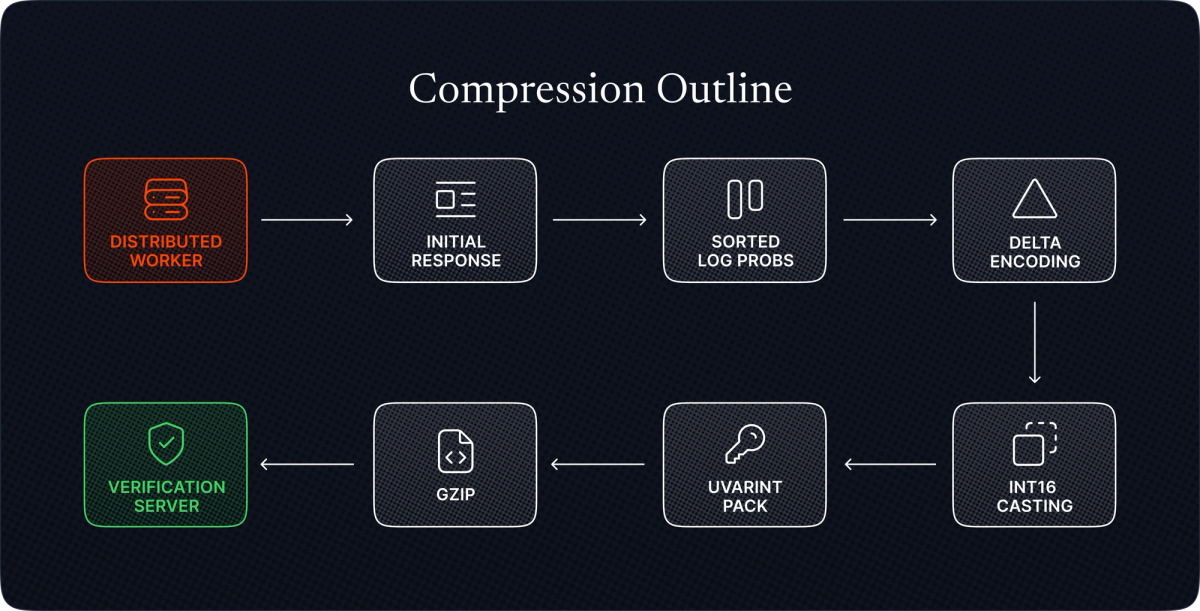

The compression pipeline is carefully designed for efficiency:

- Millilog quantization: Round log-probabilities to the nearest 0.001

- Delta encoding: Store differences between sorted log-probs

- Efficient packing: Token IDs as variable-length integers, deltas as int16

- Gzip compression: Final bytestream compression
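The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not the LOGIC wire format: the LEB128-style varint, the little-endian int16 layout, the interleaving of IDs and deltas, the clamping rule, and every function name here are assumptions made for the example.

```python
import gzip
import struct

INT16_MAX = 32767

def encode_varint(n: int) -> bytes:
    """Encode a non-negative integer as a LEB128-style varint (assumed format)."""
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)  # more bytes follow
        else:
            out.append(byte)
            return bytes(out)

def pack_position(top_k: list[tuple[int, float]]) -> bytes:
    """Pack one position's (token_id, logprob) pairs into bytes."""
    # Sort by descending log-prob so every delta is non-negative and small.
    ordered = sorted(top_k, key=lambda pair: pair[1], reverse=True)
    out = bytearray()
    previous = 0
    for token_id, logprob in ordered:
        milli = round(logprob * 1000)   # millilog quantization
        delta = previous - milli        # delta encoding against the previous entry
        previous = milli
        out += encode_varint(token_id)
        # Clamping is an assumption; a clamped delta would not round-trip exactly.
        out += struct.pack("<h", min(delta, INT16_MAX))
    return bytes(out)

def unpack_position(buf: bytes, k: int, offset: int = 0):
    """Inverse of pack_position for a known k; returns (pairs, next_offset)."""
    pairs, running = [], 0
    for _ in range(k):
        token_id, shift = 0, 0
        while True:
            byte = buf[offset]
            offset += 1
            token_id |= (byte & 0x7F) << shift
            shift += 7
            if not byte & 0x80:
                break
        (delta,) = struct.unpack_from("<h", buf, offset)
        offset += 2
        running -= delta
        pairs.append((token_id, running / 1000))
    return pairs, offset

def compress_payload(positions: list[list[tuple[int, float]]]) -> bytes:
    """Concatenate all packed positions and gzip the resulting bytestream."""
    return gzip.compress(b"".join(pack_position(p) for p in positions))
```

Quantization is the only lossy step in this sketch: decoding recovers each log-probability to within 0.0005 of its original value, while token IDs survive exactly.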

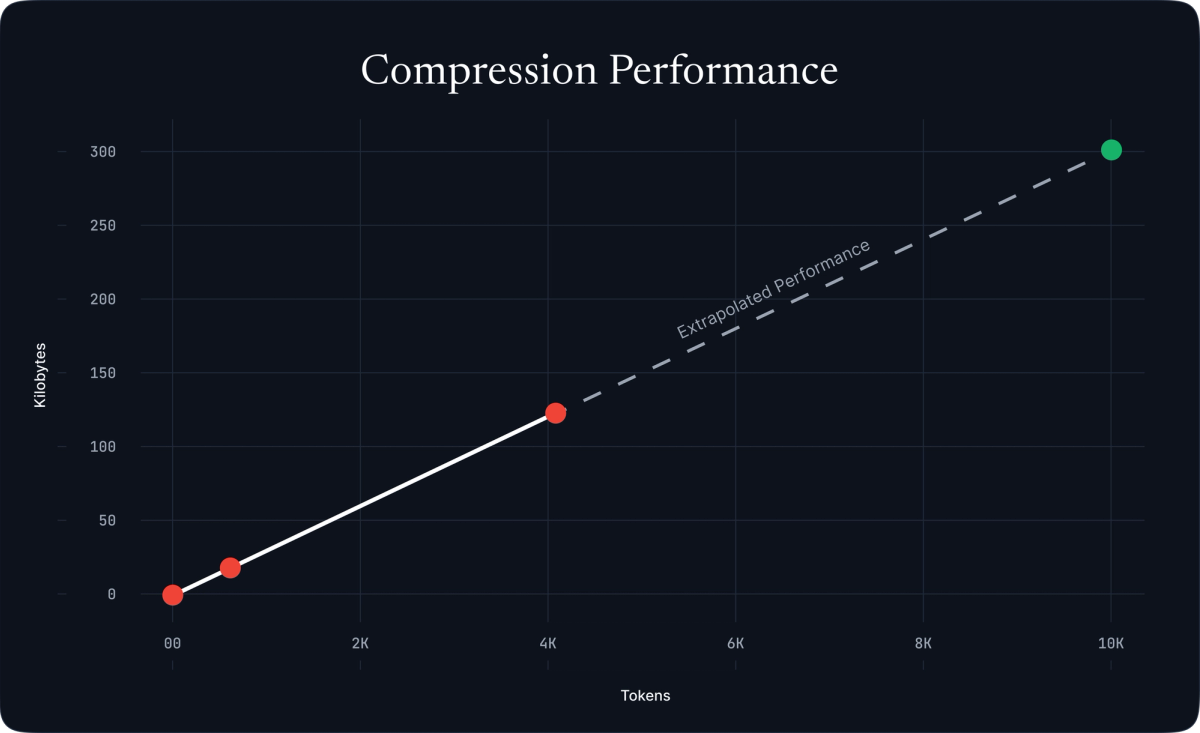

This achieves remarkable efficiency: for k=20, we observe only ~25-31 bytes per token after compression, or approximately 250-313 KB for 10,000 tokens.

Figure 1: Compression outline showing the flow from top-k logprobs through sorting, quantization, delta encoding, and gzip compression.

Figure 2: Compression performance across different sequence lengths, extrapolating to ~300 KB for 10k tokens.

During verification (verifier side)

The verifier doesn't need to regenerate the entire sequence. Instead, it randomly samples S positions (default: 10) from the decode phase and recomputes only those single-token distributions using KV-cache optimization.
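As a rough sketch of the sampling step, the verifier might draw positions uniformly from the decode range only. The function name, the `prompt_len`/`total_len` parameters, and the choice to return positions in sorted order are assumptions for illustration; the source does not specify how positions are drawn beyond "randomly".

```python
import random

def sample_decode_positions(prompt_len: int, total_len: int,
                            num_samples: int = 10, seed=None) -> list[int]:
    """Pick up to num_samples distinct token positions from the decode phase.

    Positions [0, prompt_len) belong to the prompt (prefill) and are never
    sampled; positions [prompt_len, total_len) are generated tokens.
    """
    rng = random.Random(seed)
    decode_positions = range(prompt_len, total_len)
    count = min(num_samples, len(decode_positions))
    # Sorting is an assumption: ascending order lets a verifier extend a
    # cached prefix instead of rebuilding it for each position.
    return sorted(rng.sample(decode_positions, count))
```

In a real deployment the seed would presumably need to be unpredictable to the operator, so that it cannot know in advance which positions will be checked.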

For each sampled position t, we:

- Recompute the top-k log-probabilities using the exact context

- Align with the operator's top-k by taking the intersection: ℐₜ = Sₜʷ ∩ Sₜᵛ

- Track the overlap size mₜ = |ℐₜ| and Jaccard similarity Jₜ = mₜ/(2k - mₜ)

We then aggregate the aligned log-probabilities:

- Operator set: Lʷ = ⋃ₜ∈𝒯 {ℓₜ,ⱼʷ : j ∈ ℐₜ}

- Verifier set: Lᵛ = ⋃ₜ∈𝒯 {ℓₜ,ⱼᵛ : j ∈ ℐₜ}

Typically, this gives us n₁ = n₂ ≈ S × m samples, where m ≈ 10-20 is the average overlap.
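The alignment and aggregation steps can be illustrated as follows. This sketch assumes each side's top-k at a position is available as a dict mapping token ID to log-probability; the function names and return shapes are hypothetical.

```python
def align_position(operator_topk: dict[int, float],
                   verifier_topk: dict[int, float]):
    """Align two top-k sets on their shared token IDs for one position.

    Returns (operator_logprobs, verifier_logprobs, overlap m_t, Jaccard J_t).
    """
    shared = sorted(operator_topk.keys() & verifier_topk.keys())
    m = len(shared)
    # With both sets of size k this equals 2k - m_t, as in the text.
    union_size = len(operator_topk) + len(verifier_topk) - m
    jaccard = m / union_size if union_size else 0.0
    op = [operator_topk[tok] for tok in shared]
    ve = [verifier_topk[tok] for tok in shared]
    return op, ve, m, jaccard

def aggregate(sampled_positions):
    """Pool aligned log-probs over all sampled positions.

    sampled_positions: iterable of (operator_topk, verifier_topk) dict pairs.
    Returns (L_w, L_v, overlaps, jaccards); L_w and L_v have equal length.
    """
    l_w, l_v, overlaps, jaccards = [], [], [], []
    for operator_topk, verifier_topk in sampled_positions:
        op, ve, m, jaccard = align_position(operator_topk, verifier_topk)
        l_w.extend(op)
        l_v.extend(ve)
        overlaps.append(m)
        jaccards.append(jaccard)
    return l_w, l_v, overlaps, jaccards
```

Because both pooled lists are built from the same intersections, they always have the same length, which is why n₁ = n₂ in the text.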

Statistical testing

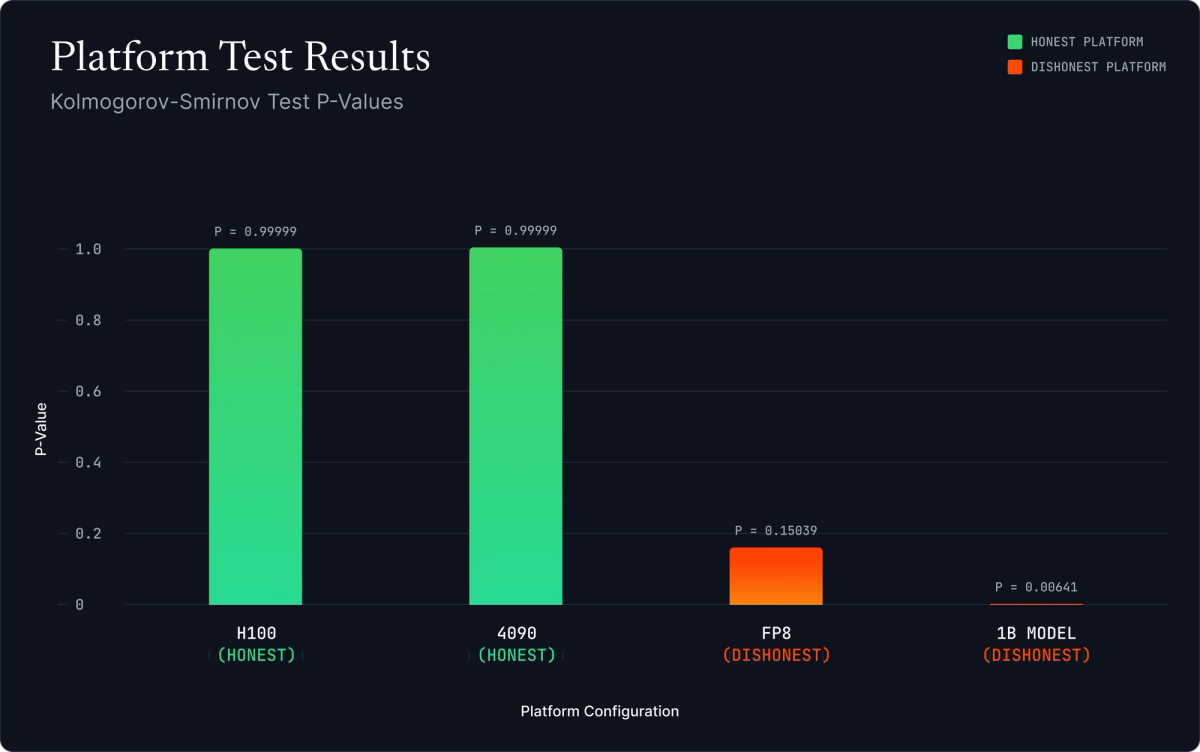

With two samples of log-probabilities in hand, we apply the Kolmogorov-Smirnov (KS) two-sample test. This non-parametric test compares the cumulative distribution functions:

Dₙ₁,ₙ₂ = sup_x |Fₙ₁(x) - Gₙ₂(x)|

where the effective sample size is nₑff = (n₁ × n₂)/(n₁ + n₂).

The KS test produces a p-value pₖₛ.

When the operator is honest and running the claimed model, the distributions match and pₖₛ ≈ 1.

When the operator is dishonest—using a different model, quantization, or spoofing strategy—the distributions diverge and pₖₛ drops dramatically.

We set a threshold pₘᵢₙ = 0.95: operators with pₖₛ ≥ pₘᵢₙ are accepted as honest.
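To make the test concrete, here is a dependency-free sketch of the D statistic and an accept/reject decision. The p-value uses the standard asymptotic Kolmogorov series evaluated at λ = √nₑff · D, which is an approximation chosen for this example and not necessarily what LOGIC computes; in practice a library routine such as SciPy's `ks_2samp` would be a natural choice. The function names are illustrative.

```python
import math

def ks_statistic(sample_w, sample_v) -> float:
    """Largest absolute gap between the two empirical CDFs (the D statistic)."""
    a, b = sorted(sample_w), sorted(sample_v)
    n1, n2 = len(a), len(b)
    i = j = 0
    d = 0.0
    while i < n1 and j < n2:
        x = min(a[i], b[j])
        # Advance both cursors past every value <= x so ties are handled together.
        while i < n1 and a[i] <= x:
            i += 1
        while j < n2 and b[j] <= x:
            j += 1
        d = max(d, abs(i / n1 - j / n2))
    return d

def ks_p_value(d: float, n1: int, n2: int, terms: int = 100) -> float:
    """Asymptotic two-sample KS p-value (approximation; assumes large samples)."""
    n_eff = (n1 * n2) / (n1 + n2)
    lam = math.sqrt(n_eff) * d
    if lam < 0.2:
        # The series converges slowly here, and the true value is within ~1e-12 of 1.
        return 1.0
    total = sum((-1) ** (k - 1) * math.exp(-2.0 * k * k * lam * lam)
                for k in range(1, terms + 1))
    return min(1.0, max(0.0, 2.0 * total))

def is_accepted(sample_w, sample_v, p_min: float = 0.95) -> bool:
    """Accept the operator when the KS p-value meets the threshold."""
    d = ks_statistic(sample_w, sample_v)
    return ks_p_value(d, len(sample_w), len(sample_v)) >= p_min
```

Identical samples give D = 0 and a p-value of 1, while a systematic shift between the two samples drives D up and the p-value toward 0.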

Figure 3: Complete verification flow showing the client request, operator inference with compressed logprobs, verifier sampling and recomputation, and trust record updates.

Decode-spoofing resistance

A critical vulnerability in many verification schemes is speculative decoding attacks: a dishonest operator prefills with the expensive claimed model M but switches to a cheaper model M' during decode to minimize generation costs. Since generation typically accounts for the majority of compute in long sequences, this attack offers substantial economic benefits to malicious actors.

Prefill-only verification methods (including TOPLOC) cannot detect this attack because they only validate the initial context processing. Once validation passes, the operator is free to use any model for generation.

LOGIC specifically samples from decode positions, making this attack immediately detectable. If an operator prefills with M but decodes with M', then at sampled decode positions t:

- Operator's log-probs: ℓₜ,ⱼʷ = log p_M'(iⱼᵗ | context_t)

- Verifier's log-probs: ℓₜ,ⱼᵛ = log p_M(iⱼᵗ | context_t)

These come from different conditional distributions, producing systematic differences that the KS test reliably detects. This property is essential for permissionless networks where economic incentives naturally encourage decode-time spoofing.

Robust across hardware and configurations

Unlike methods that rely on exact activation matching, LOGIC is inherently robust to hardware non-determinism. Different GPUs, CUDA versions, and numerical precision variations all affect the exact values of intermediate activations but have only minimal impact on the shape of top-k log-probability distributions.

We validate this robustness by tracking both the KS p-value and Pearson correlation of log-probability pairs. Assuming small numeric drift ε with E[ε] = 0 and Var(ε) = σ², the correlation

r ≈ Var(ℓʷ) / √(Var(ℓʷ)(Var(ℓʷ) + σ²)) → 1

as σ² → 0. This dual-metric approach (KS + correlation) provides robust detection even in the presence of minor numerical variations.
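The correlation formula follows from modelling the verifier's value as ℓᵛ = ℓʷ + ε with ε independent of ℓʷ, so that Cov(ℓʷ, ℓᵛ) = Var(ℓʷ) and Var(ℓᵛ) = Var(ℓʷ) + σ². A small simulation can sanity-check it. The uniform spread of log-probabilities over [-10, 0], the Gaussian noise, and all function names below are toy assumptions, not measurements from the network.

```python
import math
import random

def pearson(xs, ys) -> float:
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def predicted_r(var_w: float, sigma: float) -> float:
    """Correlation predicted by the formula in the text."""
    return var_w / math.sqrt(var_w * (var_w + sigma ** 2))

def simulated_r(sigma: float, n: int = 2000, seed: int = 0) -> float:
    """Correlation between toy operator log-probs and a noisy verifier copy."""
    rng = random.Random(seed)
    # Toy assumption: operator log-probs spread uniformly over [-10, 0].
    operator = [rng.uniform(-10.0, 0.0) for _ in range(n)]
    # Verifier sees the same values plus zero-mean drift of std sigma
    # (a toy model, so values are not clipped to stay below zero).
    verifier = [x + rng.gauss(0.0, sigma) for x in operator]
    return pearson(operator, verifier)
```

With drift of a few thousandths of a nat, the simulated correlation stays extremely close to 1; only noise comparable to the spread of the log-probabilities themselves pulls it down noticeably.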

Integration with existing inference engines

One of LOGIC's key advantages is its zero-modification integration with existing inference engines. Because top-k token log-probabilities are already computed by most engines as part of sampling, LOGIC simply captures and compresses this existing data.

LOGIC works seamlessly with any OpenAI-compatible API that supports returning logprobs:

- vLLM: Direct integration through standard API

- SGLang: Native compatibility with existing workflows

This portability means LOGIC can be deployed across heterogeneous GPU networks without requiring operators to run custom-modified inference software. Inference clients simply return an additional compressed payload alongside their usual text responses.
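For illustration, the sketch below builds a chat-completions request that asks for top-k log-probabilities and parses a response of the standard OpenAI shape. The `logprobs` and `top_logprobs` request fields and the `choices[0].logprobs.content` response layout come from the OpenAI chat completions API; the function names, the model name, and the hand-written sample response are assumptions, and no network call is made. Note that this API returns token strings, so mapping them to the token IDs used in the compressed payload is a separate step not shown here.

```python
def build_request(model: str, prompt: str, k: int = 20,
                  max_tokens: int = 256) -> dict:
    """Build an OpenAI-style chat request body that returns top-k logprobs."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "logprobs": True,      # return log-probs for generated tokens
        "top_logprobs": k,     # number of alternatives per position
    }

def extract_top_logprobs(response: dict) -> list[dict[str, float]]:
    """Return one {token: logprob} dict per generated position."""
    content = response["choices"][0]["logprobs"]["content"]
    return [
        {alt["token"]: alt["logprob"] for alt in step["top_logprobs"]}
        for step in content
    ]

# Hand-written example response (illustrative values only).
SAMPLE_RESPONSE = {
    "choices": [{
        "message": {"role": "assistant", "content": "Hi!"},
        "logprobs": {"content": [
            {"token": "Hi", "logprob": -0.12, "top_logprobs": [
                {"token": "Hi", "logprob": -0.12},
                {"token": "Hello", "logprob": -2.31},
            ]},
            {"token": "!", "logprob": -0.05, "top_logprobs": [
                {"token": "!", "logprob": -0.05},
                {"token": ".", "logprob": -3.4},
            ]},
        ]},
    }]
}
```

The per-position dicts returned by `extract_top_logprobs` are the raw material that would then be quantized, delta-encoded, and compressed on the operator side.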

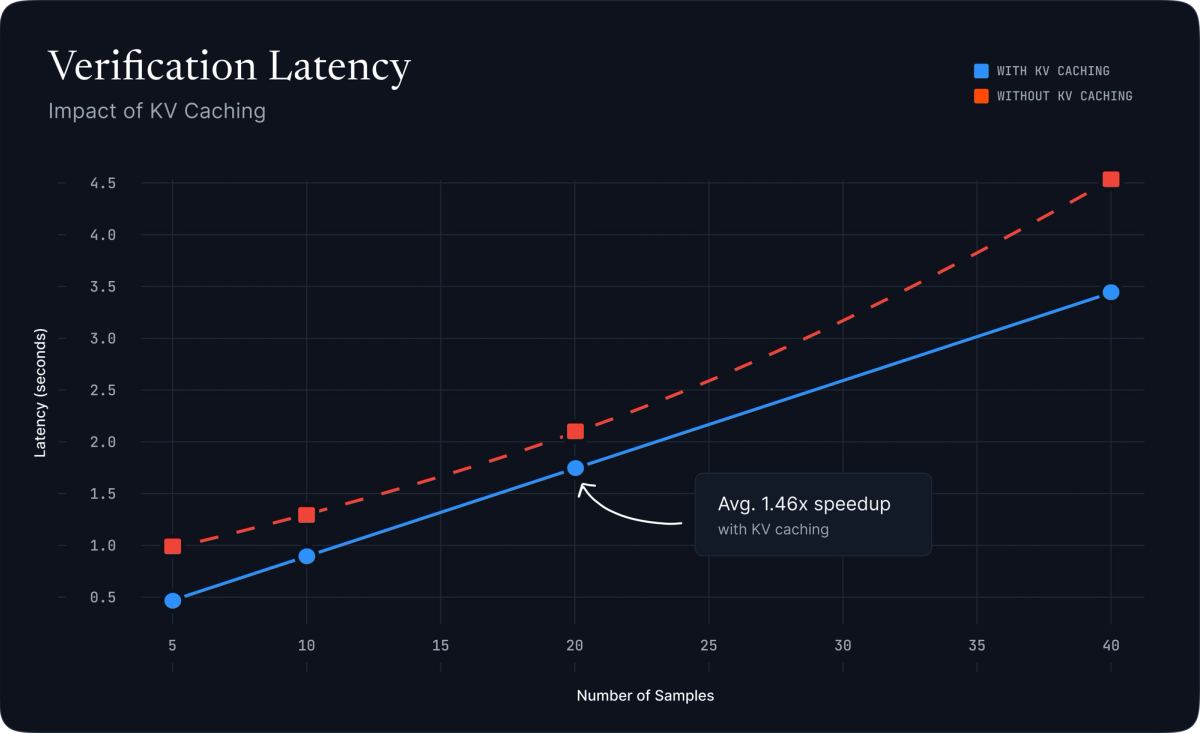

The verification server is equally straightforward: it runs standard single-token inference calls with KV caching enabled, so verification latency scales linearly with the number of sampled positions.

Figure 4: Verification latency vs. number of samples with and without KV caching. KV caching provides a 1.46× average speedup.

Experimental validation

We rigorously tested LOGIC on our deployed decentralized network using 10,000 diverse prompts spanning coding, reasoning, creative writing, and question answering.

Setup

- Base model: Llama-3.2-3B-Instruct (BF16)

- Dishonest baselines:

  - Llama-3.2-1B-Instruct (different model size)

  - Llama-3.2-3B-Instruct quantized to FP8

- Hardware: H100, RTX 4090

- Settings: k=10, samples S ∈ {5, 10, 20, 40}

Results

Honest operators are reliably accepted: Both H100 and RTX 4090 operators running the correct model yielded pₖₛ > 0.999, well above our threshold of 0.95.

Dishonest operators are reliably rejected:

- Operators running Llama-3.2-1B-Instruct produced pₖₛ ≈ 0.006

- Operators running FP8-quantized variants produced pₖₛ ≈ 0.150

Both dishonest strategies are clearly distinguishable from honest behavior.

Figure 5: Distribution of KS p-values across honest operators (H100, 4090) and dishonest operators (FP8 quantization, 1B model spoofing). Clear separation enables reliable detection.

Efficiency metrics

- Payload size: 250-313 KB per 10k tokens (with k=20)

- Verification latency: 0.98 seconds for 10 samples with KV caching

- Verification compute: Only S single-token forward passes (negligible vs. full generation)

- Detection accuracy: 100% separation between honest and dishonest operators in our experiments

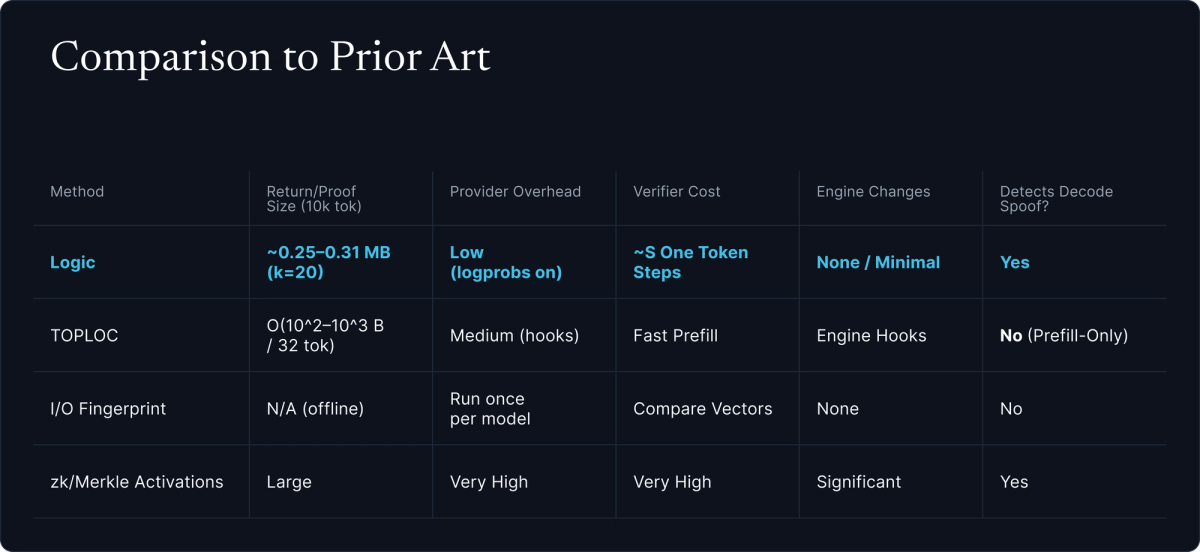

Comparison to other verification methods

The landscape of inference verification methods reflects different trade-offs between security guarantees, computational overhead, and practical deployability.

TOPLOC

TOPLOC compresses top-k last-layer activations with polynomial encoding, achieving impressive 1000× memory efficiency and 100× faster validation. However, in permissionless settings:

- Prefill-only validation leaves the system vulnerable to speculative decoding attacks

- GPU operators can honestly prefill with the claimed model but decode with cheaper alternatives

- In economically-motivated networks, this represents the primary attack vector

- Typically requires modifications to the underlying inference engine

I/O Fingerprint

Methods that hash intermediate activations offer strong detection capabilities but face several challenges:

- Require heavy instrumentation of inference engines with GPU-to-CPU data movement

- Sensitive to hardware non-determinism across different GPUs and CUDA versions

- Typically validate prefill only, missing decode-time spoofing attacks

- Limited native support in production inference frameworks

Zero-Knowledge Proofs

Cryptographic methods like zkSNARKs provide the strongest theoretical guarantees but remain orders of magnitude too slow and expensive for modern LLM inference. Even optimistic projections suggest ZK proof generation would cost 100× more than the original inference, making them impractical for production deployment.

LOGIC

LOGIC prioritizes practical deployability in permissionless environments:

- Decode-spoofing resistant: Samples from generation phase where economic incentives for cheating are highest

- Zero-modification integration: Works with all major inference engines out-of-the-box

- Hardware agnostic: Robust across GPU types, CUDA versions, and numerical precision

- Minimal overhead: ~250-313 KB per 10k tokens, negligible verification compute

- Statistical guarantees: Extremely close student models may require higher k, larger S, or multi-prompt challenges

For systems where participants have strong economic incentives to minimize costs, decode-time verification is not optional. LOGIC's design directly addresses this threat model while maintaining practical efficiency.

Challenges and future directions

While LOGIC provides a production-ready solution for decentralized inference verification, several areas warrant further development:

Distinguishing close student models

Extremely well-distilled student models that closely mirror the teacher's log-probability distributions may be harder to distinguish. However, if a student model produces statistically indistinguishable distributions on the evaluated slice, it is effectively equivalent in behavior for those contexts. Mitigation strategies include:

- Increasing k (more tokens per position)

- Increasing S (more sampled positions)

- Multi-prompt challenges with diverse contexts

- Adaptive sampling based on operator history

Quantization and compression variants

Different quantization schemes (INT8, FP8, W8A8, etc.) and KV-cache compression methods may require empirical tuning of thresholds. Our current experiments cover FP8 quantization, but the ecosystem of optimization techniques continues to evolve.

Adaptive sampling and reverification

Network patterns, CUDA version differences, GPU defects, or other environmental factors may occasionally cause legitimate numerical drift. An adaptive sampling strategy could:

- Increase sampling density for operators with borderline p-values

- Reverify suspicious samples before making final trust decisions

- Build longitudinal trust profiles rather than single-request verdicts

Extension to other modalities

While LOGIC currently focuses on text-based LLM inference, the underlying principle (verifying output distributions rather than exact values) could extend to other domains. Multi-modal language models and diffusion-based image generation both produce output distributions that could be similarly verified.

Moving forward

LOGIC provides a production-ready path to verifiable decentralized inference. By leveraging log-probability distributions that inference engines already compute, we achieve strong verification guarantees with minimal compute and network overhead, and no engine modifications.

The method's resistance to decode-time spoofing makes it particularly well-suited for permissionless networks where economic incentives naturally encourage cost-cutting attacks.

As AI inference increasingly moves toward decentralized architectures, practical verification methods like LOGIC become essential infrastructure. We hope this work helps standardize trust mechanisms in open inference networks and enables the next generation of permissionless compute protocols.
