Content

Explore our latest articles, guides, and insights on AI and machine learning.

Feb 21, 2026

LLM Evaluation Tools: The Complete Comparison Guide (2026)

Compare the 9 best LLM evaluation tools — DeepEval, RAGAS, Promptfoo, LangSmith, Braintrust, and more. Includes code examples, pricing, and a decision framework for picking the right tool.

Feb 21, 2026

LLM API Pricing Comparison 2026: 30+ Models, Every Provider

A comprehensive LLM API pricing comparison for 2026, covering 30+ models from OpenAI, Anthropic, Google, and Mistral, plus open-source inference providers (Groq, Together AI, Fireworks AI, inference.net) that can cut costs by 50–95%.

Feb 20, 2026

LLM Observability: A Complete Guide to Monitoring Production Deployments

Learn how to implement LLM observability with metrics, tracing, evals, and cost monitoring. A practical guide for engineers running LLMs in production.

Feb 20, 2026

vLLM Advanced: Building Custom Inference Pipelines at Scale (2026 Guide)

Go beyond LLM.generate() — master vLLM's advanced API: LLMEngine, AsyncLLMEngine, structured output, multi-GPU serving, and production tuning. Complete guide for 2026.

Feb 19, 2026

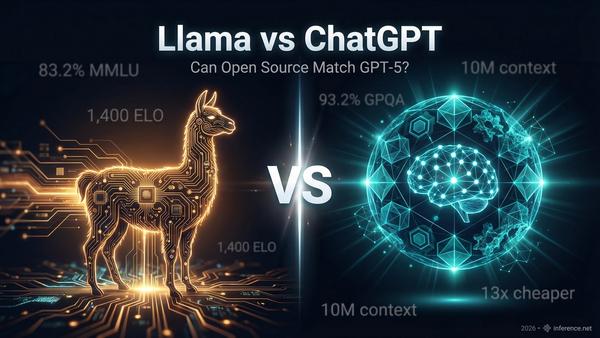

Llama vs ChatGPT: Can Open Source Match GPT-5? (2026)

Llama 4 Maverick compared with GPT-5 and GPT-5.2 on benchmarks, token pricing, privacy, and fine-tuning, with a concrete use-case decision framework. Based on February 2026 data.

Feb 9, 2026

Crawl4AI: The Complete Guide to LLM Web Scraping

Learn Crawl4AI from installation to production pipeline. This guide covers extraction strategies, LLM integration, Schematron structured output, and cost-optimized scraping at scale.

Feb 8, 2026

AI Readiness Assessment: 6-Dimension Framework & Scoring

Assess your organization's AI readiness across 6 dimensions with our scoring framework. Evaluate data, infrastructure, talent, and governance. Free guide.

Feb 7, 2026

AI Governance Maturity Model: 5 Stages + Assessment Tool

Assess and improve AI governance with a 5-stage maturity model aligned to NIST AI RMF, ISO 42001, and the EU AI Act. Includes a practical self-assessment.

Jan 31, 2026

vLLM Docker Deployment: Production-Ready Setup Guide (2026)

Complete guide to deploying vLLM in Docker containers. Covers multi-GPU setup, Docker Compose, Kubernetes, monitoring with Prometheus, and production tuning.

Jan 26, 2026

SGLang: The Complete Guide to High-Performance LLM Inference

Learn SGLang from installation to production. Covers RadixAttention architecture, vLLM benchmarks, Docker/Kubernetes deployment, and troubleshooting.