Aug 27, 2025

What Is Inference Latency & How Can You Optimize It?

Inference Research

Imagine a user waiting while your chatbot thinks for five seconds, or a mobile app pausing because a model call lags. That kind of delay can kill trust and reduce engagement. Inference latency sits at the heart of LLM inference optimization: response time, throughput, tail latency, and p99 spikes decide whether an experience feels instant or sluggish. This article presents focused, practical fixes, including profiling and latency measurement, model compression (quantization and pruning), smart batching, concurrency tuning, efficient serving, and edge inference, so your models run faster, cost less, and scale to deliver real-time user experiences.

To achieve these outcomes, AI inference APIs offer managed model serving, automatic scaling, reduced cold starts, minimized network delays, and built-in metrics for latency profiling, enabling you to speed up responses and reduce infrastructure costs without retraining your models.

What is Inference Latency and Why is it Important?

Inference latency is the time it takes for a trained machine learning model to receive an input and return a prediction. Measured in milliseconds or seconds, it captures the delay between a request and the model output.

Think of it as response time or inference time in a production system. Low latency enables real-time inference and fast user interaction, while high latency makes systems feel slow and unresponsive.

Why Latency Matters: User Experience, Safety, and Cost

Why care about inference latency? Because it shapes user experience, system performance, and operational cost. In a chat interface, slow response times break conversational flow and drive users away.

In a recommendation engine, delayed suggestions can reduce click-through and conversion rates. In an autonomous vehicle, even a few extra milliseconds to detect a pedestrian can put lives at risk.

Latency also affects costs. Slower model runtime may force you to run more instances to meet your service-level objectives, raising your cloud bill and hardware needs.

Core Factors That Drive Inference Latency

- Model complexity: Larger models with numerous layers and attention heads require more computational resources, which in turn increases inference time.

- Hardware accelerators: The choice of CPU, GPU, TPU, NPU, FPGA, VPU, or APU determines how fast the model runs. Specialized accelerators often give lower latency per request.

- Data size: High-resolution images, long audio clips, or extensive context windows require more tokens or pixels to process, resulting in longer inference times.

- Batch size: Processing multiple requests together raises throughput but increases the time to return any single prediction in the batch.

Hardware Accelerators and How They Affect Latency

CPUs are general-purpose and easy to deploy, but may yield higher latency for large models. GPUs parallelize matrix math and lower latency for many inference workloads. TPUs and NPUs specialize in neural network primitives, which can further reduce inference time.

FPGAs enable you to implement custom pipelines for low latency, albeit at the cost of increased development effort. VPUs and APUs target edge devices where both power and latency are critical. Choose the accelerator that matches model size, precision, and deployment constraints.

Batch Size and the Latency Versus Throughput Trade-Off

Would you rather receive one answer quickly or multiple answers per second? Increasing the batch size raises model throughput, often measured in inferences per second or frames per second, but it also increases the wait time for any single request.

Interactive systems prioritize low batch sizes or dynamic batching. For offline processing, prioritize a high batch size to maximize hardware utilization.
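The trade-off can be illustrated with a toy cost model. This is a minimal sketch, assuming each batch pays a fixed overhead plus a per-item cost; `fixed_overhead_ms` and `per_item_ms` are hypothetical numbers chosen for illustration, not measurements.

```python
def batch_latency_ms(batch_size, fixed_overhead_ms=8.0, per_item_ms=2.0):
    """Time to finish one batch under a simple linear cost model (assumed)."""
    return fixed_overhead_ms + per_item_ms * batch_size


def throughput_per_s(batch_size, fixed_overhead_ms=8.0, per_item_ms=2.0):
    """Completed requests per second when batches run back to back."""
    latency = batch_latency_ms(batch_size, fixed_overhead_ms, per_item_ms)
    return batch_size / latency * 1000.0


for size in (1, 8, 32):
    print(size, batch_latency_ms(size), round(throughput_per_s(size), 1))
```

Under these assumptions, larger batches raise throughput while every request in the batch waits longer for its result. Real hardware rarely follows a purely linear model, so measure rather than extrapolate.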

Inference Latency Versus Throughput: Different Metrics for Different Goals

Inference latency measures the time it takes to make a single prediction. Throughput measures how many predictions you can complete in a given time window.

Optimizing for throughput can negatively impact latency, and vice versa. Design choices depend on the use case: customer-facing APIs demand low latency and predictable tail latency, while analytics pipelines require high throughput.

How to Measure Latency: Units and Percentiles That Matter

Record latency in milliseconds for real-time workloads and in seconds for longer tasks. Track average latency and key percentiles, such as p50, p95, and p99, to capture both typical and tail behavior. Tail latency matters for user experience because a few slow requests can degrade perceived performance. Monitor cold start time, queuing delay, and inference compute time separately.
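As a rough illustration of why percentiles matter alongside the mean, the sketch below computes p50, p95, and p99 with the nearest-rank method. The latency samples are made up for the example; in practice they would come from your request logs or tracing system.

```python
import math


def percentile(samples_ms, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples_ms):
    return {
        "mean": sum(samples_ms) / len(samples_ms),
        "p50": percentile(samples_ms, 50),
        "p95": percentile(samples_ms, 95),
        "p99": percentile(samples_ms, 99),
    }


# Hypothetical data: 98 fast requests and 2 slow outliers.
latencies_ms = [20.0] * 98 + [900.0, 1200.0]
stats = summarize(latencies_ms)
print(stats)
```

With this data the median stays at the fast value while p99 lands on a slow outlier, which is the behavior a mean alone would hide.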

Techniques to Reduce Inference Latency in Practice

- Model choice: Select a model that meets accuracy needs with minimal computation.

- Quantization: Use lower precision, such as INT8 or mixed precision, to speed up runtime with a slight loss in accuracy.

- Pruning and distillation: Shrink or replace a large model with a smaller one that runs faster.

- Compilation and kernel tuning: Compile models with runtimes such as TensorRT, ONNX Runtime, or XLA to fuse operators and minimize overhead.

- Batching strategies: Use dynamic batching for bursts and fixed small batches for interactive flows.

- Pipelining and model parallelism: Split work across stages or devices to reduce per-request time.

- Early exit and adaptive computation: Return a result when confidence is sufficient to save cycles.

- Caching and memoization: Reuse previous predictions for repeated inputs.

- Reduce input size: Downsample images or shorten context windows where accuracy allows.

- Keep instances warm: Reduce cold start latency by maintaining hot model processes.

- Edge deployment: Move inference closer to the user on edge devices for lower network latency.
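The caching and memoization item above can be sketched with Python's standard `functools.lru_cache`. The `run_model` function is a hypothetical stand-in for a real model call, and exact-match caching like this only helps when identical inputs repeat.

```python
from functools import lru_cache

calls = {"model": 0}


def run_model(prompt: str) -> str:
    """Hypothetical stand-in for an expensive model call."""
    calls["model"] += 1
    return prompt.upper()


@lru_cache(maxsize=1024)
def cached_predict(prompt: str) -> str:
    # Identical prompts are served from memory instead of re-running the model.
    return run_model(prompt)


first = cached_predict("what is latency?")
second = cached_predict("what is latency?")  # cache hit, no model call
print(calls["model"], cached_predict.cache_info())
```

A production cache would usually also need an expiry policy and a key that includes the model version and sampling parameters.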

Practical Checklist for Production Latency Management

- Measure baseline latency and record p50, p95, and p99.

- Identify the slowest component: input preprocessing, model runtime, serialization, or network.

- Try quantization and model compilation in a staging environment.

- Test different batch sizes and dynamic batching policies.

- Use the accelerator that gives the best latency per dollar.

- Implement autoscaling tuned to latency SLOs rather than just CPU usage.

- Instrument and alert on tail latency so you catch regressions early.
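To find the slowest component, a per-stage timer is often enough as a first pass. The sketch below uses only the standard library; the three stages are trivial placeholders for real preprocessing, model, and serialization code.

```python
import time
from contextlib import contextmanager

timings_ms = {}


@contextmanager
def timed(stage):
    """Record the wall-clock duration of a stage in milliseconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings_ms[stage] = (time.perf_counter() - start) * 1000.0


with timed("preprocess"):
    tokens = "hello latency".split()
with timed("model"):
    output = [t.upper() for t in tokens]  # placeholder for the model call
with timed("serialize"):
    payload = ",".join(output)

slowest = max(timings_ms, key=timings_ms.get)
print(timings_ms, "slowest:", slowest)
```

In a real service you would typically emit these durations as trace spans or metrics rather than storing them in a module-level dictionary.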

Which Trade-Offs are You Willing to Make?

- Do you accept a slight accuracy loss for significant latency gains?

- Will you pay more to run enough instances to hit stringent tail latency targets?

Those decisions shape model selection, hardware choice, and deployment patterns. Think in terms of response time budgets and cost per query.


9 Optimization Techniques for LLM Inference

1. Model Compression: Shrink the Engine, Keep the Power

Reduce model size and compute by changing the network or training a smaller proxy. Techniques include quantisation, pruning, and knowledge distillation.

Quantisation

Lower weight and activation precision from 32-bit to 8-bit or lower, so tensors use less memory and arithmetic runs faster on integer or mixed precision units. This reduces memory bandwidth and cache pressure, which in turn speeds up token generation and lowers tail latency.
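A minimal sketch of the idea, assuming symmetric per-tensor INT8 quantization (one of several possible schemes). Real toolchains add calibration, per-channel scales, and fused integer kernels; this example only shows the mapping and the rounding error it introduces.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer codes."""
    return [q * scale for q in quantized]


weights = [0.50, -1.27, 0.03, 1.00]  # illustrative values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now fits in one byte instead of four, and the reconstruction error is bounded by half the scale.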

Pruning

Remove low-importance weights or neurons to reduce the number of operations performed during each forward pass. That cuts FLOPs and improves token throughput for real-time and batch workloads.

Knowledge Distillation

Train a compact student model to mimic a large teacher. The student requires fewer computational cycles per token, which improves response time and reduces inference cost.

Why Latency Improves

Smaller models reduce memory movement, cache misses, and compute time per token, which shortens response time and increases throughput.

2. Efficient Attention Mechanisms: Rework the Costly Inner Loop

Replace or optimise full attention so the cost grows more slowly with sequence length.

Flash Attention

Reorganises memory access and computation to cut memory bandwidth and intermediate memory use. It uses fused kernels to compute attention with less spilling to DRAM.

Sparse Attention

Limit pairwise attention to nearby or selected tokens, reducing the O(N²) work of full attention to near linear in sequence length in many cases.
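To make the scaling concrete, the sketch below counts attended token pairs for full attention and for a causal sliding window, which is one common sparse pattern. The window size is a hypothetical choice for illustration.

```python
def full_attention_pairs(n):
    """Every token attends to every token: n * n pairs."""
    return n * n


def sliding_window_pairs(n, window):
    """Each token attends to itself and up to `window` previous tokens."""
    return sum(min(i, window) + 1 for i in range(n))


n_tokens, window = 4096, 256
print(full_attention_pairs(n_tokens), sliding_window_pairs(n_tokens, window))
```

For a fixed window, the pair count grows roughly in proportion to sequence length rather than with its square.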

Multi-Head Attention

Split the representation into parallel heads, allowing the model to capture diverse patterns without a huge single matrix, thereby enabling parallel computation and improved hardware utilization.

Why Latency Improves

Reducing memory moves and the number of attention operations lowers per-token compute and reduces latency jitter for long context decoding.

3. Batching Strategies: Fill the GPU to Lower Per-Request Cost

Group multiple inference requests into one larger workload so that GPU throughput rises.

Static Batching

Prebuild batches by request class or similar length. It offers predictable throughput but can waste cycles on padding.

Dynamic Batching

Collect arriving requests briefly and automatically pack them into a batch. It adapts to traffic and reduces idle GPU time while balancing latency.
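The collect-then-pack behavior can be sketched as an offline simulation over arrival timestamps. This is an illustrative model, not how any particular serving framework implements it: a real server would use a timer instead of waiting for the next arrival, and `max_batch_size` and `max_wait_ms` are hypothetical tuning knobs.

```python
def form_batches(arrivals_ms, max_batch_size=4, max_wait_ms=10.0):
    """Group arrival timestamps into batches.

    A batch closes when it is full, or when a new request arrives more
    than max_wait_ms after the first request in the batch.
    """
    batches = []
    current = []
    for t in sorted(arrivals_ms):
        is_full = len(current) >= max_batch_size
        waited_too_long = bool(current) and t - current[0] > max_wait_ms
        if current and (is_full or waited_too_long):
            batches.append(current)
            current = []
        current.append(t)
    if current:
        batches.append(current)
    return batches


arrivals = [0.0, 2.0, 3.0, 30.0, 31.0, 32.0, 33.0, 34.0]
batches = form_batches(arrivals)
print(batches)
```

Raising `max_wait_ms` tends to produce fuller batches at the cost of extra queuing delay for the first request in each batch.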

Why Latency Improves

Batching amortizes GPU kernel launch costs and memory transfers across requests, increasing tokens per second. Under load, that shortens queues and lowers average response time, although an individual request may wait briefly for its batch to fill. Which matters more for your product: average speed or tail latency targets?

4. Key Value Caching: Don’t Recompute What You Already Did

Store the computed keys and values from past tokens to reuse on subsequent decoding steps.

Static KV Caching

Keep the cache for the fixed input so the decoder can reuse it across generations without recomputing the encoder outputs.

Dynamic KV Caching

Continually append new keys and values as tokens are generated, enabling streaming and long context decoding without re-running expensive layers.

Why Latency Improves

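Reusing cached keys and values means each decoding step computes projections only for the newest token instead of reprocessing the whole sequence, which significantly reduces the computation per token and speeds up token generation and response time.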
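The saving can be seen in a toy single-head decoder written in plain Python. The weight matrices and token vectors are arbitrary illustrative values, and the counter tracks how often key and value projections are computed with and without a cache.

```python
import math


def matvec(matrix, vec):
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def attend(query, keys, values):
    """Scaled dot-product attention for one query over all cached positions."""
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(len(query))
              for key in keys]
    peak = max(scores)
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total
            for d in range(dim)]


W_Q = [[1.0, 0.0], [0.0, 1.0]]
W_K = [[0.5, 0.1], [0.2, 0.4]]
W_V = [[1.0, -1.0], [0.3, 0.7]]
projection_calls = {"kv": 0}


def project_kv(x):
    projection_calls["kv"] += 1
    return matvec(W_K, x), matvec(W_V, x)


def decode_without_cache(tokens):
    outputs = []
    for step in range(1, len(tokens) + 1):
        pairs = [project_kv(x) for x in tokens[:step]]  # recomputed every step
        keys = [k for k, _ in pairs]
        values = [v for _, v in pairs]
        outputs.append(attend(matvec(W_Q, tokens[step - 1]), keys, values))
    return outputs


def decode_with_cache(tokens):
    keys, values, outputs = [], [], []
    for x in tokens:
        k, v = project_kv(x)  # only the newest token is projected
        keys.append(k)
        values.append(v)
        outputs.append(attend(matvec(W_Q, x), keys, values))
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
projection_calls["kv"] = 0
slow = decode_without_cache(tokens)
calls_without_cache = projection_calls["kv"]
projection_calls["kv"] = 0
fast = decode_with_cache(tokens)
calls_with_cache = projection_calls["kv"]
print(calls_without_cache, calls_with_cache)
```

Both paths produce the same outputs, but the uncached path repeats projections for every earlier token at every step, so its projection count grows quadratically with sequence length while the cached path grows linearly.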

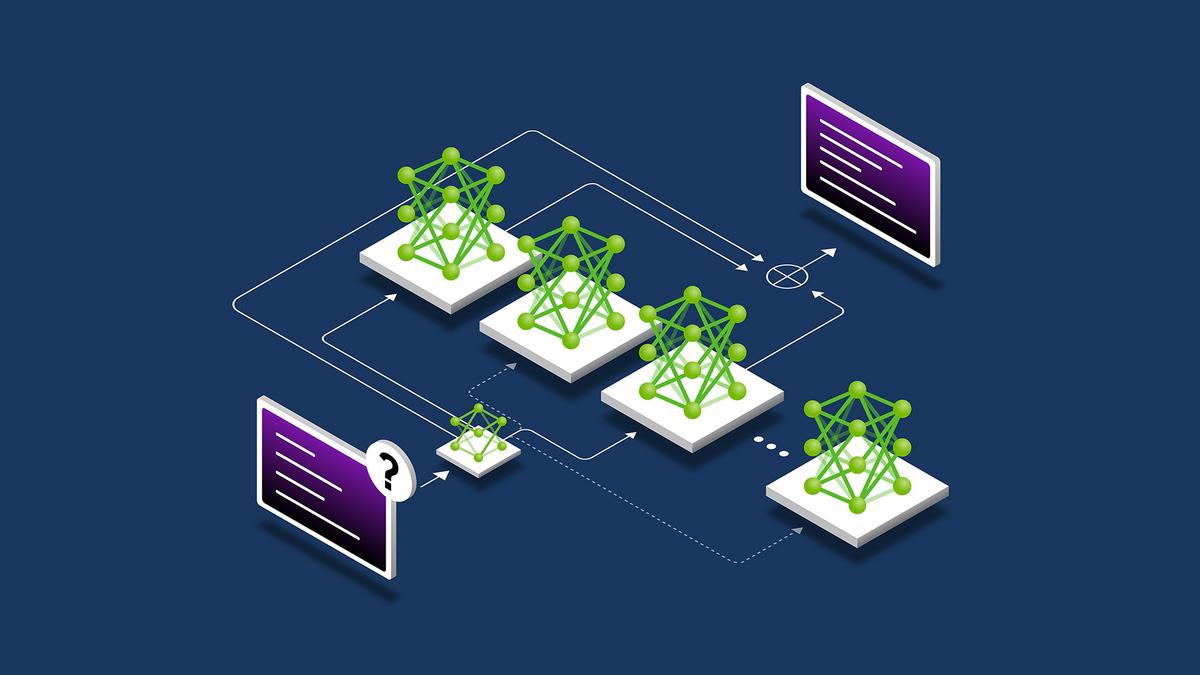

5. Distributed Computing and Parallelisation: Share the Load Across Hardware

Split model and computation across devices to scale memory and compute.

Model Parallelism

Slice model layers across GPUs so a huge model fits in aggregate memory.

Pipeline Parallelism

Assign consecutive layer blocks to different devices and stream micro batches through the pipeline to keep all devices busy.

Tensor Parallelism

Shard large matrices and run matrix math in parallel across GPUs to speed dense linear algebra.
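A minimal sketch of the sharding idea, assuming the weight matrix is split by output rows. The shards are processed sequentially here for clarity; on real hardware each shard would live on its own device and the partial results would be gathered over the interconnect.

```python
import math


def matvec(matrix, vec):
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def shard_rows(matrix, num_shards):
    """Split a matrix into contiguous row blocks, one per device."""
    size = math.ceil(len(matrix) / num_shards)
    return [matrix[i:i + size] for i in range(0, len(matrix), size)]


def sharded_matvec(matrix, vec, num_shards):
    # Each partial product depends only on its own shard, so the shards
    # could be computed in parallel on separate devices.
    partials = [matvec(shard, vec) for shard in shard_rows(matrix, num_shards)]
    return [y for part in partials for y in part]


weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
x = [1.0, -1.0]
full = matvec(weights, x)
sharded = sharded_matvec(weights, x, num_shards=2)
print(full, sharded)
```

The concatenated shard outputs match the unsharded result, which is what makes this kind of split safe to distribute.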

Why Latency Improves

Parallel work reduces per-token wall clock time and removes single-device memory limits, enabling higher throughput and lower latency for large models.

6. Hardware Acceleration: Use Chips Built for Matrix Math

Run models on processors designed for parallel tensor operations, such as GPUs and TPUs, and use features such as mixed precision and tensor cores.

Why Latency Improves

Specialized hardware increases raw FLOPs and memory bandwidth, reducing inference latency and improving steady throughput for both short and long requests.

7. Edge Computing: Push Inference Closer to the User

Run inference on devices near users or local servers to avoid long network trips.

Why Latency Improves

Reduced network RTT and fewer hops trim response time and jitter for applications that need immediate results, such as augmented reality or IoT control.

8. Optimized APIs and Protocols: Shrink the Network Overhead

Use efficient transport and data formats and reduce round trips to lower request processing time.

Protocols

Prefer a binary RPC framework such as gRPC, which runs over HTTP/2 and typically uses Protobuf encoding, over JSON over HTTP/1.1 when you need low latency and high throughput.

Formats

Use compact serialization formats, such as Protobuf or MsgPack, to reduce payload size and parsing costs.
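The size difference between text and binary encodings can be sketched with the standard library alone. Here `struct` packing of 32-bit floats stands in for a compact binary format; it is not Protobuf or MsgPack, and the 256-value embedding is made-up data.

```python
import json
import struct

# Hypothetical payload: a 256-dimensional embedding.
embedding = [0.125 * i for i in range(256)]

# Text encoding: JSON, as commonly sent over REST APIs.
json_payload = json.dumps({"embedding": embedding}).encode("utf-8")

# Binary encoding: little-endian 32-bit floats, 4 bytes per value.
binary_payload = struct.pack(f"<{len(embedding)}f", *embedding)
decoded = list(struct.unpack(f"<{len(embedding)}f", binary_payload))

print(len(json_payload), len(binary_payload))
```

The binary form has a fixed size per value and needs no number parsing on the receiving side, which is where much of the saving comes from. Note that 32-bit floats lose precision for values that are not exactly representable; the values used here happen to round-trip exactly.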

Connection Handling

Use persistent connections, such as HTTP keep-alive or connection pooling, so that each call avoids a fresh TCP and TLS handshake, and keep serialization overhead low.

Why Latency Improves

Less serialization, fewer round trips, and steadier network throughput cut end-to-end response time and reduce latency jitter.

9. Load Balancing: Prevent Single Server Saturation

Distribute incoming inference requests across a pool of servers and scale the pool to demand.

Strategies

Use least connections or latency-aware routing to reduce overloaded nodes and prevent queue buildup.
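A least-connections policy can be sketched in a few lines. The server names and connection counts are hypothetical, and ties are broken by name so the result is deterministic.

```python
def pick_least_connections(active):
    """Return the server with the fewest in-flight requests."""
    return min(active, key=lambda name: (active[name], name))


# Hypothetical in-flight request counts per server.
active = {"gpu-a": 3, "gpu-b": 1, "gpu-c": 2}

assignments = []
for _ in range(4):
    target = pick_least_connections(active)
    active[target] += 1  # the new request is now in flight on that server
    assignments.append(target)

print(assignments, active)
```

A latency-aware variant would replace the connection count with a recent latency estimate per server, but the selection logic stays the same.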

Autoscaling and Health Checks

Add capacity before latency spikes and remove failed instances promptly to keep request queuing low.
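One simple way to scale on latency is a proportional rule that compares observed p95 with a target. This is a sketch under the assumption that latency responds roughly in proportion to replica count, which often does not hold exactly; the target and the replica bounds are hypothetical.

```python
import math


def desired_replicas(current, observed_p95_ms, target_p95_ms,
                     min_replicas=1, max_replicas=20):
    """Scale the replica count by the ratio of observed to target latency."""
    raw = math.ceil(current * observed_p95_ms / target_p95_ms)
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(4, observed_p95_ms=450, target_p95_ms=300))   # scale up
print(desired_replicas(4, observed_p95_ms=150, target_p95_ms=300))   # scale down
print(desired_replicas(4, observed_p95_ms=3000, target_p95_ms=300))  # capped
```

Setting the target below the actual SLO leaves headroom so that new capacity arrives before users notice a spike.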

Why Latency Improves

Smoother request distribution prevents long queues and high tail latency, maintaining consistent response times under load.


Start Building with $10 in Free API Credits Today!

Inference delivers OpenAI-compatible serverless inference APIs, allowing you to call popular open-source LLMs using the same request shape you already know. The API removes server management and autoscaling headaches.

You send a prompt, the model generates tokens, and you get a response. Expect standard features like streaming output, temperature, and top p controls, and token limits matched to model context windows. Do you want one API for local tests and production traffic? This fits that need.

Why Performance and Cost Matter: Throughput, Response Time, and Cost per Token

Inference focuses on high throughput while holding down response time and cost per token. At the level of a single request, these goals reinforce each other: faster token generation reduces response time and lowers the GPU time consumed per request, which drives down costs.

Throughput per dollar and cost efficiency are crucial when scaling to thousands of concurrent requests or handling heavy batch jobs. Watch p95 and p99 latency and also track mean response time and token throughput to measure real impact.

Batch Processing for Async AI Workloads: How It Scales

For large async jobs, Inference offers specialized batch processing that groups requests for efficient GPU utilization. Dynamic batching and micro batching improve GPU occupancy and increase tokens per second.

Queues and worker pools accept asynchronous payloads and process them in optimized batches to reduce tail latency and increase throughput. This reduces RPC overhead and avoids tiny kernels that cause underutilization.

Document Extraction for RAG: Fast Retrieval and Low Retrieval Latency

Document extraction integrates embeddings, vector stores, and chunking, all tuned for retrieval-augmented generation. Embedding latency and retrieval latency get special attention because they affect the overall response time in a RAG pipeline.

Inference optimizes tokenization and chunk size to balance retrieval cost and downstream model latency. It also supports streaming and chunked extraction, allowing you to start generation while the rest of the document processes.

Jump Start Offer: $10 Free API Credits and Quick Onboarding

Start building quickly with $10 in free API credits. Use the credits to test models, measure token latency, and compare throughput across configurations. Try streaming output to measure token generation latency and test batch processing with representative payloads to capture real tail latency numbers.

Reducing Inference Latency: Model-Level Tricks You Can Use

Quantization to FP16 or INT8 reduces compute and memory bandwidth, thereby speeding up token generation. Pruning removes rarely used weights to reduce model size and memory footprint. Operator fusion and kernel fusion reduce kernel launch overhead.

Compiling models with TensorRT or ONNX Runtime improves inference throughput and shrinks latency variance. Try mixed precision first, measure the p95 and p99 latencies, then move to lower precision if the accuracy remains acceptable.

Serving Strategies That Cut Response Time and Tail Latency

Use dynamic batching to group concurrent requests while maintaining low token latency for single-user queries. Implement micro-batching to reduce padding waste and improve GPU utilization. Use warm pools of preloaded models to avoid cold start latency.

Use streaming decoding so that clients see tokens as they arrive, instead of waiting for a full response. Which matters more to your users, p50 or p99 latency? Tune the batching accordingly.
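The benefit of streaming shows up as a gap between time to first token and total generation time. The sketch below uses a simulated clock instead of real sleeps; the prefill and per-token costs are hypothetical, and the generator is a stand-in for a streaming model API.

```python
def generate_tokens(prompt, clock, prefill_ms=120.0, per_token_ms=25.0):
    """Yield tokens one at a time while advancing a simulated clock."""
    clock["now_ms"] += prefill_ms  # prompt processing before the first token
    for word in prompt.split():
        clock["now_ms"] += per_token_ms
        yield word.upper()  # placeholder for a generated token


clock = {"now_ms": 0.0}
first_token_ms = None
received = []
for token in generate_tokens("stream tokens as they arrive", clock):
    if first_token_ms is None:
        first_token_ms = clock["now_ms"]  # what the user perceives as "started"
    received.append(token)
total_ms = clock["now_ms"]

print(first_token_ms, total_ms)
```

With streaming, the user sees output at `first_token_ms`; without it, nothing appears until `total_ms`.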

Decoding Choices Affect Token Generation Latency

Greedy decoding is the cheapest option per token, while top-k and top-p sampling add a small sampling cost in exchange for output diversity. Beam search increases compute and memory work per token and raises response time.

Using a smaller beam width reduces latency and improves tokens per second. Parallelize sampling when possible and prefer fast decoding kernels offered by optimized runtimes.

Hardware and System Optimizations That Reduce Network and Compute Delays

Choose GPUs with high memory bandwidth and NVLink to cut inter-GPU latency for sharded models. Use zero-copy transfers and pinned memory to reduce CPU-GPU transfer overhead. Enable GPUDirect for network paths where available.

Use colocated retrieval and model serving to cut network latency between embedding stores and the model. Monitor memory bandwidth and PCIe bottlenecks, as they directly impact token throughput.

Scaling and Architecture: Serverless Behavior, Cold Starts, and Warm Starts

Serverless simplifies scaling but introduces cold start risk. Mitigate cold starts by keeping warm pools or by offering fast model preload options. Use autoscaling with latency-aware metrics so that scale events respond before the p95 or p99 latency threshold is reached.

For stateful pipelines, use sticky sessions or token-level streaming to maintain throughput without high serialization overhead.

Model Parallelism, Sharding, and Offloading Techniques

Model sharding spreads weights across devices so that large models and long context windows fit in memory. Pipeline parallelism keeps multiple devices busy, but it also increases cross-device communication, which can lead to higher tail latency.

Offload less active layers to CPU memory to save GPU RAM but measure the added memory swap latency. Pick sharding strategies that minimize cross-node traffic for common request patterns.

Observability: Track Latency Metrics and Where Time Goes

Measure response time, token generation time, tokenization overhead, embedding latency, retrieval latency, serialization time, and network RTT. Track p50, p95, and p99, and watch for tail latency spikes.

Use flame graphs and trace spans to identify hot paths, such as tokenization or RPC serialization, that add milliseconds per request. Log GPU kernel times and queue wait times to detect throughput limits.

Cost Efficiency: Throughput per Dollar and Practical Trade-offs

Optimize for throughput per dollar by tuning batch size, precision, and decoding strategy. Larger batches improve tokens per second but can raise latency for single requests.

Lower precision reduces cost but can slightly change output quality. Measure cost per token under realistic workloads and use those numbers to choose model size and serving configs.
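Cost per token follows directly from hardware price and sustained throughput. The sketch below shows the arithmetic; the hourly price and tokens-per-second figures are hypothetical inputs, not benchmarks.

```python
def cost_per_million_tokens(gpu_price_per_hour, tokens_per_second):
    """Dollar cost to generate one million tokens at a sustained rate."""
    tokens_per_hour = tokens_per_second * 3600
    return gpu_price_per_hour / tokens_per_hour * 1_000_000


baseline = cost_per_million_tokens(gpu_price_per_hour=2.0, tokens_per_second=1000)
tuned = cost_per_million_tokens(gpu_price_per_hour=2.0, tokens_per_second=2500)
print(round(baseline, 4), round(tuned, 4))
```

Because price is fixed per hour, any change that raises sustained tokens per second lowers cost per token by the same factor, provided latency targets are still met.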

Practical Checklist to Reduce Latency in Production

- Measure real user patterns and p99 latency for realistic payloads.

- Warm models and maintain warm pools to avoid cold start penalties.

- Use mixed precision and quantization when quality allows.

- Enable dynamic batching with a sensible max batch size.

- Stream tokens to clients to lower perceived response time.

- Co-locate retrieval and model compute to cut network RTT.

- Monitor GPU memory bandwidth and queue times to ensure optimal performance.

- Compile critical models with optimized runtimes, such as TensorRT or ONNX Runtime.

- Use zero-copy transfers and pinned memory to reduce CPU-GPU overhead.

