May 21, 2025

17 Best APIs for Machine Learning to Supercharge Your AI Projects

Inference Research

Imagine you’ve just built and deployed a machine learning model that accurately predicts customer churn. Nice! But a few weeks later, you notice that its predictions are no longer reliable. What went wrong? Most likely, the model suffers from concept drift, which occurs when the statistical properties of the target variable change over time. APIs let you monitor ML models in production and gather the new data needed to improve your model’s performance, so you can deploy the updated version as quickly as possible. This article explores how you can use APIs for machine learning to integrate powerful ML capabilities into your applications efficiently, so that you can deliver smarter, faster, and more scalable AI-driven products.

One way to make this process easier is to use AI inference APIs. These tools let you integrate machine learning into your applications quickly, so you can build more intelligent software.

What Are Machine Learning APIs and How Do They Work?

A machine learning application programming interface (API) is a service or interface that allows developers to access machine learning models or functionality over the web or within a software environment. APIs have been around for a long time, providing a standardized way for two software applications to communicate.

API Functionality in Data Science

Applied to data science, an API enables communication between a web page or app and your AI application. The API exposes specific, user-defined URL endpoints that clients use to send requests containing data and to receive responses. These endpoints are decoupled from the model behind them: the interface stays the same when you update your algorithm.
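To make the idea of a stable endpoint concrete, here is a minimal sketch that uses only Python's standard library (`http.server`) instead of a production web framework. The `/predict` route, the `{"features": [...]}` payload, and the toy `model_v1` function are hypothetical; the point is that replacing `MODEL` with a retrained model leaves the URL and request format untouched for every client.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def model_v1(features):
    """Hypothetical toy model: averages the input features."""
    return {"score": sum(features) / len(features)}


# Deploying a retrained model means changing only this assignment;
# the /predict URL and the JSON contract stay the same for clients.
MODEL = model_v1


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404, "Unknown endpoint")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            result = MODEL(payload["features"])
        except (ValueError, KeyError, TypeError, ZeroDivisionError):
            self.send_error(400, 'Expected JSON like {"features": [1.0, 2.0]}')
            return
        body = json.dumps(result).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for the demo


def start_server(port=8000):
    """Serve /predict on localhost in a background thread; port=0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), PredictHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def post_json(url, payload):
    """Minimal client: POST a JSON payload and decode the JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return json.loads(response.read())
```

After `start_server(8000)`, calling `post_json("http://127.0.0.1:8000/predict", {"features": [1.0, 3.0]})` should return `{"score": 2.0}`. The Python documentation recommends against `http.server` for production, so a real deployment would use a dedicated framework and server; the request/response contract is what carries over.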

Benefits of Standardized ML APIs

Because the endpoints stay fixed, updating the running application requires minimal work. Machine learning APIs also provide standardized interfaces to powerful pre-trained models and algorithms. This standardization greatly reduces integration effort, whatever type of model you require or application you have.

Applications can also easily establish uniform communication with different parts of software systems.

How Do Machine Learning APIs Work?

Machine learning APIs typically operate over the internet, where developers send requests to an API endpoint with data that needs processing. The API then processes this data using its machine learning models and returns a response with the desired results. This process abstracts the complexity of machine learning, allowing developers to leverage AI without delving into the details of model training or data pre-processing.

For example, in an image recognition task, a developer can send an image file to an API endpoint designed for image analysis. The API uses pre-trained models to identify objects or features within the image and returns the results in a structured format, such as JSON or XML.
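A client usually turns that structured response into something the application can act on. The sketch below assumes a hypothetical JSON schema with a `labels` array of `name`/`confidence` pairs; real image-analysis APIs each define their own field names, so treat this as an illustration of the parsing step rather than any provider's format.

```python
import json

# Sample body from a hypothetical image-analysis endpoint (field names are assumptions).
SAMPLE_RESPONSE = """
{"labels": [
  {"name": "cat",  "confidence": 0.97},
  {"name": "sofa", "confidence": 0.64},
  {"name": "lamp", "confidence": 0.12}
]}
"""


def top_labels(response_text, min_confidence=0.5):
    """Return label names at or above min_confidence, most confident first."""
    data = json.loads(response_text)
    labels = [label for label in data.get("labels", [])
              if label["confidence"] >= min_confidence]
    labels.sort(key=lambda label: label["confidence"], reverse=True)
    return [label["name"] for label in labels]


print(top_labels(SAMPLE_RESPONSE))  # ['cat', 'sofa']
```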

Streamlined Sentiment Analysis via API

Similarly, in sentiment analysis, a developer can send a text snippet to an API that returns a sentiment score indicating whether the text is positive, negative, or neutral. These APIs handle the heavy lifting of data processing and model inference, so developers can focus on the application side and on integrating the results.
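Turning a score into a label is often left to the application. A minimal sketch, assuming the API returns a score between -1.0 and 1.0 and that scores within ±0.25 of zero count as neutral (both are assumptions; providers differ in scale and guidance):

```python
def sentiment_label(score: float, neutral_band: float = 0.25) -> str:
    """Map a score in [-1.0, 1.0] to a label; the band width is an assumption."""
    if not -1.0 <= score <= 1.0:
        raise ValueError(f"score out of range: {score}")
    if score > neutral_band:
        return "positive"
    if score < -neutral_band:
        return "negative"
    return "neutral"


for s in (0.8, 0.1, -0.6):
    print(s, sentiment_label(s))
# 0.8 positive
# 0.1 neutral
# -0.6 negative
```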

The Benefits of Machine Learning APIs

You get easy access to powerful ML functionality without building, training, or deploying models yourself, so you don’t have to spend resources on data collection, model training, and optimization. The standardized interfaces make integration easy, and reusing existing models significantly cuts the time and effort needed to build AI-driven solutions.

Cloud-Based Scalability and Up-to-Date Models

These APIs often run on cloud-based platforms that scale with demand, so applications can handle varying workloads without performance degradation. Machine learning APIs also offer access to state-of-the-art models and techniques that their providers continuously update and maintain.

Customization and Simplified Integration

Applications benefit from the latest advancements in AI without requiring in-depth knowledge or manual updates. Many ML APIs also allow customization: developers can fine-tune models to suit specific needs or datasets. In addition, ML APIs provide uniform endpoints for interacting with machine learning models.

Modularity and Efficient Access

This simplifies the integration of AI functionality into existing systems. You can also connect independent components to the models without deep integration. Such modularity lets diverse applications and users access and use the AI models efficiently.


17 Best APIs for Machine Learning

1. Inference API

Even though Inference isn’t a traditional off-the-shelf API like the others on this list, it functions as an API layer for deploying and scaling open-source LLMs. Inference offers developers a lightning-fast, serverless API layer with full OpenAI API compatibility for running top open-source LLMs such as:

- Mistral

- LLaMA

- Mixtral

- Many more

High-Speed, Versatile Inference

This makes it incredibly easy to swap from closed to open models while keeping your existing codebase intact. Its performance-to-cost ratio sets Inference apart: It delivers some of the fastest inference speeds on the market while maintaining very low pricing. In addition to real-time inference, it supports batch processing for asynchronous workloads, which is ideal for large-scale data pipelines or automation.
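Because the API follows OpenAI's request format, switching providers mostly means changing the base URL, API key, and model name. The sketch below builds, but does not send, a standard `/chat/completions` request with Python's `urllib`. The open-model base URL and model ID are placeholders, not Inference's documented values; check the provider's documentation for the real ones.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"
OPEN_MODEL_BASE_URL = "https://open-models.example.com/v1"  # placeholder, not a real endpoint


def build_chat_request(base_url, api_key, model, prompt):
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant's text out of an OpenAI-format response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


prompt = "Summarize this support ticket in one sentence."
openai_request = build_chat_request(OPENAI_BASE_URL, "OPENAI_KEY", "gpt-4o", prompt)
# Only the base URL, key, and model ID change; "your-open-model-id" is hypothetical.
open_model_request = build_chat_request(
    OPEN_MODEL_BASE_URL, "PROVIDER_KEY", "your-open-model-id", prompt
)
```

To send either request, pass it to `urllib.request.urlopen` and feed the response body to `extract_reply`. If you use the official `openai` Python package instead, the equivalent change is passing `base_url=` when you construct the client.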

Accessible Entry to Scalable AI

Another standout feature is its document extraction API, tailored for Retrieval-Augmented Generation (RAG). This enables developers to preprocess and embed documents efficiently for high-performance knowledge-based chatbots. New users can experiment with $10 in free credits, making it an accessible entry point for scalable, production-ready AI integration.
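Preprocessing for RAG usually includes splitting documents into overlapping chunks before embedding them. The function below is a generic, word-based sketch of that step, not a description of how Inference's document extraction API works; the chunk size and overlap values are illustrative, and many pipelines count tokens rather than words.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into overlapping windows of words, ready for embedding.

    chunk_size and overlap are counted in words (an assumption for this sketch).
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


print(chunk_text(" ".join(f"w{i}" for i in range(10)), chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

The overlap keeps sentences that straddle a boundary retrievable from either neighboring chunk, at the cost of embedding some words twice.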

💡 Ideal for developers who want complete control over open-source LLMs without worrying about hosting, tuning, or scaling.

Provider

- Inference API

Standout Features

- Low latency

- Real-time inference

2. OpenAI API

The OpenAI API is one of the most popular machine learning APIs available. It gives you access to state-of-the-art large language models like GPT-4o, along with embedding, image generation, text-to-speech, speech-to-text, and moderation models, on a pay-per-use basis. With the OpenAI API, you can create your own high-quality AI application and even build a startup around it.

Addressing OpenAI API Limitations

Using the OpenAI API has two potential drawbacks. First, sending data to a third-party service raises privacy concerns. Second, usage costs can add up quickly, especially if you are building a company around the API, and eat into the margins you need for growth. This is where other APIs come into play.
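To see how quickly costs add up, multiply token counts by per-token prices. The prices in this sketch ($2.50 and $10.00 per million input and output tokens) and the traffic figures are illustrative assumptions, not quoted rates; substitute your provider's current pricing.

```python
def monthly_cost_usd(requests_per_day, input_tokens, output_tokens,
                     input_price_per_m, output_price_per_m, days=30):
    """Estimate monthly spend for an API priced per million tokens."""
    cost_per_request = (input_tokens * input_price_per_m
                        + output_tokens * output_price_per_m) / 1_000_000
    return cost_per_request * requests_per_day * days


# Illustrative figures: 10,000 requests/day, 500 input + 200 output tokens each.
cost = monthly_cost_usd(10_000, 500, 200, input_price_per_m=2.50, output_price_per_m=10.00)
print(f"${cost:,.2f} per month")  # $975.00 per month
```

Because the total scales linearly with traffic, a tenfold increase in daily requests yields a tenfold bill, which is why per-token price differences between providers matter at scale.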

Provider

- OpenAI

Standout Features

- Access to advanced AI capabilities

3. Hugging Face API

Machine learning engineers and researchers widely use the Hugging Face API. It allows you to download datasets, models, and other Hub repositories, including Spaces. It is fast and provides many options for customizing dataset downloads.

Simplified Model Management and Deployment

You can create Hugging Face Hub repositories, save and share your models, build and publish machine learning web applications, and deploy model endpoints with GPU support. Most people use it together with the Transformers library, which makes it easy to fine-tune large machine learning models with just a few lines of code.

Provider

- Hugging Face

Standout Features

- Customization

- Fast performance

4. ElevenLabs API

If you are looking for a cutting-edge solution for sound generation, speech-to-text, and speech-to-speech in AI applications, the ElevenLabs API is a strong option. It offers natural-sounding voices that can bring your product to life. The API includes:

- Voice cloning

- Streaming

- Asynchronous capabilities

- Support for 29 languages and over 100 accents

You can even generate sound effects from text. Instead of training your own model and striving for perfection, you can skip that step and integrate the ElevenLabs API.

Provider

- ElevenLabs

Standout Features

- Voice cloning

- High-quality sound generation

5. Google Cloud AI/ML APIs

Google Cloud offers an extensive suite of AI and machine learning products for a variety of needs. For example, the Vertex AI Platform offers an all-inclusive toolset, including an API, for creating, training, testing, monitoring, tuning, and deploying AI models, supporting developers throughout the entire machine learning lifecycle.

Google Cloud includes Vertex AI Notebooks for rapid prototyping and secure experimentation. Google Cloud’s AI/ML suite also comprises specialized APIs: The Video Intelligence API can analyze videos to identify more than 20,000 objects, places, and actions.

Developers can use the Dialogflow API to build natural conversational experiences into their applications. Speech-to-Text API provides high-accuracy speech recognition. Text-to-Speech API offers natural-sounding speech synthesis. Some other AI services that Google Cloud Platform offers include the following:

- Vision AI provides pre-trained models for image recognition and analysis.

- Natural Language AI offers pre-trained models for sentiment analysis and entity recognition.

- Translation AI provides pre-trained models for language translation.

- Document AI offers pre-trained models for data extraction and document processing.

Google Cloud provides a unified platform where everything works out of the box. Regardless of your use case, you can find a solution that fits. You also benefit from a pay-as-you-go pricing model that requires no upfront investment.

Provider

- Google Cloud

Standout Features

- Comprehensive documentation

- Large variety of APIs

6. IBM Watson Discovery API

IBM Watson Discovery API has sophisticated data extraction and pattern analysis capabilities, making it a valuable tool for data scientists. It can also efficiently process unstructured data. Businesses use it to analyze large volumes of text and uncover hidden patterns and insights.

IBM Watson Discovery uses custom NLP models and LLMs to deliver powerful natural language processing. This makes the API well suited to sophisticated text analysis, such as customer service applications that interpret and respond to customer queries. Researchers and development teams use this API to extract valuable information from extensive datasets.

Provider

- IBM

Standout Features

- Custom NLP model capabilities

7. Amazon ML

Amazon ML is one of the premier machine learning (ML) APIs available today. With a comprehensive set of artificial intelligence (AI) and ML services, infrastructure, and implementation resources, Amazon ML empowers users to innovate faster and gain deeper insights from their data while lowering costs. Here are some notable general features of Amazon ML:

- Proven Leadership: Amazon ML is built by Amazon, which has over 20 years of experience and a track record of solving real-world business problems across various sectors.

- Generative AI: Amazon ML allows users to reinvent customer experiences with generative AI. Whether building new applications or utilizing services with built-in generative AI, Amazon ML offers a cost-effective cloud infrastructure for generative AI.

- Flexible Customization: Amazon ML can be tailored to your business needs. It provides ready-made, purpose-built AI services that address common business problems, as well as the ability to use your own models with AWS ML services.

- ML Adoption Support: Amazon ML supports users at every stage of their ML adoption journey. From kickstarting proof of concepts with AWS experts to upskilling teams through training and hands-on tutorials, Amazon ML provides the necessary support for successful ML adoption.

Provider

- Amazon

Standout Features

- Generative AI capabilities

- Proven leadership

8. Automation Anywhere

Automation Anywhere offers one of the best machine learning APIs on the market. Its platform, Automation Success, is infused with generative AI that can transform any team, system, or process. This review covers the general features of Automation Anywhere's machine learning APIs.

General Features

- Automation Anywhere’s machine learning APIs are infused across its Automation Success platform. It offers generative AI to interpret and create text, images, and content.

- It includes intelligent document processing, process discovery, and resiliency features.

- Automation Co-Pilot, their AI-powered assistant, is embedded in every application and can be used by business users and automators alike. It enables natural language requests for automations, personalized content generation, and document summarization.

- Automation Anywhere’s machine learning APIs can be used for a variety of use cases, including:

  - Customer complaint resolution

  - Customer inquiry sentiment analysis

  - Order lookup

  - Email triage for CPG (consumer packaged goods)

  - Patient message triage

  - After-visit summary for patients

  - Medical summary for practitioners

  - AML (anti-money laundering) transaction monitoring

  - Invoice processing

  - Many more

Provider

- Automation Anywhere

Standout Features

- Generative AI capabilities

- Process automation

9. Geneea NLP API

Geneea NLP API is one of the best machine learning APIs available today, with a range of features that set it apart from its competitors. This review examines its general features.

General Features

Geneea NLP API offers four types of public API:

- General API (G3): General purpose NLP, i.e., detecting language, names, sentiment, etc. in documents

- Media API V1 (deprecated) (M1): Semantic tagging of newspaper articles

- Media API V2 (M2): Newest version of the Media API; offers entities, suggestions of photos and related articles, and more

- VoC API (C1): Analyzing customer feedback, or routing and analysis of customer support

Intent Detection

- Detecting intent in chat turns

- Primarily aimed at handling common L1 (first-level support) questions

Tailored Solutions and Developer Support

One of Geneea NLP API's strengths is its ability to customize these models to suit your needs, whether handling custom product names, categories, or sentiment. Geneea NLP API offers a range of SDKs and a Knowledge Base to help developers integrate the API into their applications seamlessly.

Provider

- Geneea

Standout Features

- Customizable NLP models

10. BigML

BigML is a comprehensive machine learning platform offering various features to help businesses make data-driven decisions. Its API is one of the best in the market, providing immediate access to machine learning models that can be easily interpreted and exported.

General Features

BigML’s API offers a range of features that make it stand out from other machine learning APIs. These include:

- Comprehensive platform: BigML’s platform offers a range of tools and features to help businesses make the most of their data. These include data visualization, data preprocessing, and machine learning models.

- Immediate access: With BigML’s API, businesses can immediately access a range of machine learning models without needing extensive training or expertise.

- Interpretable and exportable models: BigML’s models are designed to be easily interpretable, making it easy for businesses to understand how the model makes predictions. Models can also be easily exported to other platforms, making it easy to integrate with existing workflows.

- Collaboration: BigML’s platform facilitates collaboration between team members, making it easy to share data and models.

- Programmable & repeatable: BigML’s API is programmable, allowing businesses to automate their machine learning workflows. Those workflows are also repeatable, which makes it easy to test and refine models over time.

- Automation: BigML’s platform offers a range of automation features, including automatic model selection and hyperparameter tuning.

- Flexible deployments: BigML’s models can be deployed in various environments, including on-premise, in the cloud, or on mobile devices.

- Security & privacy: BigML takes security and privacy seriously, offering a range of features to ensure that data is kept safe and secure.

Provider

- BigML

Standout Features

- Immediate access to interpretable machine learning models

11. NLP Cloud

NLP Cloud is a specialized ML API focused exclusively on natural language processing tasks. It provides high-performance, pre-trained models for:

- Sentiment analysis

- Entity recognition

- Summarization

- Many more tasks

This dedicated NLP API lets developers integrate advanced NLP capabilities into their applications without managing the complexities of model training.

Key Features

- Specialization: Optimized solely for NLP, ensuring high performance for language-related tasks.

- Ease of Use: It offers straightforward API integration and comprehensive documentation.

- Customization: Supports fine-tuning to adapt models to specific requirements.

Provider

- NLP Cloud

Standout Features

- Quick integration

- Customizable NLP models

12. Amazon SageMaker API

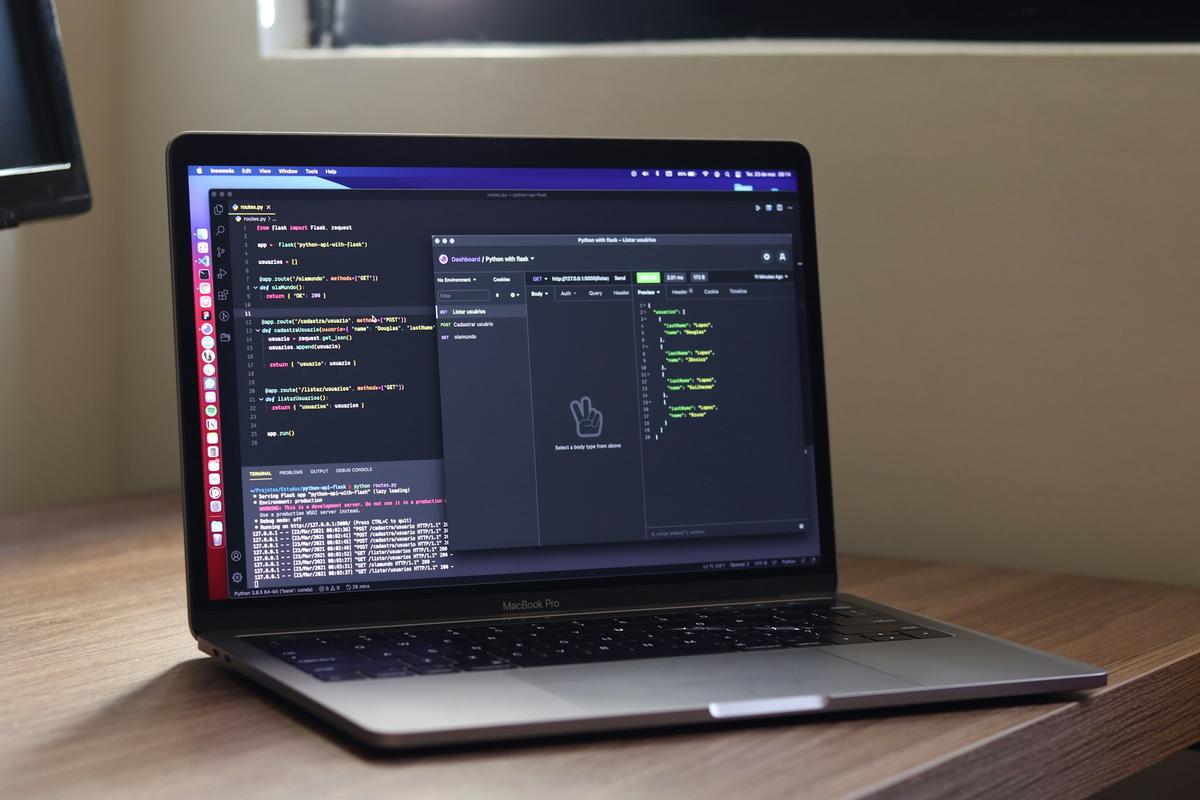

Amazon SageMaker is a fully managed service that enables developers and data scientists to quickly build, train, and deploy machine learning models, supporting the entire ML lifecycle within the AWS ecosystem. Developers can also place a lightweight web service, such as a Flask app, in front of a SageMaker endpoint to integrate it into custom web services.

Key Features

- Fully Managed: Simplifies the entire process of building and deploying ML models.

- Scalable: Easily adjusts to growing application demands with minimal overhead.

- Integration: Seamlessly connects with other AWS services, ensuring a smooth development process.

Provider

- Amazon

Standout Features

- Fully managed service

13. TensorFlow Serving API

TensorFlow Serving is an open-source system designed specifically for serving machine learning models in production. It offers a flexible, efficient way to manage multiple models and model versions simultaneously, and it exposes gRPC and REST endpoints for real-time predictions. Some teams also place a lightweight web layer, such as a Flask app, in front of it to expose custom endpoints.
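As a sketch of what a client call looks like, the code below builds a request for TensorFlow Serving's REST predict API (`/v1/models/<name>[/versions/<n>]:predict` with an `instances` payload). Port 8501 is the port conventionally used for REST in TensorFlow Serving's Docker examples, and `my_model` is a hypothetical model name; the sketch only builds the request, so it runs without a server.

```python
import json
import urllib.request


def tf_serving_predict_request(model_name, instances, host="localhost", port=8501, version=None):
    """Build a POST request for TensorFlow Serving's REST predict API."""
    path = f"/v1/models/{model_name}"
    if version is not None:
        path += f"/versions/{version}"  # pin a specific model version
    return urllib.request.Request(
        url=f"http://{host}:{port}{path}:predict",
        data=json.dumps({"instances": instances}).encode("utf-8"),  # row format
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_predictions(response_body):
    """TensorFlow Serving returns row-format results under "predictions"."""
    return json.loads(response_body)["predictions"]


request = tf_serving_predict_request("my_model", [[1.0, 2.0, 3.0]], version=2)
print(request.full_url)  # http://localhost:8501/v1/models/my_model/versions/2:predict
# With a server running: parse_predictions(urllib.request.urlopen(request).read())
```

Pinning `version` is how a client keeps using a known model while a newer version is loaded alongside it; omitting it lets the server pick its default version.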

Key Features

- Flexibility: Supports the concurrent deployment of various models and versions.

- Performance: Optimized for efficient, real-time inference in production environments.

- Open Source: No licensing costs, though infrastructure expenses still apply.

Provider

- Google

Standout Features

- Flexibility

- Open-source

14. PyTorch API

PyTorch is a widely used open-source machine learning framework, originally developed by Meta (formerly Facebook) and now governed by the PyTorch Foundation. It is known for its flexibility and ease of use in both research and production, and it enables developers to build and experiment with deep learning models and quickly prototype innovative solutions.

You can also wrap a trained PyTorch model in a custom API, for example a Flask app, to deploy it in real-world applications.

Key Features

- Flexibility: Ideal for rapid prototyping and experimental research.

- Community Support: Boasts a large, active community and extensive documentation.

- GPU Acceleration: Provides robust support for deep learning and parallel computing.

Provider

- Meta

Standout Features

- Flexibility

- Fast performance

15. Eden AI

Eden AI aggregates multiple ML APIs under a single unified interface, simplifying the integration of various AI services. It provides a one-stop solution for tasks across vision, text, and translation domains. This consolidated approach benefits developers who want access to many machine learning APIs without the hassle of managing multiple vendor relationships.

Key Features

- Unified Interface: This simplifies access to various AI functionalities from different providers.

- Flexibility: Allows developers to switch between AI services with minimal changes to the codebase.

- Cost Efficiency: Can reduce overall costs by consolidating services under one subscription.
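The provider-switching idea behind a unified interface can be sketched as an adapter pattern: application code calls one function, and each vendor sits behind the same small interface. The vendor classes below are hypothetical stand-ins with toy keyword rules so the example runs offline; this is not Eden AI's own SDK or API.

```python
from typing import Protocol


class SentimentProvider(Protocol):
    """The single interface the application depends on."""

    def sentiment(self, text: str) -> str: ...


class VendorA:
    """Hypothetical adapter; a real one would call vendor A's API here."""

    def sentiment(self, text: str) -> str:
        return "positive" if "great" in text.lower() else "neutral"


class VendorB:
    """Hypothetical adapter; a real one would call vendor B's API here."""

    def sentiment(self, text: str) -> str:
        return "negative" if "bad" in text.lower() else "neutral"


PROVIDERS: dict[str, SentimentProvider] = {"vendor_a": VendorA(), "vendor_b": VendorB()}


def analyze(text: str, provider: str = "vendor_a") -> str:
    """Route a request to the configured provider; switching is a one-argument change."""
    return PROVIDERS[provider].sentiment(text)


print(analyze("Great support team"))                     # positive
print(analyze("Bad battery life", provider="vendor_b"))  # negative
```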

Provider

- Eden AI

Standout Features

- Simplified machine learning API integration

16. Clarifai API

Clarifai is a leading deep learning platform for computer vision and AI automation, widely used for visual recognition. It provides extensive image and video analysis features, enabling applications such as:

- Automated content moderation

- Image tagging

- Visual search

Clarifai’s robust framework simplifies deploying machine learning models that work with visual data.

Key Features

- Specializing in Computer Vision: Offers advanced capabilities for image and video analysis.

- Custom Model Training: Provides options to train models specific to user requirements.

- Ease of Use: Features an intuitive interface and comprehensive documentation for developers.

Provider

- Clarifai

Standout Features

- Specializing in computer vision tasks

17. Specialized Machine Learning APIs

While general-purpose machine learning APIs offer broad functionality, specialized APIs are tailored to specific tasks. They can significantly improve the performance and accuracy of applications in niche areas. For example, SkyBiometry offers advanced facial recognition and feature detection capabilities for applications that require precise facial analysis.

Roboflow Universe’s Image Similarity API can analyze images to determine their visual similarities. Perspective API can detect toxic, obscene, insulting, or threatening text for use cases such as:

- Content moderation

- User protection

Provider

- Varies

Standout Features

- Varies by API


Integrating Machine Learning APIs into Your Applications

The integration process begins with selecting the right API for your project requirements. For example, if you need image recognition capabilities, you may choose an API like Google Cloud Vision or Microsoft Azure’s Computer Vision API. After selection, thoroughly review the documentation to understand how to use the API, including:

- Available endpoints

- Authentication methods

- Encryption

- Request-response formats

Implementing an ML API into Your Application: The Technical Details

Next, write the code in your application that sends data to the API endpoints and receives their responses. This involves constructing HTTP requests with the necessary parameters and authentication tokens. For example, for a text analysis API, you send a POST request containing the text data.

Your implementation must handle errors gracefully, such as network failures, authentication errors, and malformed responses. For successful responses, it must extract the useful information, such as sentiment scores or classification labels.
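Putting those steps together, the sketch below sends a POST request with a bearer token, handles HTTP, network, and parsing errors, and extracts a score from a successful response. The endpoint URL, the `{"text": ...}` payload, and the `sentiment_score` field are hypothetical; the `opener` parameter exists only so the function can be tested without a live API.

```python
import json
import urllib.error
import urllib.request

API_URL = "https://api.example.com/v1/sentiment"  # hypothetical endpoint


def analyze_sentiment(text, api_key, opener=urllib.request.urlopen, timeout=10):
    """POST text to a hypothetical sentiment API and return its score, or None on failure."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps({"text": text}).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # authentication token
            "Content-Type": "application/json",
        },
        method="POST",
    )
    try:
        with opener(request, timeout=timeout) as response:
            payload = json.loads(response.read())
    except urllib.error.HTTPError as err:  # the API answered with a 4xx/5xx status
        print(f"API error: HTTP {err.code}")
        return None
    except (urllib.error.URLError, TimeoutError) as err:  # DNS, connection, or timeout
        print(f"Network error: {err}")
        return None
    except json.JSONDecodeError:  # the body was not valid JSON
        print("Malformed response body")
        return None
    # "sentiment_score" is a hypothetical field name; check the API reference.
    return payload.get("sentiment_score")
```

`HTTPError` is caught before `URLError` because it is a subclass of it; reversing the order would hide the status code. Returning `None` lets the caller decide whether to retry, fall back, or surface the failure.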

Preparing Data for ML APIs: The Most Important Step

Most importantly, you must prepare and format the input data to match the ML API’s requirements. You may need to profile, cleanse, validate, and transform your data to ensure it is suitable for analysis and produces the best results.
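A minimal sketch of the validate, cleanse, and transform steps for text inputs, assuming a hypothetical per-request limit of 5,000 characters (real limits vary by provider and are usually documented per endpoint):

```python
def prepare_texts(raw_texts, max_chars=5000):
    """Validate, clean, and size-limit text inputs before calling an ML API.

    max_chars is a hypothetical request limit; use your provider's documented value.
    """
    cleaned = []
    for item in raw_texts:
        if not isinstance(item, str):
            continue  # validate: skip values that are not text
        text = " ".join(item.split())  # cleanse: collapse runs of whitespace and newlines
        if not text:
            continue  # drop inputs that are empty after cleaning
        cleaned.append(text[:max_chars])  # transform: enforce the size limit
    return cleaned


print(prepare_texts(["  Great  product!\n", "", None, "Slow delivery"]))
# ['Great product!', 'Slow delivery']
```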

Pay Attention to Standardization in ML API Integration

Pay attention to standardizing endpoints for model interactions. Platforms like UbiOps provide an easy-to-use deployment layer for data science code, maintaining endpoints in a standardized format even when you upload new code. This standardization simplifies integration and ensures that applications can consistently interact with machine learning models.

Managing and Scaling Your Machine Learning Models

Managing machine learning models effectively ensures the performance and reliability of your AI apps against real-world problems. Here are some key points to consider:

- Tools like MLflow and TensorBoard help track key metrics such as accuracy, precision, recall, and latency, offering insights into model behavior in production environments.

- Teams can implement version control with platforms like DVC or Git to manage model changes and updates. This allows for easy rollbacks if issues arise.

- Logging and alerting systems, such as those from Datadog or Prometheus, help detect anomalies or performance degradation.
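Tools like MLflow, Prometheus, or Datadog store and visualize such metrics, but the logic behind a basic alert can be as simple as a rolling window. The class below is a hypothetical, dependency-free sketch that tracks accuracy over recent labeled predictions, the kind of signal that would reveal the concept drift described at the start of this article; the window size and threshold are illustrative.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent labeled predictions and flag drops."""

    def __init__(self, window=500, threshold=0.8):
        self.results = deque(maxlen=window)  # oldest results fall out automatically
        self.threshold = threshold

    def record(self, predicted, actual):
        self.results.append(predicted == actual)

    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def should_alert(self):
        # Only alert once the window is full, to avoid noise from small samples.
        full = len(self.results) == self.results.maxlen
        return full and self.accuracy() < self.threshold


monitor = AccuracyMonitor(window=4, threshold=0.75)
for predicted, actual in [(1, 1), (1, 1), (0, 1), (0, 1)]:
    monitor.record(predicted, actual)
print(monitor.accuracy(), monitor.should_alert())  # 0.5 True
```

In production, the `record` call would run when ground-truth labels arrive (for churn, after the customer stays or leaves), and an alert would typically trigger retraining or investigation.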

Strategies for Scaling ML Models

Models must also stay relevant and practical as data patterns evolve, so they should be retrained with fresh data, for example through automated pipelines in tools like Kubeflow. To scale ML models, consider the following strategies:

- Make sure models perform well under varying demand and increased workloads. Engineers can use solutions like Kubernetes or Amazon SageMaker to orchestrate and manage resources efficiently.

- Load balancing becomes necessary to prevent any single model instance from becoming a bottleneck. To that end, tools like NGINX can distribute incoming requests across multiple instances.

- Developers can optimize models for speed and resource efficiency using quantization or model pruning. These techniques are often implemented with tools such as TensorFlow Lite or ONNX Runtime.
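To illustrate what quantization does, the sketch below applies symmetric per-tensor int8 quantization to a list of weights in plain Python. It shows only the arithmetic (each weight is stored as an integer in [-127, 127] plus one shared scale factor); TensorFlow Lite and ONNX Runtime provide their own tooling for quantizing real models.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization: each weight w is approximated by scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # map the largest weight to +/-127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 100]
```

Storing one byte per weight instead of four shrinks the model roughly fourfold; the rounding error per weight is at most half of `scale`, which is why accuracy usually drops only slightly.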

If you deploy custom models on Google Cloud, storing the model artifacts in a Cloud Storage bucket within the same Google Cloud project keeps them accessible and secure.

Common Challenges Implementing Machine Learning Solutions

Implementing machine learning solutions presents its own unique set of challenges. ML models rely on large, diverse, and high-quality datasets. Otherwise, they perform poorly and yield inaccurate results. Thorough data cleaning, preprocessing, and augmentation techniques can ensure data quality and address imbalances.

Optimizing Data for Model Accuracy

Investing in data collection and labeling efforts also helps improve data availability. Feature selection, which involves pinpointing the most pertinent features from an extensive dataset, can be quite a time-intensive process. Effective feature selection is necessary for building accurate models; various techniques and tools can aid this process.
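One simple, widely used filter is to drop features that barely vary, since a near-constant column carries little information. The sketch below implements that idea with Python's `statistics` module; it is similar in spirit to scikit-learn's `VarianceThreshold`, and the feature names and threshold are illustrative.

```python
from statistics import pvariance


def select_by_variance(rows, feature_names, threshold=0.0):
    """Return the names of features whose population variance exceeds threshold.

    rows is a list of samples, each a list of numeric feature values.
    """
    columns = zip(*rows)  # transpose samples into per-feature columns
    return [name for name, column in zip(feature_names, columns)
            if pvariance(column) > threshold]


rows = [[1, 0, 3.0], [1, 5, 3.0], [1, 10, 4.0]]
print(select_by_variance(rows, ["plan_id", "logins", "support_calls"]))
# ['logins', 'support_calls']
```

Variance filtering is only a first pass: it ignores the target entirely, so it complements rather than replaces methods that measure each feature's relationship to the label.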

Optimizing ML models requires tuning hyperparameters, such as the learning rate, to improve performance. Proper data preprocessing and optimization techniques help ensure that models are adequately trained and perform well in real-world applications.

Start Building with $10 in Free API Credits Today!

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLMs, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.

