May 24, 2025

What Is Machine Learning Observability & Best Practices in Production

Inference Research

Machine Learning Observability is like taking a stroll through the peaceful neighborhood of Predictable Performance. You and your team can step outside and take in the fresh air. You can see how your models are performing, and if anything looks out of place, you can quickly investigate and get to the bottom of it before it becomes a serious problem. In this case, you probably won’t have to involve the neighbors (your stakeholders) to fix the issue. Instead, you’ll be able to resolve it swiftly on your own. Monitoring ML models in production plays a crucial role in this process. This article shows you how to achieve ML observability, so you can detect issues early, improve model performance, and keep your AI systems trustworthy as they undergo inevitable changes over time.

One way to achieve machine learning observability is to use Inference's AI inference APIs. With these tools, you can gain complete visibility into your machine learning systems, proactively detect anomalies, improve performance, and confidently scale reliable AI in production.

What is Machine Learning Observability?

Machine Learning Observability, or ML Observability, involves closely monitoring and understanding how machine learning models perform once deployed into real-world environments.

At its core, it’s a systematic approach to monitoring, analyzing, and understanding the behavior and performance of production ML systems. It serves as a window into the system's inner workings, allowing us to track how well the models do their job, how the data they receive changes, and whether they use resources efficiently.

Challenges of Deploying ML Models

When developing ML models, developers typically train them on historical data to learn patterns and make predictions or decisions on new data. The real challenge arises when these models are deployed into production systems.

Unlike traditional software, where the behavior is deterministic and predictable, ML models often interact with dynamic and evolving data sources. This dynamic nature introduces uncertainties and complexities that require continuous monitoring and analysis.

Key Components of Machine Learning Observability

ML Observability encompasses several key components. Firstly, it involves monitoring the performance of the models themselves. Developers can track metrics such as:

- Model accuracy

- Prediction latency

- Resource utilization

These metrics provide insights into how well the models perform and whether they meet the desired objectives. Secondly, ML Observability extends beyond just the models to include the entire ML pipeline and infrastructure. Developers also need to monitor data pipelines, ensuring that the input data remains consistent and representative of the training data.

Tracing and Visualizing ML Model Behavior

ML Observability involves logging and tracing model predictions and system events. This allows developers to debug issues, audit model behavior, and ensure compliance with regulatory requirements. By capturing logs and traces of model predictions, developers can trace any unexpected behavior to its root cause and take corrective actions.

Visualization and analysis are crucial aspects of ML Observability. Visualizing the monitored data reveals model behavior, performance trends, and areas for improvement, and it makes complex relationships easier to understand when deciding how to optimize and scale the ML system.

Why is Machine Learning Observability Needed?

ML Observability is essential in production because ML models are probabilistic and interact with real-world data that can change and shift, leading to unpredictable scenarios. Data might change, models might degrade, or resources might run out. Without ML Observability, we're flying blind, risking poor performance, crashes, and failures.

One primary reason for observability is to detect and diagnose issues affecting the performance of ML systems. In production environments, models may encounter various challenges, such as data drift, anomalies, or underlying data distribution changes.

Understanding Data Drift in Machine Learning

Data drift occurs when the statistical properties of the data a model receives in production diverge from those of its training data. It can take the form of covariate shift, where input feature distributions change, or concept drift (sometimes called model drift), where the relationship between the input and target variables no longer holds.

The divergence between the real-world and training data distribution can occur for multiple reasons, such as:

- Changes in underlying customer behavior

- Changes in the external environment

- Demographic shifts

- Product upgrades

Addressing Data Drift in ML Systems

ML models are trained on historical data, assuming future data will follow a similar distribution. In real-world scenarios, this assumption often doesn't hold.

As the data-generating process evolves, the incoming data can shift gradually or abruptly, leading to drift. Data drift poses a significant challenge for ML systems because models may become less effective or accurate over time if not adapted to the changing data distribution.

Detecting and Mitigating Data Drift in ML Models

For example, an ML model trained to classify customer preferences based on historical data may struggle to make accurate predictions if there's a sudden change in consumer behavior or preferences. Detecting and mitigating data drift requires continuously monitoring incoming data and model performance.

By comparing the statistical properties of new data to the training data, developers can identify instances of data drift and take corrective actions, such as retraining the model on the updated data or implementing adaptive learning techniques that can adjust to new data.
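
To make that comparison concrete, here is a minimal sketch of one such statistical check, the two-sample Kolmogorov-Smirnov (K-S) statistic, applied to a single numeric feature. The feature values and the 0.3 threshold are hypothetical; in practice, `scipy.stats.ks_2samp` computes the same statistic along with a p-value.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the empirical CDFs of two samples (0 = same, 1 = no overlap)."""
    ref, cur = sorted(reference), sorted(current)
    max_gap = 0.0
    # Both empirical CDFs are step functions, so the largest gap
    # is always reached at one of the observed values.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


# Hypothetical values of one feature: training snapshot vs. recent production traffic.
train_ages = [23, 25, 31, 35, 38, 41, 44, 52, 58, 63]
prod_ages = [45, 48, 51, 55, 57, 60, 62, 66, 70, 72]

DRIFT_THRESHOLD = 0.3  # assumed cut-off; tune per feature and sample size
stat = ks_statistic(train_ages, prod_ages)
print(f"K-S statistic = {stat:.2f}, drift = {stat > DRIFT_THRESHOLD}")
```

Running the same check per feature on a schedule, and alerting when the statistic crosses its threshold, is one simple way to turn this comparison into continuous monitoring.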

Improving Model Performance with ML Observability

Changes in data distribution can occur not only due to drift over time but also due to intentional or unintentional modifications to the data sources or data collection processes after ML models are deployed into production.

For example, in an e-commerce application, changes to the user interface or website layout may affect how users interact with the platform, leading to changes in the distribution of user behavior data. Similarly, updates to data collection mechanisms or changes in data preprocessing pipelines can alter the characteristics of the incoming data, impacting the performance of deployed models.

Ensuring ML Model Reliability Through Data Monitoring

Such changes can significantly impact the effectiveness and reliability of ML systems. Models trained on outdated or biased data may produce inaccurate or unreliable predictions when deployed in environments with different data distributions. Therefore, it's essential to monitor for changes in data distribution and update models accordingly to ensure their continued effectiveness and reliability in production settings.

Data-related issues can degrade the performance of the models, leading to inaccurate predictions or decisions. By monitoring key metrics such as model accuracy, data consistency and quality, and performance degradation, you can keep models stable and reliable over time and identify and diagnose issues early, minimizing their impact on the system.

Optimizing Resource Utilization with ML Observability

Another reason why ML Observability is essential is to optimize resource utilization. ML model inference and updating (online retrain or online learning) often require significant computational resources like CPU, memory, and GPU. Inefficient resource allocation can lead to increased costs and decreased system performance. By monitoring the real-time utilization of computational resources, developers can identify bottlenecks and optimize resource allocation to ensure efficient resource utilization.
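
As a rough illustration, the sketch below summarizes one monitoring window of request latencies and GPU utilization samples and flags likely bottlenecks or idle capacity. The 200 ms SLO, the 20%/90% utilization bands, and the function names are assumptions for the example, not recommended values.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_resources(latencies_ms, gpu_util, latency_slo_ms=200, gpu_low=0.2, gpu_high=0.9):
    """Summarize one monitoring window and flag likely bottlenecks or waste."""
    findings = []
    p95 = percentile(latencies_ms, 95)
    if p95 > latency_slo_ms:
        findings.append(f"p95 latency {p95} ms exceeds the {latency_slo_ms} ms SLO")
    avg_gpu = sum(gpu_util) / len(gpu_util)
    if avg_gpu < gpu_low:
        findings.append(f"GPU under-utilized at {avg_gpu:.0%}: consider batching or smaller instances")
    elif avg_gpu > gpu_high:
        findings.append(f"GPU saturated at {avg_gpu:.0%}: likely bottleneck")
    return findings


# Hypothetical one-minute window: 20 request latencies and 3 GPU utilization samples.
latencies = [50] * 18 + [250, 400]
print(check_resources(latencies, [0.10, 0.15, 0.20]))
```

Tracking a tail percentile rather than the mean matters here: two slow requests out of twenty barely move the average but are exactly what users notice.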

ML Observability also plays a crucial role in debugging and troubleshooting ML systems, because identifying the root cause of issues in complex production environments can be challenging. Logs and traces of model predictions and system events let developers follow unexpected behavior back to its source and take corrective action.

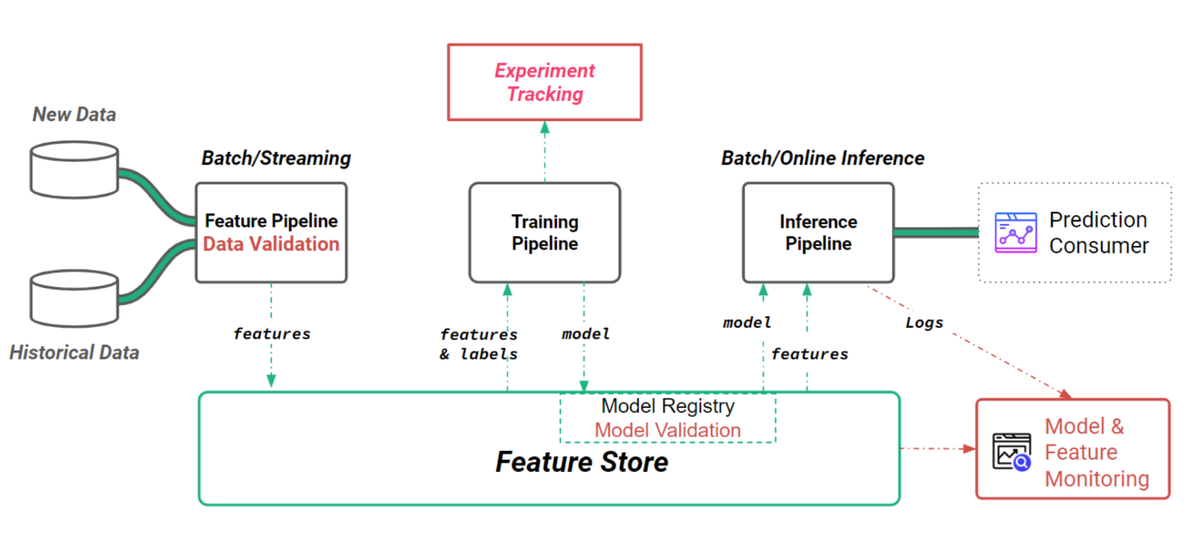

Data Validation in Machine Learning Observability

In an ML context, the adage "garbage in, garbage out" holds particular significance: even the most sophisticated models can't perform well if fed flawed or inconsistent data. ML Observability therefore also covers the quality of the data that arrives at feature pipelines.

Maintaining ML Performance with Robust Data Validation

ML systems often operate in environments with limited control over the data they ingest. Data may arrive from various sources with quirks, biases, and anomalies. If left unchecked, these data imperfections can harm ML model performance.

Incorporating robust data validation mechanisms into the feature engineering process is essential, and you need visibility into whether new data arriving in the system passes your data validation tests.

Data Validation for Robust ML Observability

ML Observability on data validation consists of monitoring the quality and characteristics of incoming data to identify issues that could affect model performance. Data quality issues can range from missing values and outliers to distribution shifts and data drift. Data validation aims to ensure data integrity throughout the feature engineering pipeline.

This involves implementing checks and safeguards to detect and rectify data anomalies before they propagate downstream and adversely impact model training and inference. By establishing ML Observability on data validation, developers can monitor these metrics and mitigate the risk of model degradation due to poor-quality data. Several techniques can be employed for data validation in feature pipelines, including:

- Schema Validation: Verifying that incoming data conforms to expected schemas in terms of data types, formats, and structures

- Statistical Analysis: Performing statistical analyses to identify outliers, anomalies, and distributional shifts in the data

- Data Profiling: Generating descriptive statistics and visualizations to gain insights into data distributions, correlations, and patterns

- Automated Checks: Implementing automated checks and alerts to flag data quality issues in real-time
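
A minimal sketch of schema validation combined with an automated batch-level check, in plain Python. The schema, value ranges, and 1% error-rate tolerance are hypothetical; dedicated validation libraries offer richer versions of the same idea.

```python
# Hypothetical schema for incoming feature records: column -> (type, required).
EXPECTED_SCHEMA = {
    "user_id": (str, True),
    "age": (int, True),
    "basket_value": (float, False),
}
# Hypothetical plausible ranges used as simple statistical sanity checks.
RANGES = {"age": (0, 120), "basket_value": (0.0, 10_000.0)}


def validate_record(record):
    """Return a list of human-readable validation errors for one record."""
    errors = []
    for column, (expected_type, required) in EXPECTED_SCHEMA.items():
        value = record.get(column)
        if value is None:
            if required:
                errors.append(f"{column}: missing")
            continue
        if not isinstance(value, expected_type):
            errors.append(f"{column}: expected {expected_type.__name__}, got {type(value).__name__}")
            continue
        if column in RANGES:
            low, high = RANGES[column]
            if not low <= value <= high:
                errors.append(f"{column}: {value} outside [{low}, {high}]")
    # Columns the model was never trained on usually signal an upstream schema change.
    for column in sorted(record.keys() - EXPECTED_SCHEMA.keys()):
        errors.append(f"{column}: unexpected column")
    return errors


def validate_batch(records, max_error_rate=0.01):
    """Automated check: pass the batch only if few enough records fail."""
    if not records:
        return True, 0.0
    failed = sum(1 for r in records if validate_record(r))
    error_rate = failed / len(records)
    return error_rate <= max_error_rate, error_rate


good = {"user_id": "u1", "age": 34, "basket_value": 59.9}
bad = {"user_id": "u2", "age": "34", "coupon": "X"}
print(validate_record(bad))  # ['age: expected int, got str', 'coupon: unexpected column']
print(validate_batch([good, bad]))  # (False, 0.5)
```

The batch-level result is what you would emit as a metric or alert, while the per-record errors help with diagnosis.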

Is Model Observability Just a Fancy Word for ML Monitoring?

Machine learning model observability and monitoring are often mistaken for one another, but are very different. ML monitoring tracks a model’s performance over time, alerting practitioners when something goes wrong.

In contrast, observability provides the context to understand why a model may be underperforming so that practitioners can take informed steps to remediate issues.

What is ML Monitoring?

ML monitoring is the practice of tracking a model's performance metrics from development to production and understanding the issues associated with the model’s performance. Commonly monitored metrics include:

- Accuracy

- Recall

- Precision

- F1 score

- MAE

- RMSE
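
For reference, the metrics above can be computed from labeled predictions in a few lines. This pure-Python sketch assumes binary labels where 1 is the positive class; scikit-learn's `sklearn.metrics` module provides equivalent functions such as `precision_score`, `recall_score`, `f1_score`, and `mean_absolute_error`.

```python
import math


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision, "recall": recall, "f1": f1}


def regression_metrics(y_true, y_pred):
    """Mean absolute error (MAE) and root mean squared error (RMSE)."""
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return {"mae": mae, "rmse": rmse}


print(classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0]))
print(regression_metrics([3.0, 5.0, 2.0], [2.0, 5.0, 4.0]))
```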

One of the most essential elements of ML monitoring is its alert system, which notifies data scientists or ML engineers when a change or failure is detected. This requires setting conditions and designing metrics or thresholds that indicate when an issue arises.
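
One simple way to express such conditions is a list of threshold rules evaluated against the latest metric values. The metric names and thresholds here are hypothetical and should come from your own SLOs.

```python
from dataclasses import dataclass


@dataclass
class AlertRule:
    metric: str
    threshold: float
    direction: str  # "below": fire when value < threshold; "above": fire when value > threshold

    def fires(self, value):
        return value < self.threshold if self.direction == "below" else value > self.threshold


# Hypothetical rules; real thresholds should come from your own SLOs.
RULES = [
    AlertRule("precision", 0.80, "below"),
    AlertRule("recall", 0.70, "below"),
    AlertRule("rmse", 1.5, "above"),
]


def evaluate_alerts(metrics, rules=RULES):
    """Return one message per rule whose metric breaches its threshold."""
    return [
        f"ALERT {r.metric}={metrics[r.metric]:.3f} is {r.direction} {r.threshold}"
        for r in rules
        if r.metric in metrics and r.fires(metrics[r.metric])
    ]


print(evaluate_alerts({"precision": 0.72, "recall": 0.75, "rmse": 1.1}))
# ['ALERT precision=0.720 is below 0.8']
```

Rules whose metric is absent from the current batch are skipped rather than treated as failures, which keeps a missing metric from producing misleading alerts. A missing metric is better caught by its own "no data" alert.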

ML Monitoring Essentials

ML monitoring is an encompassing process that includes monitoring the:

- Data: The ML monitoring system monitors the data used during training and production to ensure its quality, consistency, and accuracy, as well as security and validity.

- Model: Monitoring the model comes after it has been deployed. The monitoring system monitors the model for changes and alerts the data scientist when changes occur.

- Environment: The environment where the model is developed and deployed also contributes to the overall model performance. Issues with either environment affect performance. The ML monitoring system checks for metrics such as CPU, memory, disk, I/O utilization, etc.

Proactive Monitoring for Long-Term ML Model Success

Monitoring all of these helps identify issues as soon as they occur and enables the data scientist to intervene and resolve them. Over time, changes in data and the environment may affect a model's long-run performance. Since ML models are prone to errors, model monitoring enables an organization to avoid these issues and focus more on improving project performance.

Monitoring for data drifts, concept drifts, memory leaks, etc., for models in production is critical for the success of your machine learning project and the results you hope to achieve. It helps you identify model drift, data/feature drift, and data leakage, which can lead to poor accuracy, underperformance, or unexpected bias. Let’s check out what these different issues mean:

Data Drift

Once models are live in production, the input data can change over time, degrading the model’s performance and accuracy. The core problem is that production inputs diverge from the data used during training, testing, and model validation. Therefore, it’s essential to consistently monitor for data drift.

Concept Drift

Since production models are used in real time and data evolves in real time, changes in the relationships between input and output data are bound to happen. This is known as concept drift. Here, the data has evolved based on real-time events, changes in consumer patterns, etc.

Adversarial Inputs

It’s important to watch for inputs crafted by an attacker, which can cause performance degradation.

Bias and Fairness

As users interact with a model, they unintentionally introduce their own biases, and often, the data used to train the model can also be biased. Monitoring for bias is essential to ensure a model provides fair and accurate predictions.

Data Leakages

This occurs when the training dataset contains information, such as features derived from the target or from the future, that is not available when the model makes predictions in production. The model appears more accurate during training than it really is, and its performance drops in production.

Bugs

When deploying ML models, many issues can arise that weren’t seen during testing or validation, not just within the data itself. It could be the system usage, a UX error, etc. ML monitoring is essential for the following reasons:

- It enables you to analyze the accuracy of the prediction

- It helps reduce prediction errors

- It ensures the best performance by alerting the data scientist to issues as they arise

What is ML Observability?

Observability measures the health of the internal states of a system by understanding the relationship between the system’s inputs, outputs, and environment. In machine learning, this means monitoring and analyzing the inputs, prediction requests, and generated predictions from your model, and using them to provide insight whenever an outage occurs.

Observability comes from control theory, which tells us that you can only control a system to the extent that you can observe it. This means that managing the accuracy of the results, usually across different system components, requires observability.

Holistic Monitoring Across Complex ML Systems

Observability becomes more complex in ML systems as you need to consider multiple interacting systems and services such as:

- Data inputs/pipelines

- Model notebooks

- Cloud deployments

- Containerized infrastructure

- Distributed systems

- Microservices

This generally means that you need to monitor and aggregate many systems. ML observability combines performance data and metrics from every part of an ML system to provide insight into the problems it faces. So, rather than only alerting the user to a problem with the model, ML observability provides the insight needed to resolve it.

Granular Evaluation and Measurement in ML Observability

Making measurements is crucial for ML observability. When analyzing your model performance during training, measuring top-level metrics isn’t enough and will provide an incomplete picture. You must slice your data to understand how your model performs for various data subsets. ML observability also uses a slice-and-dice approach to evaluate the model's performance.
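
A minimal sketch of slicing: the same labeled predictions evaluated overall and per segment. The `country` attribute and the rows are hypothetical; the point is that a healthy-looking overall number can hide a weak slice.

```python
from collections import defaultdict


def accuracy_by_slice(rows, slice_key):
    """Group labeled predictions by one attribute and compute accuracy per group."""
    hits, totals = defaultdict(int), defaultdict(int)
    for row in rows:
        group = row[slice_key]
        totals[group] += 1
        hits[group] += int(row["label"] == row["prediction"])
    return {group: hits[group] / totals[group] for group in totals}


rows = [  # hypothetical labeled predictions
    {"country": "US", "label": 1, "prediction": 1},
    {"country": "US", "label": 0, "prediction": 0},
    {"country": "US", "label": 1, "prediction": 1},
    {"country": "BR", "label": 1, "prediction": 0},
    {"country": "BR", "label": 0, "prediction": 0},
]
overall = sum(r["label"] == r["prediction"] for r in rows) / len(rows)
print(overall)                            # 0.8
print(accuracy_by_slice(rows, "country"))  # {'US': 1.0, 'BR': 0.5}
```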

Observability doesn’t just stop at application performance and error logging. It also includes monitoring and analyzing prediction requests, performance metrics, and the generated predictions from your models over time, as well as evaluating the results.

Leveraging Domain Knowledge for Effective ML Observability

Another important factor needed for ML observability is having domain knowledge. Domain knowledge helps with precise and accurate insight into the changes that occur in the model.

For example, when modeling and evaluating a recommender model for an e-commerce fashion store, you need to be aware of fashion trends to interpret changes in the model correctly. Domain knowledge also helps during data collection and processing, feature engineering, and result interpretation.

ML Monitoring vs. ML Observability

The simple difference between ML monitoring and ML observability is “the What vs. the Why.” ML monitoring tells us “the What,” while ML observability explains the What, Why, and sometimes How to Resolve It.

ML Monitoring

- ML monitoring notifies us about the problem.

- Monitoring alerts us to a component’s outage or failure.

- ML Monitoring answers the what and when of model problems.

- Monitoring tells you whether the system works.

- ML Monitoring is failure-centric.

ML Observability

- ML observability is knowing the problem exists, understanding why the problem exists and how to resolve it.

- ML Observability gives a system view on outages – taking the whole system into account.

- ML Observability gives the context of why and how.

- Observability lets you ask why it’s not working.

- ML Observability understands the system regardless of an outage.

For instance, let’s say a model in production faces a concept drift problem. An ML monitoring solution will be able to detect the performance degradation in the model. In contrast, an ML observability solution will compare data distributions and other key indicators to help pinpoint the cause of the drift.

Machine Learning Observability Best Practices

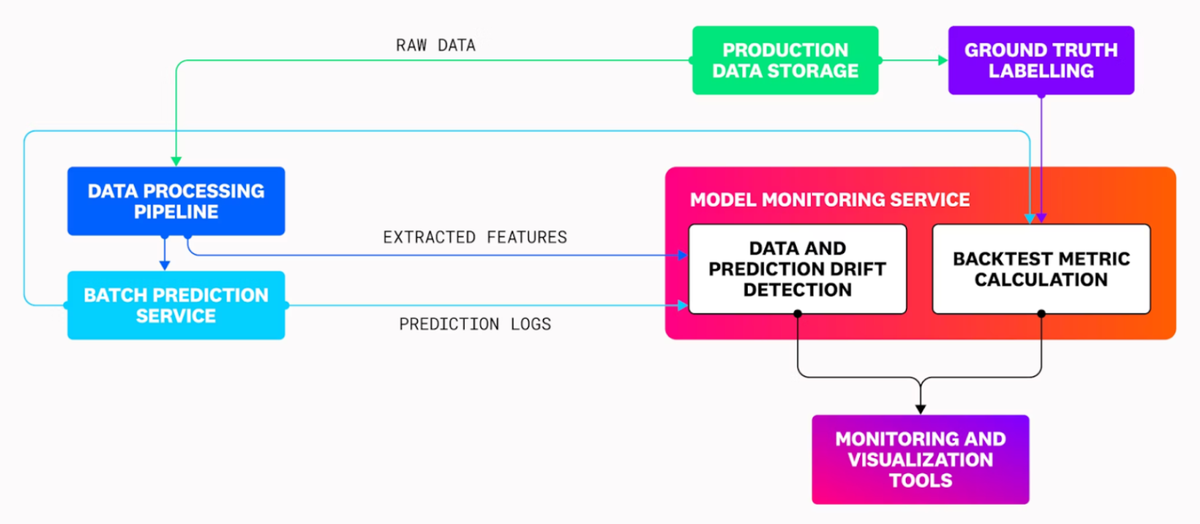

To monitor your model’s performance in production, you’ll need to set up a system that can examine the data fed to the model and its predictions. Effective model monitoring requires a service sitting alongside the prediction service that can ingest samples of input data and prediction logs, use them to calculate metrics, and forward these metrics to observability and monitoring platforms for alerting and analysis.

The following diagram shows the flow of data in a typical model monitoring system, traveling from the ML pipeline to the monitoring service so that calculated metrics can be forwarded to observability and visualization tools:

The monitoring service should be able to calculate data and prediction drift metrics and backtest metrics that directly evaluate the accuracy of predictions using historical data. Using this service, along with tools for monitoring the data processing pipelines being used for feature extraction, you can spot key issues affecting your model's performance. These include:

Data Skew: Why Training and Serving Data Must Match

Training-serving skew occurs when there is a significant difference between training and production conditions, causing the model to make incorrect or unexpected predictions.

ML models must be trained on data similar in structure and distribution to what they’ll see in production, while avoiding overfitting, which happens when a model fits the training data so closely that it cannot generalize to the data it sees in production.
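
One practical way to catch training-serving skew is to replay a sample of raw production records through both the offline (training) and online (serving) feature code and count disagreements. The feature functions below are hypothetical, with a deliberate `log` versus `log1p` mismatch for illustration.

```python
import math


# Hypothetical feature code: the offline (training) and online (serving) paths
# were implemented separately, a common source of skew.
def training_features(raw):
    return {"log_price": math.log1p(raw["price"]), "is_weekend": raw["day"] in ("Sat", "Sun")}


def serving_features(raw):
    # Deliberate bug for illustration: log instead of log1p.
    return {"log_price": math.log(raw["price"]), "is_weekend": raw["day"] in ("Sat", "Sun")}


def skew_report(raw_records, tol=1e-6):
    """Count, per feature, how often the two paths disagree on the same raw input."""
    mismatches = {}
    for raw in raw_records:
        offline, online = training_features(raw), serving_features(raw)
        for name, value in offline.items():
            other = online.get(name)
            if isinstance(value, float) and isinstance(other, float):
                same = math.isclose(value, other, abs_tol=tol)
            else:
                same = value == other
            if not same:
                mismatches[name] = mismatches.get(name, 0) + 1
    return mismatches


sample = [{"price": 10.0, "day": "Mon"}, {"price": 99.0, "day": "Sat"}]
print(skew_report(sample))  # {'log_price': 2}
```

Sharing a single feature implementation between training and serving avoids this class of bug entirely; a replay check like this one is a safety net for when that isn't possible.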

Drift: How Changes in Data Can Affect Model Performance

Production conditions can cause unpredictable changes in the data your model is working with. Drift refers to these changes causing the model’s inputs and outputs to deviate from those seen during training, which likely indicates a decrease in predictive accuracy and general reliability.

ML models generally have to be retrained at a set cadence to cope with drift, which can occur in several ways.

Data Drift

Data drift refers to changes in the input data fed to the model during inference: shifts in the overall distribution of possible input values and the emergence of new patterns in the data that weren’t present in the training set.

Significant data drift can occur when real-world events change user preferences, economic figures, or other data sources, as in the COVID-19 pandemic’s effect on financial markets and consumer behavior. Data drift can be a leading indicator of reduced prediction accuracy, since a model will tend to lose its ability to interpret data that’s too dissimilar to what it was trained on.

Prediction Drift

Prediction drift refers to changes in the model’s predictions. If the distribution of prediction values changes significantly over time, this can indicate that the data is changing, predictions are worsening, or both.

For example, a credit-lending model used to evaluate users in a neighborhood with lower median income and different average household demographics from the training data might start making significantly different predictions. Further evaluation would be needed to determine whether these predictions remain accurate and merely accommodate the input data shift, or whether the model’s quality has degraded.

Concept Drift

Concept drift refers to that latter case, when the relationship between the model’s inputs and outputs changes so that the mapping the model previously learned is no longer valid. Concept drift tends to imply that the training data needs to be expired and replaced with a new set.

Input Data Processing Issues Can Lead to Bad Predictions

Issues in the data processing pipelines feeding your production model can lead to errors or incorrect predictions. These issues can look like unexpected changes in the source data schema (such as a schema change made by the database admin that edits a feature column in a way that the model isn’t set up to handle) or errors in the source data (such as missing or syntactically invalid features).

Directly Evaluate Prediction Accuracy to Spot Model Issues

Where possible, you should use backtest metrics to track the quality of your model’s predictions in production. These metrics directly evaluate the model by comparing prediction results with ground truth values collected after inference.

Backtesting can only be performed in cases where the ground truth can be captured quickly and authoritatively used to label data. This is simple for supervised learning models, where the training data is labeled. It’s more difficult for unsupervised problems, such as clustering or ranking, and these use cases often must be monitored without backtesting.

Capturing Ground Truth for Accurate ML Evaluation

The ground truth must be obtained in production using historical data ingested at some feedback delay after inference. Each prediction must be labeled with its associated ground truth value to calculate the evaluation metric. Depending on your use case, you must create these labels manually or pull them in from existing telemetry, such as real user monitoring (RUM) data.

For example, let’s say you have a classification model that predicts whether a user who is referred to your application’s homepage will purchase a subscription. The ground truth for this model would be the signups that actually occur.
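
A minimal sketch of that labeling step, assuming archived prediction records carry a user ID and timestamp, and assuming (hypothetically) that a signup within seven days of the prediction counts as a conversion. Predictions whose label window is still open are skipped because their ground truth is not yet known.

```python
from datetime import datetime, timedelta

LABEL_WINDOW = timedelta(days=7)  # assumed: a signup within 7 days counts as a conversion


def label_predictions(predictions, signups, now):
    """Join archived predictions with later signups to produce ground-truth labels.

    predictions: dicts with "user_id", "ts" (datetime) and "predicted" (0 or 1)
    signups: {user_id: signup datetime}, e.g. loaded from your signup events
    Predictions whose label window has not closed yet are skipped: their
    ground truth is still unknown.
    """
    labeled = []
    for p in predictions:
        window_end = p["ts"] + LABEL_WINDOW
        if window_end > now:
            continue
        signup_ts = signups.get(p["user_id"])
        converted = signup_ts is not None and p["ts"] <= signup_ts <= window_end
        labeled.append({**p, "actual": int(converted)})
    return labeled


now = datetime(2025, 5, 20)
predictions = [
    {"user_id": "a", "ts": datetime(2025, 5, 1), "predicted": 1},
    {"user_id": "b", "ts": datetime(2025, 5, 2), "predicted": 1},
    {"user_id": "c", "ts": datetime(2025, 5, 18), "predicted": 0},  # window still open
]
signups = {"a": datetime(2025, 5, 3)}
for row in label_predictions(predictions, signups, now):
    print(row["user_id"], row["predicted"], row["actual"])
```

The window length is effectively your feedback delay: a longer window captures more late conversions but postpones every metric that depends on these labels.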

Archiving and Labeling Predictions for ML Backtesting

To prepare your prediction data for backtesting, it’s ideal to archive prediction logs in a simple object store (such as an Amazon S3 bucket or Azure Blob Storage instance). This way, you can roll up logs at a desired time interval and then forward them to a processing pipeline that assigns the ground truth labels. Then, the labeled data can be ingested into an analytics tool that calculates the final metric for reporting to your dashboards and monitors.

When generating the evaluation metric, choose a rollup frequency for the calculation, and for its ingestion into your observability tools, that balances granular, frequent reporting against seasonality in the prediction trend. For example, you might calculate the metric daily and visualize it in your dashboards with a 15-day rollup.
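
The sketch below follows that example. It computes accuracy per day from labeled predictions, then pools the counts over a trailing 15-day window for the dashboard line. The record layout is an assumption.

```python
from collections import defaultdict
from datetime import date


def daily_counts(labeled):
    """labeled: iterable of (day, predicted, actual) -> {day: (correct, total)}."""
    counts = defaultdict(lambda: [0, 0])
    for day, predicted, actual in labeled:
        counts[day][0] += int(predicted == actual)
        counts[day][1] += 1
    return {day: tuple(c) for day, c in sorted(counts.items())}


def rolling_accuracy(daily, window_days=15):
    """Accuracy over the trailing `window_days` calendar days, for each day.

    Counts are pooled rather than averaging daily ratios, so low-traffic
    days don't get the same weight as busy ones.
    """
    result = {}
    for day in daily:
        window = [daily[d] for d in daily if 0 <= (day - d).days < window_days]
        correct = sum(c for c, _ in window)
        total = sum(t for _, t in window)
        result[day] = correct / total
    return result


labeled = [
    (date(2025, 5, 1), 1, 1), (date(2025, 5, 1), 0, 1),
    (date(2025, 5, 2), 1, 1), (date(2025, 5, 2), 1, 1),
]
daily = daily_counts(labeled)
print(rolling_accuracy(daily))
```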

Choosing the Right Evaluation Metrics for ML Models

Different evaluation metrics apply to different types of models. For example, classification models have discrete outputs, so their evaluation metrics work by comparing discrete classes. Classification evaluations like precision, recall, accuracy, and ROC AUC target different qualities of the model’s performance, so you should pick the ones that matter most for your use case.

If your use case places a high cost on false positives (such as a model evaluating loan applicants), you’ll want to optimize for precision. On the other hand, if your use case places a high cost on missed true positives, that is, false negatives (such as a model flagging fraudulent transactions), you’ll want to optimize for recall. If your use case doesn’t favor precision, recall, or accuracy and you want a single, threshold-independent metric to evaluate performance, you can use ROC AUC.
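
ROC AUC (the area under the ROC curve) can be read as the probability that a randomly chosen positive example gets a higher score than a randomly chosen negative one. This quadratic-time sketch computes it directly from that definition; `sklearn.metrics.roc_auc_score` is the usual choice in production code.

```python
def roc_auc(y_true, scores):
    """ROC AUC as P(score of a random positive > score of a random negative),
    counting ties as half. O(positives * negatives), fine for a sketch."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

Because it works on raw scores, ROC AUC needs the model's probabilities in your prediction logs, not just the thresholded class labels.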

Comparing Metrics and Evaluating Regression Models

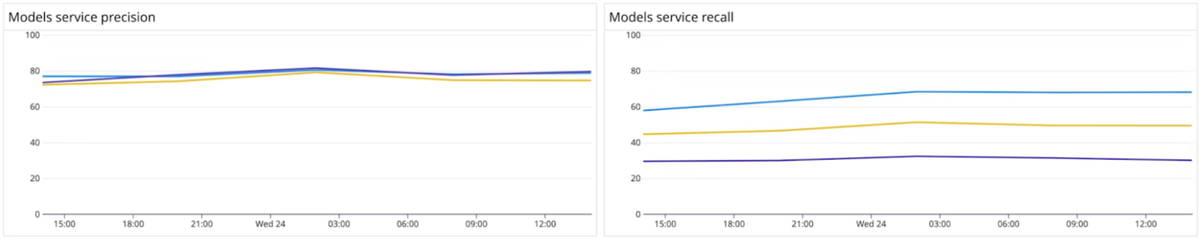

Because precision, recall, and accuracy are calculated using simple algebraic formulas, you can also easily use a dashboarding tool to calculate and compare each from labeled predictions. The following screenshot shows a dashboard that compares precision and recall across three different model versions.

For models with continuous decimal outputs (such as a regression model), you should use a metric that can compare continuous predictions and ground truth values. One frequently used metric for evaluating these models is root mean squared error (RMSE), which takes the square root of the average squared difference between ground truth and prediction.
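
Written out, for n predictions with ground truth values y_i and predicted values ŷ_i, RMSE is shown below, followed by a small worked example with hypothetical values:

```latex
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}

% Worked example: y = (4, 2, 6, 3), \hat{y} = (3, 2, 8, 3)
\mathrm{RMSE} = \sqrt{\frac{1^2 + 0^2 + (-2)^2 + 0^2}{4}} = \sqrt{1.25} \approx 1.12
```

Because errors are squared before averaging, the single miss of 2 contributes four times as much as the miss of 1, which is why RMSE penalizes occasional large errors more heavily than MAE does.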

Aligning Evaluation Metrics with Business KPIs

Correlating evaluation metrics with business KPIs can help you understand whether your predictions are accurate relative to the ground truth and whether or not the model is facilitating your application's overall performance.

For example, for a recommendation-ranking regression model, these KPIs might come from RUM data and track user engagement rates and intended business outcomes (such as signups or purchases) on the recommended pages.

Centralizing Metrics and Insights for Collaborative ML Monitoring

Because your model relies on upstream and downstream services managed by disparate teams, it is helpful to have a central place for all stakeholders to report their analysis of the model and learn about its performance. By forwarding your calculated evaluation metrics to the same monitoring or visualization tool you’re using to track KPIs, you can form dashboards that establish a holistic view of your ML application.

If your organization is testing multiple model versions simultaneously, a central tool also makes it easier to build visualizations that track and break down model performance data and business analytics by version.

Ground truth evaluation metrics are the most accurate and direct way to monitor model performance, but are often complicated, if not impossible, to obtain within a reasonable feedback delay.

Monitor Prediction and Data Drift

Even when ground truth is unavailable, delayed, or challenging to obtain, you can still use drift metrics to identify trends in your model’s behavior. Prediction drift metrics track the changes in the distributions of model outputs (i.e., prediction values) over time. This is based on the notion that if your model behaves similarly to how it acted in training, it’s probably of a similar quality.
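
One common prediction drift statistic is the Population Stability Index (PSI), computed over binned prediction scores. In this sketch, the bin edges, score samples, and the small epsilon used for empty bins are assumptions. A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift.

```python
import math
from bisect import bisect_right


def bin_proportions(values, edges, eps=1e-4):
    """Share of values per bin; empty bins get `eps` so the log stays finite."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        # Clamp so values on or beyond the outer edges land in the first/last bin.
        i = min(max(bisect_right(edges, v) - 1, 0), n_bins - 1)
        counts[i] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    e = bin_proportions(expected, edges)
    a = bin_proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical prediction scores: a training-time baseline vs. this week in production.
edges = [0.0, 0.25, 0.5, 0.75, 1.0]
train_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
prod_scores = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.95]
print(f"PSI = {psi(train_scores, prod_scores, edges):.2f}")
```

Fixing the bin edges from the baseline (rather than recomputing them from each new sample) keeps successive PSI values comparable over time.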

Data drift metrics detect changes in the input data distributions for your model. They can be early warning signs of declining model quality, because if the model is getting significantly different data from what it was trained on, it’s likely that prediction accuracy will suffer. Spotting data drift early lets you retrain your model before significant prediction drift sets in.

Drift Detection Tools for Reliable ML Monitoring

Managed ML platforms like Vertex AI and SageMaker provide built-in drift detection tools. If you’re deploying your own custom model, you can use a data analytics tool like Evidently to ingest predictions and input data, calculate the metrics, and then report them to your monitoring and visualization tools.

Depending on the size of your data and the model type, you’ll use different statistics to calculate drift, such as the Jensen-Shannon divergence, Kolmogorov-Smirnov (K-S) test, or Population Stability Index (PSI).

For example, let’s say you want to detect data drift for a recommendation model that scores product pages according to the likelihood that a user will add the product to their shopping cart. For this model’s categorical inputs, you can detect drift by finding the Jensen-Shannon divergence between the distribution of the most recent input data samples and that of the test data used during training.
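
A minimal sketch of that comparison for one categorical input, using base-2 logarithms so the result falls between 0 (identical distributions) and 1 (no overlap). The category values are hypothetical. Note that SciPy's `scipy.spatial.distance.jensenshannon` returns the square root of this quantity (the Jensen-Shannon distance).

```python
import math
from collections import Counter


def js_divergence(reference, current):
    """Jensen-Shannon divergence (base 2) between the category frequencies of
    two samples: 0 means identical distributions, 1 means no overlap."""
    ref, cur = Counter(reference), Counter(current)
    categories = ref.keys() | cur.keys()
    p = {c: ref[c] / len(reference) for c in categories}
    q = {c: cur[c] / len(current) for c in categories}
    m = {c: (p[c] + q[c]) / 2 for c in categories}

    def kl(a, b):
        # Categories with zero probability in `a` contribute nothing;
        # b = m is positive wherever a is, so the log is always defined.
        return sum(a[c] * math.log2(a[c] / b[c]) for c in categories if a[c] > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


# Hypothetical product-category inputs: training-time test set vs. recent traffic.
test_set = ["shoes"] * 6 + ["bags"] * 4
recent = ["shoes"] * 3 + ["bags"] * 3 + ["hats"] * 4
print(f"JS divergence = {js_divergence(test_set, recent):.3f}")
```

Unlike KL divergence, this measure is symmetric and stays finite when a category (here, `hats`) appears in only one of the samples, which is common with categorical production data.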

Tracking Feature and Attribution Drift for Deeper Insights

Feature drift and feature attribution drift break data drift down further by looking at the distributions of individual feature values and of their attributions. A feature’s attribution is a decimal value expressing how much that feature contributes to the final prediction.

While changes in the production data typically cause feature drift, feature attribution drift is usually the result of repeated retrainings. Both metrics can help you find specific issues related to changes in a subset of the data.

Detecting Attribution Drift Behind Prediction Changes

For example, if a model’s predictions are drifting significantly while data drift looks normal, feature attribution drift may be causing the predictions to deviate. By monitoring feature attribution drift, you can spot these changing attributions affecting predictions even as feature distributions remain consistent.

This case implies that the attribution drift was caused by frequent retraining, rather than by changes in the input data.
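
A minimal sketch of attribution drift detection: compare each feature’s share of total attribution before and after a retrain and flag large moves. The per-feature values are assumed to be mean absolute attributions (for example, averaged SHAP values) produced elsewhere; the feature names and the 0.1 threshold are hypothetical.

```python
def attribution_shares(attributions):
    """Normalize per-feature mean |attribution| so the shares sum to 1."""
    total = sum(abs(v) for v in attributions.values())
    return {f: abs(v) / total for f, v in attributions.items()}


def attribution_drift(before, after, threshold=0.1):
    """Features whose share of total attribution moved by more than `threshold`."""
    b, a = attribution_shares(before), attribution_shares(after)
    drift = {}
    for feature in sorted(b.keys() | a.keys()):
        change = a.get(feature, 0.0) - b.get(feature, 0.0)
        if abs(change) > threshold:
            drift[feature] = round(change, 3)
    return drift


# Hypothetical mean absolute attributions before and after a retrain.
before = {"price": 0.5, "brand": 0.3, "color": 0.2}
after = {"price": 0.2, "brand": 0.3, "color": 0.5}
print(attribution_drift(before, after))  # {'color': 0.3, 'price': -0.3}
```

Normalizing to shares means the check reacts to a change in how the model weighs features relative to each other, even if the overall attribution scale shifts between model versions.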

Managing Feature Interactions and Drift in Complex Models

As you break down your model’s prediction trends to detect feature and feature attribution drift, it’s also helpful to consider how features interact with each other and which features have the most significant impact on the end prediction.

The more features you have in your model, the more difficult it becomes to set up, track, and interpret drift metrics for all of them. For image and text-based models with thousands to millions of features, you might have to zoom out and look only at prediction drift, or find proxy metrics representing feature groups.

Setting Alerts and Managing Retraining Based on Drift Metrics

For any of these drift metrics, you should set alerts with thresholds that make sense for your particular use case. To avoid alert fatigue, you must pick a subset of metrics that report quickly and are easily interpretable. The frequency of drift detection will determine how often you retrain your model, and you need to figure out a cadence for this that accounts for the computational cost and development overhead of training while ensuring that your model sticks to its SLOs.

As you retrain, you must closely monitor for new feature drift and feature attribution drift, and where possible, evaluate predictions to validate whether retraining has improved model performance. For example, the following alert fires a warning when data drift increases by 50% week over week.
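
A minimal sketch of a week-over-week rule like this, assuming a single drift score per week has already been computed (for example, with the Jensen-Shannon divergence or PSI). The week labels and scores are hypothetical.

```python
def weekly_drift_alerts(weekly_scores, max_increase=0.5):
    """Return (week, relative_change) for every week whose drift score rose by
    more than `max_increase` (0.5 = 50%) compared with the previous week."""
    alerts = []
    for (_, prev), (week, cur) in zip(weekly_scores, weekly_scores[1:]):
        # Skip a zero baseline: a relative change is undefined there.
        if prev > 0 and (cur - prev) / prev > max_increase:
            alerts.append((week, (cur - prev) / prev))
    return alerts


# Hypothetical weekly data drift scores (e.g. an average PSI across features).
scores = [("2025-W18", 0.08), ("2025-W19", 0.09), ("2025-W20", 0.15)]
for week, change in weekly_drift_alerts(scores):
    print(f"WARN {week}: data drift up {change:.0%} week over week")
```

A relative rule like this adapts to each model’s normal drift level, but on its own it can fire on tiny absolute changes. Pairing it with a minimum absolute drift score is one way to limit alert fatigue.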

Detect Data Processing Pipeline Issues

Failures in the data processing pipelines that convert raw production data into features for your model are a common cause of data drift and degraded model quality. If these pipelines change the data schema or process data differently from what your model was trained on, your model’s accuracy will suffer. Data quality issues can stem from issues like:

- Changes in processing jobs that cause fields to be renamed or mapped incorrectly.

- Changes to the source data schema that cause processing jobs to fail.

- Data loss or corruption before or during processing.

These issues often arise when multiple models addressing unique use cases leverage the same data. Input data processing can also be compromised by misconfigurations, such as:

- A pipeline points to an older version of a database table that was recently updated.

- Permissions are not updated following a migration, so pipelines lose data access.

- Errors in feature computation code.

Automating Data Validation and Alerting for Prediction Reliability

You can establish a bellwether for these data processing issues by alerting on unexpected drops in the number of successful predictions. To help prevent them from cropping up in the first place, you can add data validation tests to your processing pipelines that check whether the input data for predictions is valid, and alert when those tests fail.
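
A minimal sketch of such a bellwether check, comparing the latest period’s count of successful predictions against a trailing average. The 50% ratio and the counts are hypothetical. With strongly seasonal traffic, comparing against the same hour in previous weeks is usually a better baseline.

```python
from statistics import mean


def prediction_volume_alert(recent_counts, current_count, min_ratio=0.5):
    """Fire when this period's successful predictions fall below `min_ratio`
    of the trailing average; a sudden drop often means upstream data stopped
    arriving or started failing validation."""
    baseline = mean(recent_counts)
    return current_count < min_ratio * baseline, baseline


# Hypothetical hourly counts of successful predictions.
fired, baseline = prediction_volume_alert([1200, 1150, 1230, 1180], current_count=400)
print(fired, baseline)
```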

To accommodate your production model's continual retraining and iteration, it’s best to automate these tests using a workflow manager like Airflow. This way, you can ingest task successes and failures into your monitoring solution and create alerts for them.

Monitoring Schema Changes to Prevent Pipeline Breakages

By tracking database schema changes and other user activity, you can help your team members update their pipelines before they break. Using a managed data warehouse like Google Cloud BigQuery, Snowflake, or Amazon Redshift, you can query for schema change data, audit logs, and other context to create alerts in your monitoring tool.
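
A minimal sketch of the comparison step: diff two snapshots of a table’s column names and types, which you could take on a schedule (for example, from the warehouse’s `INFORMATION_SCHEMA.COLUMNS` view). The table and columns are hypothetical. The resulting diff is what you would forward to your monitoring tool as an alert.

```python
def diff_schemas(old, new):
    """Compare two {column_name: data_type} snapshots of the same table."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "type_changed": sorted(c for c in old.keys() & new.keys() if old[c] != new[c]),
    }


# Hypothetical snapshots of a feature table taken one day apart.
yesterday = {"user_id": "STRING", "age": "INT64", "country": "STRING"}
today = {"user_id": "STRING", "age": "STRING", "region": "STRING"}

changes = diff_schemas(yesterday, today)
if any(changes.values()):
    print(f"Schema change detected: {changes}")
```

Removed columns and type changes are the ones most likely to break downstream feature pipelines, so they are natural candidates for a higher alert severity than added columns.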

By using service management tools to centralize knowledge about data sources and processing, you can help ensure that model owners and other stakeholders are aware of data pipeline changes and their potential impacts on dependencies.

Service Catalog for Cross-Team Data Coordination

For example, you might have one team in your organization pulling data from a feature store to train their recommendation model, while another team manages that database, and a third team starts pulling it for business analytics.

A service catalog enables the database owners to centralize the documents detailing their schema and make it easier to contact them to request a change. It also maps the dependent services owned by the other two teams.

Start Building with $10 in Free API Credits Today!

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLM models, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.
