May 23, 2025

What Is Machine Learning Orchestration & 8 Best Tools for the Workflow

Inference Research

Machine learning models hold great promise for automating decision-making processes across various industries. Deploying these models into production and keeping them in good shape over time, however, can prove challenging. The difficulties often arise because machine learning models are not static: they are retrained and updated over time, and the environment in which they operate changes too. Monitoring ML models in production is therefore a critical part of this process. Machine Learning Orchestration helps teams manage the many processes involved in deploying and maintaining machine learning models, making these tasks easier and more efficient. In this article, we'll explore how Machine Learning Orchestration can help you automate and scale your machine learning workflows with minimal engineering overhead, using powerful, cost-efficient tools that accelerate model deployment and simplify the path from prototype to production.

AI inference APIs are one effective tool for achieving your Machine Learning Orchestration objectives. They help automate and accelerate the deployment of inference processes, reducing the manual effort required to get machine learning models to production and keep them running smoothly over time.

What is Machine Learning Orchestration?

Machine learning orchestration automates the deployment, administration, and monitoring of machine learning models at scale. Orchestration coordinates the many components and processes in a machine learning pipeline, such as:

- Data preparation

- Feature engineering

- Model training

- Validation

- Deployment

Machine learning orchestration aids in automating and simplifying the development of machine learning models, allowing enterprises to reduce time to market and increase the efficiency and accuracy of their machine learning operations.

Machine Learning Orchestration Platforms Overview

Platforms for machine learning orchestration offer tools and infrastructure for automating and controlling various stages of the machine learning process. An ML orchestration platform may include the following main features:

- Versioning and data management

- Model development and refinement

- Model testing and validation

- Model deployment and execution

- Automated monitoring and alerting

- Integration with other data and application services

Machine learning orchestration platforms free data scientists and engineers from the mundane tasks of managing and deploying infrastructure so that they may instead concentrate on creating and upgrading models.

What Is an Orchestration Layer and How Does It Improve Machine Learning Workflows?

An orchestration layer is an architectural component that automates and manages complicated workflows or processes. It lies between the many systems or apps in the workflow and manages their interactions. An orchestration layer's primary function is to simplify and automate the administration of complicated processes by providing a centralized point of control.

It offers tools and application programming interfaces (APIs) that allow developers to build, run, and monitor complicated processes without worrying about managing the underlying infrastructure. In cloud computing, the orchestration layer often relies on a cloud management platform, a collection of tools and services for controlling the lifecycle of cloud-based resources.

Resource Examples and Monitoring Tools

These cloud-based resources include virtual machines, containers, storage volumes, network interfaces, and other components. The orchestration layer may also include tools for monitoring and evaluating workflow performance, allowing developers to spot bottlenecks or other problems and make changes that improve efficiency and reliability.
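As a rough illustration of how timing data exposes bottlenecks, the sketch below wraps each pipeline task in a decorator that records its wall-clock duration and then reports the slowest task. The `timed` decorator, the `durations` store, and the task names are hypothetical; real orchestration layers collect such metrics for you.

```python
import time
from functools import wraps

# Hypothetical in-memory metrics store: task name -> duration in seconds
durations: dict[str, float] = {}

def timed(func):
    """Record how long each call to `func` takes, keyed by function name."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            durations[func.__name__] = time.perf_counter() - start
    return wrapper

@timed
def load_data():
    time.sleep(0.001)  # stand-in for a quick step

@timed
def train_model():
    time.sleep(0.05)  # stand-in for the expensive step

def slowest_task() -> str:
    """Return the name of the task with the longest recorded duration."""
    return max(durations, key=durations.get)

load_data()
train_model()
print(slowest_task())  # train_model
```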

What Is Orchestration Software and How Does It Help Machine Learning?

In a distributed computing context, data orchestration software automates the administration and coordination of various systems, applications, and services. It offers a single platform for managing and monitoring complex workflows, activities, and procedures that span several systems and services.

It’s extensively used in cloud computing, container orchestration, and DevOps contexts to automate application and service deployment, setup, scaling, and administration. It may also automate complicated operations and activities in data centers and other large-scale IT infrastructures.

Orchestration Benefits for Business

Orchestration helps businesses by automating complicated processes and workflows. It enhances productivity, minimizes mistakes, and boosts scalability. Automating routine processes and freeing up staff for more strategic work can also help firms cut costs and improve agility.

What Are Machine Learning Orchestration Approaches?

Machine learning orchestration approaches consist of various strategies that simplify and automate the processes of developing and managing machine learning models. They include:

AutoML

AutoML refers to the practice of automating the whole machine learning process, from data preparation and feature engineering through model selection and hyperparameter tuning. Solutions such as Google Cloud AutoML, H2O.ai, and DataRobot allow users to build and deploy machine learning models without deep ML expertise.

Hyperparameter tuning

This involves automating the search for hyperparameter values that improve model performance. Hyperparameter tuning tools such as Amazon SageMaker (through its automatic model tuning feature) and Optuna provide optimization strategies for finding good hyperparameters for a particular model.
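To make the idea concrete, here is a library-free random search over a single hyperparameter. It is a sketch, not how Optuna or SageMaker work internally (Optuna, for instance, samples with a Tree-structured Parzen Estimator by default), and the `validation_loss` function is a made-up stand-in for training and scoring a real model.

```python
import random

def validation_loss(learning_rate: float) -> float:
    """Hypothetical objective: pretend validation loss is lowest at lr = 0.01."""
    return (learning_rate - 0.01) ** 2

def random_search(objective, low: float, high: float, n_trials: int, seed: int = 0):
    """Sample n_trials values uniformly in [low, high] and keep the best one."""
    rng = random.Random(seed)  # seeded so the search is reproducible
    best_value, best_loss = None, float("inf")
    for _ in range(n_trials):
        candidate = rng.uniform(low, high)
        loss = objective(candidate)
        if loss < best_loss:
            best_value, best_loss = candidate, loss
    return best_value, best_loss

best_lr, best_loss = random_search(validation_loss, 0.0001, 0.1, n_trials=200)
print(f"best learning rate ~ {best_lr:.4f} (loss {best_loss:.2e})")
```

Random search is the simplest baseline; smarter samplers use the results of earlier trials to choose where to look next, which usually reaches a good value in fewer trials.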

Pipeline orchestration

This involves automating the many steps of the machine learning pipeline, including model training and deployment. Workflow orchestration tools such as Apache Airflow, Kubeflow, and Luigi provide workflow automation capabilities that allow data scientists to develop, run, and monitor complicated ML processes.
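Under the hood, these tools model a pipeline as a directed acyclic graph (DAG) of dependent steps. The sketch below uses Python's standard-library `graphlib` (Python 3.9+) to derive a valid execution order for the pipeline stages listed earlier and to reject a cyclic definition. The stage names are illustrative, and this is not how Airflow, Kubeflow, or Luigi are implemented.

```python
from graphlib import CycleError, TopologicalSorter

# Each stage maps to the set of stages it depends on.
pipeline = {
    "feature_engineering": {"data_preparation"},
    "model_training": {"feature_engineering"},
    "validation": {"model_training"},
    "deployment": {"validation"},
}

# static_order() yields every stage only after all of its dependencies.
order = list(TopologicalSorter(pipeline).static_order())
print(order)
# ['data_preparation', 'feature_engineering', 'model_training', 'validation', 'deployment']

# A cycle (deployment feeding back into data preparation) is not a valid DAG.
cyclic = dict(pipeline, data_preparation={"deployment"})
try:
    list(TopologicalSorter(cyclic).static_order())
except CycleError as err:
    print("invalid pipeline:", err.args[0])
```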

Model management

Model management refers to managing the whole lifecycle of machine learning models, from creation and testing to deployment and monitoring. Model management solutions such as MLflow, TensorFlow Serving, and Kubeflow provide infrastructure and APIs for deploying and maintaining models in production.
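A toy in-memory registry shows the core bookkeeping behind model management: every registered model gets an incrementing version, and one version at a time is promoted to production. The `ModelRegistry` class and the `s3://` paths are hypothetical placeholders; MLflow's Model Registry provides versioning and promotion through its own, much richer API.

```python
from dataclasses import dataclass, field

@dataclass
class ModelVersion:
    version: int
    artifact_uri: str  # where the trained model is stored (placeholder)
    metrics: dict

@dataclass
class ModelRegistry:
    """Hypothetical registry: tracks versions per model name and one production version."""
    versions: dict = field(default_factory=dict)    # name -> list[ModelVersion]
    production: dict = field(default_factory=dict)  # name -> version number

    def register(self, name: str, artifact_uri: str, metrics: dict) -> int:
        history = self.versions.setdefault(name, [])
        mv = ModelVersion(len(history) + 1, artifact_uri, metrics)
        history.append(mv)
        return mv.version

    def promote(self, name: str, version: int) -> None:
        if not any(mv.version == version for mv in self.versions.get(name, [])):
            raise KeyError(f"{name} v{version} is not registered")
        self.production[name] = version  # replaces any previous production version

    def production_model(self, name: str) -> ModelVersion:
        version = self.production[name]
        return self.versions[name][version - 1]  # versions are 1-based and sequential

registry = ModelRegistry()
registry.register("churn", "s3://models/churn/1", {"auc": 0.81})
v2 = registry.register("churn", "s3://models/churn/2", {"auc": 0.84})
registry.promote("churn", v2)
print(registry.production_model("churn").artifact_uri)  # s3://models/churn/2
```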

8 Best ML Orchestration Tools for Developers

Inference

- Best For: Developers deploying LLMs at scale

- Learning Curve: Low

- Built For ML?: Yes (LLM-focused)

- DevOps Difficulty: Very Easy

- Key Strength: Serverless LLM APIs, batch processing, and RAG-ready

Airflow

- Best For: Teams already using it for ETL

- Learning Curve: Medium

- Built For ML?: No

- DevOps Difficulty: Moderate

- Key Strength: Flexibility and wide adoption

Kubeflow

- Best For: Kubernetes-native ML workflows

- Learning Curve: High

- Built For ML?: Yes

- DevOps Difficulty: Hard

- Key Strength: Full ML lifecycle on Kubernetes

MLflow

- Best For: Experiment tracking + light orchestration

- Learning Curve: Low to Medium

- Built For ML?: Yes, but not primarily for orchestration

- DevOps Difficulty: Easy

- Key Strength: Reproducibility and tracking

Metaflow

- Best For: Python-loving data scientists

- Learning Curve: Low

- Built For ML?: Yes

- DevOps Difficulty: Very Easy

- Key Strength: Ease of use, cloud integration

Prefect

- Best For: Modern, beginner-friendly orchestration

- Learning Curve: Low

- Built For ML?: No

- DevOps Difficulty: Very Easy

- Key Strength: Simple setup, great UX

Dagster

- Best For: Teams wanting structure + type safety

- Learning Curve: Medium

- Built For ML?: Yes

- DevOps Difficulty: Easy

- Key Strength: Strong testing and data contracts

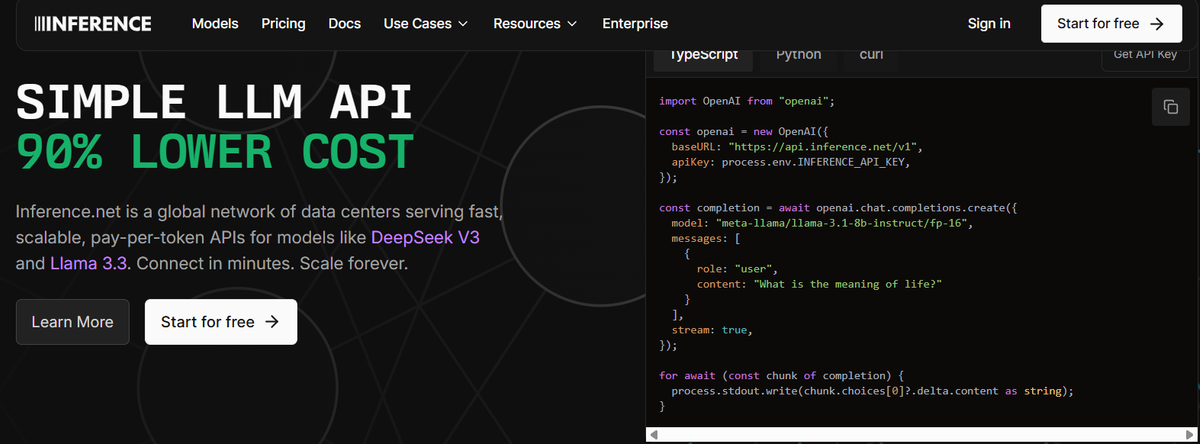

1. Inference: The Best Tool for LLMs

Inference is a serverless orchestration platform that is purpose-built for developers working with large language models. While traditional ML orchestration tools focus on task scheduling and pipeline control, Inference delivers OpenAI-compatible inference APIs optimized for production-scale LLM deployment, all with market-leading cost efficiency and performance.
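Because the APIs are OpenAI-compatible, a request should follow the familiar chat-completions shape. The sketch below only builds (and does not send) such a request with Python's standard library. The base URL, model name, and `INFERENCE_API_KEY` environment variable are placeholders we made up, so check the provider's documentation for the real values.

```python
import json
import os
import urllib.request

# Placeholders: substitute the provider's documented base URL and model ID.
BASE_URL = "https://api.example.com/v1"
MODEL = "example-open-source-llm"

def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completions POST request without sending it."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ.get('INFERENCE_API_KEY', '')}",
        },
        method="POST",
    )

req = build_chat_request("Summarize our Q3 incident report.")
# To actually send it: pass req to urllib.request.urlopen() and json.load() the response.
```

Because the request shape is the standard one, existing OpenAI client code can usually be pointed at a compatible endpoint by changing only the base URL and key.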

Scalable Orchestration for Generative AI

Unlike generic workflow engines, Inference is designed to handle the real-world demands of generative AI applications. Whether you're powering chatbots, running batch jobs for async processing, or building RAG pipelines with document extraction, Inference gives you:

- Blazing-fast, serverless LLM APIs for top open-source models

- Specialized batch inference for large-scale asynchronous workloads

- Integrated RAG capabilities, including document parsing and extraction

- $10 in free credits to get started without any upfront cost

- Scalable architecture that grows with your needs without extra DevOps overhead

For teams looking to move beyond experimentation and into reliable, production-grade AI, Inference offers the easiest on-ramp to scalable inference orchestration, without the complexity or cloud sprawl. It's orchestration that thinks like a developer.

2. Apache Airflow: The Classic Choice for ML Orchestration

Airflow was initially built at Airbnb, but the Apache Software Foundation has run the open-source Python project since 2016. The platform is designed to help developers create, schedule, and monitor complex workflows programmatically.

Although it isn’t used only for machine learning orchestration, Airflow remains one of the most popular ML tools on the market today, thanks to benefits like:

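- Modular architecture that scales out to an arbitrary number of workers

- Dynamic pipeline generation defined in Python

- Extensible operators and libraries that fit the level of abstraction your environment needs

- Lean, explicit pipelines with built-in Jinja templating for consistent, parameterized code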
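Airflow parameterizes tasks with Jinja templates such as `{{ ds }}`, which renders to each run's logical date in `YYYY-MM-DD` form. Jinja is a third-party package, so the dependency-free sketch below mimics the idea with the standard library's `string.Template`; the `$ds` placeholder, the `render_for_run` helper, and the paths are illustrative, not Airflow syntax.

```python
from datetime import date, timedelta
from string import Template

# One template shared by every run keeps task code consistent across dates.
INPUT_PATH = Template("data/raw/$ds/events.parquet")

def render_for_run(logical_date: date) -> str:
    """Render the path for a single run, using an ISO date like Airflow's ds."""
    return INPUT_PATH.substitute(ds=logical_date.isoformat())

start = date(2025, 5, 1)
paths = [render_for_run(start + timedelta(days=i)) for i in range(3)]
print(paths)
# ['data/raw/2025-05-01/events.parquet', 'data/raw/2025-05-02/events.parquet', 'data/raw/2025-05-03/events.parquet']
```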

User-Friendly, Cloud-Integrated Workflow Tool

Airflow is easy to use thanks to its web interface and reliance on Python. It integrates with AWS, Google Cloud Platform (GCP), and Microsoft Azure, so developers can extend it into existing infrastructure without friction.

With an active community that uses and contributes to the open-source platform, Airflow offers a vast repository of documentation and support.

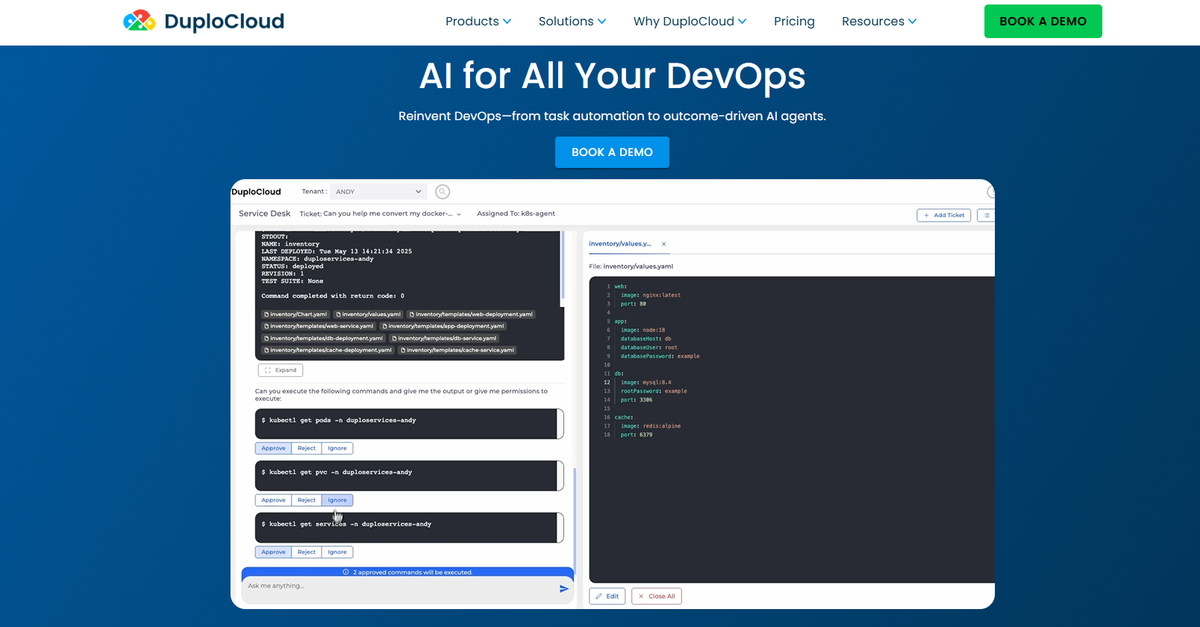

3. DuploCloud: The Secure Choice for ML Workloads

DuploCloud streamlines AI and ML orchestration, enhances security, and empowers developers to scale seamlessly, all within the same environment. The low-code/no-code platform makes it easy to implement Amazon Web Services (AWS) workflows and to deploy large language models through Google Kubernetes Engine (GKE).

Extensive integrations with popular DevOps tools make it easy for teams to use DuploCloud to build ML models without redesigning their org charts or refactoring their code base. Here are some of the most exciting features DuploCloud offers machine learning developers and data scientists:

Access-based security

DuploCloud offers end-to-end encryption, threat detection, and automated compliance checks so developers can launch with strengthened ML security from day one.

Integrated CI/CD pipelines

With continuous integration and deployment features, DuploCloud ensures that each release and update is faster and more reliable.

Real-time monitoring

DuploCloud’s around-the-clock security and monitoring systems deliver real-time insights that developers can use to identify risks, resolve issues, and improve performance.

Infrastructure as code

If you decide to use IaC, standardizing and automating infrastructure setup with DuploCloud makes it easy to manage, replicate, and scale experiments and deployments consistently.

4. Kedro: The Pythonic Approach to ML Orchestration

Kedro is another Python-based open-source framework for workflow orchestration. Engineers can use it to explore data and transition experimental code into production-ready pipelines. Kedro standardizes the code used in machine learning projects so that data science and engineering teams can collaborate seamlessly. Key features of Kedro’s data science development environment include:

Kedro-Viz

Developers can visualize pipelines and workflows with precise blueprints, making it easy to track experiments, trace data lineage, and collaborate with stakeholders.

Data Catalog

Kedro’s lightweight data connectors make it easy to save, load, and integrate data from a wide variety of file systems and file formats.
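The idea behind a data catalog is that pipeline code asks for datasets by name while a configuration layer decides where and how they are stored. The minimal sketch below captures that separation with the standard library. The `Catalog` class, dataset names, and folder layout are hypothetical and far simpler than Kedro's actual `DataCatalog`.

```python
import csv
import json
import tempfile
from pathlib import Path

class Catalog:
    """Hypothetical catalog: maps dataset names to a file path and format."""

    def __init__(self, entries: dict[str, dict]):
        self.entries = entries

    def save(self, name: str, data) -> None:
        entry = self.entries[name]
        path = Path(entry["filepath"])
        path.parent.mkdir(parents=True, exist_ok=True)
        if entry["type"] == "json":
            path.write_text(json.dumps(data))
        elif entry["type"] == "csv":  # data is a list of dicts sharing the same keys
            with path.open("w", newline="") as f:
                writer = csv.DictWriter(f, fieldnames=list(data[0]))
                writer.writeheader()
                writer.writerows(data)
        else:
            raise ValueError(f"unsupported type: {entry['type']}")

    def load(self, name: str):
        entry = self.entries[name]
        path = Path(entry["filepath"])
        if entry["type"] == "json":
            return json.loads(path.read_text())
        if entry["type"] == "csv":  # CSV values come back as strings
            with path.open(newline="") as f:
                return list(csv.DictReader(f))
        raise ValueError(f"unsupported type: {entry['type']}")

root = Path(tempfile.mkdtemp())
catalog = Catalog({
    "raw_customers": {"type": "csv", "filepath": str(root / "01_raw" / "customers.csv")},
    "model_params": {"type": "json", "filepath": str(root / "06_models" / "params.json")},
})
catalog.save("raw_customers", [{"id": "1", "plan": "pro"}, {"id": "2", "plan": "free"}])
catalog.save("model_params", {"max_depth": 6})
print(catalog.load("raw_customers")[1]["plan"])  # free
```

Swapping a dataset from CSV to another format or location then means editing the catalog entry, not the pipeline code that calls `load` and `save`.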

Project Templates

Developers can use Kedro to design standardized templates that keep configurations, code, tests, and docs consistent across projects. Kedro also supports seamless integrations with popular development tools and deployment environments, such as:

- Amazon SageMaker

- Apache Airflow

- Spark

- Argo

- Azure ML

- Databricks

- Docker

- Kubeflow

- Prefect

5. Kubeflow: The Kubernetes Choice for ML Pipeline Orchestration

Kubeflow is a free, open-source toolkit that uses Kubernetes specifically for ML pipeline orchestration. Its pre-configured containers are designed to support the entire ML operations lifecycle, from training and testing to deployment.

Kubernetes originated at Google, and Kubeflow was launched with contributions from developers at major tech companies, including Google, Cisco, IBM, and Red Hat.

Kubernetes-Powered ML Workflow Integration

The toolkit combines containerized Kubernetes application development with easy integration into popular ML workflow systems like Airflow. In that sense, Kubeflow is often part of a broader ML tech stack rather than a standalone, one-stop-shop platform.

6. Metaflow: The Data Scientist’s Best Friend

Metaflow is a framework built to support ML and AI projects. Its workflow management features aim to help data scientists focus on creating models instead of getting bogged down in manual management tasks and machine learning operations (MLOps) orchestration tasks.

Metaflow enables engineers to run experiments and to develop, test, debug, and analyze their results locally. Metaflow also supports parallel execution across multiple cores and instances, making it easy to scale to the cloud.
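Metaflow expresses this kind of parallelism as a fan-out over a list of values followed by a join step. The sketch below reproduces that fan-out/fan-in pattern locally with `concurrent.futures`. It is an analogy, not Metaflow's API, and `train_and_score` with its toy scoring rule is hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def train_and_score(max_depth: int) -> tuple[int, float]:
    """Hypothetical training step: returns (max_depth, validation score)."""
    score = 1.0 - abs(max_depth - 6) * 0.05  # toy rule: depth 6 scores best
    return max_depth, score

candidate_depths = [2, 4, 6, 8, 10]

# Fan out: one branch per candidate. Threads keep the demo simple; CPU-bound
# training would use processes or, in Metaflow, separate tasks or instances.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(train_and_score, candidate_depths))  # keeps input order

# Fan in (join): compare branch results and keep the best configuration.
best_depth, best_score = max(results, key=lambda r: r[1])
print(best_depth, round(best_score, 2))  # 6 1.0
```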

Netflix-Built, Cloud-Ready Workflow Deployment

Once engineers are satisfied with their experiments, they can deploy workflows to production and trust that they will update automatically in response to changing data. Metaflow was initially developed at Netflix and later released as open source, so it is free to use.

It integrates seamlessly with major cloud providers like AWS, GCP, and Microsoft Azure and various machine-learning-oriented programming languages.

7. Prefect: The Flexible Alternative to Airflow

Prefect is a modern workflow orchestration platform that promises increased flexibility and simplicity compared to solutions like Airflow. Its focus on entirely local building, debugging, and deployment sets it apart, in addition to easy setup and quick pipeline deployment. There are three main products under Prefect’s umbrella:

Prefect Cloud

This fully managed option lets machine learning developers use Prefect as a hosted service instead of running their own orchestration infrastructure.

Prefect Open Source

Billed as an alternative to Airflow, the open-source option allows developers to select the tools and features that support their workflows.

Marvin AI

This engineering framework is designed to help developers build AI models, classifiers, functions, and complete applications built on natural language interfaces.

8. Dagster: The Reliable Choice for ML Orchestration

Dagster is all about building clean, testable, reliable workflows. It’s strong on structure, with built-in type checks and data validation, so you don’t end up with mystery bugs halfway through your pipeline. If you prioritize writing maintainable, production-grade ML pipelines, Dagster is worth looking at.
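The value of type checks and data contracts is that a bad batch fails loudly at the step that produced it instead of surfacing later as a mystery bug. The plain-Python sketch below shows that idea with a typed record and an explicit validation step. It does not use Dagster's API (Dagster has its own type system and asset checks), and the field names and rules are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Transaction:
    user_id: int
    amount: float

class ContractViolation(ValueError):
    """Raised when a step's output breaks the agreed data contract."""

def validate(rows: list[Transaction]) -> list[Transaction]:
    """Hypothetical contract: non-empty batch, correct types, non-negative amounts."""
    if not rows:
        raise ContractViolation("empty batch")
    for i, row in enumerate(rows):
        if not isinstance(row.user_id, int) or not isinstance(row.amount, (int, float)):
            raise ContractViolation(f"row {i}: wrong field types {row!r}")
        if row.amount < 0:
            raise ContractViolation(f"row {i}: negative amount {row.amount}")
    return rows

def clean_transactions(raw: list[dict]) -> list[Transaction]:
    """A pipeline step whose output is validated before downstream steps run."""
    return validate([Transaction(r["user_id"], r["amount"]) for r in raw])

good = clean_transactions([{"user_id": 1, "amount": 9.99}])
try:
    clean_transactions([{"user_id": 2, "amount": -5.0}])
except ContractViolation as err:
    print("blocked:", err)  # blocked: row 0: negative amount -5.0
```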

Machine Learning Orchestration vs MLOps

MLOps, or DevOps for machine learning, is a systematic way of tackling the unique challenges of deploying and maintaining machine learning models in production. Machine learning orchestration is a component of MLOps that focuses specifically on managing the workflows of ML pipelines.

Like most workflow (or pipeline)-based systems, an MLOps system requires an orchestrator. In this context, it can be called a Machine Learning Orchestrator (or, for now, an MLOx).

MLOx Orchestrators: Focus on Airflow

An MLOx’s job is simply that of an orchestrator, which is a mechanism that can manage and coordinate complex workflows and processes on a particular schedule. One orchestrator often used in MLOps and many other ML-related systems is Apache Airflow; others include:

- Dagster

- Prefect

- Flyte

- Mage

For this article, I will focus on Airflow.

Orchestrating ML Workflows

I like to think of an MLOx as similar to a movie director (or a conductor for an orchestra, but that can be confusing since we would be talking about orchestrating an orchestra). A director has a script that they work from to direct the various processes to deliver the final product, the movie.

In the context of an MLOx, there is a workflow that would be the equivalent of the script. The orchestrator's role is to ensure that the various workflow processes execute on schedule, in the correct sequence, and deal with failure appropriately.
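Dealing with failure appropriately usually starts with retries. The sketch below shows a generic retry-with-exponential-backoff wrapper of the kind orchestrators apply to tasks (Airflow, for example, exposes `retries` and `retry_delay` settings on its operators). The `with_retries` helper and the flaky task are hypothetical.

```python
import time

def with_retries(task, max_attempts: int = 3, base_delay: float = 0.1):
    """Run `task`; after each failure wait base_delay * 2**(attempt - 1) seconds."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as err:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the orchestrator
            delay = base_delay * 2 ** (attempt - 1)
            print(f"attempt {attempt} failed ({err}); retrying in {delay:.2f}s")
            time.sleep(delay)

calls = {"n": 0}

def flaky_extract():
    """Hypothetical task that fails twice (e.g. a timeout) and then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("source database timed out")
    return "rows loaded"

print(with_retries(flaky_extract, base_delay=0.01))  # rows loaded
```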

The Actor-Director of ML Workflows

Airflow adds complexity to this analogy. It also has compute capability, since it can use the environment it runs in to execute any Python code (as can Dagster, Prefect, etc.).

Being extensible and open source, Airflow becomes more like an actor-director: an Airflow task can be an integral part of the workflow, just as a director can play a part in the movie.

MLOps Tool and Orchestrator

With Airflow, you can load data into memory, perform some processing, and then pass the data to the next task. In this way, Airflow can be an MLOps tool and an orchestrator.

It can direct some of the required machine learning operations or act as an orchestrator, instantiating processes on TensorFlow clusters or initiating Spark jobs, etc. In short, an MLOx is an orchestrator doing its job with ML tooling.

MLOps Systems Require Orchestration to Work Efficiently

The orchestrator is an essential component in any MLOps stack, as it is responsible for running your machine learning pipelines. To do so, the orchestrator provides an environment set up to execute the steps of your pipeline. It also ensures that the steps of your pipeline only get executed once all their inputs (outputs of previous steps of your pipeline) are available.
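The rule that a step runs only once all of its inputs exist can be sketched with the incremental `get_ready()`/`done()` protocol of Python's `graphlib.TopologicalSorter`. In this hypothetical example, each step is a function whose parameter names are the upstream steps it consumes, and the runner passes earlier outputs in as arguments; real orchestrators add persistence, retries, and distributed workers on top.

```python
import inspect
from graphlib import TopologicalSorter

# Hypothetical steps: parameter names declare which upstream outputs each needs.
def load():
    return [3, 1, 2]

def clean(load):
    return sorted(load)

def stats(clean):
    return {"mean": sum(clean) / len(clean)}

def report(clean, stats):
    return f"{len(clean)} rows, mean={stats['mean']}"

steps = {f.__name__: f for f in (load, clean, stats, report)}

def run(steps: dict) -> dict:
    """Execute each step as soon as all of its inputs have been produced."""
    graph = {name: set(inspect.signature(f).parameters) for name, f in steps.items()}
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    outputs = {}
    while sorter.is_active():
        for name in sorter.get_ready():  # every input of these steps is available
            inputs = {dep: outputs[dep] for dep in graph[name]}
            outputs[name] = steps[name](**inputs)
            sorter.done(name)  # unblocks steps that depend on this one
    return outputs

print(run(steps)["report"])  # 3 rows, mean=2.0
```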

The features that make Airflow particularly well-suited as an MLOx are:

- DAGs (Directed Acyclic Graphs): DAGs represent ML workflows as tasks and the dependencies between them, and Airflow's UI displays them visually. This makes it easy to see the dependencies between tasks and track a workflow's progress.

- Scheduling: Airflow can schedule ML workflows to run regularly. This helps keep ML models up-to-date and ensures that predictions are generated on time.

- Monitoring: Airflow provides several tools for monitoring ML workflows, such as task status, run durations, logs, and alerts. These can be used to track how ML pipelines are performing and identify potential problems.
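At its core, scheduling means computing when each run should fire. The sketch below enumerates fixed-interval run times between a start and an end with `datetime`, in the spirit of (but much simpler than) Airflow's schedules and data intervals. The `run_times` helper is hypothetical and ignores cron expressions, time zones, and catch-up settings.

```python
from datetime import datetime, timedelta

def run_times(start: datetime, end: datetime, every: timedelta) -> list[datetime]:
    """All run times start, start + every, ... that fall strictly before end."""
    times, current = [], start
    while current < end:
        times.append(current)
        current += every
    return times

# Retrain daily at 02:00 for the first week of June.
runs = run_times(datetime(2025, 6, 1, 2, 0), datetime(2025, 6, 8), timedelta(days=1))
print(len(runs), runs[0], runs[-1])  # 7 2025-06-01 02:00:00 2025-06-07 02:00:00
```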

Think "Airflow and", not "Airflow or"

One complication I see when people are choosing ML tooling is that they look for one tool to do everything, including orchestration. Some of these all-in-one tools include a basic workflow scheduler to cover the minimum requirements, but it will likely be far less capable than Airflow.

Once your MLOx requirements exceed the included orchestrator’s capability, you need to bring in a more capable orchestrator, redo the scheduling work, and probably rewrite much of the code.

Different Roles in MLOps

Another issue I’ve seen is people comparing Airflow to ML tools that do vastly different things, some of which just happen to end in "flow," like MLflow or Kubeflow. MLflow is mainly used for experiment tracking and works entirely differently from Airflow.

Airflow is one component in the vast MLOps tooling space, which spans everything from model registries to experiment tracking to specialized model training platforms. MLOps encompasses many components necessary for effective ML workflow management.

Embracing Structure and Scale

Some people moving into MLOps come from a more experimental data science environment and don’t have experience with the more stringent requirements that DevOps and Data Engineering bring to MLOps. Data scientists work more informally, while MLOps requires a structured approach.

To implement an end-to-end MLOps pipeline, a systematic, repeatable approach is necessary to take the data, extract the features, train the model, and deploy it. Airflow often orchestrates structured processes like these and may require some learning for people used to a more flexible, data science-like approach. If you plan to scale up your MLOps capabilities, you should start with the right tool from the beginning.
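One practical ingredient of a repeatable pipeline is making each run's inputs explicit: fix random seeds and record a fingerprint of the configuration so that two runs can be compared. The sketch below is a hypothetical minimal version using only the standard library; the config keys and the toy extraction, feature, and "training" steps are illustrative.

```python
import hashlib
import json
import random

def config_fingerprint(config: dict) -> str:
    """Stable SHA-256 prefix of the config; key order does not affect the hash."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]

def run_pipeline(config: dict) -> dict:
    rng = random.Random(config["seed"])  # seeded RNG: same config -> same result
    data = [rng.gauss(0, 1) for _ in range(config["n_samples"])]  # stand-in for extraction
    features = [x * config["scale"] for x in data]                # stand-in for features
    model = sum(features) / len(features)                          # stand-in for training
    return {"fingerprint": config_fingerprint(config), "model": model}

cfg = {"seed": 42, "n_samples": 1000, "scale": 2.0}
first = run_pipeline(cfg)
second = run_pipeline(dict(reversed(list(cfg.items()))))  # same config, different key order
print(first == second)  # True
```

Logging the fingerprint alongside each run's outputs makes it easy to tell whether two results came from the same configuration.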

Easy Setup with Existing Tools

A final consideration for using Airflow as an MLOx is that many organizations already have an Airflow implementation doing data orchestration work. If someone already knows how to manage the Airflow infrastructure and can help create and run DAGs, you have everything you need to get your MLOx up and running.

Add to this automated DAG generation tools like Gusty and the Astro SDK or ML-specific DAG generators from ZenML and Metaflow, and you can get a working MLOx without knowing much about Airflow.
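Tools like Gusty generate DAGs from declarative task files instead of hand-written DAG code. The sketch below conveys the idea in a few lines: a JSON spec (YAML is more typical, but JSON keeps the example dependency-free) is turned into a validated dependency graph. The spec format and the `build_dag` function are hypothetical and do not reflect the actual formats of Gusty, the Astro SDK, ZenML, or Metaflow.

```python
import json
from graphlib import TopologicalSorter

# Hypothetical declarative spec: one entry per task with its upstream dependencies.
SPEC = json.loads("""
{
  "tasks": [
    {"id": "extract", "depends_on": []},
    {"id": "features", "depends_on": ["extract"]},
    {"id": "train_xgb", "depends_on": ["features"]},
    {"id": "train_linear", "depends_on": ["features"]},
    {"id": "evaluate", "depends_on": ["train_xgb", "train_linear"]}
  ]
}
""")

def build_dag(spec: dict) -> dict[str, set[str]]:
    """Turn the spec into a dependency graph, rejecting unknown upstream tasks."""
    ids = {t["id"] for t in spec["tasks"]}
    graph = {}
    for task in spec["tasks"]:
        missing = set(task["depends_on"]) - ids
        if missing:
            raise ValueError(f"{task['id']} depends on unknown tasks: {sorted(missing)}")
        graph[task["id"]] = set(task["depends_on"])
    return graph

dag = build_dag(SPEC)
order = list(TopologicalSorter(dag).static_order())
print(order[0], order[-1])  # extract evaluate
```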

Start Building with $10 in Free API Credits Today!

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLMs, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.

Own your model. Scale with confidence.

Schedule a call with our research team to learn more about custom training. We'll propose a plan that beats your current SLA and unit cost.