May 27, 2025

What is Machine Learning Model Drift? Types, Causes and Fixes

Inference Research

Machine learning models are like children—they need consistent care and nurturing to grow up healthy and strong. When you deploy a model into production, it often performs fabulously, minimizing error and making accurate predictions. Over time, as data changes, the model can become confused, leading to poor performance and unreliable predictions. This phenomenon, known as machine learning model drift, can be devastating for businesses relying on accurate, real-time predictions to make critical decisions. The good news is that you can avoid the pitfalls of model drift with timely intervention. In this article, we'll explore the ins and outs of Machine Learning Model Drift, including its causes, types, and monitoring ML models in production to maintain consistently high-performing machine learning models.

One way to detect model drift early is by using AI inference APIs. These tools provide continuous monitoring of your model's performance, helping you identify any irregularities as soon as they occur so you can take action before the drift impacts your business.

What is Model Drift in Machine Learning and Why Does it Happen?

Machine learning models can learn, adapt, and improve. But they're not immune to wear and tear like traditional software systems. A recent MIT and Harvard study found that 91% of ML models degrade over time, based on experiments conducted on 128 model and dataset pairs.

Understanding Model Drift's Impact

Production-deployed machine learning models can degrade significantly over time due to data changes or become unreliable due to sudden shifts in real-world data and scenarios. This phenomenon is known as model drift, and it can pose a significant challenge for ML practitioners and businesses.

Why ML Models Degrade Over Time

Model drift, also called model decay, AI aging, or temporal degradation, refers to the degradation of machine learning model performance over time. The model suddenly or gradually starts to produce less accurate predictions than it did when it was trained and validated.

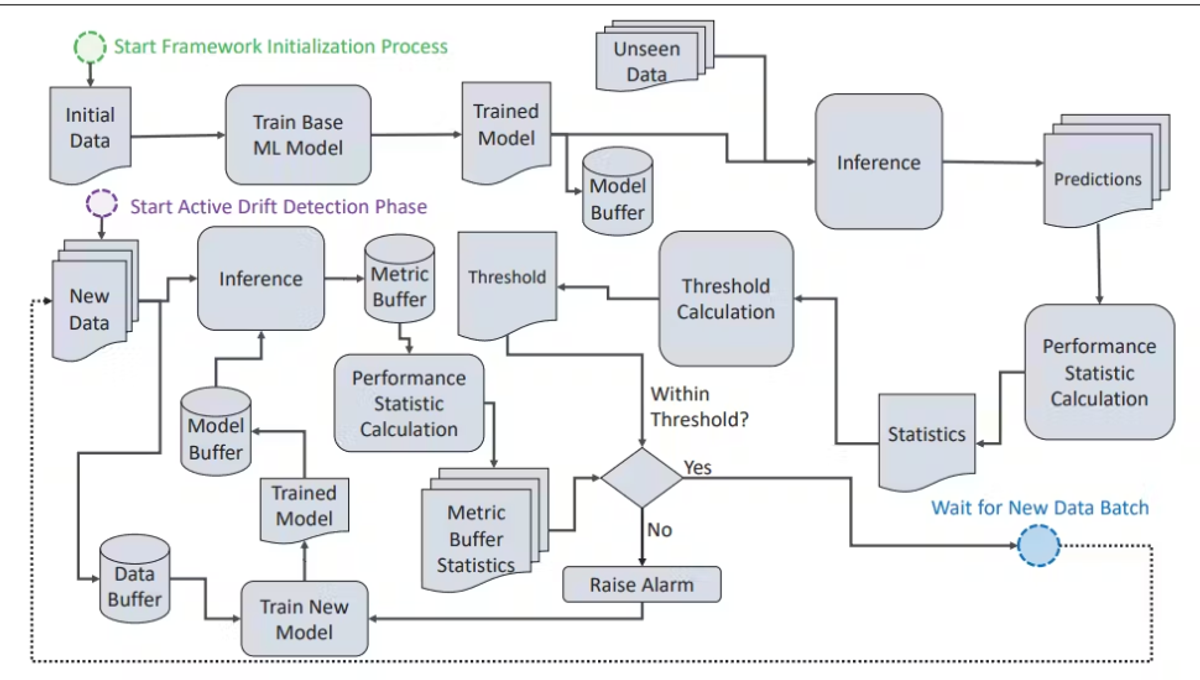

A Generalized Workflow of Model Drift Detection in Machine Learning

What Are the Types of Model Drift?

There are two main types of model drift:

Concept drift

Concept drift happens when the relationship between input variables (or independent variables) and the target variable (or dependent variable) changes. In effect, the definition of what we are trying to predict changes, so the algorithm starts to produce inaccurate predictions. One possible reason is latent features: variables that were not included in the model but whose predictive value increases over time, rendering the model ineffective. This change can be gradual, sudden, or recurring:

Gradual concept drift

The change in fraudulent behavior is an example of gradual concept drift. As fraud detection methods become more sophisticated, fraudsters adapt to evade fraud detection systems by developing new strategies. An ML model trained on historical fraudulent transaction data would be unable to classify a new strategy as fraud. This means that the model's performance would degrade because the classification of what constitutes fraud has changed over time.

Sudden concept drift

Any sudden change in the environment can impact consumer behavior or global markets. For example, the 2021-2022 global chip shortage caused sudden concept drift, disrupting supply chain models that relied on stable conditions. These models failed to accurately predict new supply constraints and demand surges, resulting in operational challenges.

Despite the shortage, global semiconductor sales grew by 25% in 2021, underscoring the need for companies to quickly adapt their models and strategies to manage the unforeseen scarcity and supply chain issues.

Recurring concept drift

This is also called seasonality. For instance, retail sales increase significantly during the Christmas season or on Black Friday. An ML model that does not account for these known recurring trend changes would provide inaccurate predictions for these periods.

Data drift

Data drift occurs when the statistical properties of the input data change. For instance, as an online platform grows, the age distribution of its user base may shift over time. Since younger and older users have different usage habits, a model trained on younger users' data would provide inaccurate predictions for older users' behavior.

The main drift patterns, each with an example and a corrective action, can be summarized as follows:

- Gradual Concept Drift occurs when patterns in data slowly evolve over time. For instance, in fraud detection, criminals constantly adapt their methods, which degrades the model's accuracy. To address this, one should perform feature engineering and retrain the model regularly.

- Sudden Concept Drift happens when there’s an abrupt change in data distribution. An example is the rapid shift in consumer spending during COVID-19, which disrupted demand forecasts based on pre-pandemic data. In such cases, it’s essential to revisit the solution, potentially using a new algorithm, and evaluate whether the existing solution remains relevant.

- Sudden Concept Drift can also arise during real-world emergencies, such as a sudden surge in a new respiratory illness. If a model hasn't been trained on similar events, it may fail to recognize the urgency, resulting in poor triage decisions. In these situations, it's crucial to shift to human intervention, retrain the model, and reevaluate the features used.

- Recurring Concept Drift refers to patterns that repeat over time, like holiday spikes in retail sales. A model that doesn’t factor in these seasonal trends may underperform. To correct this, retrain the model to incorporate seasonality and adjust it for these recurring patterns.

- Feature Data Drift occurs when new types of input data appear that weren’t present during training. For example, a sepsis prediction model might encounter new patient data formats or variables. This requires retraining the model on updated data and revisiting feature engineering strategies.

- Label Data Drift happens when the definition of a target variable changes. A financial model may misclassify credit risk if the meaning of “default” evolves. Corrective actions include retraining on the new labeled data and tuning the model accordingly.

- Feedback Data Drift arises when the model's outcomes influence future data in a biased way. For instance, a recommendation system might favor already popular items, reinforcing their selection and leading to skewed results. Evaluating the current solution’s efficacy and determining whether a new approach is needed are key steps.

- Prediction Drift occurs when the distribution of the model's predictions shifts, often because external factors like regulatory or market changes alter the data it sees. It is a particular concern for financial models used for credit risk or trading. It’s important to analyze the impact of these changes on the business and adapt the models accordingly.

What Causes Model Drift?

Understanding the root cause of model drift in machine learning is crucial. Several factors contribute to model drift in ML models, such as:

Changes in the distribution of real-world data

Drift occurs when the distribution of current data differs from that of the model's training data. Because the training data reflects outdated patterns, the model may not perform well on new data, reducing its accuracy.

Changes in consumer behavior

When the target audience's behavior changes over time, the model's predictions become less relevant. This can occur due to changes in trends, political decisions, climate change, media hype, etc. For example, if a recommendation system is not updated to account for frequently changing user preferences, the system will generate stale recommendations.

Model architecture

If a machine learning model is too complex, i.e., has many layers or connections, it can overfit small and unrepresentative training data and perform poorly on real-world data. On the other hand, if the model is too simple, i.e., it has few layers, it can underfit the training data and fail to capture the underlying patterns.

Data quality issues

Using inaccurate, incomplete, or noisy training data can adversely affect an ML model’s performance.

Adversarial attacks

Model drift can occur when malicious actors intentionally manipulate the input data a model receives, resulting in incorrect predictions.

Model and code changes

New updates or modifications to the model’s codebase can introduce drift if not properly managed.

What are Some Real-World Examples of Model Drift?

Model drift deteriorates ML systems deployed across various industries.

Model Drift in Healthcare

Medical machine learning systems are mission-critical, making model drift an especially dangerous issue. For example, consider an ML system trained for cervical cancer screening. If a new screening method, such as the HPV test, is introduced and integrated into clinical practice, the model must be updated accordingly; otherwise, its performance will deteriorate. In their paper "Data Drift in Medical Machine Learning: Implications and Potential Remedies," Berkman Sahiner and Weijie Chen demonstrate that data drift can be a significant contributor to performance deterioration in medical ML models.

They also show that when a concept shift leads to data drift, a full overhaul of the model is necessary, because a minor adjustment may not yield the desired results.

Model Drift in Finance

Banks use credit risk assessment models when giving out loans to their customers. Changes in economic conditions, employment rates, or regulations can affect a customer’s ability to repay loans. If the model is not regularly updated with real-world data, it may fail to identify customers at higher risk of default, leading to increased loan defaults.

Many financial institutions also rely on machine learning (ML) models to make high-frequency trading decisions, where market dynamics can change in a split second and leave a model trained on earlier conditions out of date.

Model Drift in Retail

Today, most retail companies rely on machine learning (ML) models and recommender systems. In fact, the global recommendation engine market is expected to grow from $5.17B in 2023 to $21.57B in 2028. Companies use these systems to determine product prices and suggest products to customers based on their:

- Past purchase history

- Browsing behavior

- Seasonality patterns

- Other factors

The Impact of Evolving Consumer Behavior

If there is a sudden change in consumer behavior, the model may fail to accurately forecast demand. For instance, suppose a retail store wants to use an ML model to predict its customers' purchasing behavior for a new product, and it has thoroughly trained and tested the model using historical customer data.

Before the product launch, a competitor introduces a similar product, or market dynamics are disrupted by external factors, such as global inflation. These events could significantly influence how consumers shop and, in turn, render the ML model unreliable.

Model Drift in Sales and Marketing

Sales and marketing teams tailor their campaigns and strategies using customer segmentation models. If customer behavior shifts or preferences change, the existing segmentation model may no longer accurately represent the customer base. This can lead to ineffective marketing campaigns and a decline in sales.

What Are the Drawbacks of Model Drift in Machine Learning?

Model drift affects various aspects of model performance and deployment. Besides decreased accuracy, it leads to:

- Poor customer experience

- Compliance risks

- Technical debt

- Flawed decision-making

Model Performance Degradation

When model drift occurs due to changes in consumer behavior, data distribution, or the environment, the model's predictions become less accurate. The consequences can be severe, especially in critical applications such as:

- Healthcare

- Finance

- Self-driving cars

Poor User Experience

Inaccurate predictions can lead to a poor user experience and erode users' trust in the application. This, in turn, may damage the organization's or product's reputation and lead to a loss of customers or business opportunities.

Technical Debt

Model drift introduces technical debt: an accumulation of unaddressed issues that makes the model harder to maintain and improve over time. If model drift occurs frequently, data scientists and ML engineers may need to repeatedly retrain or modify the model to maintain its performance, adding to the overall cost and complexity of the system.

How to Detect Machine Learning Model Drift? The Role of Model Monitoring in ML

Early drift detection is crucial for maintaining the accuracy and reliability of machine learning models. As ML models process new input data over time, they can become less accurate if the underlying data distribution shifts significantly. Detecting data drift early on enables teams to retrain or calibrate their models before they produce erroneous predictions, which can negatively impact business operations.

Comparing Predicted Values to Actual Outcomes

The most accurate way to detect model drift is by comparing the predicted values from a given machine learning model to the actual values. The accuracy of a model deteriorates as the predicted values deviate increasingly from the actual values. A standard metric used by data scientists to evaluate the accuracy of a model is the F1 score, primarily because it encompasses both the precision and recall of the model.

Tailoring Metrics to Detect Model Drift

That said, some metrics are more relevant than others, depending on the situation. For example, type II errors (false negatives) matter most for a cancer-tumor image recognition model, so recall is the metric to watch. Whichever metric you choose, when it falls below a given threshold, you’ll know that your model is drifting!
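As a rough illustration of threshold-based monitoring, the sketch below computes the F1 score for successive batches of predictions and flags any batch that falls below a chosen threshold. The weekly batches, the `drifting_windows` helper, and the 0.8 threshold are hypothetical values picked for this example, not recommendations.

```python
def f1_score(actual, predicted, positive=1):
    """Compute the F1 score for binary labels from paired sequences."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    if tp == 0:
        return 0.0  # no true positives, so F1 is treated as zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def drifting_windows(windows, threshold):
    """Return the indices of windows whose F1 score is below the threshold."""
    return [i for i, (actual, predicted) in enumerate(windows)
            if f1_score(actual, predicted) < threshold]


# Hypothetical weekly batches of (actual labels, predicted labels).
week_1 = ([1, 0, 1, 1, 0, 1], [1, 0, 1, 1, 0, 1])  # all predictions correct
week_2 = ([1, 0, 1, 1, 0, 1], [1, 0, 1, 0, 0, 1])  # one missed positive
week_3 = ([1, 0, 1, 1, 0, 1], [0, 1, 1, 0, 0, 0])  # mostly wrong

alerts = drifting_windows([week_1, week_2, week_3], threshold=0.8)
print("Windows below threshold:", alerts)
```

In practice the actual labels often arrive with a delay, so a check like this usually runs on the most recent window for which ground truth is available.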

Performance Monitoring and Statistical Tests to Detect Drift

There are two other main ways we can detect drift:

Machine Learning Model-Based Approach

This approach trains a model to detect whether the incoming input data has drifted.

Statistical Tests

There are many statistical tests to detect data drift. They are primarily divided into three categories:

- Sequential analysis methods

- Custom models to detect drift

- Time distribution methods

Machine Learning Approaches to Detect Drift

This approach uses machine learning models specifically designed to detect changes in the input data over time. These models are trained on historical data to understand the typical patterns and distributions. When new data is received, the model compares it against the learned patterns to identify deviations. For example:

Autoencoders

These are neural networks trained to compress and then reconstruct data. If the reconstruction error (the difference between the original data and its reconstruction) becomes significantly larger, it indicates that the new data differs from the training data, signaling potential drift.

Drift Detection Models

Models like ADWIN (Adaptive Windowing) or DDM (Drift Detection Method) can be employed. These models are designed to work online, continuously monitoring the data stream for changes.
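To make the online idea concrete, here is a minimal sketch of the core DDM rule: track the running error rate `p` and its standard deviation `s`, remember the lowest `p + s` seen so far, and signal a warning or drift when the current value rises two or three standard deviations above that minimum. The class name `SimpleDDM`, the 30-sample warm-up, and the synthetic error stream are assumptions for illustration; production libraries add resets and other details that are omitted here.

```python
import math


class SimpleDDM:
    """Simplified Drift Detection Method over a stream of 0/1 prediction errors."""

    def __init__(self, min_samples=30, warning_level=2.0, drift_level=3.0):
        self.min_samples = min_samples
        self.warning_level = warning_level
        self.drift_level = drift_level
        self.n = 0
        self.errors = 0
        self.p_min = math.inf
        self.s_min = math.inf

    def update(self, error):
        """Feed one outcome (1 = wrong prediction, 0 = correct) and return a state."""
        self.n += 1
        self.errors += error
        p = self.errors / self.n                # running error rate
        s = math.sqrt(p * (1 - p) / self.n)     # its standard deviation
        if self.n < self.min_samples:
            return "stable"                     # still warming up
        if p + s < self.p_min + self.s_min:
            self.p_min, self.s_min = p, s       # new best operating point
        if p + s > self.p_min + self.drift_level * self.s_min:
            return "drift"
        if p + s > self.p_min + self.warning_level * self.s_min:
            return "warning"
        return "stable"


def first_drift_index(error_stream, detector):
    """Return the index of the first 'drift' signal, or None if there is none."""
    for i, error in enumerate(error_stream):
        if detector.update(error) == "drift":
            return i
    return None


# Synthetic stream: 10% error rate for 200 samples, then 50% afterwards.
stable_part = [1 if i % 10 == 0 else 0 for i in range(200)]
drifted_part = [1 if i % 2 == 0 else 0 for i in range(100)]
detected_at = first_drift_index(stable_part + drifted_part, SimpleDDM())
print("Drift signalled at sample:", detected_at)
```

Because the detector only needs a running count of errors, it can sit next to a deployed model and update with every labeled prediction.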

Ensemble Models

Combining multiple models to monitor different aspects of the data can improve detection accuracy. For instance, an ensemble could include models monitoring statistical properties and structural changes in the data.

Statistical Tests to Detect Drift

Statistical tests are commonly used to detect data drift by analyzing the properties of the data distribution. These tests can be categorized into three main groups:

Sequential Analysis Methods

Page-Hinkley Test

The Page-Hinkley method is a statistical technique used to detect changes in the mean of a time series of data. It is commonly used to monitor the performance of machine learning models and detect changes in the data distribution that may indicate model drift.

Defining Thresholds for Page-Hinkley Drift Detection

To use the Page-Hinkley method, the first step is to define a threshold value and a decision function. The threshold value is a value above which a change in the mean is considered significant, and the decision function is a function that returns a value of 1 if a change has been detected and a value of 0 if no change has been detected.

Next, the running mean of the data series is updated at each time step, and the cumulative deviation of the observations from that mean is tracked. The decision function compares this cumulative deviation against the threshold to determine if a change has occurred. If the decision function returns a value of 1, it indicates that a change has been detected and the model may be drifting.

The Page-Hinkley method is a straightforward and effective approach for detecting changes in the mean of a data series over time. It is beneficial for detecting small changes in the mean that may not be immediately apparent when looking at the data.

Nevertheless, it is crucial to carefully select the threshold value and decision function to ensure that the method is sensitive enough to detect changes in the data without generating false alarms.
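The procedure described above can be sketched in a few lines. This version watches for an upward shift in the mean: it accumulates each observation's deviation from the running mean (minus a small tolerance `delta`) and returns 1 once that cumulative sum rises more than `threshold` above its lowest point. The parameter values and the synthetic stream are assumptions chosen for the example.

```python
class PageHinkley:
    """Page-Hinkley test for an upward shift in the mean of a stream."""

    def __init__(self, delta=0.005, threshold=5.0):
        self.delta = delta            # tolerated magnitude of change
        self.threshold = threshold    # alarm level for the test statistic
        self.n = 0
        self.mean = 0.0
        self.cumulative = 0.0
        self.cumulative_min = 0.0

    def update(self, x):
        """Decision function: return 1 if a change is detected, otherwise 0."""
        self.n += 1
        self.mean += (x - self.mean) / self.n          # running mean
        self.cumulative += x - self.mean - self.delta  # cumulative deviation
        self.cumulative_min = min(self.cumulative_min, self.cumulative)
        return 1 if self.cumulative - self.cumulative_min > self.threshold else 0


# Synthetic stream: values around 1.0 for 100 steps, then around 2.0.
before = [0.9 if i % 2 == 0 else 1.1 for i in range(100)]
after = [1.9 if i % 2 == 0 else 2.1 for i in range(50)]

detector = PageHinkley()
first_alarm = next(
    (i for i, x in enumerate(before + after) if detector.update(x) == 1), None
)
print("Change detected at step:", first_alarm)
```

A lower `threshold` reacts faster but raises more false alarms, which is the trade-off the surrounding text warns about.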

CUSUM (Cumulative Sum Control Chart)

This method detects shifts in the mean value of a monitored process over time. It is effective for identifying small and consistent changes in the data.
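One common formulation is the two-sided tabular CUSUM, sketched below under the assumption that a target mean is known from a stable reference period. Deviations larger than a slack value accumulate in an upper or lower sum, and an alarm is raised when either sum exceeds a decision threshold. The class name and the target, slack, and threshold values are hypothetical.

```python
class TwoSidedCusum:
    """Two-sided tabular CUSUM around a known target mean."""

    def __init__(self, target, slack, threshold):
        self.target = target        # expected mean under stable conditions
        self.slack = slack          # deviation that is ignored as noise
        self.threshold = threshold  # alarm level for either cumulative sum
        self.s_hi = 0.0
        self.s_lo = 0.0

    def update(self, x):
        """Feed one observation and return True if a shift is signalled."""
        self.s_hi = max(0.0, self.s_hi + (x - self.target - self.slack))
        self.s_lo = max(0.0, self.s_lo + (self.target - x - self.slack))
        return self.s_hi > self.threshold or self.s_lo > self.threshold


# Synthetic readings: mean 10.0 for 20 steps, then a small shift to 10.5.
readings = [10.0 + (0.1 if i % 2 else -0.1) for i in range(20)]
readings += [10.5 + (0.1 if i % 2 else -0.1) for i in range(30)]

chart = TwoSidedCusum(target=10.0, slack=0.25, threshold=2.1)
shift_at = next((i for i, x in enumerate(readings) if chart.update(x)), None)
print("Shift signalled at step:", shift_at)
```

The shift here is only half a unit, yet the sums build up steadily after it starts, which shows why CUSUM suits small but persistent changes.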

Custom Models to Detect Drift

KL Divergence

Kullback-Leibler divergence measures the difference between two probability distributions. It can be used to compare the distribution of new data with that of the training data.

Chi-Square Test

This test compares the observed frequencies of data with the expected frequencies. It is useful for detecting distribution changes in categorical data.
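As a small worked example, the sketch below treats the category proportions in the training data as the expected distribution and computes the Pearson chi-square statistic for a production sample. The device categories and counts are made up, and 5.991 is the standard critical value for 2 degrees of freedom at the 5% significance level. Treating the training proportions as fixed is a simplifying assumption, and categories that appear only in production are ignored here.

```python
def chi_square_statistic(reference_counts, observed_counts):
    """Pearson chi-square statistic of observed counts against reference proportions."""
    reference_total = sum(reference_counts.values())
    observed_total = sum(observed_counts.values())
    statistic = 0.0
    for category, reference_count in reference_counts.items():
        # Expected count if production followed the training proportions.
        expected = observed_total * reference_count / reference_total
        observed = observed_counts.get(category, 0)
        statistic += (observed - expected) ** 2 / expected
    return statistic


# Hypothetical counts of a categorical feature (device type).
training = {"mobile": 500, "desktop": 400, "tablet": 100}
production = {"mobile": 140, "desktop": 50, "tablet": 10}

CRITICAL_VALUE_DF2 = 5.991  # chi-square critical value, df = 2, alpha = 0.05

statistic = chi_square_statistic(training, production)
drift_detected = statistic > CRITICAL_VALUE_DF2
print(f"chi-square = {statistic:.2f}, drift detected: {drift_detected}")
```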

Jensen-Shannon Divergence (JSD)

Jensen-Shannon Divergence measures the similarity between two probability distributions. It is suitable in cases where the underlying data distributions are not easily compared using traditional statistical tests, such as when the distributions are:

- Multimodal

- Complex

- Have different shapes

Unlike KL Divergence, it is symmetric and always finite, which makes it less sensitive to outliers and a robust choice for noisy data.
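The following sketch compares the two divergences on binned distributions. It assumes both inputs are lists of probabilities over the same bins, and the example distributions are invented. KL divergence becomes infinite when the reference assigns zero probability to a bin that the new data occupies, while Jensen-Shannon divergence stays finite and gives the same value in both directions.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) in bits; infinite if q is zero where p is not."""
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0:
            continue  # terms with zero probability contribute nothing
        if q_i == 0:
            return math.inf
        total += p_i * math.log2(p_i / q_i)
    return total


def js_divergence(p, q):
    """Jensen-Shannon divergence in bits, bounded between 0 and 1."""
    mixture = [(p_i + q_i) / 2 for p_i, q_i in zip(p, q)]
    return 0.5 * kl_divergence(p, mixture) + 0.5 * kl_divergence(q, mixture)


# Hypothetical binned distributions of one feature.
training_dist = [0.5, 0.3, 0.2, 0.0]        # last bin never seen in training
production_dist = [0.25, 0.25, 0.25, 0.25]  # last bin now occupied

kl_forward = kl_divergence(training_dist, production_dist)
kl_backward = kl_divergence(production_dist, training_dist)
jsd = js_divergence(training_dist, production_dist)

print("KL(training || production):", kl_forward)
print("KL(production || training):", kl_backward)
print("JSD:", jsd)
```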

Population Stability Index

The Population Stability Index (PSI) measures how much the distribution of a variable has changed between two samples or over time. The variable is either categorical or grouped into bins, and the share of observations in each category or bin is compared across the two datasets.

It is commonly used to monitor changes in the characteristics of a population and to identify potential problems with the performance of a machine learning model.

Unveiling Distributional Shifts

A high PSI value indicates a significant difference between the distributions of the variable in the two datasets, which may suggest model drift. If the distribution of a variable has changed significantly, or if several variables have changed to some extent, it may be necessary to recalibrate or rebuild the model to improve its performance.
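A minimal sketch of the PSI calculation follows, assuming the variable has already been grouped into the same bins for both datasets and expressed as proportions. The bin proportions are invented, and the interpretation bands in the comments (below 0.1 stable, 0.1 to 0.25 moderate shift, above 0.25 significant shift) are a widely quoted rule of thumb, not a formal standard.

```python
import math


def population_stability_index(expected_props, actual_props, eps=1e-6):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    psi = 0.0
    for expected, actual in zip(expected_props, actual_props):
        # Clamp tiny proportions so empty bins do not cause division by zero.
        expected = max(expected, eps)
        actual = max(actual, eps)
        psi += (actual - expected) * math.log(actual / expected)
    return psi


def interpret_psi(psi):
    """Map a PSI value to a rule-of-thumb label (thresholds are conventions)."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift"
    return "significant shift"


# Hypothetical share of records per bin at training time and in production.
baseline = [0.25, 0.25, 0.25, 0.25]
current = [0.10, 0.20, 0.30, 0.40]

psi_value = population_stability_index(baseline, current)
print(f"PSI = {psi_value:.3f} ({interpret_psi(psi_value)})")
```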

Time Distribution Methods

Kolmogorov-Smirnov (KS) Test

The Kolmogorov-Smirnov (K-S) test is a non-parametric statistical test used to determine whether two sets of data come from the same distribution. It can test whether a sample comes from a specific population or compare two samples to determine if they come from the same population. The null hypothesis is that the distributions are the same. If this hypothesis is rejected, it suggests that the data has drifted.
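To show what the test measures, the sketch below computes the two-sample K-S statistic, which is the largest gap between the two empirical cumulative distribution functions, and compares it with the usual large-sample critical value. The samples are synthetic, and the critical-value formula is an approximation that assumes reasonably large samples. A statistics library would normally be used to obtain an exact p-value.

```python
import math
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Largest absolute gap between the empirical CDFs of two samples."""
    a = sorted(sample_a)
    b = sorted(sample_b)
    largest_gap = 0.0
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)  # share of a that is <= x
        cdf_b = bisect_right(b, x) / len(b)  # share of b that is <= x
        largest_gap = max(largest_gap, abs(cdf_a - cdf_b))
    return largest_gap


def ks_critical_value(n, m, alpha=0.05):
    """Large-sample approximation of the two-sample critical value."""
    c_alpha = math.sqrt(-math.log(alpha / 2) / 2)
    return c_alpha * math.sqrt((n + m) / (n * m))


# Synthetic feature values: production is shifted upward by 30 units.
training_values = list(range(100))
production_values = [x + 30 for x in range(100)]

d_statistic = ks_statistic(training_values, production_values)
critical = ks_critical_value(len(training_values), len(production_values))
reject_null = d_statistic > critical
print(f"D = {d_statistic:.2f}, critical = {critical:.3f}, drift: {reject_null}")
```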

Mann-Whitney U Test

This test compares the ranks of two independent samples to determine if they come from the same distribution. Because it is non-parametric, it is suitable for detecting drift in data that is not normally distributed.

T-Test

This test compares the means of two groups to determine if there are significant differences. It helps detect drift in normally distributed data.

These approaches and tests provide a comprehensive toolkit for detecting data drift, each of which is suitable for different data and drift patterns. By combining these methods, organizations can ensure their machine-learning models remain accurate and reliable.

Best Practices for Improving Model Performance and Reducing Model Drift

Every machine learning model requires specific evaluation and monitoring metrics to accurately reflect its performance. Establishing an appropriate baseline performance is crucial for accurately comparing metric values. The selected metrics should align with the specific objectives and features of the model.

For example, classification models are best evaluated using accuracy, precision, and recall metrics, which measure how well the model differentiates between different classes. Regression models are assessed using metrics like:

- Mean absolute error

- Mean squared error

- Root mean squared error

- R-squared

Evaluating Model Performance with Prediction-Actuality Metrics

Regression metrics such as mean absolute error focus on the difference between predicted and actual values. Natural language processing models utilize metrics such as BLEU, ROUGE, and perplexity to evaluate the quality and relevance of generated text against reference texts. When selecting your metric, understand its underlying principles and limitations.

For instance, a model trained on imbalanced data may exhibit high accuracy values but fail to account for less frequent events. In such cases, metrics like the F1 score or the AUC-ROC curve would provide a more comprehensive assessment.
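The imbalance problem is easy to reproduce with a toy example. In the sketch below, a hypothetical model always predicts the majority class on a dataset with 95 negatives and 5 positives. Accuracy looks strong, while recall and F1 reveal that the model never finds a positive case. All data here is synthetic.

```python
def accuracy(actual, predicted):
    """Share of predictions that match the actual labels."""
    return sum(a == p for a, p in zip(actual, predicted)) / len(actual)


def recall_and_f1(actual, predicted, positive=1):
    """Return (recall, F1) for the positive class."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    if precision + recall == 0:
        return recall, 0.0
    return recall, 2 * precision * recall / (precision + recall)


# Synthetic imbalanced labels: 95 negatives followed by 5 positives.
actual_labels = [0] * 95 + [1] * 5
majority_predictions = [0] * 100  # a model that always predicts "negative"

acc = accuracy(actual_labels, majority_predictions)
rec, f1 = recall_and_f1(actual_labels, majority_predictions)
print(f"accuracy = {acc:.2f}, recall = {rec:.2f}, F1 = {f1:.2f}")
```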

Keep an Eye on Your Metrics

Regularly tracking your machine learning model’s performance metrics, such as accuracy, precision, recall, F1 score, and AUC-ROC curve, is crucial for spotting deviations from expected performance. This can be accomplished with automated monitoring tools or by manually reviewing model predictions and performance reports. Monitoring these metrics helps ensure that your model remains accurate and reliable over time.

Analyzing Data Distribution

Constantly monitoring the data distribution of input features and target variables over time helps detect any shifts or changes that may indicate model drift. This can be done using statistical tests, anomaly detection algorithms, or data visualization techniques.

Setting Up Data Quality Checks

Accurate data is necessary for good and reliable model performance. Implementing robust data quality checks helps identify and address errors, inconsistencies, or missing values in the data that could impact the model’s training and predictions. You can use data visualization techniques and interactive dashboards to track these changes in data quality over time.

Leverage Automated Monitoring Tools

Automated monitoring tools offer a more streamlined approach to tracking model performance and data quality throughout the machine learning lifecycle. They provide real-time alerts, historical tracking with observability, and logging features to facilitate proactive monitoring and intervention. Automated tools also reduce the team’s overhead costs.

Continuous Retraining and Model Versioning

Periodically retraining machine learning models with updated data helps teams adapt to changes in data distribution and maintain optimal performance. Retraining can be done manually or automated with MLOps techniques that deploy the new model as soon as training is complete. These include:

- Continuous monitoring

- Continuous training

- Continuous deployment

Model versioning makes it possible to track the performance of different model versions and compare their effectiveness. Data scientists can then identify the best-performing model and revert to previous versions if necessary.

Human-in-the-Loop Monitoring

It is crucial to have an iterative review and feedback process conducted by expert human annotators and data scientists. This process can validate the detected model drifts and decide whether they are significant or temporary anomalies. Human-in-the-loop monitoring also helps determine when and how to retrain the model when model drift is detected.

MLOps

MLOps is a set of practices that can be used to create sustainable machine learning models. This includes automating the building, testing, and deployment of models, as well as monitoring and managing their performance over time. By using MLOps, it’s possible to detect and prevent model drift early and resolve issues before the model degrades significantly.

Start Building with $10 in Free API Credits Today!

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLM models, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.
