Jun 26, 2025

Complete Batch Learning vs. Online Learning Starter Guide

Inference Research

In machine learning, accurate predictions rely on up-to-date data. When the data used to train a model differs from the data encountered in the real world, the model's performance can decline. The best way to avoid this scenario? Continuous learning. In machine learning, continuous learning is like the guitar player who practices new songs every day, rather than waiting until concert week to learn them. To fully grasp these concepts, it's also essential to understand What is Inference in Machine Learning. This article will help you clearly understand the difference between batch learning and online learning in machine learning so you can confidently choose the right approach for building accurate, efficient models.

Inference's AI inference APIs can help you get up and running with machine learning more quickly, whether you choose batch or online learning. With our solution, you can deploy models to production fast and start making predictions while improving your model.

What is Batch Learning and Online Learning?

Batch learning trains machine learning models in batches: the model is retrained at regular intervals, such as:

- Weekly

- Bi-weekly

- Monthly

- Quarterly

All Data, All the Time

In batch learning, the system is not capable of learning incrementally. The models must be trained using all the available data every single time. The data gets accumulated over a period of time. The models are then trained with the collected data at periodic intervals.

The Resource Demands of Batch Models

Model training requires significant time and computing resources, so it’s typically done offline. After training, models are deployed into production and run without further learning. In batch learning, also known as offline learning, the deployed model only improves at regular intervals, when a new model trained on newer data replaces it.

Static Nature of Batch Models

Building offline models or models trained in a batch manner requires training the models with the entire training dataset. Improving the model's performance would require retraining it with the whole training dataset again. These models are static, meaning that once they are trained, their performance will not improve unless a new model is re-trained.

Combating Model Drift Through Retraining

The model’s performance decays over time as the world evolves while the model remains unchanged—a phenomenon known as model or data drift. The solution is to retrain it regularly on up-to-date data. Offline models or models trained using batch learning are deployed in the production environment by replacing the old model with the newly trained model.

Automated Batch Model Updates

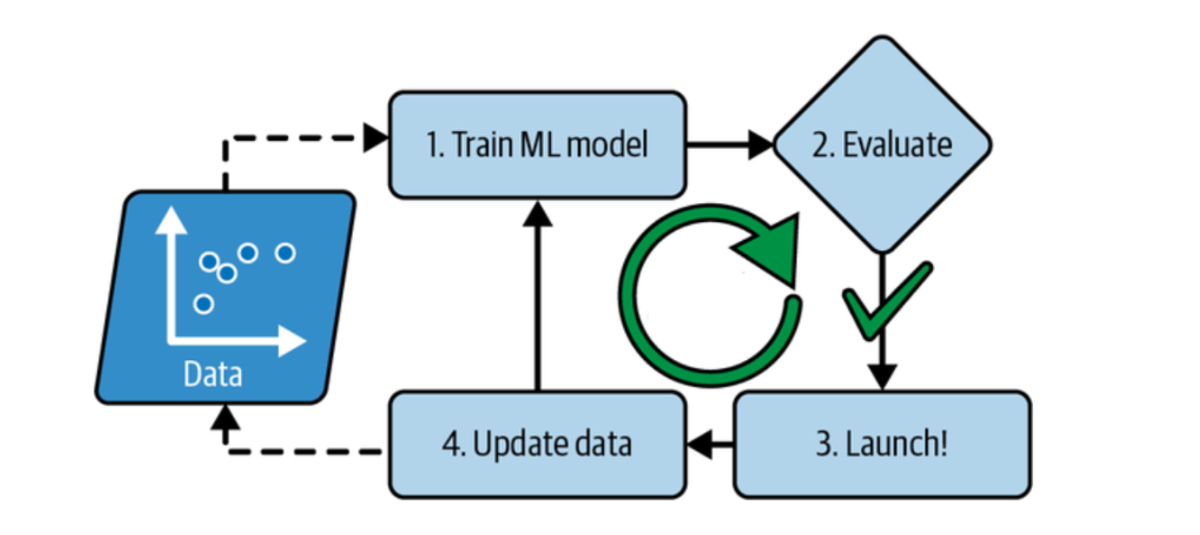

For the model to learn about the new data, it must be trained from scratch with all the data, and the old model must then be replaced with the latest one. Because this cycle repeats, the entire process of training, evaluating, and deploying a batch learning system can be automated.
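The retrain-and-replace cycle can be sketched as follows. This is a minimal illustration, assuming a toy one-feature linear model fit by ordinary least squares; the names `fit_batch`, `all_data`, and `production_model` are hypothetical and only show that each cycle trains on the full accumulated dataset.

```python
def fit_batch(data):
    """Fit y = slope * x + intercept by ordinary least squares on the full dataset."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in data)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in data)
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


# Data accumulated so far (illustrative values on the line y = 2x + 1).
all_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
production_model = fit_batch(all_data)

# A new period's data arrives: retrain from scratch on everything,
# then replace the old production model with the new one.
new_period = [(3.0, 7.0), (4.0, 9.0)]
all_data.extend(new_period)
production_model = fit_batch(all_data)

slope, intercept = production_model
print(f"slope={slope:.2f}, intercept={intercept:.2f}")
```

Note that the cost of `fit_batch` grows with the size of `all_data`, which is why this cycle is usually scheduled rather than run on every new record.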

Optimizing Batch Training Frequency

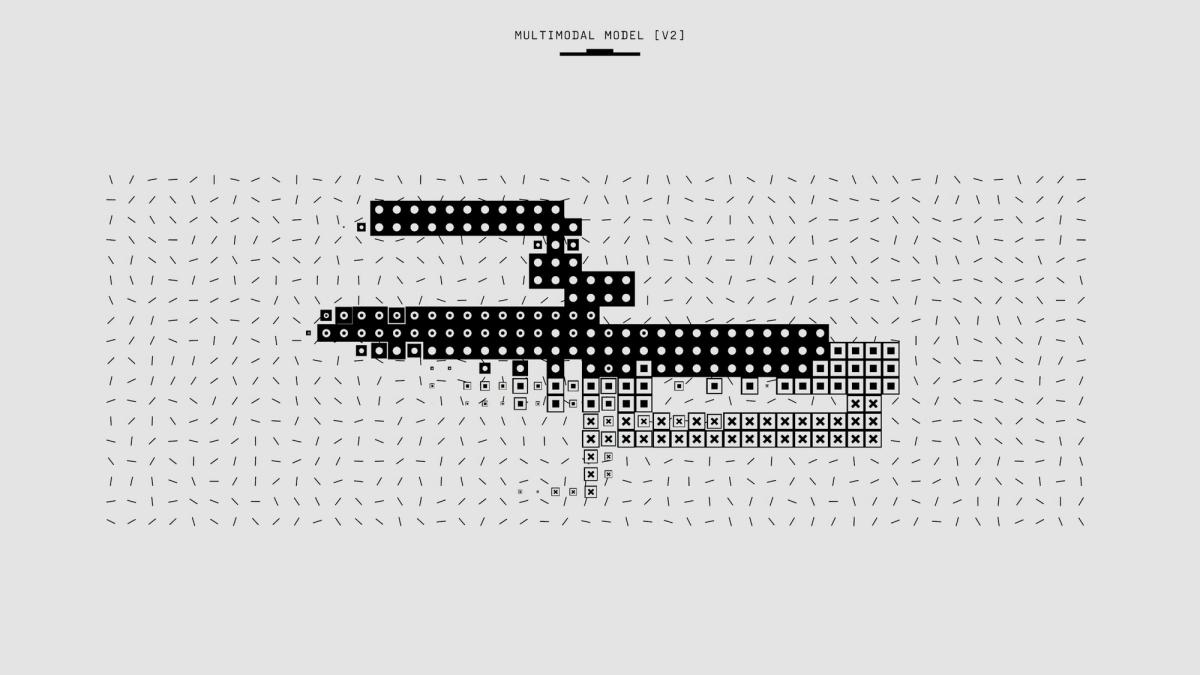

The picture above represents the automation of batch learning. Model training using the whole dataset can take several hours. Thus, it is recommended to run the batch infrequently, for example weekly, as training on the complete set of data requires a significant amount of computing resources (CPU, memory space, disk space, disk I/O, network I/O, etc.).

Key Characteristics of Batch Learning

- Data Processing: Trained on the entire dataset at once.

- Model Update: Parameters in a model are updated infrequently, and earlier versions may need to be retrained using the entire dataset.

- Resource-Intensive: Extremely computationally and memory-intensive, where a large amount of data is crunched.

- Predictive Performance: In some cases, it may achieve very high accuracy because of a detailed analysis of the data used in the training phase.

When to Use Batch Learning

You might wonder: When is batch learning ideal? Batch learning excels in scenarios where your dataset is static or changes infrequently. It’s perfect for offline processing where you can afford to spend time on training, like:

- Image Classification: You typically use large, labeled datasets, such as ImageNet, where the data is fixed, and the model needs to be trained once for long-term use.

- Predictive Maintenance: Batch learning is effective when you have years of sensor data from machinery and need to build a model to predict future failures.

In these cases, the computational overhead of batch learning is justified because the data is stable, and the model doesn’t need to be updated frequently.

What is Online Learning?

In online learning, training occurs incrementally by continuously feeding data as it arrives or in small groups, also known as mini-batches. Each learning step is fast and inexpensive, enabling the system to learn about new data as it becomes available on the fly.

Online learning is particularly well-suited for machine learning systems that receive data as a continuous stream (e.g., stock prices) and require rapid or autonomous adaptation to changes.
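A minimal sketch of incremental training, assuming a linear model with squared error loss updated by stochastic gradient descent. The `stream` generator is a hypothetical stand-in for a real data feed, and `sgd_step` is an illustrative name rather than any library's API.

```python
def sgd_step(weights, bias, x, y, lr=0.1):
    """One online update for a linear model with squared error loss."""
    pred = sum(w * xi for w, xi in zip(weights, x)) + bias
    error = pred - y
    new_weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return new_weights, bias - lr * error


def stream():
    """Hypothetical data stream: y = 3 * x + 1 over a repeating cycle of x values."""
    for t in range(5000):
        x = (t % 10) / 10.0
        yield [x], 3.0 * x + 1.0


weights, bias = [0.0], 0.0
for x, y in stream():
    # Each sample is used for one cheap update and is not stored afterwards.
    weights, bias = sgd_step(weights, bias, x, y)

print(weights, bias)
```

Each call to `sgd_step` touches only one sample, so the cost per update stays constant no matter how much data has already flowed through.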

Resource Efficiency in Online Learning

It is also a good option if you have limited computing resources. Once an online learning system has learned about new data instances, it does not need them anymore, so you can discard them (unless you want to be able to roll back to a previous state and “replay” the data) or move the data to another form of storage (warm or cold storage) if you are using the data lake.

This can save a considerable amount of space and cost. The diagram given below represents online learning.

Online Learning's Out-of-Core Advantage

Online learning algorithms can also be used to train systems on large datasets that cannot fit in a single machine’s main memory (this is also known as out-of-core learning). The algorithm loads a portion of the data, performs a training step on that data, and repeats the process until it has processed all the data.
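The load-a-portion, train, repeat loop might look like the sketch below. It assumes a one-weight linear model and a lazily generated row source standing in for a dataset on disk; `row_source` and `read_chunks` are hypothetical helpers, not part of any library.

```python
from itertools import islice


def row_source(n_rows):
    """Stand-in for a dataset too large for memory; rows are produced lazily."""
    for i in range(n_rows):
        x = (i % 100) / 100.0
        yield x, 2.0 * x  # target follows y = 2 * x


def read_chunks(rows, chunk_size):
    """Yield successive lists of at most chunk_size rows."""
    rows = iter(rows)
    while True:
        chunk = list(islice(rows, chunk_size))
        if not chunk:
            return
        yield chunk


w, lr, chunks_seen = 0.0, 0.5, 0
for chunk in read_chunks(row_source(10_000), chunk_size=100):
    # One training step on this chunk: average gradient of the squared error.
    grad = sum((w * x - y) * x for x, y in chunk) / len(chunk)
    w -= lr * grad
    chunks_seen += 1

print(w, chunks_seen)
```

Only one chunk of 100 rows is held in memory at any time, regardless of the total number of rows.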

Governing Adaptability in Online Models

One of the key aspects of online learning is the learning rate: how fast the system adapts to new data. A system with a high learning rate adapts rapidly but also tends to forget what it learned from older data quickly. A system with a low learning rate has more inertia: it learns more slowly, is less sensitive to noise in new data, and behaves more like batch learning.
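The effect of the learning rate can be illustrated with a toy tracker that nudges a single estimate toward each incoming value. This is a simplified sketch, not a full model; the `track` function and the stream values are made up for illustration.

```python
def track(stream, lr):
    """Move an estimate toward each new value by a fraction lr of the gap."""
    estimate = 0.0
    for value in stream:
        estimate += lr * (value - estimate)
    return estimate


# The stream sits at 0.0 for a while, then abruptly shifts to 10.0.
stream = [0.0] * 50 + [10.0] * 10

fast = track(stream, lr=0.5)   # high learning rate
slow = track(stream, lr=0.01)  # low learning rate

print(f"fast={fast:.3f}, slow={slow:.3f}")
```

After ten samples at the new level, the high-rate tracker has almost fully adopted it and retains essentially nothing of the earlier regime, while the low-rate tracker has barely moved.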

Key Characteristics of Online Learning

- Data Processing: Analyzes arriving data in small packets that come in a stream.

- Model Update: Models are constantly evolving, particularly in real-time or nearly real-time environments.

- Resource Efficient: Requires less memory and compute at any given point in the computation.

- Adaptive Performance: Adjusts its results as the data changes, which suits changing environments.

When to Use Online Learning

You might be thinking: Where does online learning shine? It’s ideal for dynamic environments where data is constantly evolving or where real-time predictions are required.

- Stock Market Predictions: Prices change every second. Online learning enables your model to update with each new piece of data, ensuring it always has the most current information.

- Recommender Systems (e.g., Netflix, YouTube): As users interact with content, the system learns their preferences in real-time, updating recommendations immediately.

Online learning’s ability to adapt quickly is critical in these cases. Nevertheless, there’s always a trade-off: because the model continuously evolves, it might not always converge to the “best” solution as batch learning might.

Why Online Learning vs Batch or Offline Learning?

Before comparing batch and online learning in more detail, let’s understand why we need different types of model training or learning in the first place.

The key aspect to understand is the data. When the data is limited and comes at regular intervals, we can adopt both types of learning based on the business requirements. When we talk about big data, the following aspects become the key considerations when deciding the mode of learning:

Volume

The data comes in large volumes. Many preprocessing steps need to be performed to make it available for:

- Training

- Prediction

This would require:

- IT infrastructures

- Software systems

- Appropriate expertise

- Experience in data processing

Velocity

As with high data volume, data arriving at high speed (for example, tweets) can also become a key criterion.

Variety

Similar to volume and velocity, the variety of the data matters: it can come in many different forms. Examples are data for aggregator services such as:

- Uber

- Airbnb

An appropriate selection of learning methods, such as batch learning or online learning, is made to manage big data while delivering on the business requirements.

The Differences Between Batch Learning and Online Learning

Online learning and batch learning, also known as offline learning, are both methods of training machine learning models. The key difference between the two approaches is how they use data to train the model. In batch learning, the model learns from the whole training dataset at once. The entire dataset must be present before any training can occur. Once the model is trained and deployed, it can make predictions on new data.

Retraining Process

If the incoming data changes over time or the model needs to be updated, a new batch training process must be conducted. This involves:

- Collecting all the latest data

- Retraining the model

- Deploying the updated version

In contrast, online learning takes a different approach. Instead of waiting for a large dataset to accumulate, online learning enables the machine learning model to start making predictions after only seeing a small portion of the data. As new data comes in, the model incrementally updates its knowledge to adapt to changes over time.

Why It Matters: Choosing the Right Approach for Your Needs

Choosing between online learning and batch learning can affect the performance of your machine learning project. The right approach will depend on:

- The specific requirements of your business use case

- The nature of your data

Consider an e-commerce platform like Amazon. User behavior constantly changes, and the model that powers product recommendations needs to adapt quickly as trends shift. Online learning lets you update the model promptly, ensuring the recommendations stay relevant.

Financial Forecasting

Consider long-term financial forecasting. You may have stable, historical data that doesn’t need constant updates. Batch learning allows you to process large volumes of this data simultaneously, ensuring your model is robust without needing constant fine-tuning.

Knowing when to use batch or online learning isn’t just about technical preference. It’s about optimizing performance for the specific demands of your project.

Batch Learning vs. Online Learning

Batch learning and online learning represent opposite approaches to machine learning model training. While batch learning builds a model on an entire dataset simultaneously, online learning incrementally updates a model as new data arrives.

How Does Batch Learning Work?

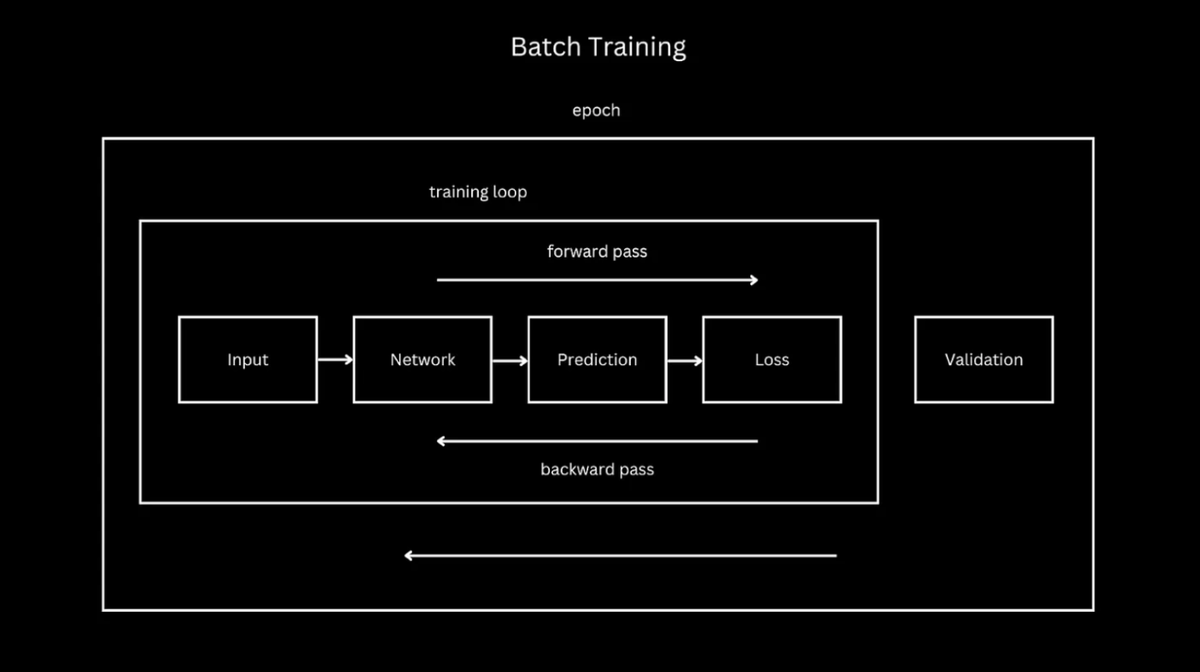

In the beginning, batch learning starts with a model and a full dataset. The model’s parameters are usually initialized to random values. From there, the model begins training, which consists of feeding the data into the model to learn patterns and make predictions.

The training data is split into two sets: a training set and a test set. The model learns from the training set, and the test set is used to validate its accuracy and ensure it can generalize its predictions to unseen data.

Smaller Batches

The training set is divided into smaller batches. This:

- Allows the model to train on less data at a time

- Improves memory efficiency

The model runs through these batches in a series of forward and backward passes to optimize its parameters.

Epoch Completion

After the model has completed an epoch, which refers to one pass over all the data, it validates its performance against the test set. This process is repeated many times until the model achieves an optimal level of performance.

Once the model is finished training, it can be deployed into production, where it can make predictions on new data.

Static Data

Batch learning works well when there is a large amount of static data. Once trained, the model will make accurate predictions until it is retrained on a new dataset.

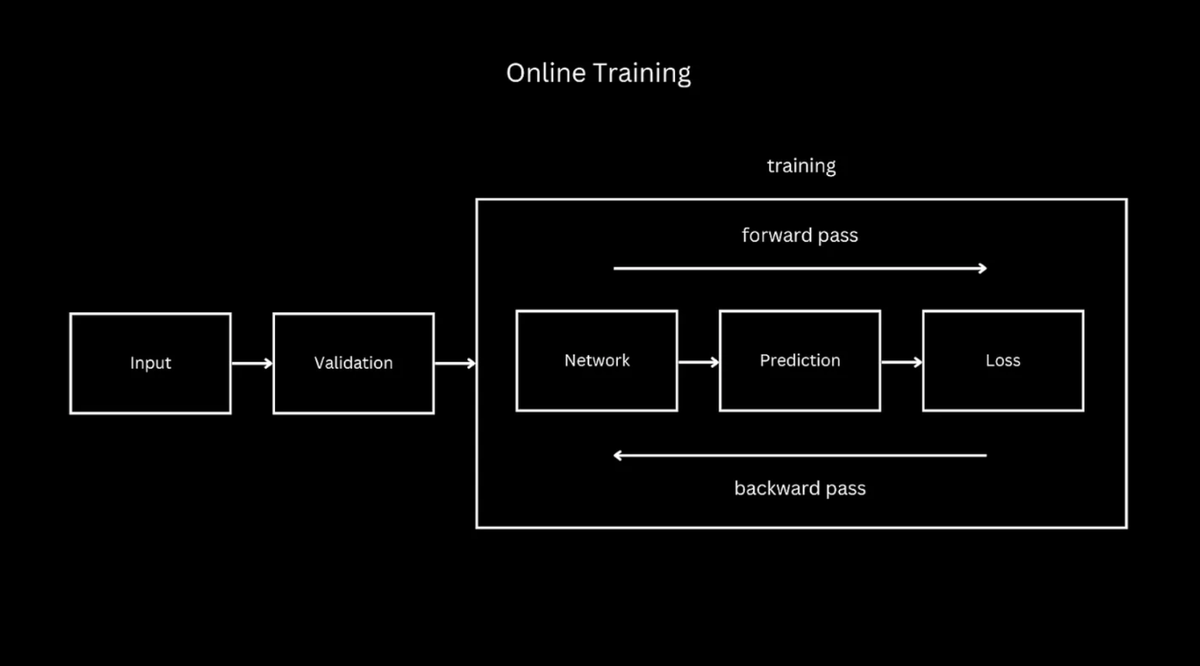

How Does Online Learning Work?

Online learning works much differently than batch learning. Instead of starting with a full dataset, online learning receives data samples on the fly. This means there’s a steady stream of data samples to feed into the model.

The first difference between batch and online training is that online training requires significantly more data, which needs a low-latency input to the model.

Sequential Training

Similar to batch training, the model does a forward and backward pass on samples it receives to adjust weights. The second difference occurs here: Once a model trains on a sample, it generally doesn’t see that sample again. This is called sequential training. Online models will train on a data sample once and then move on to the other data samples coming into the system.

Since there is more than enough data to train, there’s no need to batch it and revisit to improve model performance.

Defining Epochs

If we never revisit data, what’s an epoch in online learning? It can be whatever you define it as. Similar to batch training, it’s a group of training samples, but since there isn’t a definitive training set, it isn’t defined by the total number of training samples. It’s characterized by either:

- A set number of training samples

- A period of time spent training on data

Just as in batch training, epochs are also completed in online training.

Progressive Validation

Unlike batch training, we don’t validate our model at the end of an epoch in online learning. How do we validate an online model? This is our fourth difference, and it’s something fascinating. Since our model constantly trains and only sees a data sample once, we can validate it before training on it.

Like batch training, we have to use data we haven’t seen to validate our model. Unlike batch training, we don’t visit data samples multiple times, so there’s no need to separate data for validation. This process is called progressive validation.
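Progressive validation can be sketched as a predict-then-train loop. The example below assumes a two-parameter linear model and a synthetic stream; `progressive_validation` is an illustrative name, and the stream values are invented for the demonstration.

```python
def progressive_validation(stream, lr=0.1):
    """Score each sample before training on it, then update the model."""
    w, b = 0.0, 0.0
    abs_errors = []
    for x, y in stream:
        pred = w * x + b
        abs_errors.append(abs(pred - y))  # validate first: the sample is still unseen
        err = pred - y
        w -= lr * err * x                 # then train on that same sample
        b -= lr * err
    return w, b, abs_errors


# Synthetic stream following y = 3 * x + 1.
stream = [((t % 10) / 10.0, 3.0 * ((t % 10) / 10.0) + 1.0) for t in range(5000)]
w, b, abs_errors = progressive_validation(stream)

early = sum(abs_errors[:100]) / 100   # mean error on the first 100 samples
late = sum(abs_errors[-100:]) / 100   # mean error on the last 100 samples
print(f"early={early:.4f}, late={late:.6f}")
```

Because every error is recorded before the corresponding update, the running error is always measured on data the model has not yet trained on, without holding out a separate validation set.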

Continuous Training

Like batch training, validation assesses a model's performance during the training process. This training loop continues as long as data is coming in, and we need this model to serve user requests. Data will continue to stream into the system, and the model will use that data to train and improve model weights.

Our fifth difference between batch and online training is that we don’t stop training, at least not until another model is ready to replace the one we’re training. This happens when a different model (an architecture change, not just weight updates) performs better at the task, which usually means better accuracy and/or resource usage in production systems.

Offline Replacement

A better model is determined via experimentation, or training another model(s) offline, comparing it to our online model, and putting it online (in serving) when it performs better. Notice how the replacement model is trained offline. Models are trained offline to catch up to the leading edge of incoming data. We could start training a model online, but that would take too long for a model to see the performance needed to replace current models. This isn’t justifiable from a time or resource standpoint.

We can train the model offline with many training samples already going through the system. This means it isn’t getting the cutting-edge data, but is instead training on data that online models have previously seen, until it can train online.

Offline Methods

Offline training in online learning systems can be done in multiple ways, but two are common. The first is to batch train the offline model on the same data the online models are training on. This offers the flexibility and resource efficiency of batch training until the model starts training online.

The other way is to mimic online training offline (doing the exact training process as listed in this section above), but using more resources to go through those training steps faster. This allows a model to catch up to the cutting edge of data while simulating the training process that online models undergo.

Learning Rate

It’s important to note that this approach also requires batching to parallelize the data and increase training data throughput. It also preserves many of the benefits of sequential training (better performance on non-stationary tasks).

One last difference between batch and online training is how we apply the learning rate in online training. In batch training, the learning rate decreases toward the end to ensure the optimal parameters are reached. In online training, there isn’t an end to training; therefore, there is no decrease in the learning rate. Instead, the learning rate is data-dependent and fluctuates.

Batch Learning vs. Online Learning: Key Differences

While the key differences between batch and online learning relate to efficiency and data processing, here’s a more detailed breakdown of how the two approaches compare across several categories.

Training Strategy

How data is consumed in online and batch learning defines their core strategies. As we’ve already touched on, batch learning is like a marathon. The model trains on the entire dataset at once. You wait until you have all the data in hand before training begins.

This one-shot method has its perks. It’s thorough, processes data in large volumes, and ensures the model sees everything before making any decisions.

Real-time Adaptation

But online learning? It’s more like a relay race. Instead of waiting for the whole dataset, you hand off data piece by piece, allowing the model to adjust after each new leg of information. The model continuously updates itself, making online learning a natural fit for situations where data keeps flowing, like in real-time applications.

Imagine you’re managing a streaming service. Your user base and preferences are constantly changing. If you relied on batch learning, you’d miss these micro-shifts until the next batch. With online learning, your model adapts immediately as new data trickles in, ensuring users get fresh, relevant recommendations.

Efficiency and Resource Management

This might surprise you: Online learning is often the more resource-efficient option. Why? Because it doesn’t need to load massive datasets into memory all at once. It updates the model in smaller increments. Online learning might be your go-to if you’re tight on memory or processing power.

On the flip side, batch learning consumes a lot of resources upfront. Since it processes the entire dataset in one go, it requires enough memory to simultaneously hold and compute all data points. Once training is complete, the model is typically more stable, and you don’t need to keep retraining it unless new data arrives.

Resource Optimization

If you’re working with resource constraints (think limited hardware or cloud costs), online learning can help optimize memory usage. But it does come with a catch. Your update algorithms need to be more sophisticated to ensure you’re balancing resource efficiency with performance.

Model Convergence

Which method converges faster? Batch learning, by design, converges more stably and predictably because it processes the entire dataset. Each training epoch refines the model’s weights based on the whole picture, leading to more accurate and consistent updates.

Online learning can be more unpredictable. Since it updates incrementally, the model may never truly converge, especially if the incoming data is non-stationary (constantly changing). While this might sound like a drawback, it’s a feature for scenarios where rapid adaptation is more important than perfect accuracy.

Rapid Adjustment

The model can quickly adjust to new patterns, though it might oscillate more in its predictions, especially if the data stream is noisy.

Data Handling

Batch learning requires you to retrain your model from scratch when new data arrives. This can be computationally expensive and time-consuming, especially with massive datasets. You process all the data together, and only after retraining will your model reflect any new patterns or trends.

Online learning adapts continuously. You feed new data to the model, updating it in real time. This makes online learning incredibly powerful in scenarios where data changes frequently, but it also introduces challenges like:

- Data drift

- Concept drift

Drift Handling

Data drift occurs when the statistical properties of input data change over time, while concept drift refers to changes in the relationship between input and output variables.

Think of a predictive model for financial trading. Market conditions can change by the minute, and a batch learning model would quickly become outdated in such a dynamic environment. Online learning allows your model to react instantly to new market data, ensuring it stays current.
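One simple way to watch for the data drift defined above is to compare a recent window of a feature against a reference window. The check below is a rough heuristic chosen for illustration, not a standard drift test; `input_drifted`, its threshold, and the sample values are all assumptions.

```python
from statistics import mean, stdev


def input_drifted(reference, recent, threshold=3.0):
    """Flag data drift when the recent mean of a feature moves more than
    `threshold` reference standard deviations away from the reference mean."""
    return abs(mean(recent) - mean(reference)) > threshold * stdev(reference)


reference = [10.0, 11.0, 9.0, 10.5, 9.5, 10.0, 10.2, 9.8]  # illustrative history
stable = [10.1, 9.9, 10.3, 9.7]                             # same regime
shifted = [15.0, 15.5, 14.8, 15.2]                          # inputs have moved

print(input_drifted(reference, stable))
print(input_drifted(reference, shifted))
```

The same comparison could be applied to a window of prediction errors instead of input values, which is closer to monitoring for concept drift, since it reacts to a change in the input-output relationship rather than in the inputs alone.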

Stability and Accuracy

Batch learning tends to be more stable because it processes the entire dataset in a single sweep. The weights are adjusted based on the full spectrum of data, reducing volatility in the model’s behavior. Batch learning is generally the better choice when accuracy is the priority.

Online learning excels in its responsiveness. It can react to new patterns or sudden changes in the data, making it ideal for real-time applications. This responsiveness comes at a cost: Since the model updates continuously, it’s more prone to fluctuation, especially when the data is noisy or inconsistent.

Choosing Wisely

The balance? Batch learning provides stability and higher accuracy in static environments, while online learning gives you adaptability and speed when data patterns are constantly evolving.

Trade-offs and Challenges

Accuracy vs. Efficiency

Let’s discuss the trade-offs between accuracy and efficiency. Batch learning can improve the accuracy of the model, especially with a large, static dataset. Since the model trains on all the data simultaneously, it can fine-tune its parameters more effectively, leading to a more accurate outcome.

This comes at the cost of time and computational resources. It’s a bit like using a sledgehammer to crack a nut. It's powerful, but maybe overkill for some situations.

Accuracy Trade-off

Online learning sacrifices some of that raw accuracy for:

- Efficiency

- Adaptability

By updating the model in real time, you save on resources, but you might not reach the same level of precision. Think of it as a nimble tool that’s quick and effective, but not always as polished in the result.

Strategic Choice

It’s a trade-off you must consider carefully based on your project needs.

Complexity in Model Tuning

Online learning brings some complexity to model tuning. You must carefully control the learning rate at which the model adjusts its parameters. A learning rate that’s too high will cause the model to oscillate, missing the optimal solution, while a learning rate that’s too low will make the model sluggish to adapt.

One solution is learning rate decay, where the learning rate gradually decreases over time to allow finer adjustments as the model approaches a stable solution. Techniques like momentum help the model avoid oscillating by smoothing the updates over time.

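Example in Python (Learning Rate Decay):

```python
# Implementing learning rate decay
initial_lr = 0.1
decay_rate = 0.01
num_epochs = 100  # assumed value; the original snippet leaves it undefined
epoch = 1

while epoch < num_epochs:
    # The learning rate shrinks as the epoch count grows
    learning_rate = initial_lr / (1 + decay_rate * epoch)
    # Update model with new learning rate
    # ...
    epoch += 1
```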
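Momentum, mentioned above alongside learning rate decay, can be sketched in a similar spirit. The example assumes the simplest possible model, a single value `w` fit to a noisy stream under squared error; `momentum_sgd` and the alternating stream are illustrative choices, not a reference implementation.

```python
def momentum_sgd(stream, lr=0.05, beta=0.9):
    """Fit a single value w to a stream using SGD with momentum."""
    w, velocity = 0.0, 0.0
    for y in stream:
        grad = w - y                        # gradient of 0.5 * (w - y) ** 2
        velocity = beta * velocity + grad   # blend the new gradient with past ones
        w -= lr * velocity
    return w


# Noisy stream that alternates between 3.0 and 5.0 around a true level of 4.0.
stream = [3.0 if t % 2 == 0 else 5.0 for t in range(500)]
w = momentum_sgd(stream)
print(f"w={w:.3f}")
```

Because consecutive gradients point in opposite directions on this stream, the velocity term largely cancels them out, and `w` settles close to 4.0 instead of chasing each individual sample.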

Parameter Tuning

In batch learning, you also have tuning parameters like batch size and number of epochs, but the challenge isn’t as dynamic because you’re working with the entire dataset at once. The key in batch learning is finding the sweet spot between speed and convergence accuracy by adjusting these parameters.

Data Preprocessing Needs

In batch learning, preprocessing typically happens upfront. Since you have the whole dataset, you can clean, normalize, and transform it in bulk before feeding it to the model. You can afford to spend time optimizing your preprocessing pipeline, ensuring everything is just right before training begins.

Online learning requires an adaptable preprocessing pipeline. Because data constantly flows in, you need to preprocess it on the fly, which can introduce additional challenges. The model must handle incomplete or noisy data and still make real-time decisions.

Data Streaming

Tools like Kafka or Apache Flink can help you manage this data stream, but the system’s complexity increases as you balance speed and quality.

Overfitting and Underfitting

Overfitting is a risk in both paradigms, but it plays out differently in online and batch learning. In batch learning, the risk comes from training repeatedly on the same fixed dataset, leading to a model that fits the training data too closely. Regularization techniques like L1/L2 penalties or dropout can help combat this.

In online learning, the risk of overfitting is less about the volume of data and more about the model’s sensitivity to new data. If the model overreacts to every new data point, it can overfit to recent trends rather than generalize well across the data stream.

Balancing Techniques

Here, techniques like sliding windows or buffering, where only recent data points are used for training, can help strike a balance.

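Example (Sliding Window in Online Learning):

```python
# Using a sliding window to avoid overfitting in online learning
from collections import deque

window_size = 100  # Only consider the last 100 data points
window = deque(maxlen=window_size)  # the oldest point drops out automatically

# streaming_data is assumed to be any iterable of incoming data points
for data_point in streaming_data:
    window.append(data_point)
    if len(window) == window_size:
        window_data = list(window)
        # Train the model on window_data instead of the full stream
        # Update the model incrementally
```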

This ensures your model doesn’t get too stuck on the past or overreact to the most recent data.

Advanced Techniques to Improve Online and Batch Learning

Hybrid Approaches

Let’s start with something that might surprise you: sometimes, online and batch learning don’t have to exist in isolation. They can be combined into hybrid approaches to get the best of both worlds. The most common hybrid technique is mini-batch gradient descent, which sits between batch and online learning extremes.

Instead of updating the model after seeing every data point (as in online learning) or waiting to process all the data (as in batch learning), mini-batch gradient descent updates the model in small batches.

Mini-Batch Approach

How does this work? Mini-batch training divides the dataset into small, manageable chunks, allowing the model to update its parameters more frequently than in full-batch training. Enough data is still used at each step to ensure more stable updates than online learning. Here’s a code example to illustrate mini-batch gradient descent in action:

```python
# Mini-batch gradient descent example
import numpy as np

# Illustrative synthetic data; substitute your own feature matrix X and targets y
rng = np.random.default_rng(0)
X = rng.normal(size=(256, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + 0.3

batch_size = 32  # Size of each mini-batch
learning_rate = 0.01
iterations = 1000

n_samples, n_features = X.shape
weights = np.zeros(n_features)
bias = 0.0

for i in range(iterations):
    for start in range(0, n_samples, batch_size):
        end = start + batch_size
        X_batch = X[start:end]
        y_batch = y[start:end]

        # Make predictions
        y_pred = np.dot(X_batch, weights) + bias

        # Calculate gradients (divide by the actual batch length,
        # since the last batch may be smaller than batch_size)
        error = y_pred - y_batch
        dw = np.dot(X_batch.T, error) / len(X_batch)
        db = np.sum(error) / len(X_batch)

        # Update weights
        weights -= learning_rate * dw
        bias -= learning_rate * db
```

Hybrid Balance

This hybrid approach strikes a balance between efficiency and accuracy. It’s especially useful when you want the adaptability of online learning but don’t want the instability of updating after each data point. Mini-batch updates allow the model to train faster than full-batch methods while being more stable than purely online approaches.

Regularization Techniques in Online Learning

You might wonder: how do we prevent models from overfitting when using online learning, especially with a continuous data stream? The answer lies in regularization. By its very nature, online learning is prone to overfitting to recent data, especially when the data changes over time. That’s where L1/L2 regularization, dropout, and elastic net come into play.

L1/L2 Regularization

These techniques penalize large weights, encouraging simpler models that generalize better. L1 regularization can drive some weights to zero, creating sparse models, while L2 regularization ensures that weights remain small but non-zero. Here’s an example of L2 regularization with stochastic gradient descent in an online learning setting:

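```python
# Example of L2 regularization in SGD for online learning
import numpy as np

# X (features) and y (targets) are assumed to be NumPy arrays;
# each row is treated as one sample arriving from the stream
n_samples, n_features = X.shape

learning_rate = 0.01
l2_penalty = 0.001
weights = np.zeros(n_features)

for i in range(n_samples):
    xi = X[i, :]
    yi = y[i]

    # Predict
    y_pred = np.dot(xi, weights)

    # Compute gradients with L2 regularization
    dw = (y_pred - yi) * xi + l2_penalty * weights

    # Update weights
    weights -= learning_rate * dw
```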

Dropout

This technique randomly drops out a portion of the neurons during training, forcing the model not to rely too heavily on any one feature. Although commonly associated with deep learning, similar dropout-like techniques can be used in online learning to prevent the model from overfitting to the most recent data.

Elastic Net

Combines both L1 and L2 regularization, giving you the benefits of sparsity from L1 and the stability of L2.
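As a rough sketch, an elastic net penalty can be added to an online SGD update by combining an L1 subgradient term with an L2 term. The function name, penalty strengths, and two-feature stream below are assumptions made for illustration.

```python
def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def elastic_net_step(weights, x, y, lr=0.1, l1=0.01, l2=0.01):
    """One online SGD update with an elastic net penalty (L1 subgradient plus L2)."""
    error = sum(w * xi for w, xi in zip(weights, x)) - y
    return [
        w - lr * (error * xi + l1 * sign(w) + l2 * w)
        for w, xi in zip(weights, x)
    ]


weights = [0.0, 0.0]
for t in range(2000):
    # Illustrative stream: feature 0 drives the target, feature 1 barely matters.
    x = [1.0, 0.0] if t % 2 == 0 else [0.0, 1.0]
    y = 2.0 * x[0] + 0.005 * x[1]
    weights = elastic_net_step(weights, x, y)

print(weights)
```

In this sketch the weight on the weak feature hovers near zero rather than landing exactly on it, because a plain subgradient step does not produce exact zeros, while the strong weight is shrunk slightly below its unpenalized value of 2.0.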

Handling Data Drift in Online Learning

One of the biggest challenges in online learning is data drift. Over time, the distribution of the input data might change (data drift), or the underlying relationship between features and labels might evolve (concept drift). If your model can’t handle these shifts, its performance can deteriorate quickly.

One way to combat data drift is by using a sliding window approach. The model is trained only on the most recent data points, ensuring that outdated data doesn’t influence the model’s decisions too much. Here’s how you can implement a sliding window in Python:

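```python
# Sliding window approach for online learning
from collections import deque

import numpy as np

window_size = 100
window = deque(maxlen=window_size)  # the oldest point drops out automatically

# n_features is assumed to be known; streaming_data is any iterable of data points
weights = np.zeros(n_features)

for data_point in streaming_data:
    # Only consider the last 'window_size' data points
    window.append(data_point)
    if len(window) == window_size:
        window_data = list(window)
        # Perform online learning on window_data
        # Update weights incrementally
```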

Another effective method is adaptive learning rates. As the model encounters new data, you should adjust the learning rate based on how much the data has shifted. Techniques like dynamic models, where the model structure itself can evolve with the data, are also being researched in real-time systems.

Transfer Learning & Online Learning

Here’s something often overlooked: you don’t always need to start from scratch when using online learning. Transfer learning can be instrumental. You can start with a pre-trained model already trained on a large dataset and then fine-tune it on your streaming data using online learning techniques.

This allows you to leverage the knowledge of a model trained on static data and make it adaptable to new, incoming data streams.

Transfer Learning

Suppose you’ve pre-trained a language model on a massive text corpus like Wikipedia. Now, you want to deploy this model to improve a real-time recommendation system that learns from user behavior. You can use transfer learning to initialize the model with the pre-trained parameters and then apply online learning to adapt the model to your specific users’ preferences.
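The idea of initializing from pre-trained parameters and then adapting online can be shown with a deliberately tiny stand-in: a two-parameter linear model rather than a language model. The "pre-trained" weights, the stream, and the function names are all hypothetical.

```python
def online_update(weights, x, y, lr=0.1):
    """One online SGD step for the model y = w * x + b."""
    w, b = weights
    err = w * x + b - y
    return (w - lr * err * x, b - lr * err)


def mean_squared_error(weights, samples):
    w, b = weights
    return sum((w * x + b - y) ** 2 for x, y in samples) / len(samples)


# Hypothetical relationship in the new stream: y = 3 * x + 1.
stream = [((t % 10) / 10.0, 3.0 * ((t % 10) / 10.0) + 1.0) for t in range(20)]

warm = (2.7, 0.9)  # assumed weights carried over from training on a related task
cold = (0.0, 0.0)  # starting from scratch, for comparison

for x, y in stream:
    warm = online_update(warm, x, y)
    cold = online_update(cold, x, y)

warm_error = mean_squared_error(warm, stream)
cold_error = mean_squared_error(cold, stream)
print(f"warm={warm_error:.6f}, cold={cold_error:.6f}")
```

Both models receive exactly the same 20 updates, so the gap in error comes entirely from the starting point, which is the benefit a warm start is meant to provide.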

When to Choose Online vs. Batch Learning

Evaluation Based on Data Volume

Choosing between online and batch learning often comes down to the volume of data. If you have a static dataset that doesn’t change much over time, batch learning is typically the better choice. It allows the model to train on all available data simultaneously, optimizing for accuracy and convergence.

However, if you’re working with a massive dataset (say, millions or billions of data points), batch learning can become infeasible due to memory and computational limitations.

Scalability Advantage

This is where online learning shines. It processes data incrementally, allowing the model to scale even when faced with vast amounts of data. If your dataset keeps growing (as in streaming data), online learning becomes not just an option but a necessity.

Real-life example

Companies like Twitter and Netflix deal with an immense volume of data. While batch learning might be used for long-term models like content recommendations, online learning is necessary for immediate tasks like real-time fraud detection or user interaction predictions.

Impact of Data Freshness and Latency

Here’s where online learning takes the lead: data freshness and latency. Online learning is your best bet if you need your model to respond immediately to new data.

It ensures that your model stays up-to-date with the most recent patterns and trends, making it ideal for real-time applications like:

- Stock market predictions

- Personalized recommendations

- Dynamic pricing models

Latency Consideration

Batch learning introduces latency because you must wait until the entire dataset is available before training. If your system can tolerate delays or only needs to be updated periodically, batch learning might still be a good fit. In high-frequency trading, however, where prices change by the second, batch learning would be too slow. Online learning provides real-time updates, allowing your model to react to market conditions as they change.

Cost Implications

Both approaches also have cost implications. Batch learning often requires significant computational power and memory upfront, especially when working with a massive dataset. This can be costly in terms of infrastructure (GPU and CPU usage) and time (training may take hours or even days).

Online learning can be more cost-efficient because it spreads the computational load over time. Instead of retraining the model every time new data arrives (as in batch learning), the model updates incrementally. However, online learning requires infrastructure that can handle continuous updates, which may increase costs in the long run, particularly if real-time processing is critical.
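A rough back-of-the-envelope calculation makes the compute side of this trade-off concrete. The sketch below assumes, as a simplification, that training cost is proportional to the number of examples processed, that batch learning retrains from scratch on all accumulated data after every new chunk, and that online learning touches each example once. The function names and the figures (52 weekly chunks of 10,000 examples) are hypothetical, and infrastructure overhead is ignored.

```python
def batch_retrain_cost(chunk_size, n_chunks):
    """Examples processed when retraining on all accumulated data after each chunk."""
    return sum(chunk_size * k for k in range(1, n_chunks + 1))


def online_update_cost(chunk_size, n_chunks):
    """Examples processed when each new example is used for exactly one update."""
    return chunk_size * n_chunks


# Hypothetical workload: 10,000 new examples per week for one year
batch_total = batch_retrain_cost(10_000, 52)
online_total = online_update_cost(10_000, 52)

print(batch_total)                 # 13780000
print(online_total)                # 520000
print(batch_total / online_total)  # 26.5
```

Under these assumptions the cumulative cost of repeated full retraining grows quadratically with the number of chunks, while the online cost grows linearly. Real costs also depend on the number of training epochs, hardware pricing, and the always-on infrastructure that online learning needs.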

Cloud Economics

If you work with a static dataset in a cloud environment, batch learning might be more economical. But if your application demands frequent updates or is built on streaming data, online learning can lower operational costs by minimizing retraining needs and distributing the computational burden.

Start Building with $10 in Free API Credits Today!

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLMs, offering developers the highest performance at the lowest cost in the market.

Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications. Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.
