Mar 29, 2025

Is Gemma LLM Worth Your Time? Here’s a Close Look at What It Can Do

Inference Research

Large language models can be overwhelmingly complex. You can fine-tune or deploy a lightweight model to boost performance in a real-world application. But how do you choose the right one? With so many options available, picking the model that will work best for your project is no easy feat. In this article, we’ll explore the features, inference engine, and benefits of Gemma LLM to help you quickly determine whether this specific model is the right choice to meet your objectives.

AI inference APIs can help you get up and running with Gemma LLM. These tools simplify the process of evaluating a model's performance, allowing you to optimize your project and gain a competitive edge quickly.

What is Gemma LLM and Its Key Capabilities

Gemma is Google’s open, lightweight language model designed for efficient deployment and fine-tuning across a range of devices. A family of open language models based on Google’s Gemini models, Gemma is trained on up to 6 trillion tokens of text. These models are considered the lighter versions of Gemini. The Gemma family consists of two sizes:

- A 7 billion-parameter model for efficient deployment on GPU and TPU.

- A 2 billion-parameter model for CPU and on-device applications.

Hardware Accessibility and Consistent Context Window

All the variants can run on various consumer hardware, even without quantization, and have a context length of 8,192 tokens. Here’s a breakdown of the variants:

- gemma-7b: Base 7B model.

- gemma-7b-it: Instruction fine-tuned version of the base 7B model.

- gemma-2b: Base 2B model.

- gemma-2b-it: Instruction fine-tuned version of the base 2B model.

Post-Release Enhancements to Gemma's Instruction Models

A month after the original release, Google released a new version of the instruction-tuned models, with improvements in:

- Coding capabilities

- Factual accuracy

- Instruction following

- Multi-turn quality

The updated models are also less prone to beginning their responses with "Sure." They are available as:

- gemma-1.1-7b-it

- gemma-1.1-2b-it

Gemma's Broad Competence in Text Understanding

Gemma exhibits strong generalist capabilities in text domains and state-of-the-art understanding and reasoning skills at scale. It achieves better performance than other open models of similar or larger scale across various domains, including:

- Question answering

- Commonsense reasoning

- Mathematics

- Science

- Coding

For both model sizes, the Google team has released pre-trained and fine-tuned checkpoints, along with an open-source codebase for inference and serving.

The Technical Specifications of Gemma LLM

Gemma builds upon recent advancements in sequence models, transformers, deep learning, and large-scale training in a distributed manner. It continues Google’s history of releasing open models and ecosystems, following:

- Word2Vec

- Transformer

- BERT

- T5

- T5X

The responsible release of Gemma aims to:

- Improve the safety of frontier models

- Provide equitable access to this technology

- Pave the way to rigorous evaluation and analysis of current techniques

- Foster the development of future innovations

Prioritizing Safety in Gemma's Deployment

Thorough safety testing specific to each use case remains crucial before deploying or using Gemma. Architecturally, Gemma is a decoder-only model based on the transformer architecture introduced in 2017. Both the Gemma 2B and 7B models have a vocabulary size of 256k and a context length of 8,192 tokens.

Advanced Architectural Features in Gemma

Gemma also incorporates several improvements made to the transformer architecture since the original design, including:

- Multi-Query Attention: The 7B model uses multi-head attention, while the 2B model implements multi-query attention (with num_kv_heads=1). This choice is based on performance improvements demonstrated at each scale through ablation studies.

- RoPE Embeddings: Instead of absolute positional embeddings, both models employ rotary positional embeddings in each layer. Additionally, embedding sharing across inputs and outputs minimizes model size.

- GeGLU Activations: The regular ReLU activation function is replaced by the GeGLU activation function, giving good performance.

- Normalizer Location: Gemma deviates from the standard practice of normalizing only the input or only the output of each transformer sub-layer. It normalizes both, using RMSNorm as the normalization method.
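To make two of the components above concrete, here is a minimal pure-Python sketch of RMS normalization and a GeGLU gate on toy vectors. It is an illustration of the general formulas only: the function names, the toy weights, and the exact GELU variant are assumptions, and Gemma's actual implementation may parameterize these layers differently.

```python
import math


def rms_norm(x, gain, eps=1e-6):
    """Divide x by its root mean square, then apply a per-element learned gain."""
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [v * inv_rms * g for v, g in zip(x, gain)]


def gelu(v):
    """Exact GELU: v * Phi(v), where Phi is the standard normal CDF."""
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))


def matvec(matrix, vector):
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def geglu(x, w_gate, w_up):
    """GeGLU: GELU(W_gate x) multiplied element-wise with (W_up x)."""
    gate = [gelu(v) for v in matvec(w_gate, x)]
    up = matvec(w_up, x)
    return [g * u for g, u in zip(gate, up)]


x = [1.0, -2.0, 3.0, 0.5]
normed = rms_norm(x, gain=[1.0] * len(x))

# Toy 2x4 projection matrices (hypothetical values, for illustration only).
w_gate = [[0.1, 0.2, -0.1, 0.0], [0.0, -0.3, 0.2, 0.1]]
w_up = [[0.5, 0.0, 0.1, -0.2], [-0.1, 0.4, 0.0, 0.3]]
hidden = geglu(normed, w_gate, w_up)

print(normed)
print(hidden)
```

After `rms_norm`, the mean of the squared activations is approximately 1 regardless of the input scale, which is the property the normalization is meant to provide.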

How Well Do the Gemma Models Perform?

Here’s an overview of the base models and their performance compared to other open models on the LLM Leaderboard (higher scores are better):

| Model | License | Commercial use? | Pretraining size [tokens] | Leaderboard score ⬇️ |
| --- | --- | --- | --- | --- |
| Llama 2 70B Chat (reference) | Llama 2 license | ✅ | 2T | 67.87 |
| Gemma-7B | Gemma license | ✅ | 6T | 63.75 |
| DeciLM-7B | Apache 2.0 | ✅ | unknown | 61.55 |
| Phi-2 (2.7B) | MIT | ✅ | 1.4T | 61.33 |
| Mistral-7B-v0.1 | Apache 2.0 | ✅ | unknown | 60.97 |
| Llama 2 7B | Llama 2 license | ✅ | 2T | 54.32 |
| Gemma 2B | Gemma license | ✅ | 2T | 46.51 |

Gemma 7B is a strong model, with performance comparable to the best models in the 7B weight class, including Mistral 7B. Gemma 2B is an interesting model for its size, but it doesn’t score as high on the leaderboard as the most capable models of a similar size, such as Phi-2. Feedback from the community about real-world usage will be valuable.


How Was Gemma Trained, and What Are Its Benchmarks and Performance Metrics

Gemma’s pretraining relied on high-quality data curation and preprocessing methods. The team prioritized diverse datasets that were filtered for safety and helpfulness. The pretraining data consisted of 2T and 6T tokens, respectively, for the 2B and 7B models, primarily sourced from mathematics, code, and Web Docs.

Rigorous Data Curation for Safety and Quality

The training data underwent a thorough filtering process to remove unwanted or unsafe content, including sensitive data and personal information. The filtering pipeline employed heuristic methods along with model-based classifiers to ensure the quality and safety of Gemma’s dataset.

The Compute Infrastructure, Model Sizes, and Training Methodology

Gemma comes in two model sizes, 2B and 7B parameters, which were trained on 2 trillion and 6 trillion tokens of data, respectively. The instruction-tuned variants underwent supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF) to further improve performance. SFT used a mix of text-only, English-only synthetic and human-generated prompt-response pairs.

Strategic Data Mixtures and Synthetic Data Filtering in Training

The training pipeline included comprehensive evaluations to select data mixtures that targeted specific capabilities, such as:

- Instruction-following

- Factuality

- Creativity

- Safety

Synthetic data was also filtered to remove toxic outputs and examples containing personal information. RLHF used human preference data to train a reward function, which the model was then optimized against, with safeguards to mitigate potential issues such as reward hacking.

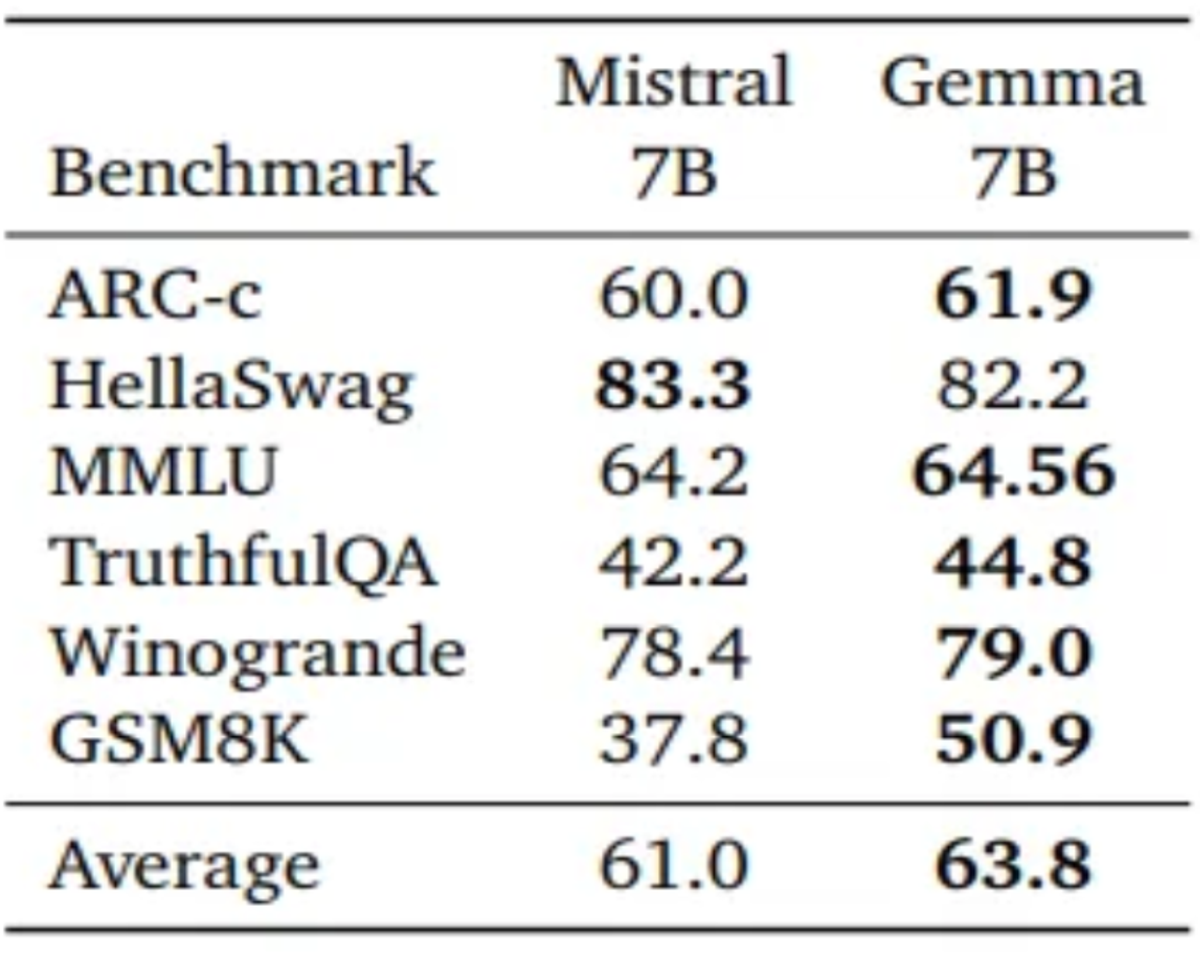

Key Benchmarks and Performance Metrics for Gemma

Gemma outperforms Mistral on five out of six benchmarks, with the sole exception being HellaSwag, where they achieve similar accuracy. This dominance is evident in tasks like ARC-c and TruthfulQA, where Gemma surpasses Mistral by nearly 2% and 2.5% in accuracy and F1 score, respectively.

Evidence from MMLU Perplexity

Even on MMLU, where a lower perplexity score is better, Gemma achieves a significantly lower perplexity, indicating a better grasp of language patterns. These results solidify Gemma’s position as a powerful language model, capable of handling complex NLP tasks with high accuracy and efficiency.

Exploring the Variants of Gemma LLM

Google’s Gemma open-source LLM family offers a range of versatile models catering to diverse needs. Let’s look at the different sizes and versions, exploring strengths, use cases, and technical details for developers:

Size Matters: Choosing Your Gemma

- 2B: This lightweight champion excels in resource-constrained environments like CPUs and mobile devices. Its memory footprint of around 1.5GB and fast inference speed make it ideal for tasks like text classification and simple question answering.

- 7B: Striking a balance between power and efficiency, the 7B variant shines on consumer-grade GPUs and TPUs. Its 5GB memory requirement unlocks more complex tasks, such as summarization and code generation.

Tuning the Engine: Base vs. Instruction-tuned

- Base: Fresh out of the training process, these models offer a general-purpose foundation for various applications. They require fine-tuning for specific tasks but provide flexibility for customization.

- Instruction-tuned: Further fine-tuned on instruction-style prompts, such as “summarize” or “translate,” these variants offer out-of-the-box usability for targeted tasks. They sacrifice some generalizability for improved performance in their designated domain.

Technical Tidbits for Developers

- Memory Footprint: 2B models require around 1.5GB of memory, while 7B models demand approximately 5GB. Fine-tuning can slightly increase this footprint.

- Inference Speed: 2B models excel in speed, making them suitable for real-time applications. 7B models offer faster inference compared to larger LLMs but may not match the speed of their smaller siblings.

- Framework Compatibility: Both sizes are compatible with major frameworks like TensorFlow, PyTorch, and JAX, allowing developers to leverage their preferred environment.
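The memory figures above depend heavily on the numeric precision of the weights. As a rough sanity check, the sketch below estimates weight memory as parameter count times bytes per parameter. It assumes the nominal 2B and 7B parameter counts (the real counts may be higher) and ignores activations, the KV cache, and framework overhead, so treat the output as a lower bound rather than a measured footprint.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Nominal parameter counts (assumption: taken from the model names, not measured).
NOMINAL_PARAMS = {"2B": 2e9, "7B": 7e9}

for name, n in NOMINAL_PARAMS.items():
    for bits in (32, 16, 8, 4):
        print(f"Gemma {name} at {bits}-bit: ~{weight_memory_gb(n, bits):.1f} GB")
```

Under these assumptions, the footprints of roughly 1.5GB and 5GB quoted above are plausible only for quantized weights plus some runtime overhead, while full 32-bit weights for the 7B model alone come to about 28 GB.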

Matching the Right Gemma to Your Needs

The choice between size and tuning depends on your specific requirements. The 2B base model is an excellent starting point for resource-constrained scenarios and straightforward tasks. If you prioritize performance and complexity in particular domains, the 7B instruction-tuned variant could be your champion.

Fine-tuning either size allows for further customization to suit your unique use case. This is just a glimpse into the Gemma variants. With its diverse options and open-source nature, Gemma empowers developers to explore and unleash its potential for various applications.

Getting Started with Gemma

To get started with Gemma, we will work in Google Colab because it comes with a free GPU. Before we begin, we need to accept Google’s Terms and Conditions to download the model.

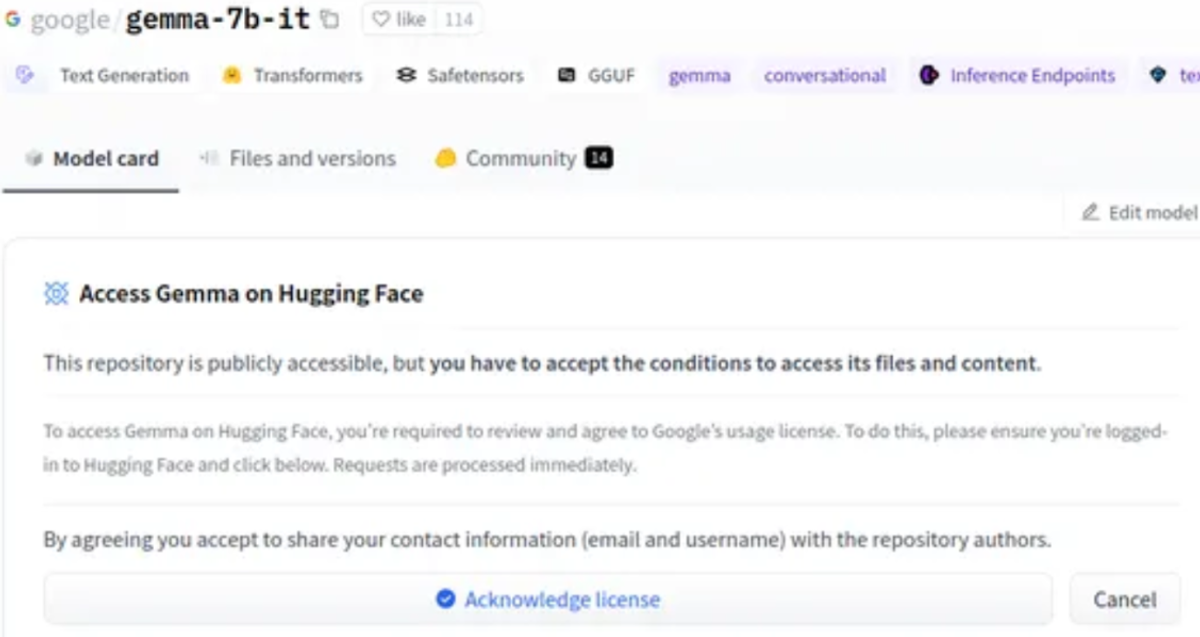

Step 1: Opening Gemma

Click on this [link](https://huggingface.co/google/gemma-7b) to go to Gemma on HuggingFace. You will be presented with something like the following:

Step 2: Click on Acknowledge License

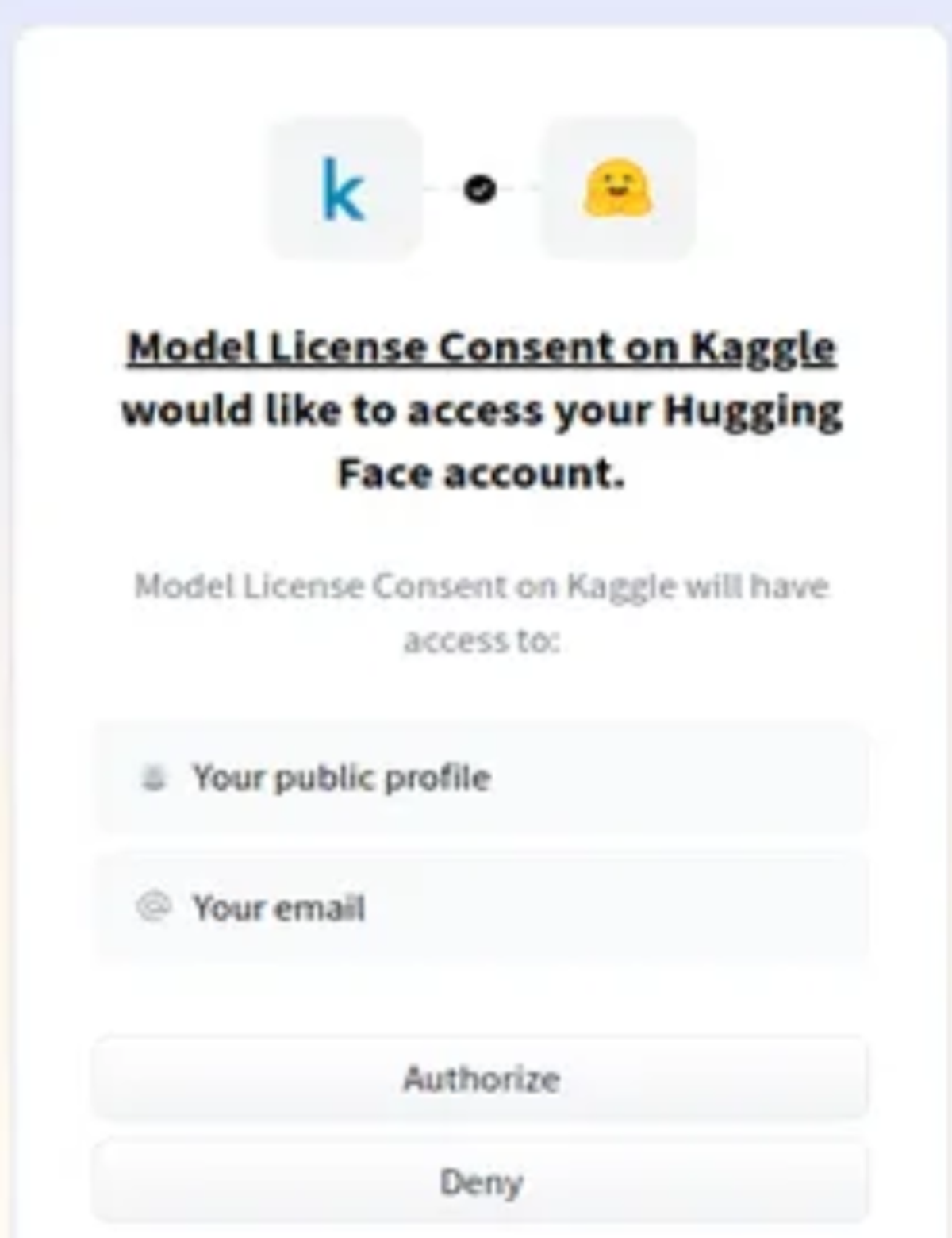

If you click on "Acknowledge License," you will see a page as shown below.

Click on Authorize. We are now ready to download the model. First, let’s generate a new Hugging Face token: go to the Hugging Face Settings page and create one. We need this token to authenticate inside Google Colab before downloading the Gemma model.

Step 3: Installing Libraries

To get started, we first need to install the following libraries.

```python
!pip install -U accelerate bitsandbytes transformers huggingface_hub
```

Accelerate

Allows distributed training and mixed-precision training for faster and more efficient model training. The accelerate library also helps speed up inference for large language models.

Bitsandbytes

Allows quantization of model weights to 4-bit or 8-bit precision, reducing memory footprint and computation requirements. Because we are dealing with a 7 billion-parameter model, which requires around 30-40 GB of GPU VRAM at full precision, we need to quantize it to fit within the Colab GPU's memory.

Transformers

Provides pre-trained language models, tokenizers, and training tools for natural language processing tasks. We use this library to download the Gemma model and run inference with it.

Huggingface_hub

Facilitates access to the Hugging Face Hub, a platform for sharing and discovering language models and datasets. We need this library to log in to Hugging Face and verify that we are authorized to download the Gemma model. The -U option in the install command upgrades each library to its latest version.

Step 4: Logging In to Hugging Face

Now, run the following command:

```python
!huggingface-cli login
```

Hugging Face Token and Setup

The above command will ask for your Hugging Face token, which you can get from the Hugging Face website. Paste the token and press Enter, and you will receive a Login Successful message. Now let’s move on to coding.

- Import the classes needed for model loading and quantization: `from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig`.

- Configure 4-bit quantization for memory and compute efficiency: `quantization_config = BitsAndBytesConfig(load_in_4bit=True)`.

- Load the tokenizer for the instruction-tuned Gemma 7B model (the `-it` suffix stands for "instruction-tuned"): `tokenizer = AutoTokenizer.from_pretrained("google/gemma-7b-it")`.

- Load the instruction-tuned Gemma 7B model itself, with 4-bit quantization: `model = AutoModelForCausalLM.from_pretrained("google/gemma-7b-it", quantization_config=quantization_config)`.

AutoTokenizer

This class dynamically loads the pre-trained tokenizer associated with the given model, ensuring compatibility and eliminating the need for manual configuration.

AutoModelForCausalLM

Similar to the tokenizer, this class automatically loads the pre-trained Causal Language Model architecture based on the provided model identifier.

quantization_config = BitsAndBytesConfig(load_in_4bit=True)

This line creates a configuration object for quantization, specifying that the model’s weights should be loaded in 4-bit precision instead of their original higher precision. This greatly reduces memory consumption and potentially speeds up computation, making the model more efficient in resource-constrained environments.

tokenizer = AutoTokenizer.from_pretrained("google/gemma-7b-it")

This line loads the pre-trained tokenizer designed for the "google/gemma-7b-it" model. This tokenizer knows how to break text down into the tokens that the model can understand and process.

model = AutoModelForCausalLM.from_pretrained("google/gemma-7b-it", quantization_config=quantization_config)

This line loads the actual "google/gemma-7b-it" model, with the crucial addition of the quantization_config object. This ensures that the model weights are loaded in the 4-bit format discussed earlier, bringing the benefits of quantization. At this point, the Gemma model has been downloaded, quantized to 4 bits, and loaded onto the GPU.
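Put together, the loading steps described above might look like the following sketch. The helper name `load_gemma_4bit` is hypothetical, and the imports are placed inside the function only so that the helper can be defined before the libraries and a GPU runtime are available. It assumes you have already accepted the license and logged in to Hugging Face.

```python
def load_gemma_4bit(model_id="google/gemma-7b-it"):
    """Load a Gemma checkpoint and its tokenizer with 4-bit quantized weights."""
    # Imported lazily so that defining this helper does not require the libraries yet.
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    # Ask bitsandbytes to load the weights in 4-bit precision.
    quantization_config = BitsAndBytesConfig(load_in_4bit=True)

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=quantization_config,
    )
    return tokenizer, model


# In Colab, after logging in to Hugging Face:
# tokenizer, model = load_gemma_4bit()
```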

Step 5: Running Inference

Now let’s run inference with the model.

```python
# Define input text
input_text = "List the key points about Responsible AI"

# Tokenize the input text
input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

# Generate text using the model
outputs = model.generate(
    **input_ids,     # Pass the tokenized input as keyword arguments
    max_length=512,  # Limit the total length (prompt + output) to 512 tokens
)

# Decode the generated text
print(tokenizer.decode(outputs[0]))
```

Define Input Text

The code starts by assigning the prompt "List the key points about Responsible AI" to the input_text variable.

Tokenize Input

The tokenizer object associated with the downloaded model converts the text into numerical tokens that the model can understand.

The return_tensors="pt" argument returns the result as PyTorch tensors for efficient GPU processing. The resulting tensors of token IDs are then moved to the GPU with .to("cuda").

Generate Text

The model.generate function is called with the tokenized input (input_ids) and a max_length of 512 tokens. This instructs the model to generate text based on the provided prompt, with the length limit covering the prompt and the generated tokens together.

Decode and Convert

The generated text, represented as a sequence of token IDs, is decoded back into human-readable text using the tokenizer.decode function. Finally, the decoded text is printed out.
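The examples in this walkthrough pass raw text to the instruction-tuned model. Instruction-tuned Gemma checkpoints are generally described as expecting a turn-based chat format, so wrapping the prompt may give more reliable answers. The sketch below builds that format by hand; the helper name is hypothetical, and the control tokens `<start_of_turn>` and `<end_of_turn>` are an assumption that you should verify against the model card or the tokenizer's own chat template.

```python
def format_gemma_prompt(user_message):
    """Wrap a single user message in a turn-based chat format for Gemma."""
    return (
        "<start_of_turn>user\n"
        f"{user_message}<end_of_turn>\n"
        "<start_of_turn>model\n"
    )


prompt = format_gemma_prompt("List the key points about Responsible AI")
print(prompt)
```

In practice, `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` is usually the safer way to produce this string, because it reads the template shipped with the checkpoint instead of hard-coding it.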

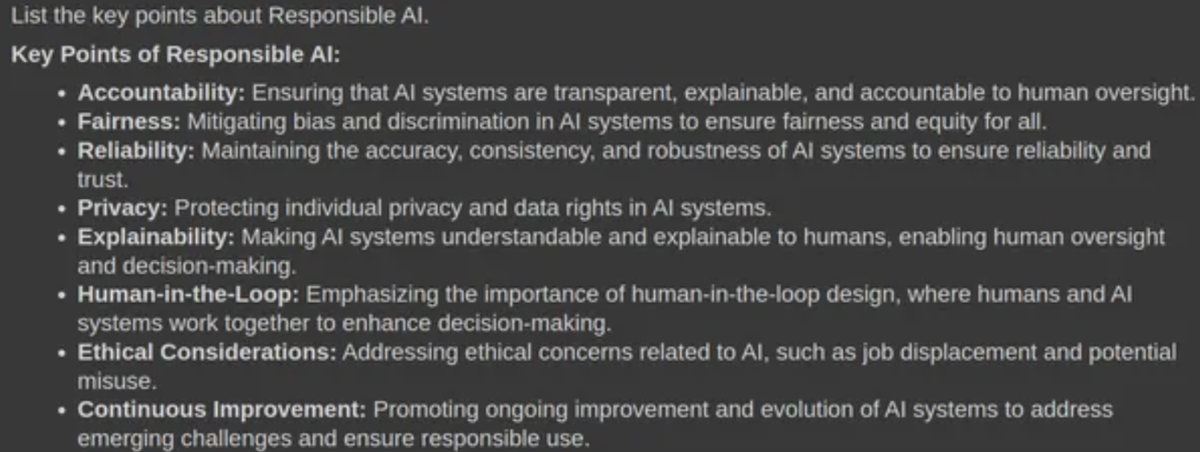

Step 6: Response Generation

Running the code generated the following response.

The model generated a satisfactory response to the query, highlighting the key aspects that go into creating Responsible AI. This is a relevant and accurate answer to the question asked. Next, let’s ask the model a common-sense question.

```python

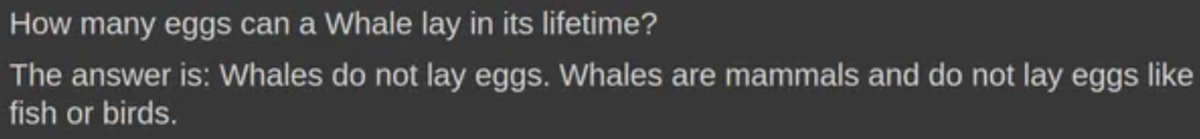

input_text = "How many eggs can a Whale lay in its lifetime?"

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids,max_length=512)

print(tokenizer.decode(outputs[0]))

```

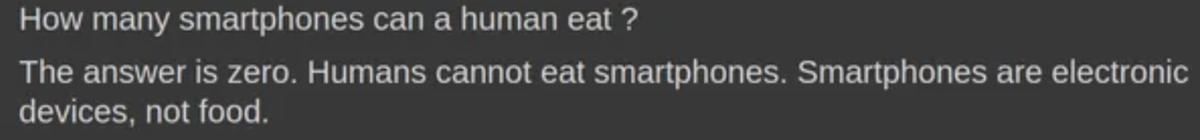

```python
input_text = "How many smartphones can a human eat ?"
input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")
outputs = model.generate(**input_ids, max_length=512)
print(tokenizer.decode(outputs[0]))
```

So far, so good. The model shows good common-sense abilities: it identifies the false premise in each question and points it out, as shown in the pictures above. Let’s try asking some math questions.

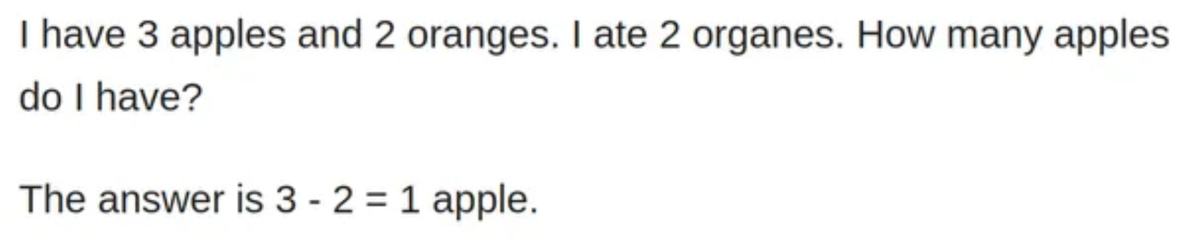

```python
input_text = "I have 3 apples and 2 oranges. I ate 2 organes. How many apples do I have?"
input_ids = tokenizer(input_text, return_tensors="pt").to('cuda')
outputs = model.generate(**input_ids, max_new_tokens=512)
print(tokenizer.decode(outputs[0]))
```

The model struggled with this simple trick question. Let’s try some prompt engineering: we add extra instructions to the prompt and run it as shown below:

```python
# Build the prompt from adjacent string literals instead of backslash continuations
input_text = (
    "I have 3 apples and 2 oranges. "
    "I ate 2 oranges. How many apples do I have? "
    "Think Step by Step. For each step, re-evaluate your answer."
)
input_ids = tokenizer(input_text, return_tensors="pt").to('cuda')
outputs = model.generate(**input_ids, max_new_tokens=512)
print(tokenizer.decode(outputs[0]))
```

With a simple tweak to the prompt, the model answered correctly. It began reasoning incrementally, step by step, and at each step it re-evaluated whether its answer was right or wrong. In the end, it arrived at the correct answer. Let’s try asking the model to write a simple Hello World program in Python.

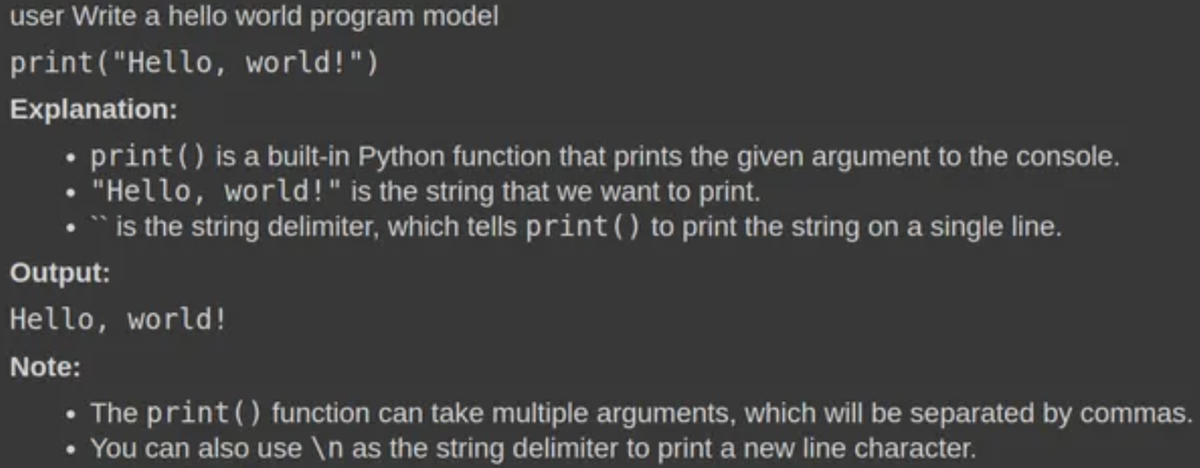

```python
input_text = "Write a hello world program"
input_ids = tokenizer(input_text, return_tensors="pt").to('cuda')
outputs = model.generate(**input_ids, max_new_tokens=512)
print(tokenizer.decode(outputs[0]))
```


Stepwise Guide to Fine-Tune Google’s Gemma LLM

Fine-tuning Gemma on your custom dataset begins with setting up a systematic way to clean and preprocess your data, establishing a training routine, and creating evaluation metrics for quality control. First, install and update all necessary Python packages to avoid errors.

```python
%%capture
%pip install -U bitsandbytes
%pip install -U transformers
%pip install -U peft
%pip install -U accelerate
%pip install -U trl
%pip install -U datasets
```

Next, import the packages we will use to load the dataset, model, and tokenizer, and perform supervised fine-tuning (SFT) and inference.

```python
from transformers import (
    AutoModelForCausalLM,
    AutoTokenizer,
    BitsAndBytesConfig,
    HfArgumentParser,
    TrainingArguments,
    pipeline,
    logging,
)
from peft import (
    LoraConfig,
    PeftModel,
    prepare_model_for_kbit_training,
    get_peft_model,
)
import os, torch, wandb
from datasets import load_dataset
from trl import SFTTrainer
```

Define Variables for Fine-Tuning

We need to define names for the base model and dataset, as well as the name of the fine-tuned model, which we will upload to Hugging Face Hub later. These variables will be utilized at various stages, including loading the dataset and model, tokenization, training, and model saving.

```python
base_model = "/kaggle/input/gemma/transformers/7b-it/2"
dataset_name = "hieunguyenminh/roleplay"
new_model = "gemma-7b-it-v2-role-play"
```

Load Hugging Face API Key

Next, we will load the Hugging Face API key from Kaggle secrets (environment variables).

```python
from kaggle_secrets import UserSecretsClient

user_secrets = UserSecretsClient()
secret_hf = user_secrets.get_secret("HUGGINGFACE_TOKEN")
```

Log in to Hugging Face CLI

Now we can use the API key to log in to Hugging Face CLI. This will allow us to access the model and also save it on Hugging Face Hub.

```python
!huggingface-cli login --token $secret_hf
```

Initialize W&B Workspace

We will initialize the Weights & Biases (W&B) workspace using the W&B API key. We will use this workspace to track model training.

```python
secret_wandb = user_secrets.get_secret("wandb")

# Monitoring the LLM
wandb.login(key=secret_wandb)
run = wandb.init(
    project='Fine tuning Gemma 7B',
    job_type="training",
    anonymous="allow"
)
```

Load the Dataset

Now we can retrieve the first 1000 rows of data from the role-play dataset available on Hugging Face and display a sample of the `text` column.

```python
# Loading the dataset
dataset = load_dataset(dataset_name, split="train[0:1000]")
dataset["text"][100]
```

Our dataset consists of continuous role-play conversations between the user and the assistant, in which the assistant responds in the style of a celebrity.

Load the Model and Tokenizer

To avoid memory issues, we will load our model in 4-bit precision using BitsAndBytesConfig. It will load the model directly from Kaggle without requiring a download.

```python
# Load base model (Gemma 7B-it)
bnbConfig = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16,
)

model = AutoModelForCausalLM.from_pretrained(
    base_model,
    quantization_config=bnbConfig,
    device_map="auto"
)

model.config.use_cache = False  # silence the warnings. Please re-enable for inference!
model.config.pretraining_tp = 1
model.gradient_checkpointing_enable()
```

Next, load the tokenizer and configure the pad token to fix the issue with fp16.

```python
# Load tokenizer
tokenizer = AutoTokenizer.from_pretrained(base_model)
tokenizer.padding_side = 'right'
tokenizer.pad_token = tokenizer.eos_token
tokenizer.add_eos_token = True
tokenizer.add_bos_token, tokenizer.add_eos_token  # notebook cell output: shows both flags
```

Add the Adapter Layer

By adding the adapter layer to our model, we can fine-tune it more efficiently. Instead of training the entire model, we only need to update the parameters of the adapter layers, which will accelerate the training process. Our target modules will be 'o_proj', 'q_proj', 'up_proj', 'v_proj', 'k_proj', 'down_proj', and 'gate_proj'.
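To see why training only the adapter is cheaper, the sketch below counts the parameters a LoRA adapter adds to a single weight matrix and computes the scaling factor implied by `lora_alpha=16` and `r=64`. The 4096 x 4096 projection is a hypothetical shape chosen for illustration, not Gemma's actual layer size.

```python
def lora_added_params(d_in, d_out, r):
    """Parameters added by one LoRA adapter: A is (r x d_in), B is (d_out x r)."""
    return r * (d_in + d_out)


def lora_scaling(lora_alpha, r):
    """Factor applied to the low-rank update B @ A before it is added to the frozen weight."""
    return lora_alpha / r


# Hypothetical square projection, for illustration only (not Gemma's real shape).
d_in = d_out = 4096
r, lora_alpha = 64, 16

full = d_in * d_out
added = lora_added_params(d_in, d_out, r)

print(f"frozen weight: {full:,} params")
print(f"LoRA adapter:  {added:,} params ({added / full:.1%} of the frozen weight)")
print(f"scaling alpha/r = {lora_scaling(lora_alpha, r)}")
```

For this toy shape the adapter holds about 3% as many parameters as the matrix it modifies, and only those adapter parameters receive gradients during fine-tuning.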

```python
model = prepare_model_for_kbit_training(model)

peft_config = LoraConfig(
    lora_alpha=16,
    lora_dropout=0.1,
    r=64,
    bias="none",
    task_type="CAUSAL_LM",
    target_modules=['o_proj', 'q_proj', 'up_proj', 'v_proj', 'k_proj', 'down_proj', 'gate_proj']
)

model = get_peft_model(model, peft_config)
```

Train the Model

In order to begin training, we need to specify the hyperparameters. These parameters are fundamental and can be modified to enhance the training process and improve the performance of the model. If you want to better grasp each hyperparameter, we suggest reading the Fine-Tuning LLaMA 2 tutorial.

To set up the Supervised Fine-tuning (SFT) trainer, we need to provide it with the model, dataset, LoRA configuration, tokenizer, and training parameters as arguments.
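The trainer setup that follows refers to a `training_arguments` object that this walkthrough does not define. The sketch below shows one way it could be built. Every value is an illustrative assumption, not the configuration used for the run described here, and the helper names and output path are hypothetical.

```python
# Illustrative hyperparameters (assumed values, not the ones used in this article's run).
hyperparams = {
    "output_dir": "./gemma-7b-it-v2-role-play",  # hypothetical output path
    "num_train_epochs": 1,
    "per_device_train_batch_size": 1,
    "gradient_accumulation_steps": 2,
    "optim": "paged_adamw_32bit",
    "learning_rate": 2e-4,
    "weight_decay": 0.001,
    "max_grad_norm": 0.3,
    "warmup_ratio": 0.03,
    "lr_scheduler_type": "constant",
    "logging_steps": 10,
    "report_to": "wandb",
}


def build_training_arguments(hp):
    """Create a transformers.TrainingArguments object from a plain dict."""
    from transformers import TrainingArguments  # lazy import: needs transformers installed
    return TrainingArguments(**hp)


def optimizer_steps_per_epoch(num_examples, hp, num_devices=1):
    """Approximate number of optimizer updates in one pass over the dataset."""
    effective_batch = (
        hp["per_device_train_batch_size"]
        * hp["gradient_accumulation_steps"]
        * num_devices
    )
    return -(-num_examples // effective_batch)  # ceiling division


print(optimizer_steps_per_epoch(1000, hyperparams))

# In the notebook:
# training_arguments = build_training_arguments(hyperparams)
```

With 1,000 training rows, a per-device batch size of 1, and 2 gradient accumulation steps, one epoch corresponds to roughly 500 optimizer updates on a single GPU.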

```python
trainer = SFTTrainer(
    model=model,
    train_dataset=dataset,
    peft_config=peft_config,
    max_seq_length=512,
    dataset_text_field="text",
    tokenizer=tokenizer,
    args=training_arguments,
    packing=False,
)
```

We will now run the training process using the `.train()` method. The fine-tuning took almost 1 hour and 1 minute. The training loss gradually decreased, and you can reduce it further by increasing the number of epochs.

```python
trainer.train()
```

Finish W&B Session

Now we can finish the W&B session and configure the model for inference.

```python
wandb.finish()
model.config.use_cache = True
```

Save the Model

We will now save the model adapter locally and upload it to the Hugging Face Hub. The `push_to_hub` command will create the repo and push the adapter config and adapter weights to the hub.

```python
# Save the fine-tuned model
trainer.model.save_pretrained(new_model)
trainer.model.push_to_hub(new_model, use_temp_dir=False)
```

Note: This is just an adapter that we are saving. The complete model is around 18 GB.
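Because only the adapter is saved, using it later means attaching it to the base model again. The sketch below shows one possible way to do that and to merge the adapter into the base weights. The helper name and the output directory are hypothetical, and loading the base model in half precision rather than 4-bit is an assumption made here so that the merge operates on regular weights.

```python
def load_merged_model(base_model_path, adapter_path):
    """Attach a saved LoRA adapter to the base model and merge the weights."""
    # Lazy imports so the helper can be defined before the libraries are installed.
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM

    base = AutoModelForCausalLM.from_pretrained(
        base_model_path,
        torch_dtype=torch.float16,  # assumption: half precision, not 4-bit, for merging
        device_map="auto",
    )
    model = PeftModel.from_pretrained(base, adapter_path)
    return model.merge_and_unload()


# In the notebook:
# merged = load_merged_model(base_model, new_model)
# merged.save_pretrained("gemma-7b-it-v2-role-play-merged")  # hypothetical output directory
```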

Model Inference

In order to generate a response using our fine-tuned model, we need to follow a few steps. First, we will create a prompt in the role-play dataset format. Then, we will pass the prompt to the tokenizer and subsequently to the model to generate predictions. To translate the predicted output into readable text, we will decode it using the tokenizer.

```python
prompt = '''<|system|>Harry Potter is a wizard known for his distinctive lightingshaped scar and his remarkable journey through magic and battles against the dark wizard Voldemort.
<|user|> What is the meaning of hate?
<|assistant|>'''

inputs = tokenizer(prompt, return_tensors='pt', padding=True, truncation=True).to("cuda")
outputs = model.generate(**inputs, max_length=500, num_return_sequences=1)
text = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(text)
```

Try It Again

Now let’s try it again with a new character: Michael Jordan.

```python
prompt = '''<|system|>Michael Jordan an NBA legend known for his competitive drive six championship wins with the Chicago Bulls.
<|user|> What motivates you in the life?
<|assistant|>'''

inputs = tokenizer(prompt, return_tensors='pt', padding=True, truncation=True).to("cuda")
outputs = model.generate(**inputs, max_length=500, num_return_sequences=1)
text = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(text)
```

We have done a good job fine-tuning the base model to understand the new style of response generation.

Start Building with $10 in Free API Credits Today!

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLM models, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.
