Jun 20, 2025

28 Best Vector Databases to Accelerate AI Product Development

Inference Research

In an age where artificial intelligence helps to automate and enhance nearly every aspect of our lives, expectations for AI-powered products are higher than ever. Users expect these tools to not only deliver precise results, but also to generate those results quickly. Meeting these demands requires reliable vector search, which is powered by vector databases. Machine Learning Frameworks play a vital role in integrating these capabilities seamlessly. This blog will explore the best vector databases to help you build and scale AI-powered products faster to boost performance, user experience, and real-world impact.

Inference’s AI inference APIs can help you achieve your goals even faster. They provide reliable vector search, so your applications can deliver precise results quickly and keep users happy without the long wait times.

What is a Vector Database?

A vector database is a specific kind of database that stores information as multi-dimensional vectors representing specific:

- Characteristics

- Qualities

The number of dimensions in each vector can vary widely, from just a few to several thousand, based on the data's:

- Intricacy

- Detail

Vector Creation

This data, including text, images, audio, and video, is transformed into vectors using:

- Machine learning models

- Word embeddings

- Feature extraction techniques

The primary benefit of a vector database is its ability to swiftly and precisely locate and retrieve data according to their:

- Vector proximity

- Resemblance

Semantic Search

This allows for searches rooted in semantic or contextual relevance rather than relying solely on exact matches or set criteria as with conventional databases. With a vector database, you can:

- Search for songs that resonate with a particular tune based on melody and rhythm.

- Discover articles that align with another specific article in theme and perspective.

- Identify gadgets that mirror the characteristics and reviews of a device.

How Do Vector Databases Work?

Traditional databases store simple data like words and numbers in a table format. Vector databases:

- Work with complex data called vectors

- Use unique methods for searching

While regular databases search for exact data matches, vector databases look for the closest match using specific similarity measures.
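
As a rough illustration of what "closest match using a similarity measure" can mean, here is a minimal sketch of exact (brute-force) search with cosine similarity. The item names and three-dimensional vectors are made up for the example, and production systems typically rely on approximate indexes rather than scoring every vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, items, k=2):
    """Exact search: score every stored vector and keep the k most similar."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in items.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]

# Hypothetical 3-dimensional vectors; real embeddings have hundreds of dimensions.
items = {
    "song_a": [0.9, 0.1, 0.0],
    "song_b": [0.8, 0.2, 0.1],
    "song_c": [0.0, 0.1, 0.9],
}
print(top_k([1.0, 0.0, 0.0], items))  # ['song_a', 'song_b']
```

A relational query would ask whether a row equals a value; here every item gets a score and the best-scoring ones are returned, even though none matches the query exactly.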

ANN Search

Vector databases use special search techniques known as Approximate Nearest Neighbor (ANN), including methods like:

- Hashing

- Graph-based searches
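
To make the hashing idea concrete, the sketch below uses random-hyperplane locality-sensitive hashing, which is one common hashing approach and not necessarily the one any given database uses. The function names, the number of hyperplanes, and the seed are assumptions chosen for illustration.

```python
import random

def make_hyperplanes(num_planes, dim, seed=0):
    """Draw random hyperplanes; each one contributes one bit to the hash."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]

def lsh_signature(vector, hyperplanes):
    """One bit per hyperplane: which side of the plane the vector falls on."""
    bits = []
    for plane in hyperplanes:
        dot = sum(v * p for v, p in zip(vector, plane))
        bits.append("1" if dot >= 0 else "0")
    return "".join(bits)

def build_buckets(vectors, hyperplanes):
    """Group vector names by signature so similar vectors tend to share a bucket."""
    buckets = {}
    for name, vec in vectors.items():
        buckets.setdefault(lsh_signature(vec, hyperplanes), []).append(name)
    return buckets

def candidates(query, buckets, hyperplanes):
    """Only the query's own bucket is inspected, not the whole collection."""
    return buckets.get(lsh_signature(query, hyperplanes), [])

planes = make_hyperplanes(num_planes=4, dim=3)
buckets = build_buckets({"a": [1.0, 2.0, 3.0], "b": [-1.0, -2.0, -3.0]}, planes)
print(candidates([2.0, 4.0, 6.0], buckets, planes))
```

Vectors pointing in similar directions tend to land in the same bucket, so a query only compares itself against a small candidate set. The trade-off is that a true neighbor can occasionally fall into a different bucket, which is why the result is approximate.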

To understand how vector databases work and how they differ from traditional relational databases like SQL, we first have to understand the concept of embeddings. Unstructured data lacks a predefined format, posing challenges for traditional databases. Data may include:

- Text

- Images

- Audio

Embedding Explained

To leverage this data in artificial intelligence and machine learning applications, it's transformed into numerical representations using embeddings. Embedding is like giving each item, whether a word, image, or something else, a unique code that captures its meaning or essence. This code helps computers understand and compare these items more:

- Efficiently

- Meaningfully

Embedding Analogy

Think of it as turning a complicated book into a summary that still captures the main points. This embedding process is achieved using a special neural network designed for the task. Word embeddings convert words into vectors so that words with similar meanings are closer in the vector space.

This transformation allows algorithms to understand relationships and similarities between items. Embeddings serve as a bridge, converting non-numeric data into a form that machine learning models can work with, enabling them to discern patterns and relationships in the data more effectively.
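
The toy example below shows what "closer in the vector space" looks like in practice. The three-dimensional vectors are hand-made for illustration and do not come from any real embedding model, which would produce far more dimensions.

```python
import math

# Hand-made 3-dimensional vectors, for illustration only.
toy_embeddings = {
    "king":   [0.9, 0.8, 0.1],
    "queen":  [0.9, 0.7, 0.2],
    "banana": [0.1, 0.0, 0.9],
}

def distance(word_a, word_b):
    """Euclidean distance between two words' vectors (smaller = more similar)."""
    return math.dist(toy_embeddings[word_a], toy_embeddings[word_b])

def nearest_word(word):
    """Return the other word whose vector lies closest to the given word."""
    others = [w for w in toy_embeddings if w != word]
    return min(others, key=lambda w: distance(word, w))

print(nearest_word("king"))  # queen
```

Once items are represented this way, "find similar things" becomes a geometric question that can be answered with arithmetic.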

Features of a Good Vector Database

Vector databases have emerged as powerful tools to navigate the vast terrain of unstructured data without relying heavily on human-generated labels or tags. Data may include:

- Images

- Videos

- Texts

When integrated with advanced machine learning models, their capabilities hold the potential to revolutionize numerous sectors, from e-commerce to pharmaceuticals. Here are some of the standout features that make vector databases a game-changer.

Scalability and Adaptability

A robust vector database effortlessly scales across multiple nodes as data grows, reaching millions or billions of elements. The best vector databases offer adaptability, allowing users to tune the system based on:

- Variations in insertion rate

- Query rate

- Underlying hardware

Multi-User Support and Data Privacy

Databases are expected to accommodate multiple users. Merely creating a new vector database for each user isn't efficient. Vector databases prioritize data isolation, ensuring that any changes made to one data collection remain unseen to the rest unless shared intentionally by the owner. This not only supports multi-tenancy but also provides data privacy and security.
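
One simple way to picture data isolation is a store that keeps each tenant's vectors in a separate namespace. The class below is a hypothetical in-memory sketch, not the design of any specific product; real systems add authentication, access control, and persistence on top.

```python
class TenantVectorStore:
    """Toy in-memory store that keeps each tenant's vectors in its own namespace."""

    def __init__(self):
        self._namespaces = {}  # tenant_id -> {item_id: vector}

    def upsert(self, tenant_id, item_id, vector):
        """Insert or overwrite a vector inside one tenant's namespace."""
        self._namespaces.setdefault(tenant_id, {})[item_id] = list(vector)

    def ids(self, tenant_id):
        """Reads are scoped to one tenant; other namespaces are never touched."""
        return sorted(self._namespaces.get(tenant_id, {}))

store = TenantVectorStore()
store.upsert("alice", "doc-1", [0.1, 0.2])
store.upsert("bob", "doc-9", [0.7, 0.3])
print(store.ids("alice"))  # ['doc-1']
```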

Comprehensive API Suite

A genuine and effective database offers a full set of APIs and SDKs. This ensures that the system can interact with diverse applications and can be managed effectively. Leading vector databases, like Pinecone, provide SDKs in various programming languages such as:

- Python

- Node

- Go

- Java

User-Friendly Interfaces

User-friendly interfaces in vector databases are pivotal in reducing the steep learning curve associated with new technologies. These interfaces offer:

- A visual overview

- Easy navigation

- Accessibility to features that might otherwise remain obscured

28 Best Vector Databases to Accelerate AI Product Development

1. Inference: A Powerful Tool for RAG Applications

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLM models, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.

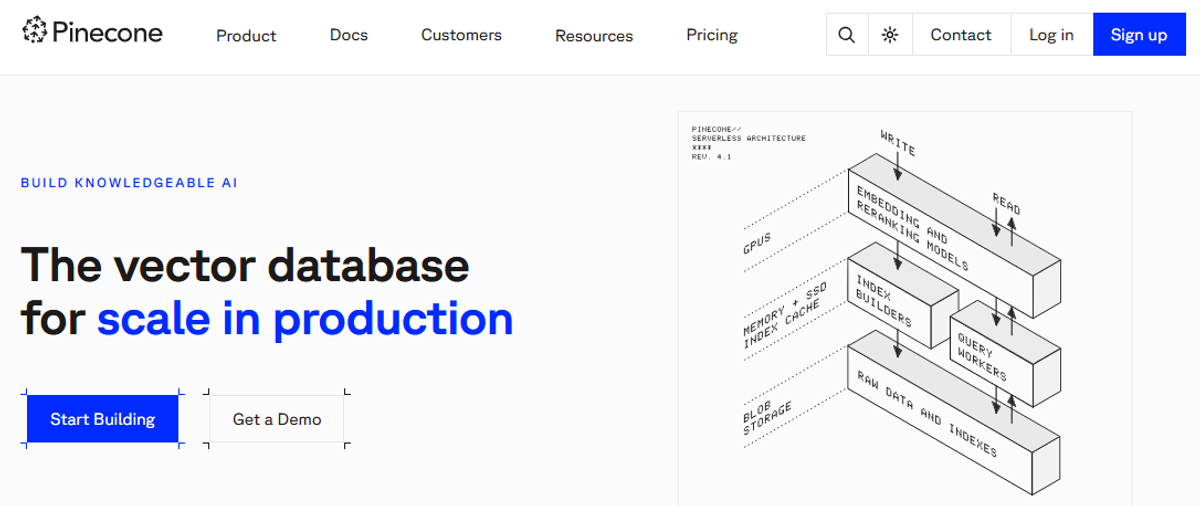

2. Pinecone: The Managed Vector Database

Pinecone is a managed, cloud-native vector database with a straightforward API and no infrastructure requirements. Users can launch, operate, and expand their AI solutions without any:

- Infrastructure maintenance

- Service monitoring

- Algorithm troubleshooting

The solution processes data quickly and lets users use metadata filters and sparse-dense index support for high-quality relevance, guaranteeing speedy and accurate results across various search needs.

Key features:

- Detection of duplicates

- Rank tracking

- Data search

- Classification

- Deduplication

3. MongoDB Atlas: The Hybrid Database

MongoDB Atlas is a widely used managed developer data platform that can handle many:

- Transactional

- Search workloads

Atlas Vector Search uses a specialized vector index that is automatically synced with the core database and can be configured to run on separate infrastructure. It offers the benefits of an integrated database with independent scaling, which is often why users would look to a vector database.

Key features:

- Integrated database + vector search capabilities

- Independent provisioning for database and search index

- Documents of up to 16 MB each

- High availability, strong transaction guarantees, multiple levels of data durability, archiving, and backup

- Industry leader in transactional data encryption

- Hybrid search

4. Milvus: The Open-Source Vector Database

Milvus is an open-source vector database that facilitates:

- Vector embedding

- Efficient similarity search

- AI applications

It was published in October 2019 under the open-source Apache License 2.0 and is now a graduate project under the auspices of the LF AI & Data Foundation. The tool simplifies unstructured data search and delivers a uniform user experience independent of the deployment environment.

Stateless Design

To improve elasticity and adaptability, all components in the refactored version of Milvus 2.0 are stateless. Use cases for Milvus include:

- Image search

- Chatbots

- Chemical structure search

Key features:

- Millisecond search on trillion-vector datasets

- Simplified unstructured data management

- Reliable vector database that is always available

- Highly scalable and adaptable

- Hybrid search

- Unified Lambda structure

- Supported by the community and acknowledged by the industry

5. Chroma: The Embedding Database

Chroma DB is an open-source, AI-native embedding vector database that aims to simplify the process of creating LLM applications powered by natural language processing by making knowledge, facts, and skills pluggable for machine learning models at the scale of LLMs and avoiding hallucinations.

Many engineers have expressed a desire for "ChatGPT, but for data," and Chroma offers this link via embedding-based document retrieval. It also comes with batteries included, providing everything teams need to store, embed, and query data, including strong capabilities like filtering, with more features like intelligent grouping and query relevance on the way.

Key features:

- Feature-rich (queries, filtering, density estimates, and many other features)

- Integrations with LangChain (Python and JavaScript) and LlamaIndex, with more to be added

- The same API in your Python notebook scales to your cluster for development, testing, and production

6. Weaviate: The Advanced Vector Database

Weaviate is a cloud-native, open-source vector database that is:

- Resilient

- Scalable

- Quick

The tool can convert text, photos, and other data into a searchable vector database using cutting-edge machine learning models and algorithms. It can perform a 10-nearest-neighbor (10-NN) search over millions of items in single-digit milliseconds. Engineers can use it to vectorize their data during the import process or submit their own vectors, ultimately creating systems for:

- Question-and-answer extraction

- Summarization

- Categorization

Weaviate Modules

Weaviate modules enable the use of prominent services and model hubs like OpenAI, Cohere, or HuggingFace, as well as local and bespoke models. Weaviate is designed with:

- Scale

- Replication

- Security in mind

Key features:

- Built-in modules for AI-powered searches

- Q&A, combining LLMs with your data, and automated categorization

- Complete CRUD capabilities

- Cloud-native, distributed, grows with your workloads, and operates nicely on Kubernetes

- Seamlessly transfer ML models to MLOps using this database

7. Deep Lake: The AI Database

Deep Lake is an AI database powered by a proprietary storage format explicitly designed for deep-learning and LLM-based applications that leverage natural language processing. It uses vector storage and various features to help engineers deploy enterprise-grade LLM-based products faster.

Deep Lake works with data of any size, is serverless, and allows you to store all data in a single location. It also offers tool integrations to help streamline your deep learning operations. Using Deep Lake and Weights & Biases, you can:

- Track experiments

- Achieve full model repeatability

W&B Integration

The integration automatically delivers dataset-related information (URL, commit hash, view ID) to your W&B runs.

Key features:

- Storage for all data types (embeddings, audio, text, videos, images, pdfs, annotations, and so on)

- Querying and vector search

- Data streaming during training models at scale

- Data versioning and lineage for workloads

- Integrations with tools like LangChain, LlamaIndex, Weights & Biases, and many more

8. Qdrant: The Open-Source Vector Search Engine

Qdrant is an open-source vector similarity search engine and database. It offers a production-ready service with an easy-to-use API for storing, searching, and managing points (high-dimensional vectors with an extra payload). The tool was designed to provide extensive filtering support.

Qdrant’s versatility makes it a good pick for neural network or semantic-based matching, faceted search, and other applications.

Key features:

- JSON payloads can be connected with vectors, allowing for payload-based storage and filtering.

- Supports a wide range of data types and query criteria, such as text matching, numerical ranges, geo-locations, and others.

- The query planner makes use of cached payload information to improve query execution.

- Write-ahead logging protects data during power outages. The update log records all operations, allowing for easy reconstruction of the most recent database state.

- Qdrant functions independently of external databases or orchestration controllers, which simplifies configuration.
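
The payload-based filtering described above can be pictured with the generic sketch below. It uses plain Python dictionaries rather than Qdrant's client API, and the point data, field names, and `filtered_search` function are hypothetical.

```python
import math

# Hypothetical points: each has a vector plus a JSON-like payload.
points = [
    {"id": 1, "vector": [0.9, 0.1], "payload": {"category": "shoes", "price": 40}},
    {"id": 2, "vector": [0.8, 0.2], "payload": {"category": "shoes", "price": 120}},
    {"id": 3, "vector": [0.9, 0.1], "payload": {"category": "hats", "price": 25}},
]

def filtered_search(query, points, category, max_price, limit=5):
    """Keep points whose payload matches, then rank the survivors by distance."""
    matches = [
        p for p in points
        if p["payload"]["category"] == category and p["payload"]["price"] <= max_price
    ]
    matches.sort(key=lambda p: math.dist(query, p["vector"]))
    return [p["id"] for p in matches[:limit]]

print(filtered_search([1.0, 0.0], points, category="shoes", max_price=100))  # [1]
```

Point 3 is just as close to the query as point 1, but its payload fails the category condition, so it never reaches the ranking step.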

9. Elasticsearch: The Open-Source Analytics Engine

Elasticsearch is an open-source, distributed, and RESTful analytics engine that can handle:

- Textual

- Numerical

- Geographic

- Structured

- Unstructured data

Based on Apache Lucene, it was initially published in 2010 by Elasticsearch N.V. (now Elastic). Elasticsearch is part of Elastic Stack, a suite of free and open tools for data:

- Intake

- Enrichment

- Storage

- Analysis

- Visualization

Scalable Search

Elasticsearch can handle a wide range of use cases. It centrally stores your data for lightning-fast search, fine-tuned relevance, and sophisticated analytics that scale easily. It expands horizontally to accommodate billions of events per second while automatically controlling how indexes and queries are dispersed throughout the cluster for slick operations.

Key features:

- Clustering and high availability

- Automatic node recovery and data rebalancing

- Horizontal scalability

- Cross-cluster and cross-datacenter replication, which allows a secondary cluster to operate as a hot backup

- Elasticsearch identifies errors to keep clusters (and data) secure and accessible.

- Works in a distributed architecture built from the ground up to provide constant peace of mind.

10. Vespa: The Data Serving Engine

Vespa is an open-source data serving engine that allows users to store, search, organize, and make machine-learned judgments over massive data at serving time. Giant data sets must be dispersed over numerous nodes and examined in parallel, and Vespa is a platform that handles these tasks for you while maintaining excellent availability and performance.

Key features:

- Writes are acknowledged back to the client within a few milliseconds, once they are durable and visible in queries.

- While servicing requests, writes can be delivered at a continuous rate of thousands to tens of thousands per node per second.

- Data is copied with redundancy that may be configured.

- Queries can include any combination of structured filters, free text search operators, vector search operators, and enormous tensors and vectors.

- Matches to a query can be grouped and aggregated based on a query definition.

- Grouping and aggregation include all matches, even when they reside on several machines that execute the query in parallel.

11. Vald: The Cloud-Native Vector Search Engine

Vald is a distributed, scalable, and fast vector search engine. Built with a cloud-native architecture in mind, it employs NGT, a fast ANN algorithm, to find neighbors. Vald offers automated vector indexing, index backup, and horizontal scaling, allowing it to search across billions of feature vectors.

It’s simple and extremely configurable. The highly configurable Ingress/Egress filter can be customized to work with the gRPC interface.

Key features:

- Vald offers automatic backups through Object Storage or Persistent Volume, allowing disaster recovery.

- It distributes vector indexes to numerous agents, each retaining a unique index.

- The tool replicates indexes by storing each index in many agents. When a Vald agent goes down, the duplicate is automatically rebalanced.

- Highly adaptable. You may choose the number of vector dimensions, replicas, etc.

- Python, Golang, Java, Node.js, and more programming languages are supported.

12. ScaNN: A Vector Similarity Search Method

ScaNN (Scalable Nearest Neighbors) is a method for efficiently searching for vector similarity at scale. Google’s ScaNN proposes a brand-new compression method that significantly increases accuracy. According to ann-benchmarks.com, this allows it to outperform other vector similarity search libraries by a factor of two.

It includes search space trimming and quantization for Maximum Inner Product Search and additional functions like Euclidean distance. The implementation is intended for x86 processors that support AVX2.

13. pgvector: The PostgreSQL Vector Extension

pgvector is a PostgreSQL extension for vector similarity search. You can also use it to store embeddings, which lets you keep all of your application data in one place. Its users benefit from ACID compliance, point-in-time recovery, JOINs, and all of the other fantastic features for which we love PostgreSQL.

Key features:

- Exact and approximate nearest neighbor search

- L2 distance, inner product, and cosine distance

- Any language with a PostgreSQL client
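
For readers who want to see what the three distance measures compute, the sketch below mirrors them in plain Python. The operator symbols in the comments are pgvector's SQL operators; the Python function names are illustrative and not part of pgvector. Note that pgvector's `<#>` operator returns the negative inner product, so that smaller values still mean "more similar" when ordering ascending.

```python
import math

def l2_distance(a, b):
    """Straight-line distance; corresponds to pgvector's <-> operator."""
    return math.dist(a, b)

def negative_inner_product(a, b):
    """Negated dot product; corresponds to pgvector's <#> operator."""
    return -sum(x * y for x, y in zip(a, b))

def cosine_distance(a, b):
    """1 minus cosine similarity; corresponds to pgvector's <=> operator."""
    dot = sum(x * y for x, y in zip(a, b))
    return 1.0 - dot / (math.hypot(*a) * math.hypot(*b))

print(l2_distance([0.0, 0.0], [3.0, 4.0]))             # 5.0
print(negative_inner_product([1.0, 2.0], [3.0, 4.0]))  # -11.0
print(cosine_distance([1.0, 0.0], [0.0, 1.0]))         # 1.0
```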

14. Faiss: The Facebook Vector Database

Facebook AI Research developed Faiss as an open-source library for fast, dense vector similarity search and clustering. It includes methods for searching sets of vectors of any size, up to those that may not fit in RAM. It also comes with code for:

- Evaluation

- Parameter adjustment

Faiss is built around index types that store a set of vectors and offer a function for searching them using L2 and/or dot product vector comparison. Some index types, such as exact search, are simple baselines.

Key features:

- Returns the nearest neighbor and the second closest, third closest, and k-th nearest neighbor.

- You can search several vectors simultaneously rather than just one (batch processing).

- Supports maximum inner product search as an alternative to minimum Euclidean distance search.

- Other distances (L1, Linf, etc.) are also supported to a lesser extent.

- Returns all elements within a specified radius of the query location (range search).

- Instead of storing the index in RAM, you can save it to disk.
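
The search modes in this list (k-nearest neighbors, batch queries, and range search) can be sketched in a few lines of plain Python. This is a brute-force illustration of the concepts only; it does not use the Faiss API, and the function names and sample vectors are made up.

```python
import math

def knn(query, vectors, k):
    """Indices of the k stored vectors closest to the query (L2 distance)."""
    order = sorted(range(len(vectors)), key=lambda i: math.dist(query, vectors[i]))
    return order[:k]

def range_search(query, vectors, radius):
    """Indices of every stored vector within `radius` of the query."""
    return [i for i, v in enumerate(vectors) if math.dist(query, v) <= radius]

def batch_knn(queries, vectors, k):
    """Answer several queries in one call, one result list per query."""
    return [knn(q, vectors, k) for q in queries]

vectors = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [5.0, 5.0]]
print(knn([0.1, 0.0], vectors, k=2))                        # [0, 1]
print(range_search([0.0, 0.0], vectors, radius=2.0))        # [0, 1, 2]
print(batch_knn([[0.0, 0.0], [5.0, 5.0]], vectors, k=1))    # [[0], [3]]
```

A k-NN query always returns exactly k results, while a range search returns however many vectors fall inside the radius, which may be none.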

15. ClickHouse: The Analytical Database

ClickHouse is an open-source column-oriented DBMS for online analytical processing that enables users to produce analytical reports in real time by running SQL queries. A truly column-oriented DBMS design is at the heart of ClickHouse’s uniqueness.

This distinct design provides compact storage with no unnecessary data accompanying the values, significantly improving processing performance. It uses vectors to process data, which improves CPU efficiency and contributes to ClickHouse’s exceptional speed.

Key features:

- Data compression is a feature that significantly improves ClickHouse’s performance.

- ClickHouse combines low-latency data extraction with the cost-effectiveness of employing standard hard drives.

- It uses multicore and multiserver setups to accelerate massive queries, a rare feature in columnar DBMSs.

- With robust SQL support, ClickHouse excels at processing various queries.

- ClickHouse’s continuous data addition and quick indexing meet real-time demands.

- Its low latency provides quick query processing, which is critical for online activities.

16. OpenSearch: The Search Engine for AI

Using OpenSearch as a vector database combines the power of classical search, analytics, and vector search in a single solution. Its vector database features help speed up AI application development by minimizing the work required for developers to:

- Operationalize

- Manage

- Integrate AI-generated assets

You can bring in your models, vectors, and information to enable vector, lexical, and hybrid search and analytics, with built-in:

- Performance

- Scalability
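
Hybrid search needs some way to merge a lexical ranking with a vector ranking. The sketch below uses reciprocal rank fusion, which is one widely used merging method; it is shown here as an assumption for illustration and not as a description of how OpenSearch combines results. The document IDs are made up, and `k=60` is a conventional default for this method.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked ID lists; each list contributes 1 / (k + rank) per document."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)

lexical_hits = ["doc_a", "doc_b", "doc_c"]  # e.g. from keyword matching
vector_hits = ["doc_c", "doc_a", "doc_d"]   # e.g. from nearest-neighbor search
print(reciprocal_rank_fusion([lexical_hits, vector_hits]))
# ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

Documents that appear near the top of both lists rise above documents that only one retriever found, without requiring the two scoring scales to be comparable.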

Key features:

- OpenSearch can be used as a vector database for various purposes, such as search, personalization, data quality, and as a vector database engine.

- Among its search use cases are multimodal search, semantic search, visual search, and generative AI agents.

- Using collaborative filtering techniques, you can create product and user embeddings and fuel your recommendation engine with OpenSearch.

- OpenSearch users can use similarity search to automate pattern matching and duplicate detection to aid data quality operations.

- The solution lets you create a platform with an integrated, Apache 2.0-licensed vector database that offers a dependable and scalable solution for embeddings and powerful vector search.

17. Apache Cassandra: The NoSQL Database for Big Data

Cassandra is a free, open-source distributed, wide-column store NoSQL database management system. It was designed to handle massive volumes of data across many commodity servers while maintaining high availability with no single point of failure.

Cassandra added vector search in its 5.0 release, demonstrating the Cassandra community’s dedication to quickly delivering dependable innovations. Cassandra’s popularity is growing among AI developers and businesses dealing with enormous data volumes as it allows them to build complex, data-driven applications.

Key features:

- A vector data type facilitates the storage and manipulation of high-dimensional Float32 embeddings, which are extensively used in AI applications.

- A storage-attached index (SAI) implementation, VectorMemtableIndex, supports approximate nearest neighbor (ANN) search.

- A Cassandra Query Language (CQL) operator, ANN OF, makes it easier for users to run ANN searches on their data.

- The vector search feature extends the existing SAI framework, eliminating the need to redesign the fundamental indexing engine.

18. KDB.AI Server: The Knowledge-Based Vector Database

KDB.AI is a knowledge-based vector database and search engine that enables developers to create scalable, dependable, and real-time apps by offering:

- Enhanced search

- Recommendation

- Personalization for AI applications that use real-time data

Key features:

- KDB.AI is unique among vector databases because it allows developers to add temporal and semantic context to their AI-powered applications.

- KDB.AI integrates seamlessly with popular LLMs and machine learning workflows and tools, such as LangChain and ChatGPT.

- Its native support for Python and RESTful APIs allows developers to perform common operations such as data ingestion, search, and analytics in their preferred applications and languages.

19. PostgreSQL: The Open-Source Relational Database

PostgreSQL is an open-source relational database that supports vector data through extensions like pgvector. This extension enables efficient similarity search on vector data, integrating with PostgreSQL’s ecosystem.

Key features:

- pgvector extension: Allows storage and querying of vector embeddings, facilitating similarity searches within the PostgreSQL environment

- Indexing: Supports various indexing methods, such as ivfflat, to optimize vector search performance

- Scalability: Offers scalability and support for large datasets, making it suitable for vector data applications.

- Flexibility: Enables complex queries combining vector searches with traditional SQL operations, providing a unified platform for diverse data types.

20. Redis: The In-Memory Database

Redis is an in-memory data structure store known for its speed and flexibility. The RediSearch module adds vector indexing and similarity search, while the RedisAI module targets AI model serving.

Key features:

- In-memory speed: Provides fast data retrieval and processing due to its in-memory nature.

- Vector similarity search: RediSearch allows the storage and querying of vector data, supporting rapid similarity searches.

- AI integration: Enables model serving and vector operations within the same environment, streamlining AI workflows.

- Scalability: Redis Cluster enables scaling across multiple nodes, maintaining performance and reliability.

21. Valkey: The Vector Database for High-Dimensional Data

Valkey is an open-source, in-memory key-value data store that began as a fork of Redis. With vector search support, it can be used to manage and search high-dimensional vector data, and it offers tools and APIs suited to vector data management and retrieval.

Key features:

- Optimized storage: Uses advanced data structures and indexing methods to efficiently store and retrieve vector data.

- High performance: Designed for rapid vector similarity searches, ensuring low latency even with large datasets.

- Rich API: Provides APIs for managing vector data, supporting a range of use cases from AI to search engines.

- Scalability: Supports distributed deployments, allowing scaling as data and query loads increase.

22. Marqo: The Open-Source Neural Search Engine For Your Text Data

Marqo is an open-source neural search engine that lets users index and search textual data using deep learning models. It provides a simple experience to build search applications with advanced natural language understanding capabilities.

Marqo's Textual Understanding

Marqo uses vector representations to store and search textual data. When indexing documents in Marqo, text is converted into high-dimensional vectors using deep learning models. Vector representations capture the semantic meaning of the text, allowing Marqo to perform semantic similarity searches.

Under the hood, Marqo uses existing vector database technologies to store and retrieve vector representations. It abstracts the complexity of working directly with low-level vector databases and provides a higher-level interface tailored for text search applications.

Pros

- Fully managed and easy to set up

- Supports vector and text search

- Offers a REST API for indexing and querying

Marqo is excellent for e-commerce platforms with an extensive catalog of products and user-generated content, such as:

- Product descriptions

- Reviews

- Q&As

Traditional keyword-based searches often fail to yield the most relevant results. Users can find products based on their intent and meaning by enabling semantic searches, even if they don't use the exact keywords.

For example, a user searching for "comfortable summer shoes" could be shown all relevant results, including sandals, flip-flops, and breathable sneakers, even if the product descriptions don't explicitly contain the words "comfortable" or "summer."

23. Transwarp Hippo: The Commercial Vector Database For Massive Datasets

Transwarp Hippo is a commercially licensed, enterprise-level, cloud-native distributed vector database that handles the complexities and demands of massive datasets. Its capabilities are suitable for use cases that rely heavily on vector operations, such as similarity search and clustering.

Pros

- Transwarp Hippo's cloud-native architecture and distributed design permit high performance and scalability.

- Supports a wide range of vector operations, including similarity search and high-density clustering.

- Features such as data partitioning, sharding, and incremental data ingestion provide flexibility and efficiency in data management.

Transwarp Hippo's Role in Real-Time Recommendations

Transwarp Hippo can help power a real-time recommendation system. Such a system requires processing and analyzing vast user data to generate personalized product recommendations, including:

- Past purchases

- Browsing history

- Product interactions

Transwarp Hippo's Scalable Recommendations

During high-traffic periods, such as sales events, Transwarp Hippo's scalability ensures the recommendation system can handle the query surge without performance degradation. The ability to ingest incremental data enables the system to update user profiles and preferences in real-time, ensuring that recommendations remain relevant and timely.

24. Annoy: The Fastest Vector Database For Read-Only Operations

Annoy (Approximate Nearest Neighbors Oh Yeah), created by Spotify, is a lightweight yet powerful C++ library with Python bindings that searches for points in space that are close to a given query point. It's designed for lightning-fast searches of large datasets, making it perfect for applications that need quick results.

Annoy also creates large, read-only file-based data structures that are mapped into memory, allowing multiple processes to share the same data.
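
The idea of a read-only, memory-mapped file can be illustrated with Python's standard library alone. The sketch below writes vectors as packed 32-bit floats and reads one back through `mmap`; the file layout, `DIM` constant, and function names are assumptions for illustration and are not Annoy's actual on-disk format.

```python
import mmap
import os
import struct
import tempfile

DIM = 3  # assumed fixed vector length
RECORD = struct.Struct(f"<{DIM}f")  # little-endian 32-bit floats

def write_vectors(path, vectors):
    """Store vectors back to back as fixed-size binary records."""
    with open(path, "wb") as f:
        for vec in vectors:
            f.write(RECORD.pack(*vec))

def read_vector(path, index):
    """Map the file read-only and decode one record at its byte offset."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mapped:
            return list(RECORD.unpack_from(mapped, index * RECORD.size))

path = os.path.join(tempfile.mkdtemp(), "vectors.bin")
write_vectors(path, [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
print(read_vector(path, 1))  # [4.0, 5.0, 6.0]
```

Because the mapping is read-only and backed by the file, several processes can map the same file and the operating system can share the underlying pages between them.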

25. Nmslib: The Open-Source Library for Non-Metric Spaces

Nmslib (Non-Metric Space Library) is a specialized open-source similarity search library that focuses on non-metric spaces. It's an excellent choice for those unique projects that require a more niche solution. The goal of the project is to create a practical and comprehensive toolkit for searching in generic and non-metric spaces.

Even though the library contains a variety of metric-space access methods, the primary focus is on generic and approximate search methods, in particular, on methods for non-metric spaces. NMSLIB is the first library with principled support for non-metric space searching.

26. LanceDB: The Vector Database Built For Persistent Storage

LanceDB is an open-source database for vector search built with persistent storage, which significantly simplifies retrieval, filtering, and management of embeddings. LanceDB's core is written in Rust and is built using Lance, an open-source columnar format designed for performing machine learning workloads.

LanceDB APIs work seamlessly with the growing Python and JavaScript ecosystems. Manipulate data with DataFrames, build models with Pydantic, and store and query with LanceDB.

27. Vectra: The Local Vector Database For Node.js

Vectra is a local vector database for Node.js, offering capabilities similar to those of Pinecone or Qdrant, but built using local files. Each Vectra index is a folder on disk. There's an index.json file in the folder that contains all the vectors for the index, along with any indexed metadata.

Memory Footprint and Metadata Management

When you create an index, you can specify which metadata properties to index, and only those fields will be stored in the index.json file. All of the other metadata for an item will be stored on disk in a separate file keyed by a GUID. Keep in mind that your entire Vectra index is loaded into memory, so it's not well-suited for scenarios like long-term chatbot memory. Use a real vector DB for that.
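
The split between indexed metadata and everything else can be sketched as follows. This is a hypothetical Python illustration of the folder layout described above, not Vectra's API (Vectra itself is a Node.js library) and not its exact file schema; `add_item` and the JSON field names are made up.

```python
import json
import os
import tempfile
import uuid

def add_item(folder, vector, metadata, indexed_fields):
    """Append an item to index.json; non-indexed metadata goes to its own file."""
    index_path = os.path.join(folder, "index.json")
    index = {"items": []}
    if os.path.exists(index_path):
        with open(index_path) as f:
            index = json.load(f)

    item_id = str(uuid.uuid4())
    indexed = {k: v for k, v in metadata.items() if k in indexed_fields}
    rest = {k: v for k, v in metadata.items() if k not in indexed_fields}

    # Only the vector and the indexed fields are kept in the shared index file.
    index["items"].append({"id": item_id, "vector": vector, "metadata": indexed})
    with open(index_path, "w") as f:
        json.dump(index, f)

    # Remaining metadata lives in a separate file keyed by the item's GUID.
    with open(os.path.join(folder, f"{item_id}.json"), "w") as f:
        json.dump(rest, f)
    return item_id

folder = tempfile.mkdtemp()
item_id = add_item(folder, [0.1, 0.9], {"lang": "en", "body": "long text"}, {"lang"})
print(sorted(os.listdir(folder)))
```

Keeping bulky fields out of the index file matters here because the whole index is loaded into memory, so only the fields needed for filtering should live in it.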

28. SingleStore Database: The Relational Database That Supports Vectors

SingleStore Database began supporting vector storage as a feature in 2017, when vector databases were not yet a thing. The robust vector database capabilities of SingleStoreDB are designed to seamlessly serve AI-driven applications, chatbots, image recognition systems, and other similar use cases.

With SingleStoreDB, the necessity for maintaining a dedicated vector database for your vector-intensive workloads becomes obsolete.

Vector Data in a Relational World

Diverging from conventional vector database approaches, SingleStoreDB takes a novel approach by housing vector data within relational tables alongside diverse data types. This innovative amalgamation enables you to effortlessly access comprehensive metadata and additional attributes related to your vector data, all while leveraging the extensive querying capabilities of SQL.

SingleStore’s Latest New Features for Vector Search

SingleStore's Pro Max release includes enhancements to vector search. Two important new features have been added to improve vector data processing and the performance of vector search:

- Indexed approximate-nearest-neighbor (ANN) search

- A VECTOR data type

Powering Large-Scale Semantic AI

Indexed ANN vector search facilitates the creation of large-scale semantic search and generative AI applications. Supported index types include inverted file (IVF), hierarchical navigable small world (HNSW), and variants of both based on product quantization (PQ) — a vector compression method.

The VECTOR type makes it easier to create, test, and debug vector-based applications. New infix operators are available for DOT_PRODUCT (<*>) and EUCLIDEAN_DISTANCE (<->) to help shorten queries and make them more readable.
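To make the two metrics behind those operators concrete, here is a minimal Python sketch of what a dot product and a Euclidean distance compute. It is plain Python for illustration only, not SingleStore code; the function names and sample vectors are assumptions made for this example.

```python
import math

def dot_product(a, b):
    """Sum of element-wise products. For normalized vectors, higher means more similar."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    return sum(x * y for x, y in zip(a, b))

def euclidean_distance(a, b):
    """Straight-line distance between two points. Lower means more similar."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical two-dimensional embeddings, chosen only to keep the math readable.
query = [1.0, 0.0]
doc = [0.6, 0.8]

similarity = dot_product(query, doc)       # ranks results in descending order
distance = euclidean_distance(query, doc)  # ranks results in ascending order
```

Note the opposite sort directions: a dot-product ranking puts the largest values first, while a Euclidean ranking puts the smallest values first.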

Key Features

- Real-time analytics and HTAP capabilities for GenAI applications.

- Highly scalable vector store support.

- Scalable, distributed architecture.

- Support for SQL and JSON queries.

- Inbuilt Notebooks feature to work with vector data and GenAI applications.

- Extensible framework for vector similarity search.

How to Choose the Right Vector Database for Your AI Project

Performance and Latency: Finding Fast and Reliable Vector Databases

Performance and latency are crucial when selecting a vector database, particularly for real-time applications such as conversational AI. Low latency means queries return results almost instantaneously, which improves the user experience. For these workloads, choose a database with high-speed retrieval capabilities.

Throughput Needs

Production systems where many users run queries simultaneously need a database with high throughput. Sustaining that throughput takes a robust architecture and effective resource utilization, so performance stays reliable without bottlenecks even under heavy load.

Optimized Algorithms

Most vector databases use approximate nearest neighbor (ANN) algorithms, such as hierarchical navigable small world (HNSW) graphs or inverted file (IVF) indexes, to achieve fast and efficient performance. These algorithms trade a small amount of recall for a much lower search cost, which makes them a good fit for balancing performance and scalability in high-dimensional vector search.
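As a rough illustration of the inverted file idea, the sketch below groups vectors into lists by nearest centroid and then scans only the closest lists at query time. It is a toy version under stated assumptions: the centroids are hard-coded here (a real IVF index would typically learn them with k-means), and the function names and `nprobe` parameter are chosen for this example rather than taken from any specific database.

```python
import math

def l2(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def build_ivf(vectors, centroids):
    """Assign every vector id to the inverted list of its nearest centroid."""
    lists = {c: [] for c in range(len(centroids))}
    for vid, vec in enumerate(vectors):
        nearest = min(range(len(centroids)), key=lambda c: l2(vec, centroids[c]))
        lists[nearest].append(vid)
    return lists

def ivf_search(query, vectors, centroids, lists, k=2, nprobe=1):
    """Scan only the nprobe closest lists instead of every stored vector."""
    probe = sorted(range(len(centroids)), key=lambda c: l2(query, centroids[c]))[:nprobe]
    candidates = [vid for c in probe for vid in lists[c]]
    candidates.sort(key=lambda vid: l2(query, vectors[vid]))
    return candidates[:k]

# Hypothetical data: two obvious clusters, with centroids supplied by hand.
vectors = [[0.0, 0.1], [0.2, 0.0], [5.0, 5.1], [5.2, 4.9], [0.1, 0.3]]
centroids = [[0.0, 0.0], [5.0, 5.0]]

lists = build_ivf(vectors, centroids)
result = ivf_search([0.1, 0.1], vectors, centroids, lists, k=2, nprobe=1)
```

Raising `nprobe` scans more lists, which improves recall at the cost of more distance computations. That is the accuracy versus speed trade-off that ANN indexes expose.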

Scalability of Vector Database: Making Sure Your Database Can Grow with Your Needs

Scalability is crucial when selecting a vector database because data size increases over time. We must ensure that the database can handle the current data and scale easily as needs grow. A database that slows down as data or user volumes increase will degrade the performance of the whole system.

Horizontal scaling

Horizontal scaling is essential for scalability in vector databases. Sharding and distributed storage let the database spread the data load across multiple nodes, ensuring smooth operation as data or query volumes increase. This is especially important for real-time applications, where low latency under high traffic is mandatory.
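The sharding pattern described above can be sketched in a few lines of Python: each item is routed to one shard, every shard answers a query with its local top-k, and a coordinator merges the partial results. This is a single-process toy under stated assumptions. The modulo routing rule and all function names are hypothetical, and a real distributed database would run each shard on a separate node and add replication.

```python
import heapq
import math

def l2(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def shard_for(item_id, num_shards):
    """Route an item to a shard by id (simple modulo, used here for clarity)."""
    return item_id % num_shards

def build_shards(items, num_shards):
    """Distribute {id: vector} pairs across num_shards in-memory shards."""
    shards = [dict() for _ in range(num_shards)]
    for item_id, vec in items.items():
        shards[shard_for(item_id, num_shards)][item_id] = vec
    return shards

def search_shard(shard, query, k):
    """Each shard returns its local top-k as (distance, id) pairs."""
    return heapq.nsmallest(k, ((l2(query, v), i) for i, v in shard.items()))

def search_all(shards, query, k):
    """Scatter the query to every shard, then merge the partial results."""
    partial = [hit for shard in shards for hit in search_shard(shard, query, k)]
    return [i for _, i in heapq.nsmallest(k, partial)]

# Hypothetical items, keyed by integer id.
items = {0: [0.0, 0.0], 1: [1.0, 1.0], 2: [0.1, 0.0], 3: [4.0, 4.0], 4: [0.0, 0.2]}
shards = build_shards(items, num_shards=2)
top = search_all(shards, [0.0, 0.0], k=3)
```

Because every shard returns its own top-k, the merged result matches what a single-node scan would return, while each node only touches its share of the data.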

Cloud vs. On-Premise

Choosing between cloud-managed services and on-premises solutions also impacts scalability. Cloud-managed services, such as Pinecone, make scaling easier by automatically deploying resources as needed. These services are ideal for dynamic workloads.

Control, Configuration, and Customization

Self-hosted solutions (such as Milvus, or a library like FAISS) offer more control but require manual configuration and resource management. They are ideal for organizations with very particular infrastructure requirements.

Data Types and Modality Support: Does the Database Support Your Types of Data?

Today’s apps frequently utilize multimodal embeddings of multiple data types, including:

- Text

- Images

- Audio

- Video

To meet these requirements, a vector database must be able to store and query multimodal embeddings seamlessly. This will ensure the database can handle complex data pipelines and support image search, audio analysis, and cross-modal retrieval.

Dimensionality Handling

Embeddings produced by complex neural networks are generally large, often with 512 to 1,024 dimensions or more. The database must efficiently store and query such high-dimensional vectors, as inefficient handling can result in higher latency and increased resource consumption.
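A quick back-of-the-envelope calculation shows why dimensionality matters for resource planning. The sketch below assumes 32-bit floats (4 bytes per value) and a hypothetical collection of one million vectors. It counts raw vector storage only and ignores index structures and metadata.

```python
def raw_vector_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Raw storage for the embeddings alone, excluding index and metadata overhead."""
    return num_vectors * dimensions * bytes_per_value

# One million vectors is an assumed collection size for illustration.
for dims in (512, 1024):
    gib = raw_vector_bytes(1_000_000, dims) / 2**30
    print(f"{dims} dimensions: {gib:.2f} GiB per million vectors")
```

Under these assumptions, doubling the dimensionality doubles the raw footprint, from roughly 1.9 GiB to roughly 3.8 GiB per million vectors.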

Query Capabilities in Vector Database: How Does the Database Handle Searches?

An efficient nearest-neighbor search is crucial for obtaining accurate and relevant results, particularly in real-time applications.

Hybrid Search

Besides similarity searches, hybrid searches are becoming increasingly important. A hybrid search combines vector similarity and metadata filtering to provide more tailored, contextual results.

In a product recommendation engine, for example, a query could prioritize embeddings corresponding to the user’s preferences and filter through metadata such as price range or category.
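The recommendation example above can be sketched as a filter followed by a similarity ranking. This minimal Python version assumes a pre-filtering strategy (metadata first, vectors second). The product records, field names, and `hybrid_search` function are hypothetical, and real databases may instead filter during or after the vector search.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def hybrid_search(query_vec, products, category, max_price, k=2):
    """Pre-filter on metadata, then rank the survivors by cosine similarity."""
    eligible = [
        p for p in products
        if p["category"] == category and p["price"] <= max_price
    ]
    eligible.sort(key=lambda p: cosine(query_vec, p["embedding"]), reverse=True)
    return [p["name"] for p in eligible[:k]]

# Hypothetical catalog with tiny two-dimensional embeddings.
products = [
    {"name": "trail shoe", "category": "shoes", "price": 90, "embedding": [0.9, 0.1]},
    {"name": "road shoe", "category": "shoes", "price": 140, "embedding": [0.8, 0.2]},
    {"name": "city shoe", "category": "shoes", "price": 60, "embedding": [0.1, 0.9]},
    {"name": "rain jacket", "category": "jackets", "price": 80, "embedding": [0.9, 0.1]},
]

user_preference = [1.0, 0.0]
results = hybrid_search(user_preference, products, category="shoes", max_price=100)
```

Here the road shoe is excluded by price and the rain jacket by category, even though both are close to the user's preference vector. Only the remaining items are ranked by similarity.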

Custom Ranking and Scoring

More advanced use cases usually involve specialized ranking and scoring processes. A vector database that lets developers implement their own algorithms allows them to personalize search results based on their business logic or industry requirements.

This adaptability allows the database to accommodate custom workflows, making it useful for a wide range of niche applications.
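One simple form of custom scoring is a weighted blend of vector similarity and a business signal. The sketch below is an assumption-laden illustration: the `popularity` field, the weights, and the `rerank` function are hypothetical, and both inputs are assumed to be already scaled to the 0 to 1 range.

```python
def rerank(candidates, similarity_weight=0.7, popularity_weight=0.3):
    """Blend vector similarity with a business signal into one final score."""
    def score(candidate):
        return (similarity_weight * candidate["similarity"]
                + popularity_weight * candidate["popularity"])
    return sorted(candidates, key=score, reverse=True)

# Hypothetical candidates, as they might come back from a similarity search.
candidates = [
    {"id": "a", "similarity": 0.95, "popularity": 0.10},
    {"id": "b", "similarity": 0.90, "popularity": 0.80},
    {"id": "c", "similarity": 0.60, "popularity": 0.90},
]

order = [c["id"] for c in rerank(candidates)]
```

With these weights, item "b" overtakes the closest match "a" because its popularity is much higher. Setting `popularity_weight` to 0 restores the pure similarity order.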

Indexing and Storage Mechanisms: What Are the Vector Database’s Underlying Structures?

Indexing strategies ensure that a vector database runs efficiently with minimal resource consumption. Depending on use cases, databases use different methods, such as Hierarchical Navigable Small World (HNSW) graphs or Inverted File (IVF) indexes.

The choice of indexing algorithm depends mainly on our application's performance requirements and the data size. Effective indexing ensures faster query execution and lower computational costs.

Disk vs. In-Memory Storage

Storage options have a significant impact on retrieval speed and resource utilization. In-memory databases store data in RAM and have a significantly faster access speed than disk-based storage. Nevertheless, this speed comes at the expense of higher memory consumption, which isn’t always feasible with large data sets.

Disk storage, while slower, is more cost-effective and better suited for large data sets or applications that don’t require real-time performance.

Persistence and Durability

Data persistence and durability are key to the reliability of our vector database. Persistent storage ensures that embeddings and associated data are safely written to durable storage and can be recovered after a failure, such as a hardware malfunction or power disruption.

An efficient vector database must support automatic backups and failover recovery to prevent data loss and ensure the availability of critical applications.

Integration and Compatibility: Can the Vector Database Fit into My Existing System?

APIs and SDKs

We need APIs and SDKs in our preferred programming languages for seamless integration with our application. Our system can easily communicate with the vector database through various client libraries, saving development time.

Framework Support

Support for AI frameworks such as TensorFlow and PyTorch is essential for current AI projects. Integration packages such as LangChain make it easier to connect our vector database with large language models and generative systems.

Ease of Deployment

Containerized, easy-to-deploy vector databases simplify the configuration of our infrastructure. Whether we run in the cloud or on-premises, simple deployment reduces the engineering effort of integrating the database into our pipeline.

Cost Considerations: What Are the Vector Database’s Financial Requirements?

Initial Investment

Compare the licensing costs of a proprietary solution against those of an open-source offering. Open-source databases can be free to license, but they may require technical expertise for deployment and maintenance.

Operational Expenses

Ongoing operating costs include cloud service charges, maintenance fees, and scaling costs. Cloud-based services are simpler to operate, but their costs can climb as data and query volumes increase.

Total Cost of Ownership (TCO)

We need to evaluate the long-term total cost of ownership, which combines initial and operational costs. Considering scalability, support, and resource requirements enables us to select a database that aligns with our budget and growth needs.

Community and Vendor Support: How Active and Helpful Are the Database’s Developers?

Active Development

Active development by a strong community or vendor keeps the database current with feature updates and improvements. Regular releases signal a commitment to keeping pace with user needs and industry trends.

Support Channels

Professional support, good documentation, and active community forums are essential when problems arise. These resources help resolve issues efficiently.

Ecosystem and Plugins

An ecosystem with additional tools and plugins makes the vector database more robust. Such integrations enable customization and extend the database capabilities to fit different use cases.

Start Building with $10 in Free API Credits Today!

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLMs, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.
