Aug 18, 2025

A Beginner’s Guide to LLM Quantization for AI Efficiency

Inference Research

Running a large language model on a laptop or small server often means slow responses, rising cloud bills, and tight memory. LLM quantization reduces model size and compute by storing weights, and sometimes activations, at lower precision, which can speed up inference, lower latency, and cut costs. Want to run models on your own hardware without trading away much accuracy? This article walks through weight quantization, activation quantization, post-training quantization, and quantization-aware training, and gives clear steps for applying them so you can run powerful models faster, cheaper, and on smaller hardware.

To help with that, AI inference APIs act as a practical solution, packaging quantized models and managing hardware, batching, and precision so you can deploy faster and focus on building features rather than tuning models.

What Is LLM Quantization?

The first GPT, released in 2018, had about 0.12 billion (117 million) parameters. By late 2019, GPT-2 reached 1.5 billion. GPT-3 jumped to 175 billion parameters in 2020. OpenAI has not disclosed GPT-4's parameter count, though unofficial estimates put it above 1 trillion.

As parameter counts climb, so do memory requirements for weights, activations, optimizer states and the KV cache. That growth pushes model sizes past the capacity of many GPUs and other accelerators, which constrains both training and real time hosting.

The result: fewer teams can run large models locally, latency goes up, and serving costs rise.

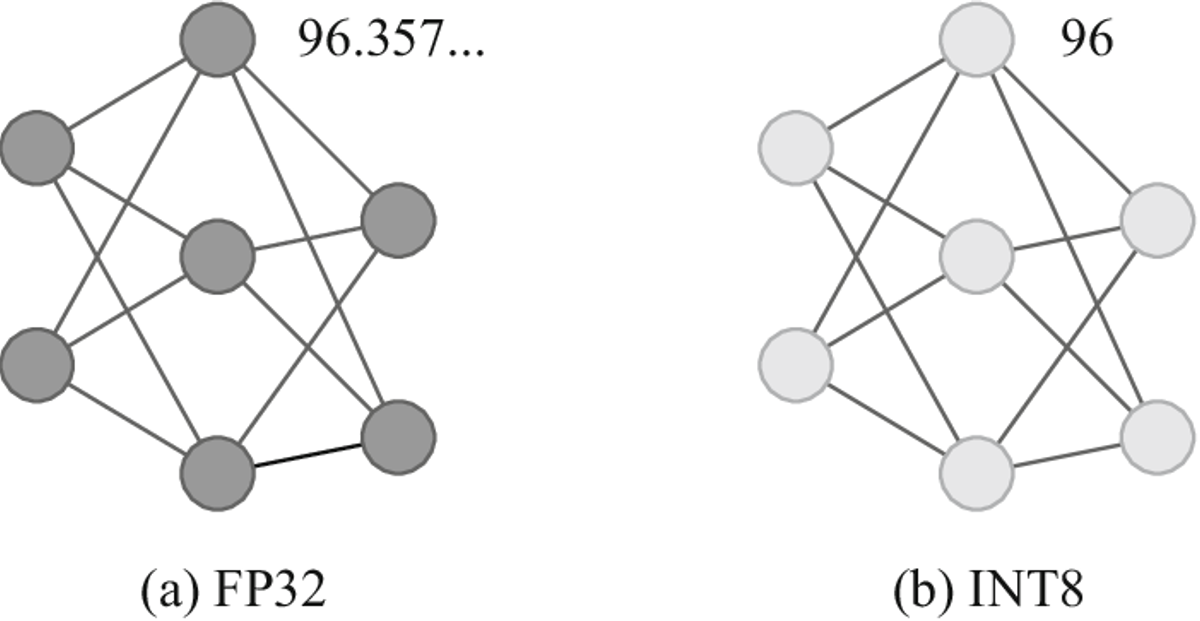

What Quantization Means: Shrinking Numeric Precision

Quantization converts model weights and activations from high-precision formats, like 32-bit floating point, into lower-precision formats such as 8-bit or even 4-bit integers. In plain terms, you store each parameter with fewer bits.

That reduces memory and storage while lowering the bandwidth and compute per operation. You trade off a small amount of numeric fidelity for much higher efficiency.
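To make the savings concrete, here is a rough back-of-the-envelope sketch. The 7-billion-parameter model is a hypothetical example, and the figures cover weights only; they ignore scale and zero-point metadata, activations, and the KV cache.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Hypothetical 7-billion-parameter model, chosen only for illustration.
for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(7e9, precision):.1f} GB")
```

Under these assumptions the same weights shrink from roughly 28 GB at FP32 to about 3.5 GB at int4.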

Image Compression Analogy: Why Bits Can Be Cut Without Breaking Things

Think of a high-resolution photo saved as a compressed file for the web. You remove some information to shrink the file. The picture still looks good for most uses, but takes less storage and loads faster.

Quantization does the same for a model. It introduces quantization noise, but often preserves the behavior the model needs for inference.

Theory of Quantization: How Numeric Reduction Works

Weights in neural networks are learned coefficients that shape activations across layers. Quantization maps continuous high-precision values to a discrete set of lower-precision levels. Core concepts include scale and zero point, which map integer representable values back into a floating point range.

You can apply symmetric quantization, where the integer range is centered on zero and the zero point is fixed at 0, or asymmetric quantization with a non-zero zero point. You can quantize per tensor, using a single scale for a whole tensor, or per channel, using a different scale for each output channel. Per-channel quantization often preserves accuracy better for the linear layers that dominate LLMs.
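Written out, the scale and zero point described above take the following form. This is the standard affine formulation; the symbols (s for scale, z for zero point, q for the stored integer, and the integer range q_min to q_max) are notation chosen here rather than taken from a specific library.

```latex
s = \frac{x_{\max} - x_{\min}}{q_{\max} - q_{\min}}, \qquad
z = \operatorname{round}\!\left(q_{\min} - \frac{x_{\min}}{s}\right)

q = \operatorname{clamp}\!\left(\operatorname{round}\!\left(\frac{x}{s}\right) + z,\; q_{\min},\; q_{\max}\right), \qquad
\hat{x} = s\,(q - z)
```

In the symmetric case z = 0 and s is typically set to max|x| / q_max, so only the scale needs to be stored.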

How LLM Quantization Works in Practice: Steps and Options

- Precision reduction: Convert FP32 or FP16 weights and sometimes activations to int8 or int4. That lowers the memory footprint for model parameters and, if you quantize it as well, the KV cache.

- Calibration: For post-training approaches, run a calibration dataset through the model to collect activation ranges. Use those ranges to set scales and zero points.

- Static versus dynamic activation quantization: Static quantization precomputes activation ranges and uses quantized kernels during inference. Dynamic quantization quantizes activations on the fly using observed ranges at runtime.

- Post-training quantization and quantization-aware training: Post-training quantization is fast and does not require retraining. Quantization-aware training simulates quantization during training to adapt the weights so the model tolerates lower precision better.

- Dequantization: Quantized computation often requires occasional dequantization operations when interfacing with float operations. Efficient inference engines fuse operations to avoid costly conversions.

- Per channel and tensor: Choose per channel for better accuracy, per tensor when memory or hardware constraints demand simplicity.

Common Quantization Methods Explained

- Post-training quantization (PTQ): Apply quantization after training, using a calibration pass. Quick and hardware-friendly.

- Quantization-aware training (QAT): Inject quantization effects during training so weights adapt. More accurate but more costly.

- Integer only inference: Convert model to integer arithmetic for faster, lower power execution on supported hardware.

- Mixed precision: Keep sensitive parts in higher precision, such as FP16 or BF16, while quantizing the bulk to int8 or int4.

- Rounding and adaptive rounding: Algorithms like optimal rounding and Hessian-weighted rounding reduce quantization error with a small amount of extra computation.

- Advanced algorithms: GPTQ-style post-training methods use layer-wise reconstruction with second-order information, and AWQ-style methods use activation-aware weight scaling, to recover accuracy at around 4 bits.

Benefits of LLM Quantization: What You Gain

- Lower memory footprint: Weight and activation memory drop dramatically when you move from 32-bit to 8-bit or 4-bit. That lets larger models run on GPUs with 24 gigabytes or less.

- Faster inference and higher throughput: Smaller data types reduce memory bandwidth and increase cache efficiency. Many inference stacks provide faster int8 matrix multiply kernels.

- Lower energy use: Less memory movement and more straightforward arithmetic reduce power draw.

- Higher concurrency: If you also quantize the KV cache, each token takes less memory, so you can serve more simultaneous streams from the same GPU memory budget.

- Cost savings: Using fewer or less expensive accelerators can host the same model, lowering cloud or rack-level costs.

- Better deployment flexibility: Run on edge devices, mobile, or modest cloud instances where full precision models would not fit.

When to Use Quantization: Practical Rules of Thumb

Use quantization if you must deploy on limited GPU memory, such as 24 gigabytes or less. Use it when you need lower latency or higher throughput for real-time services like chatbots.

Use it to shrink serving costs and increase concurrency by reducing the KV cache size per token. Choose quantization if you can tolerate small accuracy trade-offs.

Ask yourself:

Does the application tolerate slight degradation in rare cases? If yes, quantization may be a good trade.

When Not to Use Quantization: Known Limits

Do not quantize when you require the highest possible accuracy for safety-critical systems. Skip it if the model is already small and gains would be marginal.

Avoid it when your hardware stack lacks support for the chosen quantized format or when inference libraries do not provide optimized int kernels.

Trade Offs and Risks: Quantization Noise and Accuracy Loss

Quantization introduces quantization noise. That can cause slight shifts in logits and downstream sampling behavior. Outlier weights and long tail activation distributions make some layers sensitive.

Embedding layers, layer norms, and softmax operations often need special handling or higher precision. You can reduce errors with:

- Per channel scales

- Bias correction

- Calibration

Some tasks show larger accuracy degradation at 4 bits than at 8 bits. Measure on a representative validation set and profile failure modes.

KV Cache, Tokens, and Serving Effects

Quantizing the KV cache reduces per-token memory cost. That directly increases the number of tokens you can keep in memory and improves concurrency.

Smaller KV entries also reduce memory bandwidth when streaming tokens during autoregressive decoding. Quantize KV representations carefully, as errors can accumulate over long generation sequences.
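A quick sizing sketch shows why KV precision matters for concurrency. The model shape (32 layers, 32 KV heads, head dimension 128) and the 8 GiB cache budget are hypothetical values picked for illustration, and the calculation ignores any per-block scale metadata a real quantized cache would store.

```python
def kv_cache_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_value):
    """Keys and values are both cached, hence the factor of 2."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


# Hypothetical model shape, for illustration only.
shape = dict(num_layers=32, num_kv_heads=32, head_dim=128)
fp16 = kv_cache_bytes_per_token(**shape, bytes_per_value=2)
int8 = kv_cache_bytes_per_token(**shape, bytes_per_value=1)

budget = 8 * 1024**3  # assume 8 GiB of GPU memory reserved for the KV cache
print(f"fp16: {fp16} bytes/token, {budget // fp16} tokens fit")
print(f"int8: {int8} bytes/token, {budget // int8} tokens fit")
```

With these numbers, halving the bytes per cached value doubles the number of tokens that fit in the same budget.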

Hardware and Software Considerations: Practical Deployment Notes

- GPUs: NVIDIA tensor cores may accelerate mixed precision, like FP16 and int8. Some GPU runtimes require specific kernel implementations for int8 to see speed gains.

- Inference engines: Use optimized stacks such as TensorRT, ONNX Runtime, and OpenVINO, or specialized projects like bitsandbytes, llama.cpp, and GPTQ toolkits for lower-precision inference.

- Precision support: Check whether your hardware supports integer-only or mixed-precision fast paths and whether drivers and libraries include int8 or 4-bit fused kernels.

- Memory alignment and packing: Low-bit formats like 4-bit often pack two values per byte. That requires tooling to pack and unpack efficiently to avoid overhead.
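The packing mentioned in the last item can be sketched in a few lines. The nibble order used here (first value in the low four bits) is an assumption for illustration; real formats and kernels each define their own layout.

```python
def pack_int4(values):
    """Pack unsigned 4-bit values (0..15) two per byte, low nibble first."""
    values = list(values)
    if len(values) % 2:
        values.append(0)  # pad to an even count
    packed = bytearray()
    for lo, hi in zip(values[0::2], values[1::2]):
        if not (0 <= lo <= 15 and 0 <= hi <= 15):
            raise ValueError("values must fit in 4 bits")
        packed.append(lo | (hi << 4))
    return bytes(packed)


def unpack_int4(packed, count):
    """Recover the first `count` 4-bit values from packed bytes."""
    values = []
    for byte in packed:
        values.append(byte & 0x0F)  # low nibble
        values.append(byte >> 4)    # high nibble
    return values[:count]


codes = [1, 15, 0, 7, 9]
packed = pack_int4(codes)
print(len(codes), "values stored in", len(packed), "bytes")
```

The caller has to remember the original count, since an odd-length input is padded with a zero nibble.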

Advanced Techniques: Getting 4-bit to Work at Scale

Channel-wise quantization and layer-wise reconstruction reduce error when going to 4 bits. Algorithms that reconstruct activations using small calibration sets can yield high fidelity without retraining.

Quantization-aware training remains the gold standard for the least accuracy loss when you can afford retraining. Combine it with mixed precision, keeping attention and layer norm in higher precision while quantizing feed-forward layers, for balanced results.

Operational Tips: Practical Steps for Teams

Start with post-training int8 quantization and run a calibration pass on a representative dataset. Measure perplexity and downstream task metrics. If the accuracy loss is unacceptable, try per-channel scales or quantization-aware training on a smaller training run.

Profile memory and latency with real traffic patterns. Test long-running generations to detect KV cache degradation. Log edge cases where quantization changes outputs significantly and iterate.

Which quantization method fits your use case? Consider the hardware available, the tolerance for accuracy loss, and whether you can retrain.


Types of LLM Quantization

Linear quantization maps a floating-point range to evenly spaced integers using a scale and a zero point.

Compute the per-tensor or per-channel min and max values, then derive the scale:

scale = (max - min) / (Qmax - Qmin)

Pick a zero point so that the real zero maps to an integer, round the scaled values, and store only the integer weights plus the scale and zero point. The integers are dequantized on the fly or used directly in integer kernels.
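The procedure above can be sketched in plain Python. This is a minimal illustration for a single tensor held as a list of floats, assuming a signed 8-bit target range; the function names are made up for this example, and production code would operate on arrays with vectorized kernels.

```python
def quantize_affine(values, qmin=-128, qmax=127):
    """Linear (affine) quantization of a list of floats to integers."""
    # Widen the range to include 0.0 so real zero maps exactly to an integer.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(qmin - lo / scale)
    q = [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_affine(q, scale, zero_point):
    """Map stored integers back to approximate float values."""
    return [scale * (qi - zero_point) for qi in q]


weights = [-1.0, 0.0, 0.25, 2.0]
q, scale, zero_point = quantize_affine(weights)
restored = dequantize_affine(q, scale, zero_point)
print(q, scale, zero_point)
print(restored)
```

Only the integers in `q` plus one `scale` and one `zero_point` need to be stored; the reconstruction error per value is about half a quantization step at most.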

Advantages

- Simple to implement

- Drops model size substantially

- Accelerates inference on hardware with INT8 kernels.

Limitations

It assumes a roughly uniform distribution of values, so tails and outliers cause larger quantization error, and per-tensor schemes lose fidelity on layers with varied ranges. When weight distributions vary across output channels, per-channel scales cut the error further.

Blockwise Quantization: Split the Matrix and Treat Each Piece on Its Own

Blockwise quantization divides large tensors into small blocks and applies independent quantization per block. That raises fidelity because each block has a tighter dynamic range, which shrinks quantization noise for nonuniform weight distributions typical in LLM attention and feed-forward layers.

Blockwise schemes pair well with specialized kernels that pack blocks efficiently. The trade-offs are increased bookkeeping for scales and zero points, some extra memory for those parameters, and more complex kernel implementations. When models show heavy per-row or per-column variance, blockwise methods usually outperform global linear mapping.
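A small sketch makes the benefit visible. It uses symmetric absmax quantization to a 4-bit range with one scale per block; the block sizes and the toy weight vector are assumptions chosen so that one half of the values is much larger than the other.

```python
def quantize_blockwise(values, block_size=4, qmax=7):
    """Symmetric absmax quantization with one scale per block (range -7..7)."""
    blocks = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        scale = max(abs(v) for v in block) / qmax or 1.0
        q = [max(-qmax, min(qmax, round(v / scale))) for v in block]
        blocks.append((q, scale))
    return blocks


def dequantize_blockwise(blocks):
    return [qi * scale for q, scale in blocks for qi in q]


def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Toy weights: the second half is roughly 100x larger than the first half.
weights = [0.01, -0.02, 0.015, 0.005, 1.5, -2.0, 0.8, 1.1]

global_err = mean_abs_error(
    weights, dequantize_blockwise(quantize_blockwise(weights, block_size=8)))
block_err = mean_abs_error(
    weights, dequantize_blockwise(quantize_blockwise(weights, block_size=4)))
print(f"one global scale: {global_err:.4f}, two blocks: {block_err:.4f}")
```

With a single scale the small weights all collapse to zero, while per-block scales keep them distinguishable at the cost of storing one extra scale.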

Weight Quantization versus Activation Quantization: One is Static, One is Fluid

Weights are fixed after training, so weight quantization is easier: you can precompute scales and zero points and ship compact weight blobs. Activations change per input and often require runtime calibration or dynamic adjustment.

Activation quantization is harder because you must capture the activation dynamic range robustly; without it, you can clip necessary signals or introduce significant errors. Pairing weight quantization with activation quantization unlocks the most size and speed gains. Ask whether your target hardware supports low-precision activation GEMMs before committing to full activation quantization.

Post-Training Quantization (PTQ): Fast, Low Cost, Deployable

PTQ takes a trained model and maps weights and possibly activations to lower precision without retraining. You run a calibration dataset through the model to collect activation statistics, compute scales and zero points, and then quantize. PTQ is quick, needs no access to the original training pipeline, and suits production settings where a small accuracy drop is acceptable.

The downside:

PTQ accuracy can degrade on sensitive layers or very low bit widths like 4 bits, unless you use per-channel or blockwise schemes and intelligent calibration. When you cannot afford retraining, PTQ is usually the first practical step.

Quantization-Aware Training (QAT): Build The Model To Tolerate Low Precision

QAT inserts simulated quantization during training so the model learns to compensate for quantization noise. The forward and sometimes the backward passes use fake quantization operators, with straight-through estimators for gradient flow. QAT keeps a floating-point copy of weights for updates while using quantized weights to compute activations.

Benefits:

Better accuracy at low bit widths, especially under aggressive compression.

Trade-offs:

It costs training time, needs the original training pipeline and data, and increases memory use during training. Use QAT when accuracy is a primary constraint and you can afford extra training cycles.
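The fake-quantization and straight-through-estimator idea can be shown on a deliberately tiny problem: fitting a single weight. Everything here (the grid step of 0.25, the target weight, the learning rate, the three data points) is an assumption chosen for illustration, not a recipe for real models.

```python
def fake_quant(w, scale=0.25, qmin=-8, qmax=7):
    """Quantize to a 4-bit grid, then map straight back to float."""
    q = max(qmin, min(qmax, round(w / scale)))
    return q * scale


def train(target_w=0.9, lr=0.1, steps=50):
    """Fit y = w * x while the forward pass only ever sees the quantized weight."""
    w = 0.0  # latent full-precision copy that receives the updates
    data = [(x, target_w * x) for x in (-1.0, 0.5, 2.0)]
    for _ in range(steps):
        w_q = fake_quant(w)  # forward pass uses the quantized weight
        # Gradient of the mean squared error with respect to w_q.
        grad = sum(2 * (w_q * x - y) * x for x, y in data) / len(data)
        # Straight-through estimator: treat d(w_q)/dw as 1 and update w directly.
        w -= lr * grad
    return w, fake_quant(w)


latent_w, quantized_w = train()
print(latent_w, quantized_w)
```

Because 0.9 is not on the grid, the latent weight ends up hovering near the boundary between the two nearest representable values, 0.75 and 1.0, which is a small-scale version of the oscillation QAT recipes have to manage.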

Dynamic Quantization: Adjust at Inference Time for Quick Wins

Dynamic quantization defers some quantization decisions to inference. Weights get statically quantized while activation ranges are measured or adapted per batch. This enables immediate deployment with slight quality loss and is very effective on CPU inference, where re-quantizing activations per batch is cheap.

The approach suits models that see diverse inputs where static activation ranges fail. The drawback is that dynamic adjustment can add per-batch overhead and may not match the raw throughput of fully static quantized kernels on specialized accelerators.
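The difference between a calibrated static range and a per-batch dynamic range can be sketched as follows. The calibrated range of [-4, 4] and the sample batch are assumptions; symmetric int8 quantization is used for simplicity.

```python
def quantize_with_scale(values, scale, qmax=127):
    """Symmetric quantization with a given scale, clamped to [-qmax, qmax]."""
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def dynamic_scale(values, qmax=127):
    """Scale derived from the batch itself at inference time."""
    return max(abs(v) for v in values) / qmax or 1.0


static_scale = 4.0 / 127   # assume calibration only saw activations in [-4, 4]
batch = [0.5, -3.0, 9.0]   # 9.0 falls outside the calibrated range

static_out = [q * static_scale for q in quantize_with_scale(batch, static_scale)]
dyn = dynamic_scale(batch)
dynamic_out = [q * dyn for q in quantize_with_scale(batch, dyn)]

print("static :", static_out)   # the outlier is clipped to about 4.0
print("dynamic:", dynamic_out)  # the outlier survives, with coarser steps elsewhere
```

The dynamic path pays for an extra pass over each batch to find the range, which is the per-batch overhead described above.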

Mixed Precision Quantization: Pick The Right Bits For Each Layer

Mixed precision assigns different bit widths per layer or tensor. For example, keep attention output and layer norm in FP16 while quantizing large linear layers to INT8 or INT4.

Mixed precision can be chosen manually using sensitivity analysis or automatically with search algorithms that optimize accuracy versus memory and latency budgets.

The main benefit:

You retain accuracy where it matters and compress the heavy parts.

Drawbacks:

Implementation complexity and the need for heterogeneous kernel support. Are you ready to profile layer sensitivity and target layerwise int8, int4, or float16 to hit latency targets?

Per-Channel Versus Per-Tensor Scaling: Where To Place Your Precision

Per-tensor uses a single scale per tensor, while per-channel uses separate scales per output channel or row. Per-channel reduces quantization error for matrices where different channels have different dynamic ranges, and is commonly used for weights.

It costs more storage for scales but often yields significant accuracy gains, especially at low bits. Per-tensor remains useful when kernel simplicity and memory alignment matter.
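A two-row toy matrix is enough to see the effect. The weights below are made up so that one output channel has a much smaller range than the other; the helper names are hypothetical.

```python
def absmax_scale(values, qmax=127):
    return max(abs(v) for v in values) / qmax or 1.0


def quant_dequant(values, scale, qmax=127):
    """Quantize symmetrically to int8, then map back to float."""
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]


def total_abs_error(matrix, scales):
    """Sum of |w - dequant(quant(w))| using one scale per row."""
    return sum(
        abs(w - w_hat)
        for row, s in zip(matrix, scales)
        for w, w_hat in zip(row, quant_dequant(row, s))
    )


weights = [
    [0.010, -0.020, 0.015],  # small-range output channel
    [5.000, -3.000, 4.200],  # large-range output channel
]

tensor_scale = absmax_scale([w for row in weights for w in row])
per_tensor_err = total_abs_error(weights, [tensor_scale] * len(weights))
per_channel_err = total_abs_error(weights, [absmax_scale(row) for row in weights])
print(f"per-tensor: {per_tensor_err:.5f}, per-channel: {per_channel_err:.5f}")
```

With a shared scale the small channel is quantized with steps larger than its values; giving it its own scale removes most of that error for the price of one extra stored number per row.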

Calibration Techniques: How to Choose Ranges That Shrink Error

Calibration collects activation statistics on a representative dataset to set quant ranges. Standard methods include max range, percentile clipping to ignore outliers, and statistical methods using mean and standard deviation to define a symmetric range. Percentile calibration is robust when outliers would otherwise skew scales.

Some recipes use layerwise or blockwise calibration and fine-tune thresholds with a small evaluation set. QLoRA style approaches replace linear layers with quantized linear layers that manage internal ranges, often removing separate calibration steps for weights. The calibration method you choose depends on the input distribution and the application's tolerance to occasional clipping.
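To illustrate max-range versus percentile calibration, here is a minimal sketch on synthetic data. The activation values, the single outlier of 50.0, and the simple floor-index percentile rule are all assumptions; calibration tools typically work from histograms instead.

```python
def percentile_abs(values, pct):
    """Percentile of absolute values, using a simple floor-index rule."""
    ordered = sorted(abs(v) for v in values)
    index = int(pct / 100 * (len(ordered) - 1))
    return ordered[index]


# Synthetic activations: 999 values in [-1, 1) plus one large outlier.
activations = [(i % 200 - 100) / 100 for i in range(999)] + [50.0]

max_clip = percentile_abs(activations, 100)   # max-range calibration
pct_clip = percentile_abs(activations, 99.9)  # percentile clipping

qmax = 127
print(f"max range : clip={max_clip}, step={max_clip / qmax:.4f}")
print(f"percentile: clip={pct_clip}, step={pct_clip / qmax:.4f}")
```

Here the outlier inflates the max-range step size by a factor of 50, while percentile clipping keeps fine resolution for the bulk of the values and accepts clipping the single outlier.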

Low-Bit, Binary, And Ternary Methods: Extreme Compression With Bigger Accuracy Hits

Binary quantization maps weights to two values, typically ±1. Ternary adds a zero option and uses three values. These methods cut model size drastically and can enable bit-packed inference using logical ops.

The downside: substantial accuracy loss in many LLM tasks unless you combine them with heavy retraining, layer rearchitecting, or compensation techniques. Expect accuracy drops often measured in single-digit points or more; use these approaches when memory and compute are severely constrained, and model quality can be traded away.
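A ternary mapping can be sketched in a few lines. The threshold rule used here (0.7 times the mean absolute weight) is a common heuristic adopted as an assumption for this example, and the weights are made up.

```python
def ternarize(weights, threshold_ratio=0.7):
    """Map weights to codes in {-1, 0, +1} plus one shared magnitude alpha.

    The threshold (a fraction of the mean absolute weight) is a heuristic
    assumed for this sketch, not a universal rule.
    """
    mean_abs = sum(abs(w) for w in weights) / len(weights)
    threshold = threshold_ratio * mean_abs
    codes = [0 if abs(w) <= threshold else (1 if w > 0 else -1) for w in weights]
    kept = [abs(w) for w, c in zip(weights, codes) if c != 0]
    alpha = sum(kept) / len(kept) if kept else 0.0
    return codes, alpha


weights = [0.9, -0.05, 0.4, -0.8, 0.02, -0.6]
codes, alpha = ternarize(weights)
approx = [c * alpha for c in codes]
print(codes, alpha)
print(approx)
```

Every surviving weight is replaced by the same magnitude, which is why accuracy usually needs retraining to recover.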

Blockwise And Distribution-Aware Blocks: Handle Nonuniform Distributions Naturally

When weight histograms differ across a tensor, splitting into blocks that align with those local distributions reduces quantization error. Distribution-aware blocking groups values with similar statistics and then applies tailored scales.

That gives better fidelity than straight global mapping while remaining compatible with low-precision kernels designed for blocked formats. The cost shows up in more scale metadata and more complex packing, which can limit speedups if kernels do not exploit the block layout.

Hardware And Kernel Considerations: Quantization is Only as Good as The Runtime

Different accelerators have different support for low-precision math. CPUs often benefit from dynamic int8 kernels and optimized backends like FBGEMM or QNNPACK. GPUs may use INT8 tensor cores, vectorized INT4 kernels, or prefer FP16/FP32 mix.

Custom runtimes may need special packing, block sizes, or per-channel dequantization to reach peak efficiency. Pick quantization formats that map well to your target hardware to get real latency and throughput gains.

QLinear And QLoRA-Style Layers: Package Quantization Inside The Operators

Some toolchains replace linear layers with QLinear implementations that store quantized weights and dequantize or run fused integer math internally. QLoRA-style adapters let you fine-tune quantized models, or use LoRA with quantized backbones, without separate calibration steps. These patterns reduce integration friction and let inference engines handle quantization details.

Choosing An Approach: What to Try First

Start with PTQ with per-channel int8 for weights and dynamic int8 activation quantization. If accuracy loss exceeds tolerance, try blockwise int4 or mixed precision and test QAT on the most sensitive layers.

Use a representative calibration set, measure quantization error and downstream task metrics, and iterate. Keep hardware constraints and kernel support in mind as you test options. Which metric matters most, peak throughput or minimal quality loss, will guide the next step.


Practical Steps for Quantizing a Model

Choose a model that matches your task and target hardware.

Ask which family you need:

GPT-style generative behavior or an encoder model for retrieval. Check license, size, and layer types because some transformer variants respond differently to reduced precision. Convert the model to a quantization-friendly format early.

For PyTorch, keep a scripted or traced module or an ONNX graph. For TensorFlow, export a SavedModel or TFLite flatbuffer. Strip training artifacts and freeze the graph so weights and operator shapes are fixed before you collect statistics. Prepare a calibration set and an evaluation set that mirror expected prompts and token lengths.

Pick Your Quantization Strategy with Purpose

Decide whether you will use post-training quantization (PTQ) or quantization-aware training (QAT). PTQ is faster and often enough for weight-only quantization and int8. QAT adds fake quantization nodes and fine-tuning to recover accuracy at aggressive, lower bit widths.

Choose Bit Widths and Formats Next

Common choices are int8 and int4 for weights, float16 or bfloat16 for activations, or mixed precision where weights are int4 and activations remain fp16.

Pick Scaling Style

Linear scaling maps values uniformly, while logarithmic or floating point-like formats preserve small values better.

Select Per-Tensor or Per-Channel Scaling for Weights

Use per-channel symmetric scaling for linear layers to reduce quantization error, and per-tensor asymmetric scaling for activations, where a zero point lets a skewed range (such as all-positive outputs) use the full integer range while still representing zero exactly.

Prepare the Calibration Dataset and Metrics

Assemble 200 to 2000 representative sequences for calibration, depending on model size and token variety. Include edge cases like long contexts and short prompts. For calibration, collect activation histograms and min-max ranges, or use percentile clipping, such as the 99.9th percentile, to avoid outlier blow-up.

Choose evaluation metrics tied to the task:

- Perplexity for language modeling

- Token accuracy for classification

- BLEU or ROUGE for generation

- Sampling quality measures for conversational systems

Add system metrics like latency p50 and p95, throughput in tokens per second, memory footprint, and power draw for hardware-constrained deployments.
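The system metrics above can be summarized from per-request timings with the standard library. This sketch assumes requests ran one at a time, so throughput is total tokens over total time; the sample latencies and token counts are invented.

```python
import statistics


def summarize(latencies_s, tokens_generated):
    """Latency percentiles and aggregate throughput from per-request timings."""
    # quantiles(n=100) returns 99 cut points; index 49 is p50, index 94 is p95.
    cuts = statistics.quantiles(latencies_s, n=100, method="inclusive")
    return {
        "p50_s": cuts[49],
        "p95_s": cuts[94],
        # Assumes sequential requests; concurrent serving needs wall-clock time.
        "tokens_per_s": sum(tokens_generated) / sum(latencies_s),
    }


# Invented measurements: five requests, 20 tokens each, one slow outlier.
latencies = [0.10, 0.12, 0.11, 0.30, 0.13]
tokens = [20, 20, 20, 20, 20]
report = summarize(latencies, tokens)
print(report)
```

Reporting p95 next to p50 matters because a single slow request, like the 0.30 s one here, barely moves the median but dominates tail latency.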

Apply Quantization to Weights and Activations Step by Step

- Export a stable inference graph or module.

- Run a calibration pass in FP32 while recording activation ranges and weight statistics.

- Compute scale and zero point for each tensor or channel using min max, KL divergence, or MSE criteria.

- Quantize weights to target integer format using chosen scaling method and rounding with bias correction if available.

- For activation quantization, choose dynamic quantization, which computes scales at runtime, or static quantization, which applies calibrated scales.

- Consider weight-only quantization to reduce model memory without touching activation flows, then test inference memory and latency.

- If accuracy loss is unacceptable, run quantization-aware training by inserting fake quant nodes and fine-tuning with a small learning rate for a few epochs to recover lost performance.

- Evaluate options such as groupwise quantization, block quantization, or learned scale factors to trade off accuracy and compression.

Calibration Techniques and Tricks That Move the Needle

Use histogram or KL divergence-based calibration to select clipping thresholds that reduce quantization noise from outliers. Try percentile clipping, such as 99.5 or 99.9, depending on how extreme your activations are. Use per-channel scaling for weights and per-tensor scaling for activations by default.

For very low bit counts, explore advanced methods such as GPTQ-style second-order correction or AWQ (activation-aware weight quantization), which uses activation statistics to find and rescale the most salient weight channels before quantizing. Apply bias correction to weight quantization to remove systematic shifts in layer outputs after rounding. When calibrating, log layer-wise quantization error and monitor token-level logits difference for the most fragile layers.

Evaluate Accuracy and Performance with Concrete Tests

Run the evaluation set and check task metrics like perplexity, exact match, or generation quality. Compute logits similarity, such as cosine distance or average KL across tokens, to detect distribution shifts. Benchmark latency with representative batch sizes and sequence lengths, and record throughput in tokens per second.
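The logits comparison can be sketched with two small helpers. This version reports cosine similarity (one minus the cosine distance) and KL divergence for a single token position; the logit values are invented, and a real evaluation would average over many positions.

```python
import math


def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(ref_logits, test_logits):
    """KL(P_ref || P_test) over the vocabulary for one token position."""
    p, q = softmax(ref_logits), softmax(test_logits)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


# Invented logits for one position: full-precision reference vs. quantized model.
fp16_logits = [2.0, 0.5, -1.0, 0.1]
int4_logits = [1.8, 0.7, -0.9, 0.0]

print("cosine similarity:", cosine_similarity(fp16_logits, int4_logits))
print("KL divergence    :", kl_divergence(fp16_logits, int4_logits))
```

Tracking these per layer or per prompt type helps separate harmless numeric drift from shifts that change which token is sampled.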

Measure memory consumption for model weights and activations during inference. Profile power and hardware utilization when possible. If a single metric drops sharply, isolate which layers or operators cause the jump and revert those layers to higher precision or apply mixed precision to those blocks.

Iterate and Fix the Real Problems

If accuracy drops unacceptably, try these moves: increase activation precision to fp16 while keeping weights low bit, switch from per tensor to per channel quantization for offending layers, lower group size, adjust clipping percentile, or apply short QAT passes on a small dataset.

Try learned-scale methods such as LSQ (learned step size quantization), where the scale is a parameter fine-tuned with gradients. If one layer is brittle, keep that layer in FP16 and quantize the rest. Track changes using a small but representative test suite so you can measure regression quickly.

Deployment and Inference Engine Integration

Convert the quantized model to the runtime format your target engine supports, such as:

- ONNX Runtime quantized graph

- TensorRT engine

- Vendor SDK

Replace generic operators with optimized low-precision kernels and use packed weight storage to reduce memory bandwidth. Align tensor layouts to the hardware's preferred format to avoid unnecessary copies. Test the runtime with exact prompt flows and sampling parameters to ensure sampling behavior remains consistent after quantization.

Hardware Level Optimizations That Matter

Map quantized operations to hardware primitives such as int8 GEMM on CPU VNNI or tensor core int8 on modern GPUs. Use fused kernels for matmul and layer norm when the runtime supports them. Take advantage of memory mapping and weight packing to reduce memory traffic.

Use batch sizes and sequence lengths that keep compute units busy but avoid spilling activation data to host memory. Consider FPGA or DPU deployments when you need ultra-low power, and validate operator coverage because some custom accelerators omit specific kernels.

Common Pitfalls and How to Avoid Them

Unexpected accuracy loss often comes from poor calibration or extreme clipping. Weight-only quantization can still break generation quality if key layers are small and sensitive. Overly large group sizes can add quantization noise, because more values share one scale.

Ignoring activation distribution shifts caused by prompt engineering will cause surprises in production. Validate across multiple prompt types and temperatures and log both functional and infrastructure metrics after deployment.

Tools, Libraries, and Methods to Try

Use bitsandbytes for int8 and int4 weight compression in PyTorch, ONNX Runtime quantization for cross-framework deployment, TensorRT for GPU-optimized int8 paths, and TensorFlow Model Optimization Toolkit for TF models. Explore model-specific methods such as GPTQ and AWQ for aggressive weight-only quantization with low accuracy drop. Use lightweight runtimes like ggml or llama.cpp for CPU deployments where memory maps and quantized kernels matter.

Quick Questions to Guide Your Next Step

Which model family and size will you target? What hardware is available for inference and does it support int8 or custom low-precision math? Do you have a calibration dataset that reflects production prompts? Answering these will narrow choices and speed implementation.

Start Building with $10 in Free API Credits Today!

Inference offers OpenAI-compatible serverless inference APIs that run top open source LLMs with careful attention to throughput, latency, and cost. The stack uses weight quantization and activation quantization so that more model capacity fits into the same GPU memory. You will see int8 and int4 rollouts and mixed precision options like FP16 alongside quantized kernels to balance accuracy and speed.

These choices reduce memory footprint and increase throughput while keeping inference latency low on GPU and specialized hardware. Want a concrete metric to compare? Pick a model, try int8 weight-only quantization, and compare tokens per second against FP16.

Start Building Now with $10 Free API Credits

Sign up and use $10 in free API credits to test serverless endpoints with different quantization settings and batch sizes. The OpenAI-compatible API surface lets you swap models and compare FP16, int8, and int4 variants with the same call patterns. Instrument throughput, latency, and token error rates.

Try a small RAG flow:

- Ingest documents

- Generate quantized embeddings

- Run retrieval

- Call the inference endpoint for final generation while tracking cost per query

Ready to spin up a serverless instance and run your first quantized throughput test?

