Mar 14, 2025

31 Best LLM Platforms for Inferencing and Scaling AI.

Inference Research

Organizations embracing large language models (LLMs) quickly discover the challenges in deploying and scaling them for real-world applications. These hurdles can seem daunting, especially if you must maximize performance and minimize costs to meet production goals. Fortunately, LLM platforms provide a solution. This article will explore how to make LLM platforms work for you by offering valuable insights on deploying and scaling LLMs with high-speed, cost-efficient inference that maximizes performance and reliability. Additionally, understanding AI Inference vs Training is crucial to optimizing model performance and ensuring smooth deployment.

One of the best ways to harness the power of LLM platforms is with AI inference APIs. Inference APIs provide a straightforward way to start using LLMs in your applications without building and maintaining the underlying infrastructure yourself.

What is LLM Inference, and How Does It Work?

LLM inference is the stage in which a trained large language model applies the patterns it learned from past data to new, unseen input, making predictions or answering questions by generating text.

This stage is of paramount importance for realizing the usefulness of LLMs in practical situations because it transforms the complex understanding and relationships captured during training into actionable outputs. Inference with LLMs means passing large amounts of data through deep neural networks.

Optimizing LLM Inference for Real-Time Applications

LLM inference needs significant computational power, particularly for models such as GPT (Generative Pre-trained Transformer) or BERT (Bidirectional Encoder Representations from Transformers). The speed of LLM inference is important for applications that require responses in real time, including:

- Interactive chatbots

- Automated translation services

- Advanced analytics systems

Therefore, LLM inference is not simply about applying a model; it is about building these AI capabilities into the structure of digital products and services, improving both how well they work and the user experience.

Benefits of LLM Inference Optimization

Optimizing LLM inference can have far-reaching benefits beyond speed and cost. By making these models more efficient, businesses and developers can achieve:

- Improved User Experience: Optimized LLMs with faster response times and accurate outputs can greatly improve user satisfaction. This is especially beneficial in real-time applications such as chatbots, recommendation systems, and virtual assistants.

- Resource Management: Efficient LLM inference leads to better resource utilization, freeing computational power for other critical tasks and thereby improving overall system performance and reliability.

- Enhanced Accuracy: Optimization also involves adjusting the model for better results, reducing mistakes and improving prediction precision. This makes the output more dependable and useful in decision-making situations.

- Sustainability: Lower computational demands can mean lower energy use, which is in line with sustainability goals and can decrease the carbon footprint of AI operations.

- Flexibility in Deployment: Optimized LLMs can be deployed on different platforms, including edge devices, mobile phones, and cloud environments. This flexibility makes LLM applications usable in a wider range of situations.

How does LLM inference work?

LLM inference operates in two key phases: prefill and decode.

- Prefill Phase: The user’s input is tokenized into smaller units (words or subwords) and processed by the model. During this stage, the model builds intermediate states (keys and values) that help generate the first token of the response. Since the entire input is known upfront, computations can be parallelized, leading to efficient GPU utilization.

- Decode Phase: The model generates vector embeddings based on the input and, using prior context, predicts the next token iteratively until a stop condition is met. Unlike the prefill phase, this process is sequential—each token depends on the previous one—making it slower and less efficient for GPUs. Instead of compute performance, decoding speed is limited by memory access, classifying it as a memory-bound operation.

This distinction between parallel prefill and sequential decoding highlights why LLM inference can be both powerful and computationally demanding.
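The two phases can be sketched with a toy example. This is a minimal illustration, not how a production model is implemented: the `NEXT_TOKEN` lookup table, the whitespace `tokenize` helper, and the `prefill` and `decode` functions are all hypothetical stand-ins for a real tokenizer and neural network.

```python
# Toy stand-in for a trained model: maps the last token to the next token.
# A real LLM attends over the whole context; this table is purely illustrative.
NEXT_TOKEN = {"the": "cat", "cat": "sat", "sat": "<eos>"}


def tokenize(text):
    """Whitespace tokenizer (real systems use subword tokenizers)."""
    return text.lower().split()


def prefill(prompt_tokens):
    """Process the whole prompt in one pass and return the context state.

    All prompt tokens are known up front, so a real model can compute
    their keys and values in parallel. Here the state is just the token list.
    """
    return list(prompt_tokens)


def decode(state, max_new_tokens=10, stop_token="<eos>"):
    """Generate tokens one at a time; each step depends on the previous one."""
    generated = []
    for _ in range(max_new_tokens):
        next_token = NEXT_TOKEN.get(state[-1], stop_token)
        if next_token == stop_token:
            break  # stop condition met
        generated.append(next_token)
        state.append(next_token)  # the new token becomes part of the context
    return generated


state = prefill(tokenize("Look at the"))
print(decode(state))
```

With this table, the prompt "Look at the" yields the tokens `cat` and `sat` before the stop token ends generation. The loop in `decode` cannot be parallelized across steps, which mirrors why the decode phase is the slower of the two.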

Striking the Right Balance: Speed vs. Accuracy in LLM Inference

The inference process is about producing reliable outputs, and this is where the speed vs. accuracy trade-off comes into play. Higher-quality responses require the model to consider more possibilities, which makes inference slower and more computationally intensive.

Simplifying the computation yields faster responses, but it can compromise the quality of the output. Finding the best balance between speed and accuracy is a key consideration when designing LLM systems.

Unreliable Outputs in LLM Inference

It should also be noted that the process of inference is probabilistic. This means that its results are not always reliable. The model can sometimes give strange outcomes and surprising results since it produces responses based on the input and what it has already learned.
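One way to see this probabilistic behavior is temperature sampling, sketched below under simplifying assumptions. The three-word vocabulary and the `logits` scores are made up for illustration, and `softmax` and `sample_token` are hypothetical helpers rather than any particular library's API.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(vocab, logits, temperature=1.0, rng=random):
    """Draw one token at random according to the softmax probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


vocab = ["Paris", "London", "banana"]
logits = [4.0, 2.0, 0.5]  # hypothetical model scores for the next token

rng = random.Random(0)  # seeded only so repeated runs are comparable
samples = [sample_token(vocab, logits, temperature=1.5, rng=rng) for _ in range(10)]
print(samples)
print(softmax(logits, temperature=0.5))
print(softmax(logits, temperature=2.0))
```

At a low temperature almost all of the probability sits on the top-scoring token, so outputs are more repeatable. At a higher temperature the unlikely tokens are drawn more often, which is one source of the surprising results described above.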

Optimizing Inference: KV Caching

Key-value (KV) caching is a commonly used technique for improving the computational efficiency of LLM inference. In the decode phase, tokens are generated step by step, with each new token depending on all of the tokens before it.

The model represents this prior context as key and value tensors. By caching these tensors, the model avoids recalculating them every time a new token is generated, which substantially improves the efficiency and speed of inference.
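The saving can be illustrated by counting how many key/value projections are computed with and without a cache. This is a rough sketch: `compute_kv` is a hypothetical stand-in for the real tensor computation, and the generated tokens are placeholders.

```python
def compute_kv(token, counter):
    """Stand-in for projecting one token into key and value tensors."""
    counter["projections"] += 1
    return (f"K({token})", f"V({token})")


def decode_without_cache(prompt, steps):
    """Recompute keys/values for the entire context at every decode step."""
    counter = {"projections": 0}
    context = list(prompt)
    for step in range(steps):
        _ = [compute_kv(t, counter) for t in context]
        context.append(f"tok{step}")  # placeholder for the generated token
    return counter["projections"]


def decode_with_cache(prompt, steps):
    """Project each token once and reuse the cached keys/values afterwards."""
    counter = {"projections": 0}
    context = list(prompt)
    cache = []
    for step in range(steps):
        # Only tokens that are not yet in the cache need a projection.
        for token in context[len(cache):]:
            cache.append(compute_kv(token, counter))
        context.append(f"tok{step}")
    return counter["projections"]


prompt = ["why", "is", "the", "sky"]
print(decode_without_cache(prompt, steps=3))
print(decode_with_cache(prompt, steps=3))
```

For a 4-token prompt and 3 decode steps, the uncached version performs 4 + 5 + 6 = 15 projections, while the cached version performs 4 + 1 + 1 = 6. The gap grows quadratically with sequence length, at the cost of the memory needed to hold the cache.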

Optimizing Inference: Batching

Batching groups multiple user requests into one batch instead of processing them individually. The model parameters are then loaded once per batch rather than once per request, which reduces inference time overall. The drawback is that individual users may wait longer, because processing does not start until the batch has collected enough requests.

This drawback can be circumvented through in-flight batching (also called continuous batching). As soon as one request in the batch finishes, its slot is filled with a new request without waiting for the entire batch to complete. This keeps the whole process:

- Smooth

- Continuous

- GPU-optimized
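The difference between the two batching strategies can be simulated in a few lines. This is a simplified sketch: each request is reduced to the number of decode steps it needs, and the function names and example workload are assumptions made for illustration.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """Each batch runs until its longest request finishes."""
    total = 0
    for i in range(0, len(lengths), batch_size):
        total += max(lengths[i:i + batch_size])
    return total


def inflight_batching_steps(lengths, batch_size):
    """A finished request frees its slot for the next queued request."""
    queue = deque(lengths)
    active = []  # remaining decode steps for each occupied slot
    steps = 0
    while queue or active:
        # Fill any free slots before running the next decode step.
        while queue and len(active) < batch_size:
            active.append(queue.popleft())
        steps += 1
        # Advance every active request by one token and drop finished ones.
        active = [remaining - 1 for remaining in active if remaining - 1 > 0]
    return steps


# Decode steps needed by six hypothetical requests, served two at a time.
lengths = [2, 8, 3, 1, 4, 2]
print(static_batching_steps(lengths, batch_size=2))
print(inflight_batching_steps(lengths, batch_size=2))
```

On this workload, static batching takes 8 + 3 + 4 = 15 steps because short requests wait for the longest one in their batch. In-flight batching finishes in 10 steps, since both slots stay busy throughout.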

Optimizing Inference: Model Parallelization

Distributing an LLM over a cluster of GPUs makes it possible to serve many user requests efficiently. With model parallelization, the model is partitioned so that its memory and compute requirements are split across several devices or instances, which can significantly improve inference performance.
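One form of model parallelization is to split a layer's weight matrix across devices, as sketched below. This is a toy version in plain Python, assuming a row-wise split of a single matrix-vector product; the "devices" are simulated by a loop, and all function names are hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def split_rows(matrix, num_devices):
    """Partition the weight matrix's output rows into contiguous shards."""
    shard_size = (len(matrix) + num_devices - 1) // num_devices
    return [matrix[i:i + shard_size] for i in range(0, len(matrix), shard_size)]


def parallel_matvec(matrix, vector, num_devices):
    """Each shard computes its slice of the output; the slices are then gathered."""
    shards = split_rows(matrix, num_devices)
    # On real hardware, each shard would live on its own device and these
    # partial products would run concurrently.
    partial_outputs = [matvec(shard, vector) for shard in shards]
    return [value for part in partial_outputs for value in part]


weights = [[1, 2], [3, 4], [5, 6], [7, 8]]
activations = [1, 1]

print(matvec(weights, activations))
print(parallel_matvec(weights, activations, num_devices=2))
```

Both calls produce the same output vector, but in the partitioned version each device only needs to hold half of the weights. The gather step stands in for the inter-device communication that real systems pay for this split.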

Optimizing Inference: Model Optimization

Optimization techniques make models more efficient by reducing the memory and compute they need to produce good results. Model distillation and quantization are two common methods. In distillation, a larger LLM is used to teach a smaller model, which learns to mimic the behavior of the bigger one while requiring less memory and computational power.

Quantization shrinks the model by reducing the numerical precision of its weights and activations. A quantized model is substantially smaller while still yielding comparable results. Both techniques generally produce models with better inference performance.
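As an illustration of quantization, the sketch below applies symmetric 8-bit quantization to a handful of weights. This is one common scheme chosen for the example, not necessarily what any given platform uses, and the weight values and helper names are made up.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.12, -0.5, 0.33, 0.9, -1.27]  # hypothetical float weights

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(quantized)
print(restored)
print(max_error)
```

Each weight is stored in one byte instead of the four bytes of a 32-bit float, so this tensor shrinks by roughly 4x. The rounding error per weight is at most half of `scale`, which is why the results stay comparable.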

Related Reading

- Model Inference

- AI Learning Models

- MLOps Best Practices

- MLOps Architecture

- Machine Learning Best Practices

- AI Infrastructure Ecosystem

15 Essential LLM Platforms for Inferencing and Scaling AI

1. Inference: The OpenAI-Compatible Inference API

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLM models, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.
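An OpenAI-compatible API accepts requests in the same shape as OpenAI's chat completions endpoint, so a request can be sketched as follows. The base URL, API key, and model name below are placeholders, not real values for any provider; check the provider's documentation for the actual ones.

```python
import json
import urllib.request

BASE_URL = "https://api.example.com/v1"  # placeholder, not a real endpoint
API_KEY = "YOUR_API_KEY"                 # placeholder credential


def build_chat_request(prompt, model="example-model"):
    """Build (but do not send) a chat completions request."""
    payload = {
        "model": model,  # placeholder model name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("Summarize KV caching in one sentence.")
print(request.full_url)

# Sending is left commented out because the endpoint above is a placeholder:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)["choices"][0]["message"]["content"]
```

Because the request shape is shared, switching between OpenAI-compatible providers is typically a matter of changing the base URL, the key, and the model name.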

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.

2. Groq: A Leader in Speed and Performance

Groq is an AI infrastructure company that claims to have developed the world’s fastest AI inference technology. Its flagship product, the Language Processing Unit (LPU) Inference Engine, is a hardware and software platform designed for high-speed, energy-efficient AI processing.

Groq’s LPU-powered cloud service, GroqCloud, allows users to run popular open-source LLMs, such as Meta AI’s Llama 3 70B, up to 18x faster than other providers. Developers value Groq for its performance and seamless integration. The platform supports API access via Groq’s Python client SDK or OpenAI’s client SDK and integrates easily with tools like LangChain and LlamaIndex for building advanced LLM applications and chatbots.

Pricing

Groq’s cloud service charges are based on the number of tokens processed, ranging from $0.06 to $0.27 per million, depending on the model. A free tier is available, making it easy for users to get started.

3. Perplexity Labs: An API for Rapid Access to Open Source LLMs

Perplexity is rapidly emerging as an alternative to Google and Bing. Perplexity Labs offers both an AI-powered search engine and an inference engine.

pplx-api: Perplexity’s Inference Engine

In October 2023, Perplexity Labs launched pplx-api, an API for fast and efficient access to open-source LLMs. Currently in public beta, the API is available to Perplexity Pro subscribers, allowing a broad user base to test and provide feedback for ongoing improvements.

The API supports popular LLMs, including:

- Mistral 7B

- Llama 2 13B

- Code Llama 34B

- Llama 2 70B

It also features llama-3-sonar-small-32k-online and llama-3-sonar-large-32k-online, based on the FreshLLM paper. These Llama3-based models can return citations, a feature currently in closed beta.

Developer Integration & Cost Efficiency

Perplexity’s API is designed to be cost-effective for deployment and inference, offering significant savings. It is also client-compatible with OpenAI, allowing seamless integration for developers familiar with OpenAI’s ecosystem.

Pricing & Subscription Plans

Perplexity offers flexible pricing with a pay-as-you-go model:

- $0.20 to $1.00 per million tokens, depending on the model size

- Online models incur a flat $5 fee per 1,000 requests
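To make the pay-as-you-go figures concrete, the sketch below estimates a monthly bill. It assumes the per-request fee for online models is charged in addition to the per-token price, which the figures above do not state explicitly. The usage numbers and the `monthly_cost` helper are hypothetical.

```python
def monthly_cost(tokens, price_per_million, online_requests=0,
                 fee_per_1000_requests=5.00):
    """Estimate cost in USD from token usage plus optional online-model requests."""
    token_cost = tokens / 1_000_000 * price_per_million
    # Assumption: the flat request fee is added on top of the token charge.
    request_cost = online_requests / 1_000 * fee_per_1000_requests
    return round(token_cost + request_cost, 2)


# 3 million tokens at the top listed rate of $1.00 per million tokens.
print(monthly_cost(3_000_000, 1.00))

# The same usage on an online model with 2,000 requests.
print(monthly_cost(3_000_000, 1.00, online_requests=2_000))
```

Under these assumptions the first case comes to $3.00 and the second to $13.00, so for online models the request fee can outweigh the token charge.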

For those needing higher usage limits, the Pro plan costs $20/month or $200/year and includes:

- $5 monthly API credit

- Unlimited file uploads

- Dedicated support

This structured pricing makes Perplexity’s API accessible without upfront commitments, catering to casual users and developers deploying LLM-powered applications.

4. Fireworks AI: A Platform for Open Source AI Applications

Fireworks AI is a generative AI platform that enables developers to use cutting-edge open-source models in their applications.

The platform offers a broad range of language models, including:

- FireLLaVA-13B (a vision-language model)

- FireFunction V1 (for function calling)

- Mixtral MoE 8x7B and 8x22B (instruction-following models)

- Llama 3 70B (from Meta)

In addition to these language models, Fireworks AI supports image-generation models such as Stable Diffusion 3 and XL. All models are accessible via Fireworks AI’s serverless API, which is designed to provide industry-leading performance and throughput.

Pricing and Deployment

Fireworks AI features a pay-as-you-go pricing model, where charges are based on the number of tokens processed:

- Gemma 7B model: $0.20 per million tokens

- Mixtral 8x7B model: $0.50 per million tokens

Users can rent GPU instances (A100 or H100) hourly for on-demand deployments.

Developer-Friendly Integration

The API is OpenAI-compatible, making it easy for developers to integrate it with tools like LangChain and LlamaIndex.

Target Audience & Pricing Tiers

Fireworks AI caters to developers, businesses, and enterprises, offering different pricing tiers:

- Developer Tier: 600 requests/min rate limit and up to 100 deployed models

- Business & Enterprise Tiers: Custom rate limits, team collaboration features, and dedicated support

This structure ensures flexibility and scalability, making Fireworks AI suitable for many use cases.

5. Cloudflare: A Global Network for AI Inference

Cloudflare Workers AI is an inference platform that allows developers to run machine learning models on Cloudflare’s global network with minimal code. It offers a serverless, scalable, and GPU-accelerated solution, enabling developers to use pre-trained models for tasks like text generation, image recognition, and speech recognition without managing infrastructure or GPUs.

Supported Models

Cloudflare Workers AI provides a curated selection of open-source models across various AI domains, including:

- LLMs: llama-3-8b-instruct, mistral-8x7b-32k-instruct, gemma-7b-instruct

- Vision Models: vit-base-patch16-224, segformer-b5-finetuned-ade-512-pt

Pricing & Free Tier

Cloudflare Workers AI uses a pay-as-you-go pricing model, where costs are based on neurons processed (acting as token-like units across different models):

- Free tier: 10,000 neurons per day

- Beyond free usage: $0.011 per 1,000 neurons

- Model-Specific Pricing:

  - Llama 3 70B: $0.59 per million input tokens, $0.79 per million output tokens

  - Gemma 7B: $0.07 per million tokens (both input and output)
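The neuron-based pricing above can be worked through with a short calculation. This sketch assumes the free allowance is deducted from each day's usage before billing; the usage figures and the `daily_cost` helper are hypothetical.

```python
FREE_NEURONS_PER_DAY = 10_000
PRICE_PER_1000_NEURONS = 0.011  # USD, beyond the free tier


def daily_cost(neurons_used):
    """Cost in USD for one day's usage after the free allowance."""
    billable = max(0, neurons_used - FREE_NEURONS_PER_DAY)
    return round(billable / 1_000 * PRICE_PER_1000_NEURONS, 4)


print(daily_cost(8_000))    # within the free tier
print(daily_cost(250_000))  # 240,000 billable neurons
```

A day with 250,000 neurons leaves 240,000 billable, which comes to 240 × $0.011 = $2.64 under these assumptions.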

With its flexible pricing and diverse model offerings, Cloudflare Workers AI provides an affordable, high-performance solution for AI inference at scale.

6. Nvidia NIM: Access to LLMs Optimized By Nvidia

Nvidia NIM API provides access to a diverse range of pre-trained large language models (LLMs) and AI models, optimized and accelerated by Nvidia’s software stack.

Model Catalog & Capabilities

Through the Nvidia API Catalog, developers can explore and use over 40 models from Nvidia, Meta, Microsoft, Hugging Face, and others, including:

- Text-Generation Models: Llama 3 70B (Meta), Mixtral 8x22B (Mistral AI), Nemotron 3 8B (Nvidia)

- Vision Models: Stable Diffusion, Kosmos 2

The NIM API allows developers to integrate these models with minimal code. Hosted on Nvidia’s infrastructure, the API follows a standardized OpenAI-compatible format, ensuring seamless integration into existing workflows.

Deployment Options

- Hosted API: Developers can prototype and test applications for free.

- Production-Ready Deployment: Models can be deployed on-premises or in the cloud using Nvidia NIM containers.

Pricing & Free Tier

Nvidia offers both free and paid tiers:

- Free tier: 1,000 credits for initial testing

- Paid pricing (based on tokens processed and model size):

  - Gemma 7B: $0.07 per million tokens

  - Llama 3 70B: $0.79 per million output tokens

With scalable deployment options and flexible pricing, the Nvidia NIM API provides a powerful solution for integrating state-of-the-art AI models into applications.

7. Together AI: Affordable Inference for Open-Source Models

Together AI provides high-performance inference for 200+ open-source LLMs, offering sub-100ms latency, automated optimization, and horizontal scaling—all at a lower cost than proprietary solutions.

Key Features & Advantages

Together AI’s infrastructure handles:

- Token caching, model quantization, and load balancing, so developers can focus on prompt engineering and application logic rather than infrastructure management.

- Seamless model switching: Developers can run parallel inference jobs and swap between models like Llama 3, RedPajama, and Falcon with just a few lines of Python—without managing separate deployments or CUDA configurations.

Why Companies Choose Together AI

- Up to 11x more affordable than GPT-4 (when using Llama 3)

- 4x faster throughput than Amazon Bedrock

- 2x faster than Azure AI

Pricing & Access

Together AI offers a free tier and flexible pay-per-token or GPU usage pricing for its serverless options.

Combining cost efficiency, speed, and scalability, Together AI is a powerful choice for developers leveraging open-source LLMs.

8. OpenRouter: An Inference Marketplace

OpenRouter is an inference marketplace that provides access to over 300 models from top providers through a unified OpenAI-compatible API. This API enables seamless integration with models from:

- OpenAI

- Anthropic

- Amazon Bedrock, and others

Why Companies Use OpenRouter

Companies choose OpenRouter because it provides simple access to multiple AI models through a single API interface. The platform offers automatic failovers and competitive pricing while eliminating the need to integrate and manage various provider APIs separately.
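The idea behind automatic failover can be sketched as trying providers in order until one succeeds. This is a generic illustration, not OpenRouter's implementation: `ProviderError`, `complete_with_failover`, and the two example providers are hypothetical.

```python
class ProviderError(Exception):
    """Raised when a (hypothetical) provider call fails."""


def complete_with_failover(prompt, providers):
    """Try each (name, callable) pair in order and return the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")  # remember why this one failed
    raise ProviderError("all providers failed: " + "; ".join(errors))


def flaky_provider(prompt):
    """Simulates an upstream provider that is currently unavailable."""
    raise ProviderError("rate limited")


def healthy_provider(prompt):
    """Simulates a provider that answers normally."""
    return f"echo: {prompt}"


providers = [("primary", flaky_provider), ("backup", healthy_provider)]
print(complete_with_failover("hi", providers))
```

Here the primary provider fails, so the call is answered by the backup. A marketplace that does this on the server side spares each client from writing and maintaining this logic.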

Pricing

OpenRouter uses a pay-as-you-go model, with specific pricing listed for each model.

9. Hyperbolic: Affordable and Accessible AI Compute

Hyperbolic is an AI inference platform that provides affordable GPUs and accessible computing for AI researchers, developers, and startups, enabling them to build and scale AI projects efficiently.

Why Companies Choose Hyperbolic

- Cost Savings: It offers AI model inference for Base, Text, Image, and Audio generation at up to 80% lower cost than traditional providers without compromising quality.

- Affordable GPU Access: Provides the most competitive GPU pricing compared to major cloud providers like AWS.

- Decentralized GPU Network: Partners with data centers and individuals with idle GPUs, ensuring cost-effective and scalable computing.

Pricing & Plans

- Free Base Plan: Designed for startups and SMEs, offering high throughput and advanced features.

- Premium Plans: Tailored for academic research and enterprise use, providing enhanced capabilities.

With low-cost AI inference, flexible GPU access, and a decentralized approach, Hyperbolic is an ideal solution for those looking to scale AI affordably.

10. Replicate: Best for Prototyping and Experimentation

Best for: Rapid prototyping and experimenting with open-source or custom models.

Replicate is a cloud-based platform that simplifies machine learning model deployment and scaling. It packages and deploys models efficiently using Cog, an open-source tool. The platform supports a variety of models, including:

- LLMs: Llama 2

- Image Generation: Stable Diffusion

- Other Applications: Text generation, image processing, music generation, and more

Why Companies Use Replicate

- Ideal for quick experiments and MVP development

- Thousands of pre-built open-source models for diverse AI applications

- Simple setup: start running models with just one line of code

Pricing

Replicate follows a pay-per-inference pricing model, ensuring cost-effective scaling based on usage.

With its ease of use, flexibility, and vast model library, Replicate is a powerful choice for AI experimentation and rapid development.

11. SambaNova Cloud: Optimized for High-Throughput Applications

SambaNova Cloud delivers exceptional AI performance using custom-built Reconfigurable Dataflow Units (RDUs), achieving 200 tokens per second on the Llama 3.1 405B model—10x faster than traditional GPU-based solutions.

Key Features

- High Throughput: Processes complex models efficiently, eliminating bottlenecks for large-scale applications.

- Energy Efficiency: It consumes less power than conventional GPU infrastructures.

- Scalability: Scales AI workloads seamlessly without performance trade-offs or excessive costs.

Why Choose SambaNova Cloud?

SambaNova is built for high-throughput, low-latency AI inference and training. Its custom hardware, including the SN40L chip and dataflow architecture, enables it to handle models with very large parameter counts without the latency and throughput limitations of GPUs.

SambaNova Cloud offers a scalable, efficient, high-performance alternative to traditional AI infrastructure for businesses and researchers pushing AI boundaries.

12. DeepInfra: Hosting Large AI Models

Best for: Cloud-based hosting of large-scale AI models.

DeepInfra offers a robust platform for running large AI models on cloud infrastructure. It is easy to use for managing large datasets and models, and its cloud-centric approach is best suited to enterprises that need to host large models.

Why Companies Use DeepInfra

DeepInfra's inference API takes care of servers, GPUs, scaling, and monitoring, and accessing the API takes just a few lines of code. It supports most OpenAI APIs to help enterprises migrate and benefit from the cost savings. You can also run a dedicated instance of your public or private LLM on DeepInfra infrastructure.

Pricing

DeepInfra pricing is usage-based, billed by token or by execution time.

13. Anyscale: End-to-End AI Development and Deployment

Best for: End-to-end AI development, deployment, and high-scalability applications.

Anyscale is a cloud-agnostic platform designed to scale compute-intensive AI workloads, from model training and inference to batch processing. It is the company behind Ray, the open-source AI compute engine used by industry leaders like Uber, Spotify, and Airbnb to power their AI platforms.

Why Companies Choose Anyscale

- Enterprise-Grade Security & Governance: Provides admin, billing, and compliance controls for secure AI deployment.

- Cloud & Hardware Agnostic: Works with any cloud, accelerator, or software stack.

- Expert Support: Direct access to Ray, AI, and ML specialists for optimization and troubleshooting.

Pricing

Anyscale follows a usage-based pricing model, with enterprise plans available for large-scale AI operations.

With scalability, flexibility, and enterprise-ready features, Anyscale is a powerful solution for organizations building AI at scale.

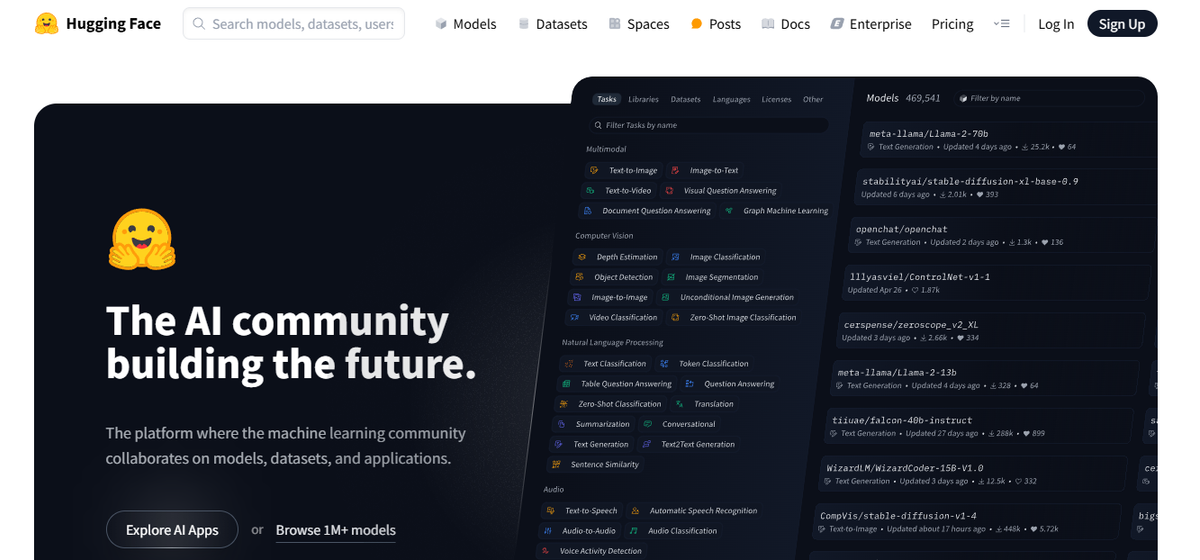

14. Hugging Face: The Go-To for NLP Projects

Best for: Beginners and professionals looking to start and scale Natural Language Processing (NLP) projects.

Hugging Face is a leading open-source AI community that enables developers to build, train, and share machine learning models and datasets. It is best known for its Transformers library, which simplifies working with state-of-the-art NLP models.

Why Companies Use Hugging Face

- Extensive Model Hub: Access 100,000+ pre-trained models, including BERT, GPT, and Stable Diffusion.

- Seamless Integration: Works with multiple programming languages and cloud platforms, including AWS and Google Cloud.

- Scalable APIs: Easily extend AI capabilities through Hugging Face’s inference endpoints and model-serving solutions.

Pricing

- Free for basic use.

- Enterprise plans are available for advanced features and scalability.

With its vast model library, collaborative tools, and flexible deployment options, Hugging Face is a top choice for developers and businesses working with NLP and AI applications.

15. Lamini: An Enterprise-Grade LLM Platform

Lamini is a full-stack LLM platform designed to streamline model selection, fine-tuning, and deployment for enterprise development teams. It simplifies adapting open-source LLMs with proprietary company data, ensuring high accuracy, efficient inference, and flexible deployment options.

Key Features

- Enhanced Model Accuracy: Supports memory tuning and compute optimizations, enabling users to fine-tune any open-source model with organization-specific data.

- Flexible Deployment: You can deploy models in private clouds (VPC), data centers, or through Lamini’s managed infrastructure.

- Optimized Inference: Efficiently runs LLM inference on GPUs, supporting user-owned hardware to ensure high-throughput, low-latency performance.

- End-to-End Model Lifecycle Support:

  - Compare and experiment with models in the Lamini Playground.

  - Tune models with proprietary data.

  - Securely deploy models across various environments.

  - Leverage REST APIs, a Python client, and a Web UI for seamless integration.

With powerful fine-tuning tools, deployment flexibility, and scalable inference capabilities, Lamini is an excellent solution for enterprises looking to customize and operationalize LLMs with their data.

16. Agenta: The Open-Source LLM App Platform for Developers

Agenta is an open-source platform for developers looking to create complex applications using large language models. This flexible solution allows users to sidestep the constraints of individual models, libraries, or frameworks and instead focus on coding their applications.

With Agenta, you can swiftly experiment and version prompts, parameters, and intricate strategies. This encompasses in-context learning with:

- Embedding

- Agents

- Custom business logic

Key Features

- Parameter Exploration: Specify your application’s parameters directly within your code and effortlessly experiment with them through an intuitive web platform.

- Performance Assessment: Evaluate your application’s efficacy on test sets using a variety of methodologies like exact match, AI Critic, human evaluation, and more.

- Testing Framework: Create test sets effortlessly using the user interface, whether by uploading CSVs or by connecting to your data through Agenta’s API.

- Collaborative Environment: Foster teamwork by sharing your application with collaborators and inviting their feedback and insights.

- Effortless Deployment: Launch your application as an API in a single click, streamlining the deployment process.
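Of the evaluation methods listed above, exact match is the simplest to illustrate. The sketch below is a generic version and not Agenta's implementation; the `exact_match_score` helper, its normalization rule (trimming whitespace and ignoring case), and the tiny test set are assumptions.

```python
def exact_match_score(predictions, references):
    """Fraction of predictions that equal their reference after normalization."""
    if not references or len(predictions) != len(references):
        raise ValueError("predictions and references must be non-empty and aligned")
    matches = sum(
        pred.strip().lower() == ref.strip().lower()
        for pred, ref in zip(predictions, references)
    )
    return matches / len(references)


# Hypothetical model outputs and the expected answers from a test set.
predictions = ["Paris", "4", "blue "]
references = ["paris", "5", "Blue"]

print(exact_match_score(predictions, references))
```

Two of the three answers match here, giving a score of about 0.67. Exact match is strict, which is why it is usually paired with softer methods such as an AI critic or human evaluation for free-form outputs.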

Agenta also supports collaboration with domain experts on prompt engineering and evaluation.

17. Phoenix: Uncover Insights into Your Model’s Performance

Phoenix provides observability into large language model performance, drift, and data quality without requiring intricate configuration. As a notebook-centric Python library, Phoenix uses embeddings to surface hidden issues within:

- LLM

- CV

- NLP

- Tabular models.

Elevate your models with the unmatched capabilities that Phoenix brings to the table.

Key Features

- Embedding Drift Investigation: When the Euclidean distance between embeddings is large, inspect the UMAP point clouds to pinpoint drift clusters.

- Drift and Performance Analysis via Clustering: Deconstruct your data into clusters of significant drift or subpar performance through HDBSCAN.

- UMAP-Powered Exploratory Data Analysis: Color your UMAP point clouds by your model’s attributes, drift, and performance to reveal problematic segments.
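The notion of embedding drift measured by Euclidean distance can be illustrated with a small calculation. This is a simplified sketch and not Phoenix's API: it assumes drift is summarized as the distance between the centroids of a reference set and a production set, and the two-dimensional embeddings are made up.

```python
import math


def centroid(vectors):
    """Average a list of equal-length embedding vectors."""
    dims = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dims)]


def embedding_drift(reference, production):
    """Euclidean distance between the centroids of two embedding sets."""
    return math.dist(centroid(reference), centroid(production))


# Hypothetical 2-D embeddings; real embeddings have hundreds of dimensions.
reference = [[0.0, 0.0], [2.0, 0.0]]
production = [[4.0, 4.0], [4.0, 4.0]]

print(embedding_drift(reference, production))
```

The centroids here are (1, 0) and (4, 4), so the distance is 5.0. A value that grows over time suggests production inputs are moving away from the data the model was validated on, which is the cue to look at the point clouds more closely.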

18. LiteLLM: Simplifying Calls to LLM APIs

LiteLLM is a lightweight package that simplifies calling various LLM APIs through the OpenAI format, including:

- Anthropic

- Huggingface

- Cohere

- Azure OpenAI and more

This package streamlines the process of calling API endpoints from providers like OpenAI, Azure, Cohere, and Anthropic. It translates inputs to the relevant provider’s completion and embedding endpoints, ensuring uniform output. You can always access text responses at ['choices'][0]['message']['content'].
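Because every provider's reply is normalized to the same shape, the extraction code never changes. The sketch below uses a hand-written dictionary in place of a live response, so no network call or API key is needed. `mock_response` and `extract_text` are illustrative names, not part of LiteLLM.

```python
def extract_text(response):
    """Read the generated text from an OpenAI-format response."""
    return response["choices"][0]["message"]["content"]


# Hand-written stand-in for a provider reply in the OpenAI format.
mock_response = {
    "choices": [
        {"message": {"role": "assistant", "content": "Hello from any provider!"}}
    ]
}

print(extract_text(mock_response))
```

The same `extract_text` helper would work whether the reply originated from Anthropic, Cohere, or Azure OpenAI, which is the practical benefit of a uniform output format.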

Key Features

- Streamlined LLM API Calling: Simplifies interaction with LLM APIs such as Anthropic, Cohere, and Azure OpenAI.

- Lightweight Package: A compact solution for calling OpenAI, Azure, Cohere, and Anthropic API endpoints.

- Input Translation: Manages translation of inputs to respective providers’ completion and embedding endpoints.

- Exception Mapping: Maps standard exceptions across providers to OpenAI exception types, so errors can be handled consistently.

19. BotPenguin: Build Custom AI Chatbots for Enhanced Interactions

BotPenguin specializes in building AI-powered chatbots that enhance customer interactions. Its focus is on natural language processing to provide accurate and efficient responses.

The LLM platform provides easy customization to fit different business requirements. BotPenguin does not require deep integration with specific language models like PaLM. It is designed to be user-friendly, offering a seamless chatbot-building experience.

Features

This enterprise LLM platform transforms how businesses interact with artificial intelligence:

- Integration with ChatGPT: Seamlessly integrates with ChatGPT for intelligent conversations.

- Custom Data Training: Train your chatbot on custom data for personalized responses.

- Pre-made Templates: Quick setup with ready-to-use templates.

- Custom Chatbot Solutions: Sell personalized solutions like Custom and Whitelabel chatbots.

- 80+ Native Integrations: Connect with over 80 platforms.

- Omnichannel Chatbot: Works on Messenger, Telegram, and more.

- Analytics: Gain insights with built-in analytics.

20. Google Cloud AI: Robust Tools for AI and Machine Learning

Google Cloud AI is a platform with many AI and machine learning tools. It’s designed to support businesses of all sizes, offering robust features and extensive support.

Features

Among LLM platforms, this solution sets industry benchmarks for LLM platform innovation.

- Pre-trained Models: Access Google’s pre-trained models for image recognition, speech-to-text, and more.

- AutoML: Custom model creation without needing deep ML knowledge.

- Scalability: Easily scale models to handle large datasets.

- Integration: Seamlessly integrates with other Google Cloud services.

- Security: High-level security features to protect your data.

21. Microsoft Azure AI: Comprehensive AI Tools for Businesses

Microsoft Azure AI provides a comprehensive suite of AI tools ideal for businesses looking to implement machine learning and AI at scale. It offers flexibility and integration with other Microsoft services.

Features

This solution stands out in the LLM platforms market for its enterprise capabilities.

- Cognitive Services: Pre-built APIs for vision, speech, language, and decision-making.

- Azure Machine Learning: End-to-end platform for building, training, and deploying models.

- Integration: Works seamlessly with existing Microsoft products.

- Custom AI: Allows for custom AI solutions tailored to specific business needs.

- Scalability: Easily scales from small projects to large enterprise solutions.

22. Amazon Web Services (AWS) AI: Reliable and Scalable AI Services

AWS AI offers a wide range of AI and machine learning services known for their reliability and scalability. It’s a popular choice for businesses of all sizes, and its services are among the strongest alternatives to dedicated LLM platforms on the market.

Features

AWS AI aims to lead the evolution of LLM platforms with transformative business solutions.

- SageMaker: Comprehensive service to build, train, and deploy machine learning models.

- Rekognition: Image and video analysis services.

- Polly: Text-to-speech service.

- Scalability: Easily handles projects of any size.

- Integration: Works well with other AWS services.

23. IBM Watson: Advanced Solutions for Complex Business Needs

IBM Watson is known for its advanced AI capabilities, making it a strong choice for businesses needing sophisticated machine learning solutions. It offers a wide range of tools and services.

Features

In the competitive landscape of LLM platforms, this solution delivers exceptional value.

- Watson Assistant: AI-powered chatbots and virtual assistants.

- Watson Studio: Integrated environment to build and train models.

- Natural Language Processing: Advanced text analytics and language understanding.

- Data Security: High-level security and compliance features.

- Custom Models: Create models tailored to your specific needs.

24. Cohere: Powerful and Flexible NLP Tools

Cohere is an emerging LLM platform that focuses on providing high-quality natural language processing tools. It’s gaining popularity for its powerful models and flexibility.

Features

Cohere's main features include:

- Custom Models: Create and train your models with ease.

- High-Performance APIs: Access to powerful NLP tools through easy-to-use APIs.

- Scalability: Designed to handle projects of any size.

- Integration: Works well with existing infrastructure.

- Ease of Use: User-friendly tools for quick deployment.

25. Glean AI: Advanced Analytics and Data Processing

Glean AI is designed to offer specialized AI solutions for businesses looking for advanced analytics and data processing capabilities. It’s a versatile platform that caters to various industries.

Features

Glean AI's main features include:

- Advanced Analytics: Tools for deep data analysis and insights.

- Custom AI Solutions: Tailored AI models to fit specific business needs.

- Scalability: Handles both small and large-scale projects.

- Data Integration: Seamlessly integrates with existing data systems.

- User Interface: Intuitive interface for easy use.

26. Klu AI: AI for Business Automation and Operations

Klu AI offers a range of AI tools focused on enhancing business operations through automation and machine learning. It’s known for its user-friendly approach and powerful capabilities.

Features

Klu AI's main features include:

- Automation Tools: AI-driven automation for various business processes.

- Custom ML Models: Build and deploy custom machine learning models.

- Scalability: Suitable for both small businesses and large enterprises.

- Integration: Easily integrates with existing systems and workflows.

- User-Friendly Interface: Designed for ease of use.

27. Meta: Leading Research and Development in LLMs

Meta, known for its transformative impact on social media and virtual reality technologies, has also established itself among the biggest LLM companies. It is driven by its commitment to open-source research and the development of powerful language models. Its flagship model, Llama 2, is a next-generation open-source LLM available for research and commercial purposes. Llama 2 is designed to support various applications, making it a versatile tool for AI researchers and developers.

Meta’s Open Approach to AI Accessibility and Collaboration

One key aspect of Meta’s impact is its dedication to making advanced AI technologies accessible to a broader audience. By offering Llama 2 for free, Meta encourages innovation and collaboration within the AI community. This open-source approach accelerates the development of AI solutions and fosters a collaborative environment where researchers and developers can build on Meta’s foundational work.

Meta’s Contributions to LLM Development

Meta’s leading advancements in LLMs include:

- Llama 2: This LLM supports various tasks, including conversational AI and other NLP work. It was released in pretrained and chat-tuned variants at 7B, 13B, and 70B parameters, which makes it a flexible base for developing AI solutions.

- Code Llama: Building upon the foundation of Llama 2, Code Llama is an innovative LLM specifically designed for code-related tasks. It excels in generating code through text prompts and stands out as a tool for developers. It enhances workflow efficiency and lowers the entry barriers for new developers, making it a valuable educational resource.

- Generative AI Functions: Meta has announced the integration of generative AI functions across all its apps and devices. This initiative underscores the company’s commitment to leveraging AI to enhance user experiences and streamline application processes.

Scientific Research and Open Collaboration

Meta’s employees conduct extensive research into foundational LLMs, contributing to the scientific community’s understanding of AI. The company’s open-source release of models like Llama 2 promotes cross-collaboration and innovation, enabling a wider range of developers to access and contribute to cutting-edge AI technologies. The company’s focus on open-source collaboration and its innovative AI solutions ensures that Meta remains a pivotal player in the LLM market, driving advancements that benefit both the tech industry and society.

28. WhyLabs: An ML Observability Platform for LLMs

WhyLabs is renowned for its versatile and robust machine learning (ML) observability platform. The company has carved a niche for itself by focusing on optimizing the performance and security of LLMs across various industries. Its approach to ML observability allows developers and researchers to monitor, evaluate, and improve their models effectively. This focus helps LLMs function optimally and securely, which is essential for their deployment in critical applications.

WhyLabs’ Contributions to LLM Development

The following are the significant LLM advancements by WhyLabs:

- ML Observability Platform: WhyLabs offers a comprehensive ML Observability platform designed to cater to various industries, including healthcare, logistics, and e-commerce. This platform allows users to optimize the performance of their models and datasets, ensuring faster and more efficient outcomes.

- Performance Monitoring and Insights: The platform provides tools for checking the quality of selected datasets, offering insights on improving LLMs, and dealing with common machine-learning issues. This is vital for maintaining the robustness and reliability of LLMs used in complex and high-stakes environments.

- Security Evaluation: WhyLabs places a significant emphasis on evaluating the security of large language models. This focus on security ensures that LLMs can be deployed safely in various applications, protecting the models and the data they process from potential threats.

- Support for LLM Developers and Researchers: Unlike other LLM companies, WhyLabs extends support to developers and researchers by allowing them to check the viability of their models for AI products. This support fosters innovation and helps determine the future direction of LLM technology.
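To make the monitoring idea above concrete, here is a minimal sketch of a data-quality check in plain Python. It is not WhyLabs' API; the function names, the metrics chosen, and the 25% tolerance are all assumptions made for illustration. It profiles a batch of prompts and flags metrics that have drifted from a baseline.

```python
from statistics import mean


def profile(texts: list) -> dict:
    """Summarize a batch of prompts; None or empty strings count as missing."""
    present = [t for t in texts if t]
    return {
        "count": len(texts),
        "missing_rate": 1 - len(present) / len(texts) if texts else 0.0,
        "mean_length": mean(len(t) for t in present) if present else 0.0,
    }


def drift_alerts(baseline: dict, current: dict, tolerance: float = 0.25) -> list:
    """Flag metrics whose relative change from the baseline exceeds tolerance."""
    alerts = []
    for key in ("missing_rate", "mean_length"):
        base, now = baseline[key], current[key]
        # With a zero baseline, fall back to the absolute change.
        change = abs(now - base) / base if base else abs(now)
        if change > tolerance:
            alerts.append(key)
    return alerts


# Hypothetical batches: a clean baseline and a degraded production sample.
baseline = profile(["How do I reset my password?", "Where is my invoice?"])
current = profile(["help", None, "", "refund"])
print(drift_alerts(baseline, current))
```

A production observability platform tracks many more signals than this, but the pattern of profiling a batch, comparing it to a baseline, and alerting on the difference is the same.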

WhyLabs has carved out its own space in the rapidly advancing LLM ecosystem. Its focus on the observability and security of LLMs addresses a need that grows as more models move into production.

29. Databricks: An Enterprise Solution for LLM Development

Databricks offers a versatile and comprehensive platform to support enterprises in building, deploying, and managing data-driven solutions at scale. Its approach integrates with cloud storage and security, making it a go-to solution for businesses looking to harness the power of LLMs. The company’s Lakehouse Platform, which merges data warehousing and data lakes, empowers data scientists and ML engineers to process, store, analyze, and even monetize datasets efficiently. This makes it easier to develop and deploy LLMs, accelerating innovation across various industries.

Databricks’ Contributions to LLM Development

Databricks’ primary contributions to the LLM space include:

- Databricks Lakehouse Platform: The Lakehouse Platform integrates cloud storage and security, offering a robust infrastructure that supports the end-to-end lifecycle of data-driven applications. This enables the deployment of LLMs at scale, providing the necessary tools and resources for advanced ML and data analytics.

- MLflow and Databricks Runtime for Machine Learning: Databricks provides specialized tools like MLflow, an open-source platform for managing the ML lifecycle, and Databricks Runtime for Machine Learning. These tools expand the platform's core functionality, allowing data scientists to track, reproduce, and manage machine learning experiments more efficiently.

- Dolly 2.0 Language Model: Databricks has developed Dolly 2.0, a language model trained on a high-quality human-generated dataset known as databricks-dolly-15k. It exemplifies how organizations can inexpensively and quickly train their own LLMs, making advanced language models more accessible.

Databricks’ comprehensive approach to managing and deploying LLMs underscores its importance in the AI and data science community. By providing robust tools and a unified platform, Databricks empowers businesses to unlock the full potential of their data and drive transformative growth.

30. MosaicML: Efficient Training of Large Language Models

MosaicML is known for its state-of-the-art AI training capabilities and innovative approach to developing and deploying large-scale AI models. The company has made significant strides in enhancing the efficiency and accessibility of neural networks, making it a key player in the AI landscape. MosaicML plays a crucial role in the LLM market by providing advanced tools and platforms that enable users to train and deploy large language models efficiently. Its focus on improving neural network efficiency and offering full-stack managed platforms has revolutionized how businesses and researchers approach AI model development. MosaicML’s contributions have made it easier for organizations to leverage cutting-edge AI technologies to drive innovation and operational excellence.

MosaicML’s Contributions to LLM Development

MosaicML’s additions to the LLM world include:

- MPT Models: MosaicML is best known for its family of MosaicML Pretrained Transformer (MPT) models. These generative language models can be fine-tuned for various NLP tasks, achieving high performance on several benchmarks, including the GLUE benchmark. The MPT-7B version has garnered over 3.3 million downloads, demonstrating its widespread adoption and effectiveness.

- Full-Stack Managed Platform: This platform allows users to efficiently develop and train their advanced models, cost-effectively utilizing their data. The platform’s capabilities enable organizations to create high-performing, domain-specific AI models that can transform their businesses.

- Scalability and Customization: MosaicML’s platform is built to be highly scalable, allowing users to train large AI models at scale with a single command. The platform supports deployment inside private clouds, ensuring users fully own their models, including the model weights.

MosaicML’s innovative approach to LLM development and its commitment to improving neural network efficiency have positioned it as a leader in the AI market. Providing powerful tools and platforms empowers businesses to harness the full potential of their data and drive transformative growth.

31. Vectara: A New Approach to Conversational AI

Vectara has established itself as a prominent player through its innovative approach to conversational search platforms. Leveraging its advanced natural language understanding (NLU) technology, Vectara has significantly impacted how users interact with and retrieve information from their data. As an LLM company, it focuses on enhancing the relevance and accuracy of search results through semantic and exact-match search capabilities. By providing a conversational interface akin to ChatGPT, Vectara enables users to have more intuitive and meaningful interactions with their data. This approach streamlines the information retrieval process and boosts users' efficiency and satisfaction.

Vectara’s Contributions to LLM Development

Here’s how Vectara adds to the LLM world:

- GenAI Conversational Search Platform: Vectara offers a GenAI Conversational Search platform that allows users to conduct searches and receive responses conversationally. It leverages advanced semantic and exact-match search technologies to provide highly relevant answers to the user’s input prompts.

- 100% Neural NLU Technology: The company employs a fully neural natural language understanding technology, significantly enhancing search results' semantic relevance. This technology ensures the responses are contextually accurate and meaningful, improving the user’s search experience.

- API-First Platform: Vectara’s complete neural pipeline is available as a service through an API-first platform. This feature allows developers to integrate semantic answer serving within their applications, making Vectara’s technology highly accessible and versatile for various use cases.
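The combination of semantic and exact-match search mentioned above can be sketched in a few lines. This is a toy illustration, not Vectara's implementation: the `hybrid_score` function, the `alpha` weight, and the hand-written 3-dimensional "embeddings" are all assumptions chosen to keep the example self-contained.

```python
import math


def cosine(a: list, b: list) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_score(query, query_vec, doc_text, doc_vec, alpha=0.7) -> float:
    """Blend semantic similarity with an exact-match signal."""
    exact = 1.0 if query.lower() in doc_text.lower() else 0.0
    return alpha * cosine(query_vec, doc_vec) + (1 - alpha) * exact


# Toy vectors written by hand; a real system would use an embedding model.
docs = [
    ("Reset your password from the account page.", [0.9, 0.1, 0.0]),
    ("Invoices are emailed monthly.", [0.1, 0.9, 0.1]),
]
query, query_vec = "reset your password", [0.8, 0.2, 0.0]

ranked = sorted(
    docs,
    key=lambda d: hybrid_score(query, query_vec, d[0], d[1]),
    reverse=True,
)
print(ranked[0][0])
```

Raising `alpha` favors semantic similarity, while lowering it favors literal phrase matches, which is useful for queries containing product names or error codes.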

Vectara’s focus on providing a conversational search experience powered by advanced LLMs showcases its commitment to innovation and user-centric solutions. Its innovative approach and dedication to improving search relevance and user interaction highlight its crucial role in AI.

What to Look for in an LLM Platform

Key Features to Consider in an LLM Development Company

Narrowing the focus on “key features” can help you better compare LLM platforms and find the right fit for your business. Below are crucial features for assessing LLM development companies and their platforms.

Natural Language Processing Capabilities

Natural language processing (NLP) allows LLMs to understand and generate human language. LLMs with robust NLP capabilities can read, analyze, and create contextually relevant text without human intervention. The higher the model’s NLP capabilities, the more accurate and useful its outputs will be for your business.

Integration

When choosing an LLM development company, look for easy integration with your existing systems. Assess the platform's API capabilities and documentation to understand how well the LLM can connect with your business applications. Good integration will help you get up and running quickly and improve the ROI of your LLM project.
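One practical way to gauge integration effort is to look at the request format a platform expects. Many providers expose an OpenAI-compatible chat completions endpoint, and the sketch below builds such a request with only the Python standard library. The base URL, model name, and API key are placeholders, not values from any real provider, so check your provider's documentation for the actual ones.

```python
import json
import urllib.request

# Hypothetical values for illustration; replace with your provider's.
BASE_URL = "https://api.example.com/v1"
MODEL = "example-model"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


request = build_chat_request("Summarize our refund policy.", api_key="YOUR_API_KEY")
print(request.full_url)
```

If a platform accepts this request shape, switching providers is often a matter of changing the base URL, model name, and key rather than rewriting application code.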

Scalability

As you assess different LLM platforms, consider their scalability. The platform needs to grow and adapt with your business. Choosing a solution that can accommodate increased usage over time will help you avoid having to switch to a different LLM later.

Customization

No two businesses are alike, and your use case for an LLM will be different from others. This is why it is vital to find an LLM development company that allows you to tailor its features to your specific requirements. Customization will help you get the most relevant results for your business.

Security

As with any technology that handles sensitive data, strong security features are essential when choosing an LLM platform. Look for models that offer robust data encryption, user authentication, and access controls to protect your business information.

User Interface

A user-friendly interface makes an LLM platform easier for your team to use. Even if the model produces relevant results, a complicated interface can make it difficult for your employees to access and use them. Look for LLM platforms with intuitive dashboards and clear documentation to help your team get started quickly.

Importance of Customer Support in an LLM Development Company

Reliable customer support is crucial when working with LLM platforms. It ensures you can resolve issues quickly and keeps your projects on track. Good support can save you time and frustration, whether through chat or phone.

Pricing Considerations and Why They Matter

Pricing plays a big role in choosing LLM platforms. Compare costs, including hidden fees, across different providers. The right pricing structure will align with your budget and needs, ensuring you get the best value from your investment.
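A quick calculation can make such a comparison concrete. The sketch below estimates monthly spend from token volume, per-million-token prices, and any fixed fee. The provider names, prices, and workload are invented for illustration and do not reflect any real provider's rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in USD (hypothetical numbers)."""
    name: str
    input_per_million: float
    output_per_million: float
    monthly_fee: float = 0.0  # fixed platform fee, if any


def monthly_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Estimate one month's spend for a given token volume."""
    usage = (
        (input_tokens / 1_000_000) * p.input_per_million
        + (output_tokens / 1_000_000) * p.output_per_million
    )
    return usage + p.monthly_fee


providers = [
    Pricing("provider_a", input_per_million=0.50, output_per_million=1.50),
    Pricing("provider_b", input_per_million=0.25, output_per_million=1.00,
            monthly_fee=200.0),
]

# Assumed workload: 400M input tokens and 100M output tokens per month.
for p in providers:
    print(f"{p.name}: ${monthly_cost(p, 400_000_000, 100_000_000):,.2f}")
```

In this made-up scenario the provider with the lower per-token price ends up more expensive once its fixed fee is included, which is why fees beyond the headline rate belong in the comparison.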

Start Building with $10 in Free API Credits Today!

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLMs, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.
