Mar 10, 2025

53 Essential MLOps Tools for Seamless AI Model Management

Inference Research

You may feel excited about the possibilities as your machine learning model moves from development to production, but you may also have lingering concerns about operational efficiency. Models need to be accurate, and they must also perform reliably over time, which means addressing issues as quickly as they arise. MLOps tools help by automating and streamlining the processes for deploying and monitoring machine learning models in production. This article introduces the MLOps tools you can use to build, deploy, and scale AI models with minimal friction. Understanding AI Inference vs Training is also crucial to optimizing model performance and ensuring smooth deployment.

Inference's AI inference APIs can help you achieve your objectives by ensuring models perform reliably in real-world applications.

What are MLOps Tools?

MLOps tools are software programs that help data scientists, machine learning engineers, and IT operations teams integrate machine learning components, streamline workflows, and collaborate more effectively. Ultimately, they support the central goals of MLOps:

- Automating the process of generating, deploying, and monitoring models.

- Merging machine learning, DevOps, and data engineering.

What Do MLOps Tools Do? Get Ready to Meet Your New Best Friends

MLOps tools can be categorized based on their primary functions within the MLOps pipeline. By understanding these categories, organizations can choose the tools that align with their unique needs.

- MLOps Platform: This comprehensive solution includes capabilities for every aspect of the MLOps pipeline, from data ingestion to model deployment and monitoring.

- Experiment Tracking and Model Management: Manages machine learning experiments and different model versions to ensure they can be shared and reproduced

- Data Versioning and Management: Tracks changes to data, maintains data lineage, and ensures data quality for machine learning projects

- Feature Stores: A centralized repository for storing, managing, and serving features (measurable characteristics within a dataset) to machine learning models

- Model Serving and Deployment: Streamlines the process for delivering machine learning models to production environments

- Model Monitoring and Observability: Tracks metrics and provides insights into model performance in production

- Workflow Orchestration: Automates and coordinates various steps in the MLOps pipeline

Choosing the Right MLOps Tool

Some tools may span multiple categories and offer more comprehensive solutions. This means the best tool for your organization depends on a project’s unique requirements, your team’s skills, and your existing technology infrastructure.

53 Essential MLOps Tools for Seamless AI Model Management

1. Inference – The New Frontier in MLOps Tools

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLMs, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.

2. Large Language Models Framework

With the introduction of GPT-4 and GPT-4o, the race has begun to produce large language models and realize the full potential of modern AI. LLMs require vector databases and integration frameworks for building intelligent AI applications.

3. Qdrant

Qdrant is an open-source vector similarity search engine and vector database that provides a production-ready service with a convenient API, allowing you to store, search, and manage vector embeddings.

Its most notable features include:

- Easy-to-use API: It provides an OpenAPI v3 specification, so developers can generate client libraries in multiple programming languages, along with a ready-made Python client.

- Fast and accurate: It uses a unique custom modification of the HNSW algorithm for Approximate Nearest Neighbor Search, providing state-of-the-art search speeds without compromising accuracy.

- Rich data types: Qdrant supports various data types and query conditions, including string matching, numerical ranges, geo-locations, and more.

- Distributed: It is cloud-native and can scale horizontally, allowing developers to use just the right amount of computational resources for any amount of data they need to serve.

- Efficient: Qdrant is developed entirely in Rust, a language known for its performance and resource efficiency.
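
To make the idea of storing and searching vector embeddings concrete, here is a minimal sketch in plain Python. It is not Qdrant's API and it does not use HNSW: it swaps in an exact brute-force cosine-similarity scan, and the `search` function, the `POINTS` collection, and the payload fields are hypothetical names chosen for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(points, query, limit=2, payload_filter=None):
    """Return the `limit` most similar points as (score, id) pairs.

    `payload_filter` is an optional predicate applied to each point's payload
    before scoring (a stand-in for query conditions such as string matching).
    """
    candidates = [
        p for p in points
        if payload_filter is None or payload_filter(p["payload"])
    ]
    scored = [(cosine_similarity(p["vector"], query), p["id"]) for p in candidates]
    scored.sort(reverse=True)  # highest similarity first
    return scored[:limit]


# Hypothetical toy collection: 3-dimensional embeddings, each with a payload.
POINTS = [
    {"id": 1, "vector": [1.0, 0.0, 0.0], "payload": {"city": "Berlin", "price": 10}},
    {"id": 2, "vector": [0.9, 0.1, 0.0], "payload": {"city": "London", "price": 25}},
    {"id": 3, "vector": [0.0, 1.0, 0.0], "payload": {"city": "Berlin", "price": 40}},
]

top = search(POINTS, [1.0, 0.0, 0.0], limit=2)
berlin_only = search(
    POINTS, [1.0, 0.0, 0.0], limit=2,
    payload_filter=lambda payload: payload["city"] == "Berlin",
)
```

An approximate index such as HNSW exists to avoid this full scan once a collection grows large; the filtering step shows why payload conditions and vector similarity are usually combined in one query.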

4. LangChain

LangChain is a versatile and robust framework for developing applications powered by language models. It offers several components to enable developers to build, deploy, and monitor context-aware and reasoning-based applications.

The framework consists of four main components:

- LangChain libraries: The Python and JavaScript libraries offer interfaces and integrations that allow you to develop context-aware reasoning applications.

- LangChain templates: A collection of easily deployable reference architectures covering various tasks, providing developers with pre-built solutions.

- LangServe: A library that enables developers to deploy LangChain chains as a REST API.

- LangSmith: A platform that allows you to debug, test, evaluate, and monitor chains built on any LLM framework.

Experiment Tracking and Model Metadata Management Tools

These tools allow you to manage model metadata and help with experiment tracking:

5. MLflow

MLflow is an open-source tool that helps you manage core parts of the machine learning lifecycle. It is generally used for experiment tracking, but you can also use it for reproducibility, deployment, and model registry. You can manage machine learning experiments and model metadata using:

- CLI

- Python

- R

- Java

- REST API

MLflow has four core functions:

- MLflow tracking: Storing and accessing code, data, configuration, and results.

- MLflow projects: Packaging data science source code for reproducibility.

- MLflow models: Deploying and managing machine learning models to various serving environments.

- MLflow model registry: A central model store that provides versioning, stage transitions, annotations, and model management.
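
Experiment tracking boils down to recording the parameters and metrics of each run so that runs can be compared later. The sketch below illustrates that concept with a hypothetical file-based `RunTracker`. It is not MLflow's API; the class, its method names, and the one-JSON-file-per-run layout are assumptions made for this example.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path


class RunTracker:
    """Hypothetical tracker that stores each run as one JSON file."""

    def __init__(self, root):
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def log_run(self, params, metrics):
        """Persist one run and return its generated identifier."""
        run = {
            "run_id": uuid.uuid4().hex,
            "timestamp": time.time(),
            "params": params,
            "metrics": metrics,
        }
        (self.root / f"{run['run_id']}.json").write_text(json.dumps(run))
        return run["run_id"]

    def best_run(self, metric, higher_is_better=True):
        """Load every stored run and return the one with the best metric."""
        runs = [json.loads(p.read_text()) for p in self.root.glob("*.json")]
        pick = max if higher_is_better else min
        return pick(runs, key=lambda r: r["metrics"][metric])


tracker = RunTracker(tempfile.mkdtemp())
tracker.log_run({"lr": 0.1, "depth": 3}, {"accuracy": 0.81})
tracker.log_run({"lr": 0.01, "depth": 5}, {"accuracy": 0.87})
best = tracker.best_run("accuracy")
```

A real tracking server adds what this sketch leaves out: artifact storage, a UI for comparing runs, and a registry for promoting a chosen model.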

6. Comet ML

Comet ML is a platform for tracking, comparing, explaining, and optimizing machine learning models and experiments. It can be used with any machine learning library, such as:

- Scikit-learn

- PyTorch

- TensorFlow

- Hugging Face

Comet ML is designed for individuals, teams, enterprises, and academics. It allows users to easily visualize and compare experiments and visualize samples from images, audio, text, and tabular data.

7. Weights & Biases

Weights & Biases is an ML platform for experiment tracking, data and model versioning, hyperparameter optimization, and model management. It also allows users to:

- Log artifacts: datasets, models, dependencies, pipelines, and results

- Visualize datasets: audio, visual, text, and tabular

Weights & Biases features a user-friendly central dashboard for machine learning experiments. Like Comet ML, it integrates with other machine learning libraries, such as:

- Fastai

- Keras

- PyTorch

- Hugging Face

- YOLOv5

- spaCy, and more

Note: You can also use TensorBoard, Pachyderm, DagsHub, and DVC Studio to track experiments and manage ML metadata.

Orchestration and Workflow Pipelines MLOps Tools

These tools help you create data science projects and manage machine learning workflows:

8. Prefect

Prefect is a modern data stack for monitoring, coordinating, and orchestrating workflows between and across applications. It is an open-source, lightweight tool built for end-to-end machine-learning pipelines.

You can use either Prefect Orion UI or Prefect Cloud for databases:

- Prefect Orion UI: An open-source, locally hosted orchestration engine and API server providing insights into the local Prefect Orion instance and workflows.

- Prefect Cloud: A hosted service that allows you to visualize flows, flow runs, and deployments while managing accounts, workspaces, and team collaboration.

9. Metaflow

Metaflow is a powerful, battle-hardened workflow management tool for data science and machine learning projects. It was built for data scientists so they can focus on creating models instead of worrying about MLOps engineering.

With Metaflow, you can design workflows, run them at scale, and deploy models in production. It automatically tracks and versions machine learning experiments and data. Additionally, you can visualize results in a notebook.

Metaflow works with multiple cloud providers (including AWS, GCP, and Azure) and various machine-learning Python packages (such as Scikit-learn and TensorFlow). The API is also available for the R language.

10. Kedro

Kedro is a Python-based workflow orchestration tool for creating reproducible, maintainable, modular data science projects. It integrates software engineering concepts into machine learning, such as modularity, separation of concerns, and versioning.

With Kedro, you can:

- Set up dependencies and configuration

- Set up data

- Create, visualize, and run pipelines

- Perform logging and experiment tracking

- Deploy on a single or distributed machine

- Create maintainable, modular, and reusable data science code

- Collaborate with teammates on projects

Data and Pipeline Versioning Tools

With these MLOps tools, you can manage tasks around data and pipeline versioning:

11. Pachyderm

Pachyderm automates data transformation with data versioning, lineage, and end-to-end pipelines on Kubernetes. You can integrate with any data (images, logs, video, CSVs), any language (Python, R, SQL, C/C++), and at any scale (petabytes of data, thousands of jobs).

The Community Edition is open-source and designed for small teams, while organizations and teams needing advanced features can opt for the Enterprise Edition.

As with Git, you version your data using a similar syntax. In Pachyderm, the top-level object is the Repository, and you can use Commit, Branches, File, History, and Provenance to track and version datasets.

12. Data Version Control (DVC)

Data Version Control (DVC) is an open-source and popular tool for machine learning projects. It works seamlessly with Git to provide code, data, model, metadata, and pipeline versioning.

DVC is more than just a data-tracking and versioning tool. You can use it for:

- Experiment tracking (model metrics, parameters, versioning).

- Creating, visualizing, and running machine learning pipelines

- Workflow for deployment and collaboration

- Reproducibility

- Data and model registry

- Continuous integration and deployment (CI/CD) for machine learning using CML

Note: DagsHub can also be used for data and pipeline versioning.
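
The Git-like data versioning described in this section can be sketched as a content-addressed cache: each snapshot of a data file is stored under the hash of its contents, and only the short hash would need to be committed alongside the code. This is a simplified illustration, not how Pachyderm or DVC are implemented; `snapshot`, `checkout`, and the file names are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def snapshot(data_file, cache_dir):
    """Copy the file into a content-addressed cache and return its hash."""
    content = Path(data_file).read_bytes()
    digest = hashlib.sha256(content).hexdigest()
    target = Path(cache_dir) / digest
    if not target.exists():  # identical content is stored only once
        target.write_bytes(content)
    return digest


def checkout(digest, cache_dir, data_file):
    """Restore the working copy of the file from a stored version."""
    Path(data_file).write_bytes((Path(cache_dir) / digest).read_bytes())


workdir = Path(tempfile.mkdtemp())
cache = workdir / "cache"
cache.mkdir()
data = workdir / "train.csv"

data.write_text("id,label\n1,cat\n")
v1 = snapshot(data, cache)          # first version of the dataset

data.write_text("id,label\n1,cat\n2,dog\n")
v2 = snapshot(data, cache)          # second version, after adding a row

checkout(v1, cache, data)           # roll the working copy back to v1
restored = data.read_text()
```

Because a version is identified by its content hash, re-snapshotting unchanged data costs no extra storage, and reproducing an old experiment only requires the hash recorded with that commit.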

13. LakeFS

LakeFS is an open-source scalable data version control tool that provides a Git-like version control interface for object storage. It enables users to manage their data lakes as they would their code. With LakeFS, users can version-control data at an exabyte scale, making it a highly scalable solution for managing large data lakes.

Additional capabilities:

- Perform Git operations like branch, commit, and merge over any storage service

- Faster development with zero-copy branching for frictionless experimentation and easy collaboration

- Use pre-commit and merge hooks for CI/CD workflows to ensure clean workflows

- A resilient platform allows for faster recovery from data issues with the revert capability

Feature Stores

Feature stores are centralized repositories for storing, versioning, managing, and serving features (processed data attributes used for training machine learning models) in production and for training purposes.

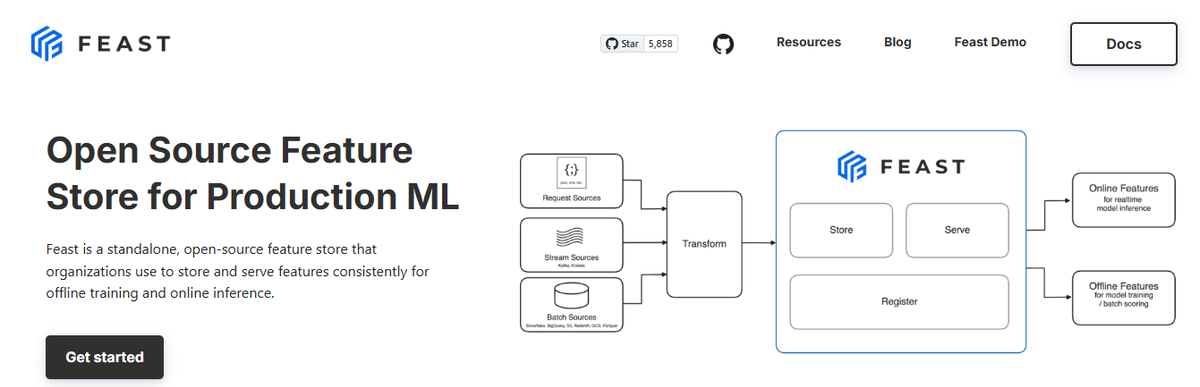

14. Feast

Feast is an open-source feature store that helps ML teams productionize real-time models and build a feature platform that fosters collaboration between engineers and data scientists.

Key features:

- Manage an offline store, a low-latency online store, and a feature server to ensure consistent feature availability for training and serving purposes

- Avoid data leakage by creating accurate point-in-time feature sets, freeing data scientists from dealing with error-prone dataset joining

- Decouple ML from data infrastructure by providing a single access layer
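
The point-in-time correctness mentioned above means that each training example may only see feature values that were already known at the moment of the labelled event. Here is a minimal sketch of such a join in plain Python. It is not Feast's API; the function name, the tuple layouts, and the sample data are assumptions for illustration.

```python
from bisect import bisect_right


def point_in_time_join(events, feature_rows):
    """Attach to each event the latest feature value known at event time.

    events:       (entity_id, event_timestamp, label) tuples
    feature_rows: (entity_id, timestamp, value) tuples
    """
    # Group feature rows by entity, ordered by timestamp.
    history = {}
    for entity, ts, value in sorted(feature_rows, key=lambda r: (r[0], r[1])):
        history.setdefault(entity, []).append((ts, value))

    joined = []
    for entity, event_ts, label in events:
        rows = history.get(entity, [])
        timestamps = [ts for ts, _ in rows]
        idx = bisect_right(timestamps, event_ts)  # count of rows with ts <= event_ts
        feature = rows[idx - 1][1] if idx else None  # None: nothing known yet
        joined.append((entity, event_ts, feature, label))
    return joined


# Hypothetical feature history and labelled events.
FEATURES = [("u1", 1, 10.0), ("u1", 5, 20.0), ("u1", 9, 30.0), ("u2", 4, 7.0)]
EVENTS = [("u1", 6, 1), ("u1", 0, 0), ("u2", 4, 1)]

training_set = point_in_time_join(EVENTS, FEATURES)
```

The event for `u1` at time 6 receives the value written at time 5, never the later value from time 9. Using that later value is exactly the kind of leakage a naive join on entity ID alone would introduce.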

15. Featureform

Featureform is a virtual feature store that enables data scientists to define, manage, and serve their ML model's features. It helps data science teams enhance collaboration, organize experimentation, facilitate deployment, increase reliability, and ensure compliance.

Key features:

- Enhance collaboration by sharing, reusing, and understanding features across the team

- Orchestrated data infrastructure to ensure features are ready for production

- Increase reliability by preventing modifications to features, labels, or training sets

- Ensure compliance with built-in role-based access control, audit logs, and dynamic serving rules

Model Testing

These MLOps tools help test model quality and ensure machine learning models' reliability, robustness, and accuracy.

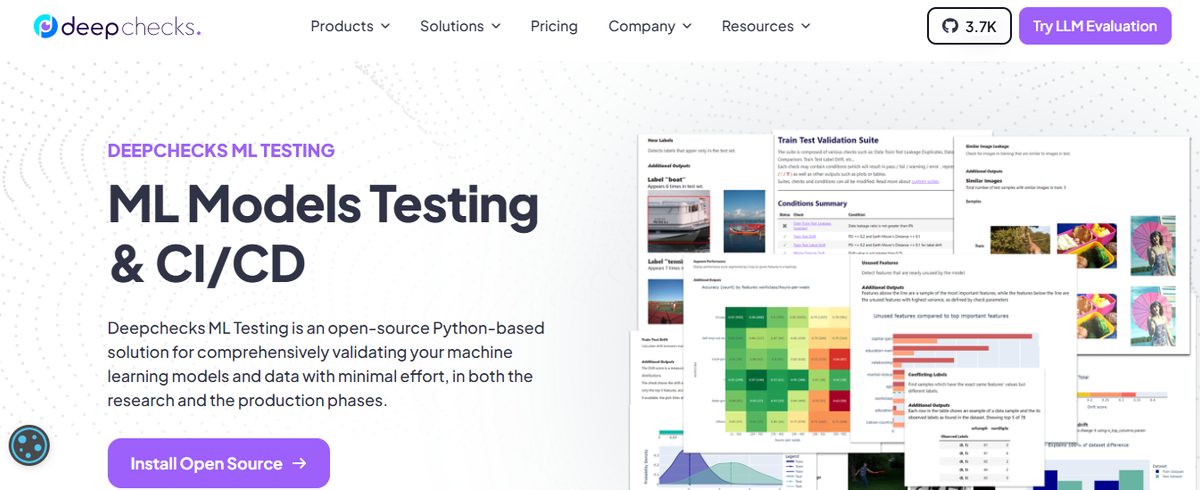

16. Deepchecks ML Models Testing

Deepchecks is an open-source solution that ensures thorough ML validation from research to production. It provides a holistic approach to validating data and models through three key components:

- Deepchecks Testing: You can build custom checks and suites for tabular, NLP, and computer vision validation.

- CI & Testing Management: Helps you collaborate with your team and manage test results effectively.

- Deepchecks Monitoring: Tracks and validates models in production.

17. TruEra

TruEra is an advanced platform that drives model quality and performance through automated testing, explainability, and root cause analysis.

Key features:

- Model Testing & Debugging: Improves model quality during development and production.

- Automated & Systematic Testing: Ensures performance, stability, and fairness.

- Model Evolution Tracking: Extracts insights for faster and more effective model development.

- Bias Identification: Pinpoints specific features contributing to model bias.

- Seamless Integration: It easily integrates into your current infrastructure and workflow.

Model Deployment and Serving Tools

These MLOps tools make model deployment simple, scalable, and efficient.

Kubeflow

Kubeflow simplifies machine learning model deployment on Kubernetes, making it portable and scalable. It supports data preparation, model training, optimization, prediction serving, and performance monitoring in production.

Key features:

- A centralized dashboard with an interactive UI

- ML pipelines for reproducibility and streamlined workflows

- Native support for JupyterLab, RStudio, and Visual Studio Code

- Hyperparameter tuning and neural architecture search

- Training jobs for TensorFlow, PyTorch, PaddlePaddle, MXNet, and XGBoost

- Job scheduling for managing workflows

- Multi-user isolation for administrators

- Works with all major cloud providers

18. BentoML

BentoML is a Python-first deployment tool for fast and scalable machine learning applications. It provides parallel inference, adaptive batching, and hardware acceleration for optimized performance.

Key features:

- Interactive centralized dashboard for organizing and monitoring ML models

- Supports all major ML frameworks, including Keras, ONNX, LightGBM, PyTorch, and Scikit-learn

- Enables model deployment, serving, and monitoring in a single solution
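
Batching is one reason a serving layer can raise throughput: requests that arrive close together are grouped so the model runs once per group. The sketch below shows a simpler fixed-limit micro-batching rule, not BentoML's adaptive algorithm or API. The function name, the `(arrival_ms, payload)` request format, and the limits are assumptions for illustration.

```python
def micro_batches(requests, max_batch_size, max_wait_ms):
    """Group (arrival_ms, payload) requests into batches.

    A batch is closed when it is full, or when the next request arrives more
    than `max_wait_ms` after the first request of the batch. Requests are
    assumed to be sorted by arrival time.
    """
    batches, current, opened_at = [], [], None
    for arrival_ms, payload in requests:
        batch_is_full = len(current) >= max_batch_size
        waited_too_long = bool(current) and arrival_ms - opened_at > max_wait_ms
        if batch_is_full or waited_too_long:
            batches.append(current)
            current = []
        if not current:
            opened_at = arrival_ms  # this request opens a new batch
        current.append(payload)
    if current:
        batches.append(current)
    return batches


# Hypothetical request stream: four requests in a burst, two more later.
REQUESTS = [(0, "a"), (2, "b"), (3, "c"), (4, "d"), (30, "e"), (31, "f")]
batches = micro_batches(REQUESTS, max_batch_size=3, max_wait_ms=10)
```

An adaptive scheme would tune the batch size and wait time from observed latency rather than fixing them up front, trading a small delay per request for fewer, larger model invocations.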

19. Hugging Face Inference Endpoints

Hugging Face Inference Endpoints is a cloud-based deployment service that allows users to deploy trained ML models for inference without managing infrastructure.

Key features:

- Cost-efficient pricing starts at $0.06 per CPU core/hr and $0.6 per GPU/hr

- Quick deployment within seconds

- Fully managed with autoscaling capabilities

- Integrated into the Hugging Face ecosystem

- Provides enterprise-level security

Model Monitoring in Production MLOps Tools

Whether your ML model is in development, validation, or deployment to production, these tools can help you monitor various factors.

20. Evidently

Evidently AI is an open-source Python library for monitoring ML models during development, validation, and production. It checks data and model quality, data drift, target drift, and regression and classification performance.

It has three main components:

- Tests (Batch Model Checks): Perform structured data and model quality checks

- Reports (Interactive Dashboards): Provide interactive visualizations for data drift, model performance, and target monitoring

- Monitors (Real-Time Monitoring): Track data and model metrics from deployed ML services
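
A data drift check compares the distribution of a feature in production against a reference sample, typically the training data. One widely used measure is the Population Stability Index (PSI). The sketch below is a generic standard-library implementation, not Evidently's API and not necessarily the statistic it applies by default; the function name, bin count, and sample data are assumptions.

```python
import math


def population_stability_index(reference, current, bins=4):
    """PSI between two numeric samples, using equal-width bins over the reference range."""
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample needs at least two distinct values")
    width = (hi - lo) / bins

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the reference range
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical samples: production values shifted upward by 50.
reference_sample = list(range(100))
shifted_sample = [v + 50 for v in range(100)]

no_drift = population_stability_index(reference_sample, reference_sample)
drift = population_stability_index(reference_sample, shifted_sample)
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, though a sensible threshold depends on the feature and the bin count.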

21. Fiddler

Fiddler AI is an ML model monitoring tool with an easy-to-use, straightforward UI. It lets you explain and debug predictions, analyze model behavior for the entire dataset, deploy machine learning models at scale, and monitor model performance.

Key features:

- Performance Monitoring: In-depth visualization of data drift, including when and how it’s happening

- Data Integrity: Prevent incorrect data from being used for model training

- Tracking Outliers: Detect both univariate and multivariate outliers

- Service Metrics: Gain insights into ML service operations

- Alerts: Set up notifications for models or groups of models to detect issues in production

Runtime Engines

The runtime engine is responsible for loading the model, preprocessing input data, running inference, and returning the results to the client application.

22. Ray

Ray is a versatile framework designed to scale AI and Python applications, making it easier for developers to manage and optimize their machine learning projects.

The platform consists of two main components:

- Core Distributed Runtime: Provides fundamental elements for constructing and expanding distributed applications:

  - Tasks: Stateless functions executed within the cluster

  - Actors: Stateful worker processes created within the cluster

  - Objects: Immutable values accessible by any component within the cluster

- AI Libraries: Includes scalable datasets for ML, distributed training, hyperparameter tuning, reinforcement learning, and scalable serving

23. Nuclio

Nuclio is a robust framework focused on data, I/O, and compute-intensive workloads. It is designed to be serverless, meaning you don't need to worry about managing servers. Nuclio is well-integrated with popular data science tools, such as Jupyter and Kubeflow Pipelines (KFP).

It also supports various data and streaming sources and can be executed over CPUs and GPUs.

Key features:

- Requires minimal CPU/GPU and I/O resources to perform real-time processing while maximizing parallelism

- Integrates with a wide range of data sources and ML frameworks

- Provides stateful functions with data-path acceleration

- Offers portability across all kinds of devices and cloud platforms, especially low-power devices

- Designed for enterprise use

End-to-End MLOps Platforms

If you’re looking for a comprehensive MLOps tool that can help during the entire process, here are some of the best:

24. AWS SageMaker

Amazon Web Services SageMaker is a one-stop solution for MLOps. It allows you to train and accelerate model development, track and version experiments, catalog ML artifacts, integrate CI/CD ML pipelines, and deploy, serve, and monitor models in production seamlessly.

Key features:

- A collaborative environment for data science teams

- Automate ML training workflows

- Deploy and manage models in production

- Track and maintain model versions

- CI/CD for automatic integration and deployment

- Continuous monitoring and retraining models to maintain quality

- Optimize cost and performance

25. DagsHub

DagsHub is a platform for the machine learning community to track and version data, models, experiments, ML pipelines, and code. It allows your team to build, review, and share machine learning projects.

It works like a GitHub for machine learning, providing various tools to optimize the end-to-end ML process.

Key features:

- Git and DVC repository for ML projects

- DagsHub logger and MLflow instance for experiment tracking

- Dataset annotation using a Label Studio instance

- Diffing Jupyter notebooks, code, datasets, and images

- Ability to comment on files, code lines, or datasets

- Create project reports similar to GitHub wikis

- ML pipeline visualization

- Reproducible results

- CI/CD for model training and deployment

- Data merging

- Integration with GitHub, Google Colab, DVC, Jenkins, external storage, webhooks, and New Relic

26. Iguazio MLOps Platform

The Iguazio MLOps Platform is an end-to-end MLOps solution that enables organizations to automate the machine learning pipeline from data collection and preparation to training, deployment, and monitoring in production. It provides an open (MLRun) and managed platform.

A key differentiator is its flexibility in deployment options. It allows AI applications to be deployed on any cloud, hybrid, or on-premises environment—particularly beneficial for industries like healthcare and finance, where data privacy is crucial.

Key features:

- Ingest data from any source and build reusable features using an integrated feature store

- Continuous training and evaluation of models at scale with automated tracking, data versioning, and CI/CD

- Deploy models to production in just a few clicks

- Constant monitoring of model performance and mitigation of model drift

- Simple dashboard for managing, governing, and monitoring models in real time

27. TrueFoundry

TrueFoundry is a cloud-native ML training and deployment PaaS built on Kubernetes. ML teams can train and deploy models efficiently without requiring deep Kubernetes expertise.

Key features:

- Jupyter Notebooks: Start Notebooks or VSCode Server on the cloud with auto shutdown

- Batch or inference jobs: Write Python scripts, log metrics, models, and artifacts, and trigger jobs manually or on a schedule

- Model deployment as APIs: Deploy model artifacts as APIs or wrap them in FastAPI, Flask, etc., with built-in autoscaling and canary deployments

- Easy debugging: View logs, metrics, and cost insights for all services

- Model registry: Tracks all models and versions, deployment status, and metadata

- LLM fine-tuning: Deploy open-source LLMs with one click and fine-tune on custom data

- ML software deployment: Deploy commonly used ML software such as Label Studio, as well as Helm charts

- Multi-environment management: Manage multiple Kubernetes clusters and move workloads seamlessly

28. Airflow

Airflow is a mature, open-source workflow orchestration platform for managing data pipelines and various tasks. It is written in Python and provides a user-friendly web UI and CLI for defining and managing workflows.

Key features:

- Generic workflow management: Not limited to ML; handles data processing, ETL, and model training workflows

- DAGs (Directed Acyclic Graphs): Defines workflows with tasks and dependencies

- Scalability: Supports running workflows across a cluster of machines

- Large community: Offers extensive documentation and resources

- Flexibility: Integrates with multiple data sources, databases, and cloud platforms
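
The DAG idea above can be sketched with Python's standard library: tasks declare what they depend on, and a topological sort yields an order in which every task runs after its dependencies. This is not Airflow's API. The `PIPELINE` mapping, the task names, and `run_pipeline` are hypothetical, and the sketch needs Python 3.9 or later for `graphlib`.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract": set(),
    "validate": {"extract"},
    "train": {"validate"},
    "evaluate": {"train"},
    "report": {"evaluate", "validate"},
}


def run_pipeline(dag, actions):
    """Run every task after its dependencies and return the execution order.

    TopologicalSorter raises graphlib.CycleError if the graph has a cycle,
    which is why the workflow must be acyclic.
    """
    order = []
    for task in TopologicalSorter(dag).static_order():
        actions[task]()
        order.append(task)
    return order


log = []
# Each action just records that it ran; a real task would do the actual work.
ACTIONS = {name: (lambda n=name: log.append(f"ran {n}")) for name in PIPELINE}
order = run_pipeline(PIPELINE, ACTIONS)
```

An orchestrator builds on this ordering with scheduling, retries, and distribution of independent tasks across workers.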

29. Anaconda

Anaconda is a comprehensive MLOps, data science, and AI platform that provides everything needed for efficient model development and deployment.

Features and capabilities:

- All-in-one solution: Includes features and integrations covering most aspects of data workflows

- Open-Source vs. Proprietary: Offers an open-source core with enterprise options

- Security: Provides robust package verification and a secure repository.

- Integrations: Works with various IDEs, cloud platforms, and data science tools

- Scalability: Suitable for individual users and large enterprises

30. Optuna

Optuna is an open-source automatic hyperparameter optimization framework designed for ML experts.

Key features:

- Uses state-of-the-art algorithms to prune unpromising trials quickly

- Automatically searches for optimal hyperparameters using Python-based implementations

- Supports parallelized hyperparameter searches across multiple threads without modifying the original code
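
Pruning means stopping a trial early once its intermediate results look worse than those of earlier trials. The sketch below combines random search with a simple median-based pruning rule using only the standard library. It is not Optuna's API or its exact pruning logic; `train_curve` is a made-up objective, and every name and constant here is an assumption for illustration.

```python
import random
import statistics


def train_curve(lr, steps):
    """Hypothetical objective: yields a loss after each training step.

    The loss is lowest for lr close to 0.1 and improves with more steps.
    """
    for step in range(1, steps + 1):
        yield (lr - 0.1) ** 2 + 1.0 / step


def search(n_trials=20, steps=5, seed=0):
    """Random search over lr; returns ((best_loss, best_lr), pruned_count)."""
    rng = random.Random(seed)
    history = {s: [] for s in range(steps)}  # losses seen at each step so far
    best = (float("inf"), None)
    pruned = 0
    for _ in range(n_trials):
        lr = rng.uniform(0.0, 1.0)
        for step, loss in enumerate(train_curve(lr, steps)):
            seen = history[step]
            # Median rule: stop if this trial is worse than the median of
            # earlier trials at the same step (once three values exist).
            if len(seen) >= 3 and loss > statistics.median(seen):
                pruned += 1
                break
            seen.append(loss)
        else:
            # The trial ran to completion; keep it if it is the best so far.
            best = min(best, (loss, lr))
    return best, pruned


best, pruned = search()
```

Every pruned trial saves the remaining training steps, which is where the speed-up comes from when each step is an expensive epoch rather than a one-line formula.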

31. SigOpt

SigOpt is a model-building and optimization tool that simplifies tracing runs, visualizing training, and scaling hyperparameter tuning across models and libraries.

Key features:

- Interactive Dashboard: Lets users assess various models based on statistical metrics (F1 score, accuracy, etc.)

- Version Control: Enables users to compare past models and tweak hyperparameters for better results

- Minimal Code: Runs and compares different models with just a few lines of code

32. ClearML: The Open-Source MLOps Tool for Collaboration and Experiment Management

ClearML is an open-source platform that prioritizes collaboration and user experience. It offers a comprehensive suite of tools for managing machine learning experiments, orchestrating MLOps workflows, and maintaining a model registry. ClearML allows you and your team to:

- Track experiments

- Reproduce results

- Share findings

It also helps manage and version datasets, ensuring reproducibility and data integrity. In addition, ClearML supports model deployment to various environments and provides monitoring capabilities to track model performance in production.

Key Features

- Navigate through experiments, data, and models easily, making MLOps accessible.

- Track changes to your dataset using data versioning, ensuring reproducibility and understanding the evolution of your data.

- Hyperparameter optimization for tuning model performance.

33. Databricks: The Unified Analytics Platform for MLOps

Databricks is a unified data analytics platform that provides a comprehensive suite of tools for:

- Data preparation

- Model development

- Training

- Deployment

- Monitoring

It's a good choice for teams that need a platform that strongly focuses on big data and Apache Spark. It simplifies the process of building and deploying machine learning models by providing a managed environment with integrated tools like MLflow for experiment tracking and model management. It also offers:

- Data versioning

- Collaboration

- Scalable data processing

Key Features

- Unified data analytics platform.

- Support for various machine learning frameworks.

- Integration with Apache Spark.

34. H2O.ai: Automated Machine Learning MLOps

H2O.ai is a leading platform for automated machine learning (AutoML). It offers a comprehensive suite of tools for building and deploying machine learning models with minimal human intervention. It's a good choice for teams that need a platform with a strong focus on AutoML.

The open-source platform includes tools like H2O, a distributed machine learning platform that supports various algorithms and can be used for multiple ML tasks. They also offer commercial products like Driverless AI, which automates many aspects of the machine learning workflow.

Key Features

- Automatic model selection and tuning.

- Easy-to-use interface.

- Support for various data sources.

35. Polyaxon: The Customizable Machine Learning Platform

Polyaxon is a flexible and customizable platform for managing the machine learning lifecycle. It's a good choice for teams that need a platform with a strong focus on flexibility and customization. Polyaxon allows you to automate and orchestrate machine learning workflows, making it easier to manage complex ML pipelines.

It also provides tools for experiment tracking, model versioning, and collaboration, enabling teams to work together more effectively on ML projects.

Key Features

- Customizable workflows.

- Support for various machine learning frameworks.

- Integration with popular tools and services.

36. Dataiku: The End-to-End MLOps Platform

Dataiku is an end-to-end platform that makes data science and machine learning more accessible and collaborative across organizations. It provides a visual and code-based environment where users can prepare data, build and train models, and deploy them into production.

Dataiku emphasizes collaboration and aims to bridge the gap between:

- Data scientists

- Data engineers

- Business analysts

It offers a range of features for data preparation, model development, and MLOps, making it a comprehensive solution for managing the entire machine learning lifecycle.

Key Features

- Visual and code-based interface for data science and machine learning.

- Collaboration features.

- An end-to-end platform for managing the whole machine learning lifecycle.

- Support for various data sources and machine learning frameworks.

- Tools for data preparation, model development, and deployment.

37. DigitalOcean Paperspace: Affordable MLOps Infrastructure

DigitalOcean is a cloud provider that offers a wide range of infrastructure and services suitable for MLOps, including those previously offered by Paperspace. They provide virtual machines (VMs) with various configurations, including GPUs, which are crucial for training and running machine learning models.

Paperspace, now integrated into DigitalOcean, enhances the platform's MLOps capabilities. It provides tools like Notebooks, Deployments, Workflows, and Machines, designed explicitly for developing, training, and deploying AI applications.

It is a good choice for teams that need a platform focused on affordability and ease of use.

Key Features

- A wide range of VMs with different configurations, including GPUs.

- Flexible pricing models based on usage.

- Paperspace tools like Notebooks, Deployments, Workflows, and Machines for MLOps.

- Control over your MLOps infrastructure and environment.

38. Google Cloud Vertex AI Platform: The MLOps Platform for Google Cloud Users

Google Cloud Vertex AI Platform is a unified platform for building and deploying machine learning models. It's a good choice for teams already using Google Cloud or needing a platform that can integrate with other Google Cloud services.

Key Features

- Unified platform for building and deploying models.

- Support for various machine learning frameworks.

- Integration with other Google Cloud services.

39. Meltano: Open-Source DataOps for Machine Learning

Meltano is a free, open-source DataOps platform that provides a command-line interface (CLI) for building data pipelines. It emphasizes a code-first approach, allowing developers to define and manage their data pipelines using YAML configuration files. This approach enables version control, reproducibility, and easier collaboration within data teams.

Meltano supports a wide range of data sources and destinations (extractors and loaders) through its integration with Singer, an open-source standard for data extraction. It also incorporates data transformation tools like dbt, enabling end-to-end data pipeline management within a unified framework.

Key Features

- Code-first approach using YAML for defining data pipelines.

- Integration with Singer for a wide range of data sources and destinations.

- Support for dbt for data transformation.

- Command-line interface for managing and running pipelines.

40. Airbyte: The Open-Source Data Integration Tool

Airbyte is a data integration platform that helps you move data from various sources, such as databases, APIs, and files, to different destinations, including data warehouses and data lakes. It's designed to be extensible and supports a wide range of connectors for sources and destinations.

Airbyte's core engine is implemented in Java. It uses a protocol to extract and load data in a serialized JSON format. The company also offers developer kits to make creating and customizing connectors easier.
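As a rough illustration of the serialized JSON exchange, the sketch below reads line-delimited messages and collects the payload of each record by stream. The message shape (`type`, `record.stream`, `record.data`, `record.emitted_at`) is a simplified assumption modeled on the Airbyte protocol's RECORD message, and the sample streams and values are made up. It is not Airbyte's own code.

```python
import json
from collections import defaultdict


def group_records_by_stream(lines):
    """Collect the `data` payload of every RECORD message, keyed by stream name."""
    grouped = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue
        message = json.loads(line)
        if message.get("type") != "RECORD":
            continue  # skip LOG, STATE, and any other message types
        record = message["record"]
        grouped[record["stream"]].append(record["data"])
    return dict(grouped)


# Hypothetical connector output, one JSON message per line.
sample_output = [
    '{"type": "LOG", "log": {"level": "INFO", "message": "starting sync"}}',
    '{"type": "RECORD", "record": {"stream": "orders", "data": {"id": 1, "total": 9.5}, "emitted_at": 1700000000000}}',
    '{"type": "RECORD", "record": {"stream": "customers", "data": {"id": 7}, "emitted_at": 1700000000001}}',
    '{"type": "RECORD", "record": {"stream": "orders", "data": {"id": 2, "total": 3.0}, "emitted_at": 1700000000002}}',
]
```

Calling `group_records_by_stream(sample_output)` returns a dictionary with one list of rows per stream, which is roughly the view a destination needs before writing to a warehouse table.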

Key Features

- Extensible platform with support for a wide range of connectors.

- Developer kits for creating and customizing connectors.

- Low-code connector builder.

41. Iterative DataChain: Data Management for AI Applications

DataChain is a Pythonic data-frame library designed to manage and process unstructured data for AI applications. It enables efficient organization, versioning, and transformation of unstructured data such as images, videos, text, and PDFs, making it ready for machine learning workflows.

DataChain allows for integrating AI models and API calls directly into the data processing pipeline, facilitating tasks such as data enrichment, transformation, and analysis. It supports multimodal data processing and provides functionalities for:

- Dataset persistence

- Versioning

- Efficient large-scale data handling

Key Features

- Pythonic data-frame library for AI

- Efficient handling of unstructured data at scale

- Integration of AI models and API calls in data pipelines

- Dataset persistence and versioning.

42. dbt: The SQL-Based Transformation Tool

dbt (data build tool) is a popular open-source tool that enables data analysts and engineers to transform data in their warehouses more effectively. It uses a code-based approach, allowing users to define data transformations using SQL SELECT statements, making it accessible to those familiar with SQL.

dbt promotes software engineering best practices that make complex data transformation workflows easier to manage and maintain, such as:

- Modularity

- Reusability

- Testing

It also integrates with popular data warehouses like Snowflake, BigQuery, and Redshift, allowing seamless data transformation within these platforms.
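The core idea, that a transformation is just a named SELECT statement the tool materializes for you, can be sketched with Python's built-in `sqlite3` module. This is not dbt's API or project layout: the `MODELS` dictionary, the `build_models` helper, and the `raw_orders` table are hypothetical stand-ins used only to show the pattern.

```python
import sqlite3

# Hypothetical "model": the analyst writes only the SELECT statement.
MODELS = {
    "customer_totals": """
        SELECT customer_id, SUM(amount) AS total_amount, COUNT(*) AS order_count
        FROM raw_orders
        GROUP BY customer_id
    """,
}


def build_models(conn, models):
    """Materialize each named SELECT statement as a table of the same name."""
    for name, select_sql in models.items():
        conn.execute(f"DROP TABLE IF EXISTS {name}")
        conn.execute(f"CREATE TABLE {name} AS {select_sql}")
    conn.commit()


def demo():
    """Load a few raw rows, build the model, and return the transformed rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_orders (customer_id INTEGER, amount REAL)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (?, ?)",
        [(1, 10.0), (1, 5.0), (2, 7.5)],
    )
    build_models(conn, MODELS)
    return conn.execute(
        "SELECT customer_id, total_amount, order_count "
        "FROM customer_totals ORDER BY customer_id"
    ).fetchall()
```

Because each model is plain SQL stored as code, it can be reviewed, versioned, and tested like any other source file.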

Key Features

- Code-based data transformation using SQL SELECT statements.

- Modularity and reusability for efficient code management.

- Integration with popular data warehouses and orchestration tools.

43. Snowflake: The Cloud Data Platform for MLOps

Snowflake is a cloud-based data platform that provides a unified environment for data warehousing, data lakes, data engineering, and application development. It's designed to handle diverse workloads related to machine learning and AI.

For MLOps, Snowflake offers a robust platform for:

- Managing and processing large datasets

- Building and training machine learning models

- Deploying AI applications

It provides tools for data preparation, feature engineering, model development, and deployment, all within a scalable and secure cloud environment.

Key Features

- Cloud-based data platform with a unified environment for various data workloads.

- Scalable and secure infrastructure for managing and processing large datasets.

- Supports diverse workloads, including data warehousing, data lakes, and data engineering.

- Offers tools for data preparation, feature engineering, and model development.

- Enables deployment and management of AI/ML models in a cloud environment.

44. Talend Data Preparation: A User-Friendly Data Preparation Tool

Talend Data Preparation is a tool for easily accessing, cleansing, and preparing data for use in machine learning models. It offers a user-friendly interface with visual tools and built-in transformations, making it accessible to technical and non-technical users.

Talend Data Preparation supports many data sources, including databases, files, and cloud applications. It also integrates with other Talend products, such as Talend Data Integration and Talend Big Data, enabling users to build end-to-end data pipelines for machine learning workflows.

Key Features

- User-friendly interface with visual tools.

- A wide range of built-in transformations for data cleansing and preparation.

- Support for various data sources, including:

- Databases

- Files

- Cloud applications

- Integration with other Talend products for building end-to-end data pipelines.

- Collaboration features for team-based data preparation.

45. TensorFlow: The Open-Source ML Framework

TensorFlow is a popular open-source machine learning framework developed by Google. It provides a comprehensive ecosystem of tools and libraries for building and deploying various machine learning models, including deep neural networks.

TensorFlow supports many functionalities, from data preprocessing and model development to:

- Model training

- Deployment

- Monitoring

It offers different levels of abstraction, allowing users to choose between low-level APIs for fine-grained control and high-level APIs like Keras for easier model building. TensorFlow also provides tools across various platforms for:

- Model optimization

- Distributed training

- Deployment

Key Features

- Supports a wide range of machine learning models, including deep neural networks.

- Offers various levels of abstraction with different APIs.

- Provides tools for model optimization and deployment.

- Large and active community support.

46. Determined AI: The Platform for Deep Learning

Determined AI is a platform specifically designed to streamline the development and training of deep learning models. It simplifies resource management, experiment tracking, and distributed training, allowing machine learning engineers to focus on model development and optimization rather than infrastructure management.

Determined AI supports popular deep learning frameworks like TensorFlow and PyTorch, providing a unified platform for:

- Model training

- Hyperparameter tuning

- Experiment tracking

It also offers features for efficient resource allocation and distributed training across multiple GPUs and machines, enabling faster model development and iteration.

Key Features

- Simplifies distributed training and hyperparameter tuning.

- Experiment tracking and visualization.

- Efficient resource management for model training.

- Works with PyTorch and TensorFlow.

47. LlamaIndex: The Data Framework for LLMs

LlamaIndex (formerly GPT Index) is a data framework that simplifies connecting your data to large language models (LLMs). It provides tools for indexing, structuring, and querying data sources, making it easier to build LLM-powered applications that can access and utilize your data effectively. LlamaIndex simplifies the retrieval and grounding of LLM responses by providing a modular and customizable framework. It supports various data sources, including APIs, PDFs, documents, and SQL databases, and offers different indexing strategies to optimize data retrieval for LLMs.
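The index, retrieve, and ground flow can be sketched in a few lines of plain Python. This toy example is not the LlamaIndex API: `KeywordIndex`, `build_prompt`, and the sample documents are hypothetical, and simple keyword overlap stands in for the embedding-based retrieval a real framework would typically use.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KeywordIndex:
    """Toy inverted index: maps each token to the documents containing it."""

    def __init__(self):
        self.documents = {}
        self.postings = defaultdict(set)

    def add(self, doc_id, text):
        self.documents[doc_id] = text
        for token in tokenize(text):
            self.postings[token].add(doc_id)

    def query(self, question, top_k=1):
        """Rank documents by how many question tokens they share."""
        scores = defaultdict(int)
        for token in tokenize(question):
            for doc_id in self.postings.get(token, ()):
                scores[doc_id] += 1
        ranked = sorted(scores, key=lambda d: (-scores[d], d))
        return ranked[:top_k]


def build_prompt(index, question, top_k=1):
    """Ground the question in retrieved text before it is sent to an LLM."""
    context = "\n".join(index.documents[d] for d in index.query(question, top_k))
    return f"Context:\n{context}\n\nQuestion: {question}"


index = KeywordIndex()
index.add("refunds", "Refunds are issued within 14 days of purchase.")
index.add("shipping", "Standard shipping takes 3 to 5 business days.")
```

Calling `build_prompt(index, "How long does shipping take?")` places the shipping policy text ahead of the question, which is the grounding step that retrieval-augmented generation relies on.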

Key Features

- Simplifies the process of connecting your data to LLMs.

- Provides tools for indexing, structuring, and querying data sources.

- Supports various data sources and indexing strategies.

- Facilitates the development of retrieval-augmented generation (RAG) applications.

48. Guardrails AI: Framework for Reliable LLM Applications

Guardrails AI is a framework that enhances the reliability and safety of LLM applications. It focuses on validating and correcting LLM output, ensuring that the generated content adheres to predefined rules and guidelines.

Guardrails AI uses a declarative approach, where developers define the expected structure and format of LLM outputs using a specification language called RAIL (Reliable AI Markup Language). This allows precise control over the generated content and helps prevent unexpected or undesirable outputs.
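The validate-and-correct idea can be illustrated without the library itself. In the sketch below, the expected output structure is declared as data (`SPEC`), and a helper checks a raw LLM response against it, substituting fallbacks where the response does not comply. The spec format, field names, and `validate_and_correct` function are assumptions made for this example. They are not RAIL syntax or the Guardrails AI API.

```python
import json

# Hypothetical output spec: field name -> (expected type, fallback value).
SPEC = {
    "sentiment": (str, "neutral"),
    "confidence": (float, 0.0),
}
ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}


def validate_and_correct(raw_output, spec=SPEC):
    """Return (clean_dict, corrections) for a raw LLM response string."""
    corrections = []
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        data = {}
        corrections.append("output was not valid JSON")
    if not isinstance(data, dict):
        data = {}
        corrections.append("output was not a JSON object")

    clean = {}
    for field, (expected_type, fallback) in spec.items():
        value = data.get(field)
        # Accept integers where a float is expected (but not booleans).
        if expected_type is float and isinstance(value, int) and not isinstance(value, bool):
            value = float(value)
        if isinstance(value, expected_type):
            clean[field] = value
        else:
            clean[field] = fallback
            corrections.append(f"{field}: replaced {value!r} with {fallback!r}")

    if clean["sentiment"] not in ALLOWED_SENTIMENTS:
        corrections.append(f"sentiment: {clean['sentiment']!r} is not allowed, using 'neutral'")
        clean["sentiment"] = "neutral"
    # Clamp confidence into the range [0, 1].
    clean["confidence"] = min(max(clean["confidence"], 0.0), 1.0)
    return clean, corrections
```

The returned list of corrections makes it possible to log how often a model drifts from the declared format, or to re-prompt the model instead of silently applying fallbacks.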

Key Features

- Uses RAIL for defining and enforcing output rules.

- Validates and corrects LLM outputs.

- Integrates with popular LLM frameworks and libraries.

- Helps ensure the reliability and safety of LLM applications.

49. Grafana and Prometheus: The Monitoring Tools for MLOps

Grafana is a popular open-source platform for data visualization and monitoring. It allows you to create interactive dashboards and visualizations from various data sources, including:

- Time-series databases

- Logs

- Application metrics

It is highly extensible and supports many data sources and plugins, making it a versatile tool for visualizing and analyzing data.

Prometheus is an open-source monitoring system widely used for collecting and storing time-series data. Prometheus scrapes metrics from instrumented applications and stores them in a time-series database.

Key Features

- Create interactive dashboards and visualizations.

- Supports a wide range of data sources and plugins.

- Highly extensible and customizable.
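When Prometheus scrapes an instrumented application, it reads metrics in a plain-text exposition format from an HTTP endpoint. The sketch below renders that text by hand so the format is visible. In practice an official client library would normally produce it, and the metric name and labels here are hypothetical.

```python
def escape_label_value(value):
    """Escape backslash, double quote, and newline as the text format requires."""
    return str(value).replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_metric(name, metric_type, help_text, samples):
    """Render one metric family; `samples` is a list of (labels_dict, value) pairs."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} {metric_type}"]
    for labels, value in samples:
        if labels:
            label_str = ",".join(
                f'{key}="{escape_label_value(val)}"' for key, val in sorted(labels.items())
            )
            lines.append(f"{name}{{{label_str}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical counter for a model-serving process.
exposition = render_metric(
    "model_predictions_total",
    "counter",
    "Predictions served.",
    [({"model": "churn", "version": "v2"}, 1027)],
)
```

A Grafana dashboard would then query Prometheus for a series such as `model_predictions_total` and plot how it changes over time.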

50. Datadog: The Monitoring and Observability Tool for MLOps

Datadog is a comprehensive monitoring and observability platform for cloud-scale applications and infrastructure. It provides a unified platform for monitoring metrics, traces, and logs from various sources, including:

- Servers

- Databases

- Applications

- Cloud services

In the context of MLOps, Datadog allows for deep insights into model performance by tracking key metrics such as:

- Accuracy

- Precision

- Recall

- F1-score

It can also monitor the health of the underlying infrastructure, including:

- GPU utilization

- Memory usage

- Network performance

This comprehensive monitoring helps identify bottlenecks, optimize resource allocation, and ensure the smooth operation of AI/ML workloads.
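The model-quality metrics listed above are simple to compute before they are sent to any monitoring platform. Here is a minimal sketch for binary classification using only the standard library. The function name and the sample labels are illustrative, and the step of submitting the values to Datadog is intentionally left out.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical ground-truth labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

Tracking these values per model version over time is what makes a drop in quality visible on a dashboard or in an alert.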

Key Features

- Unified platform for monitoring metrics, traces, and logs.

- Real-time dashboards and visualizations.

- Alerting and anomaly detection.

- Integration with popular MLOps tools and frameworks such as LangChain, Kubeflow, etc.

- AI-powered insights and automation, including automated anomaly detection and predictive analytics for resource optimization.

51. Dynatrace: The AI-Powered Observability Tool for MLOps

Dynatrace is an AI-powered observability platform that comprehensively monitors and analyzes:

- Applications

- Infrastructure

- User experience

In the context of MLOps, Dynatrace enables organizations to gain deep insights into the following aspects of their AI/ML models:

- Performance

- Health

- Underlying infrastructure

It offers a unified view of metrics, traces, logs, and dependencies, making it easier to identify and resolve issues impacting model accuracy, performance, or availability.

Key Features

- AI-powered observability.

- Automated root cause analysis.

- Offers real-time dashboards and visualizations to track key MLOps metrics and identify trends.

52. Splunk: The Data Analysis Platform for MLOps

Splunk is a platform for analyzing data from machine learning pipelines. It can gather information from different sources, such as logs, metrics, and traces, giving you a complete picture of what's happening with your ML models and infrastructure.

It integrates with popular machine learning frameworks and tools, providing a central platform to analyze model behavior and detect problems like data drift, ensuring the stability and reliability of your AI/ML systems.

Key Features

- Advanced analytics and visualization

- Real-time monitoring and alerting

53. KServe: The MLOps Tool for Serving Machine Learning Models

KServe is a cloud-native platform for serving machine learning models on Kubernetes that aims to simplify the process of deploying and managing models in production. It provides a standardized and scalable way to deploy models, offering features like autoscaling, canary deployments, and model versioning.
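To show what a canary deployment means in practice, the sketch below sends a fixed percentage of requests to a new model revision and the rest to the stable one. This is only a conceptual illustration in plain Python: the hash-based routing, the function names, and the request IDs are assumptions for the example, not how KServe implements traffic splitting.

```python
import hashlib


def choose_revision(request_id, canary_percent):
    """Deterministically route a request to the 'canary' or 'stable' revision."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


def split_counts(request_ids, canary_percent):
    """Count how many requests each revision would receive."""
    counts = {"stable": 0, "canary": 0}
    for request_id in request_ids:
        counts[choose_revision(request_id, canary_percent)] += 1
    return counts


# Hypothetical request IDs; roughly 10% should land on the canary revision.
counts = split_counts([f"req-{i}" for i in range(10_000)], canary_percent=10)
```

If the canary's error rate and latency look healthy, the percentage is raised step by step until the new revision serves all traffic. If not, it is set back to zero.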

KServe is designed to be flexible and supports various machine learning frameworks, including:

- TensorFlow

- PyTorch

- XGBoost

Key Features

- Autoscaling, canary deployments, and model versioning for models served on Kubernetes.

- Support for multiple machine learning frameworks, including TensorFlow, PyTorch, and XGBoost.


Start building with $10 in Free API Credits Today!

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLMs, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.
