Mar 14, 2025

What is LLM Serving & Why It’s Essential for Scalable AI Deployment

Inference Research

Picture this: You’ve integrated a large language model into your application, and it's not meeting your expectations. The output is delayed, and your users are frustrated. Performance bottlenecks are ruining the seamless experience you hoped to provide. The problem likely stems from the deployment of the LLM. LLM serving, or how large language models are deployed and scaled, is critical in determining performance. This blog will explore the ins and outs of LLM serving and offer tips to help you efficiently deploy and scale your LLM with low latency and optimal cost. You can integrate LLMs into your applications and avoid performance bottlenecks to deliver a smooth user experience. Additionally, understanding AI Inference vs Training is crucial to optimizing model performance and ensuring smooth deployment.

One way to achieve your goals is by leveraging AI inference APIs. These tools can help you efficiently deploy and scale your LLM, reducing latency and cutting costs.

What is LLM Serving and Its Importance

LLM serving is the backbone of deploying large language models in real-world applications. These models have transformed AI by powering natural language processing, code generation, and conversational AI. Deploying them at scale presents significant engineering challenges.

LLM serving ensures efficient, scalable deployment by handling live inference requests in production environments. It involves key processes such as model deployment, API integration, scalability management, and performance monitoring. Effective LLM serving empowers organizations to deliver intelligent, humanlike interactions seamlessly by bridging the gap between computationally intensive models and real-world applications.

LLM Serving Metrics: Throughput and Latency

When deploying large language models, two critical performance metrics come into play: throughput and latency.

- Throughput measures how many tokens the inference server generates per second across multiple user requests. A higher throughput means the system can efficiently handle more users and process responses faster.

- Latency refers to the time taken to generate a complete response. In streaming mode, the more relevant measure is the time to first token (TTFT): how long it takes for the first token to appear after a request is sent. Lower latency results in a more responsive user experience.

Simply put, latency is what users feel—the wait time for a response. Throughput determines how many users the system can serve efficiently and how fast new words appear in streaming mode. Optimizing both ensures a smooth and scalable user experience.
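To make the two metrics concrete, here is a minimal sketch of how they could be computed from recorded timestamps. The `RequestTrace` type and its field names are hypothetical, chosen only for illustration; a real serving stack would expose these numbers through its own metrics.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RequestTrace:
    """Timestamps (in seconds) recorded for one streamed request."""
    sent_at: float            # when the client sent the request
    token_times: List[float]  # arrival time of each generated token


def time_to_first_token(trace: RequestTrace) -> float:
    """Latency until the first token appears (TTFT)."""
    return trace.token_times[0] - trace.sent_at


def end_to_end_latency(trace: RequestTrace) -> float:
    """Latency until the last token of the response arrives."""
    return trace.token_times[-1] - trace.sent_at


def throughput(traces: List[RequestTrace]) -> float:
    """Tokens generated per second across all requests in the window."""
    start = min(t.sent_at for t in traces)
    end = max(t.token_times[-1] for t in traces)
    total_tokens = sum(len(t.token_times) for t in traces)
    return total_tokens / (end - start)


# Two concurrent requests, four tokens each.
traces = [
    RequestTrace(sent_at=0.0, token_times=[0.5, 1.0, 1.5, 2.0]),
    RequestTrace(sent_at=0.0, token_times=[1.0, 2.0, 3.0, 4.0]),
]
print(time_to_first_token(traces[0]))  # 0.5
print(end_to_end_latency(traces[1]))   # 4.0
print(throughput(traces))              # 2.0 (8 tokens over 4 seconds)
```

Note that the second request feels slower to its user even though both requests contribute equally to system throughput.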

Solutions for Optimizing LLM Serving

Deploying large language models (LLMs) in a production environment is far from trivial. These models can range from a few gigabytes to hundreds of gigabytes, requiring GPUs with enough memory to run them without sacrificing accuracy or performance. While smaller models can run efficiently on personal hardware, enterprise-scale deployments, such as those on OpenShift, demand more robust solutions.

A deployed LLM is typically accessed by multiple applications and users simultaneously. Unlike with traditional scaling methods, simply adding more resources isn’t always feasible because of infrastructure constraints. Techniques like batching queries, caching, and buffering must be explicitly managed to optimize response times.

LLMs also need their loading parameters tuned for each model, making a one-size-fits-all model loader ineffective. Fortunately, various solutions exist to address these challenges, enabling efficient and scalable LLM serving in production environments.

What are the Key Fundamentals of LLM Serving?

The inference process of large language models involves two primary phases:

- Input encoding, or the prefill phase, involves taking a user’s input and processing it within the LLM architecture to establish an intermediate state for the model.

- On the other hand, output decoding involves the LLM generating the next token in a sequence until a specified stopping criterion is met.

Optimizing LLM Inference

The autoregressive nature of LLMs like GPT-4 can introduce significant latency during inference. For example, to generate even a simple ten-word sentence, the model must produce each token sequentially, one forward pass at a time.

The colossal size of contemporary LLMs, with their billions of parameters, requires substantial computational resources that can lead to memory bandwidth constraints. When these become a bottleneck, they can significantly hinder the inference process.
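A rough back-of-the-envelope calculation shows why memory bandwidth becomes the limit. The sketch below assumes that each decoding step has to stream all model weights from GPU memory once, and it ignores KV cache reads and compute time, so it is only an upper bound. The function name and the example numbers (14 GB of weights, 1,000 GB/s of bandwidth) are illustrative assumptions, not measurements.

```python
def max_decode_tokens_per_second(
    weight_gb: float,
    bandwidth_gb_per_s: float,
    batch_size: int = 1,
) -> float:
    """Upper bound on decode speed when every step streams all weights once.

    One decoding step reads the full set of weights and yields one token
    per sequence in the batch, so batching multiplies the bound.
    """
    steps_per_second = bandwidth_gb_per_s / weight_gb
    return steps_per_second * batch_size


# Hypothetical setup: a 7B-parameter model in 16-bit precision (about 14 GB)
# on an accelerator with 1,000 GB/s of memory bandwidth.
single = max_decode_tokens_per_second(14, 1000)
batched = max_decode_tokens_per_second(14, 1000, batch_size=8)
print(round(single, 1))   # 71.4
print(round(batched, 1))  # 571.4
```

Under these assumptions, a single sequence cannot decode faster than roughly 71 tokens per second no matter how much compute the GPU has, which is why the batching techniques discussed later matter so much.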

KV Caching: Trading Memory for Compute

The KV cache helps address some of the computational inefficiencies associated with LLM inference. It stores the keys and values generated from previously processed tokens so that they can be reused when predicting the next token. This is critical because the attention mechanism in vanilla Transformers is quadratic: its computational cost grows with the square of the sequence length.

Trade-Offs of Using KV Cache: Speed Gains vs Memory Overhead

The KV cache first comes into play during the input encoding (prefill) phase, where intermediate states are computed for each token within the input sequence. During output decoding, the LLM refers to the stored keys and values from the input sequence within the KV cache to predict the next token.

By leveraging the KV cache, we avoid reprocessing every prior token’s information at each step when generating a new token. Instead, we compute the query, key, and value only for the most recent token and attend over the keys and values already stored in the cache. This reduces the attention computation at each decoding step from quadratic to linear in the sequence length. This efficiency comes at the cost of higher memory usage.
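The trade-off can be seen in a toy, single-head example. This is a minimal sketch in pure Python, not how a real engine stores tensors: the `project` function is a hypothetical stand-in for learned projection matrices, and the token vectors are made up. It shows that decoding with a cache gives the same attention output as recomputing everything, while doing far fewer key/value projections.

```python
import math
from typing import List

Vector = List[float]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention of one query over all keys/values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [
        sum(w * v[i] for w, v in zip(weights, values)) / total
        for i in range(dim)
    ]


def project(x: Vector, scale: float) -> Vector:
    """Hypothetical stand-in for a learned projection: just scales x."""
    return [scale * xi for xi in x]


class KVCache:
    """Keeps the keys and values of every token processed so far."""

    def __init__(self) -> None:
        self.keys: List[Vector] = []
        self.values: List[Vector] = []
        self.projections = 0  # number of K/V projections computed

    def step(self, x: Vector) -> Vector:
        """Project only the new token, then attend over the whole cache."""
        self.keys.append(project(x, 0.5))
        self.values.append(project(x, 2.0))
        self.projections += 1
        return attend(project(x, 1.0), self.keys, self.values)


def step_without_cache(tokens: List[Vector]) -> Vector:
    """Recompute K/V for every prior token, as a cache-free decoder would."""
    keys = [project(t, 0.5) for t in tokens]
    values = [project(t, 2.0) for t in tokens]
    return attend(project(tokens[-1], 1.0), keys, values)


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
cache = KVCache()
outputs_match = True
for i, tok in enumerate(tokens):
    cached = cache.step(tok)
    full = step_without_cache(tokens[: i + 1])
    outputs_match &= all(abs(a - b) < 1e-12 for a, b in zip(cached, full))

print(outputs_match)      # True
print(cache.projections)  # 4, versus 1 + 2 + 3 + 4 = 10 without a cache
print(len(cache.keys))    # 4 entries held in memory: the cost of the speed-up
```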

PagedAttention: Virtual Memory for LLMs

Another advancement made to the attention mechanism that improves LLM serving is PagedAttention. Initially developed by the team behind vLLM, PagedAttention has now been integrated into other popular serving frameworks such as TGI and TensorRT-LLM.

This promising method addresses memory wastage issues in existing KV cache setups (in particular, attention keys and values) by tackling several key problems:

- Internal Fragmentation: Over-allocating memory due to unknown output length.

- Reservation: Allocated memory is not used at the current step but will be required in the future.

- External Fragmentation: Memory inefficiency due to varying sequence lengths.

How PagedAttention Optimises Memory Efficiency in Multi-Sequence Decoding

PagedAttention mitigates these issues by storing logically contiguous keys and values in non-contiguous, fixed-size memory blocks. This approach significantly improves memory utilization, with claimed improvements of 3-5x. Specifically, less than 4% of the KV cache space is wasted, because internal fragmentation occurs only within the last block of each sequence. PagedAttention also enables memory sharing during decoding when multiple output sequences are generated from the same input sequence. This reduces the memory overhead of complex algorithms such as parallel sampling and beam search.
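The bookkeeping behind this idea resembles a page table in an operating system. The following is a simplified sketch of that bookkeeping only, not vLLM's implementation: the class names, the block size of 16 tokens, and the pool size are all assumptions made for illustration.

```python
from typing import List, Tuple

BLOCK_SIZE = 16  # tokens per KV block (hypothetical value)


class BlockAllocator:
    """Hands out fixed-size physical blocks from a shared pool."""

    def __init__(self, num_blocks: int) -> None:
        self.free: List[int] = list(range(num_blocks))

    def allocate(self) -> int:
        if not self.free:
            raise MemoryError("KV cache pool exhausted")
        return self.free.pop()

    def release(self, blocks: List[int]) -> None:
        self.free.extend(blocks)


class Sequence:
    """Maps a sequence's logical token positions to physical blocks."""

    def __init__(self, allocator: BlockAllocator) -> None:
        self.allocator = allocator
        self.block_table: List[int] = []
        self.num_tokens = 0

    def append_token(self) -> None:
        # Allocate a new block only when the last one is full.
        if self.num_tokens % BLOCK_SIZE == 0:
            self.block_table.append(self.allocator.allocate())
        self.num_tokens += 1

    def physical_slot(self, position: int) -> Tuple[int, int]:
        """Return (physical block id, offset) for a logical position."""
        return self.block_table[position // BLOCK_SIZE], position % BLOCK_SIZE

    def wasted_slots(self) -> int:
        """Unused slots; they can only be in the last block."""
        return len(self.block_table) * BLOCK_SIZE - self.num_tokens


allocator = BlockAllocator(num_blocks=8)
a, b = Sequence(allocator), Sequence(allocator)
for _ in range(20):  # two sequences growing at the same time
    a.append_token()
    b.append_token()

print(a.block_table)        # [7, 5]: blocks are not adjacent in memory
print(a.physical_slot(17))  # (5, 1)
print(a.wasted_slots())     # 12, always less than BLOCK_SIZE
```

Because blocks are allocated on demand, no memory is reserved up front for output tokens that may never be generated, and waste is bounded by one partially filled block per sequence.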

Continuous Batching: Improving Overall Throughput

The LLM inference process is memory-bound: it involves frequent reads and writes to memory without making full use of the GPU's compute power. This inefficiency in resource utilization can significantly hinder overall performance. One way to address this issue is to increase the number of sequences processed each time the model parameters are loaded, thereby improving overall compute utilization.

The naive method, more commonly referred to as static batching, sends a fixed-size batch of input sequences for inference. However, because of how LLMs are used and behave, not all sequences in a batch finish at the same time. This means that even when compute capacity is available to process additional sequences, it cannot be released for new requests while other sequences in the batch are still being processed.

Continuous Batching: Solving Tail Latency and Improving Throughput in LLM Serving

This challenge can be better illustrated with an example. Consider a batch of 32 sequences, where three-quarters of the sequences output only 100 tokens, while the remaining quarter output 500 or more tokens. Large amounts of resources then sit unutilized, because generating 100 tokens takes far less time than generating 500. Those resources cannot be released for further processing, as they are waiting for the quarter of longer sequences to complete.

A newer approach in the industry attempts to fix this inefficiency. Instead of waiting for the longer sequences to finish, continuous batching schedules a new request as soon as a prior request (sequence) completes. This ensures better utilization of available compute power.

Continuous batching allows for better overall throughput and latency, especially in online serving, where we typically do not want users to wait long for the LLM output. The ability to admit new requests without waiting for all previously batched requests to complete is crucial to minimizing the waiting time for each request.
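A small simulation makes the difference visible. This is a simplified sketch that counts decoding iterations only: it assumes every iteration takes the same time, ignores prefill, and uses a scaled-down version of the example above (batches of 4 instead of 32, with the same three-to-one mix of short and long outputs). The function names are hypothetical.

```python
from collections import deque
from typing import Deque, List


def static_batching_steps(lengths: List[int], batch_size: int) -> int:
    """Each batch runs until its longest sequence finishes."""
    steps = 0
    for start in range(0, len(lengths), batch_size):
        steps += max(lengths[start:start + batch_size])
    return steps


def continuous_batching_steps(lengths: List[int], batch_size: int) -> int:
    """A freed slot is refilled from the queue at the next iteration."""
    waiting: Deque[int] = deque(lengths)
    running: List[int] = []  # tokens still to generate per active sequence
    steps = 0
    while waiting or running:
        # Admit waiting requests into any free slots.
        while waiting and len(running) < batch_size:
            running.append(waiting.popleft())
        # One decoding iteration: every active sequence emits one token,
        # and sequences that just emitted their last token leave the batch.
        running = [remaining - 1 for remaining in running if remaining > 1]
        steps += 1
    return steps


# Output lengths in tokens: three short requests for every long one.
lengths = [100, 100, 100, 500] * 2

print(static_batching_steps(lengths, batch_size=4))      # 1000
print(continuous_batching_steps(lengths, batch_size=4))  # 700
```

In the static case, the three short sequences in each batch finish after 100 iterations and their slots then idle for 400 more. In the continuous case, those slots are handed to waiting requests immediately, so the same work completes in fewer iterations.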

Speculative Decoding: Accelerating Token Generation

Speculative decoding is an advanced technique designed to accelerate the inference process and reduce token latency in autoregressive models. This approach can be better understood by imagining a smaller (draft) model assisting your LLM. While the smaller model might not be as capable, it can swiftly propose candidate tokens, which the larger model then verifies. Even without a smaller model, you can use your prompt to generate candidate tokens for your LLM in scenarios with less creative outputs, such as summarization, by using n-grams, a statistical method for predicting the next token based on previous tokens.

Draft Model: Avoiding Idle Compute Resources

Since the inference process is often memory-bound, we can utilize a smaller draft model to predict several potential token choices in parallel with the larger model’s inference process to avoid leaving compute resources idle.

Because smaller models can perform the forward pass faster during inference than larger models, they can effectively provide token choices for the larger model to evaluate. To implement this technique, you need to identify a smaller version of the large model that uses the same tokenizer to ensure consistency in token representation and smooth integration of predictions.

Balancing Speed and Accuracy in Speculative Decoding with Draft Models

For example, to speed up inference for a model like meta-llama/Llama-2-7b-hf, you might use a smaller model such as JackFram/llama-68m for speculative sampling of tokens, as both share the same tokenizer. If the size difference between the draft and target models is too large, the target model rejects more of the draft's proposals and the speed-up shrinks. Therefore, if computational resources allow, consider using a larger draft model to narrow this gap. For instance, you could use facebook/opt-1.3b alongside facebook/opt-6.7b.
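The propose-then-verify loop can be sketched with toy models. The example below is a simplified greedy variant, assuming deterministic models represented as plain functions; the two model functions are hypothetical stand-ins, and a real engine would verify all drafted positions in a single batched forward pass of the target model rather than calling it position by position.

```python
from typing import Callable, List, Tuple

Model = Callable[[List[int]], int]  # maps a token context to the next token


def target_model(context: List[int]) -> int:
    """Hypothetical 'large' model: next token is (sum of context) mod 7."""
    return sum(context) % 7


def draft_model(context: List[int]) -> int:
    """Hypothetical 'small' model: wrong whenever len(context) % 4 == 0."""
    guess = sum(context) % 7
    return guess if len(context) % 4 else (guess + 1) % 7


def greedy_decode(prompt: List[int], model: Model, num_new: int) -> List[int]:
    tokens = list(prompt)
    for _ in range(num_new):
        tokens.append(model(tokens))
    return tokens


def speculative_decode(
    prompt: List[int], target: Model, draft: Model, num_new: int, k: int = 4
) -> Tuple[List[int], int]:
    """Return the generated tokens and the number of target verification passes."""
    tokens = list(prompt)
    target_passes = 0
    while len(tokens) - len(prompt) < num_new:
        # 1. The draft model proposes k tokens autoregressively (cheap).
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(tokens + proposal))
        # 2. The target model checks the proposal. We count this as one pass,
        #    since a real engine scores all k + 1 positions together.
        target_passes += 1
        accepted: List[int] = []
        for i in range(k + 1):
            expected = target(tokens + accepted)
            accepted.append(expected)  # always keep the target's own token
            if i == k or proposal[i] != expected:
                break  # first mismatch, or bonus token after k matches
        tokens.extend(accepted)
    return tokens[: len(prompt) + num_new], target_passes


prompt = [1, 2, 3]
baseline = greedy_decode(prompt, target_model, num_new=12)
output, passes = speculative_decode(prompt, target_model, draft_model, num_new=12)
print(output == baseline)  # True: same tokens as decoding with the target alone
print(passes)              # 4 verification passes instead of 12 sequential ones
```

In this greedy variant the output is identical to what the target model would produce on its own; a weaker draft model only lowers the number of tokens accepted per pass.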

N-Gram: Another Approach to Speculative Decoding

An alternative approach to speculative decoding with a smaller draft model is employing the n-gram method in context-dependent tasks. Tasks such as summarization, document QA, and code editing often exhibit high n-gram overlap, meaning the generated text by the LLM tends to match sequences of tokens in the original input or prompt. By leveraging these potential matches, we can assist the LLM in making token considerations. Specifically, we can derive n-grams from the prompt to generate potential token candidates, which the LLM can then evaluate and incorporate into the output. Like the draft model approach, this method requires no modification to the model itself, yet can achieve significant speed-ups in scenarios where outputs and inputs share similar token sequences.
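A minimal sketch of the candidate-generation step might look like the following. It assumes tokens are already available as a list (plain words here, for readability) and simply looks for the most recent earlier occurrence of the last `n` tokens; the function name and parameters are hypothetical, and the proposed tokens would still need to be verified by the LLM.

```python
from typing import List


def ngram_candidates(
    tokens: List[str], n: int = 2, num_candidates: int = 3
) -> List[str]:
    """Propose tokens that followed the latest earlier match of the last n tokens."""
    if len(tokens) <= n:
        return []
    suffix = tokens[-n:]
    # Scan from right to left, skipping the suffix itself.
    for start in range(len(tokens) - n - 1, -1, -1):
        if tokens[start:start + n] == suffix:
            follow = start + n
            return tokens[follow:follow + num_candidates]
    return []


# Prompt plus the tokens generated so far.
context = "the quick brown fox jumps over the lazy dog . the quick".split()
print(ngram_candidates(context))  # ['brown', 'fox', 'jumps']
```

If the LLM is in fact repeating a phrase from the prompt, all three candidates are accepted in one verification pass; if not, they are discarded and decoding continues as usual.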

MEDUSA: A Parameter-Efficient Approach

Speculative decoding methods like the draft model and n-grams involve no modification to the existing LLM. In contrast, MEDUSA adopts a different approach by adding additional “heads” to the LLM in a parameter-efficient manner. The creators of MEDUSA identified key issues with traditional speculative decoding methods, such as the challenge of finding a good draft model and the overall system complexity of deploying an additional model for generating token candidates.

MEDUSA: Leveraging Auxiliary Decoder Heads for Faster LLM Inference

In MEDUSA, the additional “heads” are trained decoder heads added to the model in use. This method is parameter-efficient because, in the initial approach (MEDUSA-1), only the new decoder heads are trained while the base model remains frozen. The latest version (MEDUSA-2) includes full-model training, which results in even greater speedup. According to the MEDUSA paper, the method can achieve a speedup of 2.2-2.8x across different prompts without compromising quality.

Multi-Step Token Prediction in MEDUSA: Reducing Forward Passes for Faster Generation

MEDUSA’s core idea involves using the newly trained decoder heads to predict tokens. Specifically, the k-th head predicts the token at position (t+k+1), whereas the original LLM decoder head predicts only position (t+1). Essentially, MEDUSA attempts to predict tokens for multiple timesteps beyond the next token position.

If token candidates are accepted based on custom acceptance criteria (extended from those used in the draft model approach), MEDUSA can skip several of the forward passes the LLM would otherwise need to generate those tokens, using the outputs of the newly added decoder heads instead.

Tree Attention in MEDUSA: Structuring Attention Flow for Efficient Decoding

In addition to the decoder heads, MEDUSA uses tree attention, a tree-structured attention mechanism in which only predecessor tokens are used for attention flow. This involves employing a custom tree-structured attention mask.
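To illustrate what "only predecessor tokens" means in practice, here is a minimal sketch of building such a mask from a candidate tree. The tree encoding (a list of parent indices) and the function name are assumptions for this example and are not taken from the MEDUSA codebase.

```python
from typing import List


def tree_attention_mask(parents: List[int]) -> List[List[bool]]:
    """Build mask[i][j], True when candidate i may attend to candidate j.

    parents[i] is the index of node i's parent, or -1 for the root.
    Each node attends only to itself and its ancestors in the tree.
    """
    size = len(parents)
    mask = [[False] * size for _ in range(size)]
    for node in range(size):
        current = node
        while current != -1:  # walk up to the root
            mask[node][current] = True
            current = parents[current]
    return mask


# Node 0 is the root. Nodes 1 and 2 are two candidates for the next
# position; node 3 continues candidate 1 and node 4 continues candidate 2.
parents = [-1, 0, 0, 1, 2]
mask = tree_attention_mask(parents)
print(mask[3])  # [True, True, False, True, False]
print(mask[4])  # [True, False, True, False, True]
```

Nodes 3 and 4 sit at the same depth but belong to different candidate continuations, so neither can see the other. This lets several candidate sequences be scored in one forward pass without interfering with each other.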

If you’re interested in more in-depth explanations of the various speculative decoding techniques, Julien Simon from HuggingFace provides excellent insights on how each method works.

Components of LLM Serving

Understanding LLM Serving and Its Distinct Components

Large language models are incredibly complex systems with multiple components. Serving LLMs requires an understanding of both inference and serving, which have distinct characteristics. Inference focuses on loading an LLM, optimizing its performance, and running the model to respond to user queries. Serving, on the other hand, focuses on the architecture behind responding to user requests and orchestrating inference calls to ensure smooth operations.

An LLM serving framework loads the model, manages memory, optimizes performance, and responds to user queries. While serving LLMs is similar to traditional machine learning models, their complexities and unique characteristics necessitate developing dedicated serving solutions that can address their distinct challenges.

LLM Serving Frameworks Have Distinct Components

While LLM serving frameworks are primarily responsible for orchestrating inference requests, they also come with components that load and run the model. The engine loads the model and manages memory and performance optimizations.

The server orchestrates user requests, ensuring smooth operations and responding to queries as quickly as possible. These two components are often integrated and work together to serve LLMs. For instance, vLLM is an inference engine providing an OpenAI-compatible HTTP server built with FastAPI.
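From the client's point of view, an OpenAI-compatible server means requests follow the familiar chat completions format. The sketch below only builds such a request using Python's standard library. The base URL `http://localhost:8000` and the model name `my-model` are placeholders you would replace with your own deployment's values, and actually sending the request requires a running server.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, model: str, prompt: str
) -> urllib.request.Request:
    """Build a POST request in the OpenAI chat completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder address and model name (assumptions for illustration).
request = build_chat_request("http://localhost:8000", "my-model", "Hello")
print(request.full_url)  # http://localhost:8000/v1/chat/completions

# Sending it requires a running server:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

Because the request shape is shared, the same client code can usually be pointed at a different OpenAI-compatible backend by changing only the base URL and model name.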

Key Features of LLM Serving Frameworks

LLM serving frameworks can be evaluated based on their capability to manage memory and optimize performance effectively.

The following features are critical when selecting a framework to deploy LLMs:

- Memory Management of KV Cache

- Memory Optimization

- Model-Specific Optimization

- Batch Support

- HTTP/gRPC API Support

- Request Queuing

Popular LLM Serving Frameworks

Several LLM serving frameworks are available today, each with unique features to optimize the inference of large language models. The following section reviews the most popular LLM serving solutions, highlighting their key benefits and capabilities.

vLLM

vLLM has rapidly emerged as the go-to open-source framework for serving large language models, cementing its popularity among developers. Boasting an impressive 21.9k stars on GitHub, vLLM is a top choice for those looking to deploy LLMs at scale.

Developed at UC Berkeley, vLLM is renowned for popularizing the innovative PagedAttention technique, which other frameworks like TGI and TensorRT-LLM have since adopted. As of now, vLLM supports up to 38 distinct transformer architectures. This versatility allows developers to deploy dozens or hundreds of different LLMs using a single, streamlined workflow.

Notable Features and Capabilities

- Efficient memory management with PagedAttention

- Continuous batching of inference requests

- Fast model execution with CUDA/HIP graph

- Support for a wide range of GPUs and accelerators

- Optimized CUDA kernels

- Distributed inference with tensor parallelism

- OpenAI-compatible API server

- Support for multimodal models

- Support for multi-nodes with Ray

- Production-ready inference server with OpenTelemetry tracing and Prometheus metrics

TensorRT-LLM

From NVIDIA, TensorRT-LLM stands out as a popular alternative to vLLM for serving large language models, offering seamless integration with the NVIDIA Triton Inference Server.

With a strong following of 7.4k stars on GitHub, TensorRT-LLM supports more than 40 transformer architectures, making it a robust choice for developers looking to leverage NVIDIA’s hardware for high-performance LLM serving.

Notable Features and Capabilities

- Optimized inference with compiled model via TensorRT

- Efficient memory management with PagedAttention

- Distributed inference with tensor and pipeline parallelism

- Improve token latency with speculative decoding

- Support for a wide range of multimodal models

- Support for multi-nodes

TGI

Text Generation Inference (TGI), created by the developers of transformers, is a serving framework that has gained popularity with 8.3k stars on GitHub. It provides support for approximately 26 different model architectures. With direct integration with the HuggingFace Hub, TGI offers a simple launcher to deploy LLMs on your infrastructure quickly.

Notably, if a specific model architecture is not directly supported, but is available in the transformers library, the deployment will fall back to the default transformers implementation. This fallback lacks capabilities in tensor parallelism and flash attention.

Notable Features and Capabilities

- Efficient memory management with PagedAttention

- Continuous batching of inference requests

- Support for a range of GPUs and accelerators

- Distributed inference with tensor parallelism

- Support for multimodal models

- OpenAI-compatible API server

- Production-ready inference server with OpenTelemetry tracing and Prometheus metrics

Top 15 LLM Serving Frameworks and Solutions

1. Inference.net

Inference.net offers OpenAI-compatible serverless inference APIs for top open-source LLM models, giving developers the highest performance, lowest cost, and most flexibility in the market.

In addition to standard inference, Inference.net provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications. Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.

2. WebLLM

WebLLM is a high-performance, in-browser LLM inference engine powered by WebGPU hardware acceleration. AI models like Llama 3 can run directly in the browser without server dependencies. WebLLM supports real-time AI interactions with features like streaming responses, structured JSON generation, and logit-level control.

It offers full compatibility with the OpenAI API, allowing developers to integrate AI easily into web applications while ensuring privacy and efficiency through its modular design. WebLLM is ideal for building:

- Chatbots

- Assistants and more

3. LM Studio

LM Studio is a powerful desktop application that enables users to run large language models (LLMs) completely offline on their local machine. It supports various hardware configurations and allows users to experiment with different models and configurations.

LM Studio offers a user-friendly chat interface and an OpenAI-compatible local server, making it versatile for developers who want to integrate LLMs into their applications or experiment with various models.

4. Ollama

Ollama is a powerful, open-source LLM serving engine that enables local inference, allowing users to run language models directly on their machines without relying on cloud services. This enhances privacy, reduces latency, and provides greater control over the models used. It is an ideal solution for developers and organizations that want to leverage AI while maintaining data security.

5. vLLM

vLLM (Virtual Large Language Model) is an advanced open-source library designed for high-performance inference and serving of Large Language Models (LLMs). It leverages innovative features such as PagedAttention for efficient memory management, continuous batching for optimal GPU utilization, and support for various quantization methods to enhance inference speed.

vLLM is compatible with an OpenAI-like API and integrates seamlessly with the Hugging Face ecosystem, making it a versatile tool for AI practitioners.

6. LightLLM

LightLLM is a Python-based framework for fast and efficient inference of Large Language Models (LLMs). Renowned for its lightweight design, easy scalability, and high-speed performance, LightLLM leverages the strengths of several well-regarded open-source implementations, such as:

- FasterTransformer

- TGI

- vLLM

- FlashAttention

The framework supports advanced features that optimize GPU utilization and memory management, making it ideal for development and production environments.

7. OpenLLM

OpenLLM is a versatile platform that simplifies the self-hosting of large language models (LLMs). It allows developers to run state-of-the-art open-source models as OpenAI-compatible APIs, like:

- Llama

- Qwen

- Mistral, and more

With built-in chat interfaces, optimized inference backends, and seamless integration with Docker, Kubernetes, and BentoCloud, OpenLLM streamlines deploying, managing, and interacting with custom and popular LLMs.

8. HuggingFace TGI

Hugging Face Text Generation Inference (TGI) is a robust and scalable solution designed to serve large language models efficiently. Optimized for inference workloads, TGI supports various open-source and custom models, providing fast and scalable text generation services.

It is particularly suited for high-performance environments where speed and resource efficiency are critical and integrates seamlessly with Hugging Face’s model hub.

9. GPT4ALL

GPT4All by Nomic is a series of models and an ecosystem for training and deploying models locally on your computer. The platform enables users to run large language models (LLMs) efficiently on desktops and laptops, emphasizing privacy by keeping data on the device. Inspired by OpenAI’s ChatGPT, the GPT4All desktop application offers a familiar interface for interaction.

Integrating Nomic’s embedding models allows users to easily pull information from local documents into their chats, providing a streamlined experience. GPT4All supports popular model architectures like LLaMa and Mistral and utilizes the efficient llama.cpp and Nomic's C backend, making it accessible for users across various skill levels.

10. llama.cpp

llama.cpp is an optimized, dependency-free C/C++ implementation designed for running large language models (LLMs) like Llama locally. As the default implementation for GGML-based models, it forms the backbone of many tools in the LLM ecosystem.

This library supports various bindings, including Python, enabling seamless interaction with different models. Engineered for high performance, llama.cpp runs efficiently across diverse hardware configurations, from Apple Silicon to x86 architectures. It also includes advanced features like multi-level integer quantization and custom CUDA kernels for NVIDIA GPUs, making it a powerful solution for local and cloud-based LLM deployments.

11. Triton Inference Server with TensorRT-LLM

NVIDIA’s Triton Inference Server is an enterprise-grade platform designed to streamline the deployment of large language models (LLMs) in production. It supports frameworks like TensorFlow, PyTorch, and ONNX, ensuring efficient model serving with high performance.

When paired with TensorRT-LLM, an open-source framework optimized for LLM inference, developers can fine-tune models for maximum efficiency. TensorRT-LLM accelerates inference using optimized kernels, paged attention, and efficient key-value (KV) caching, making it ideal for high-throughput applications.

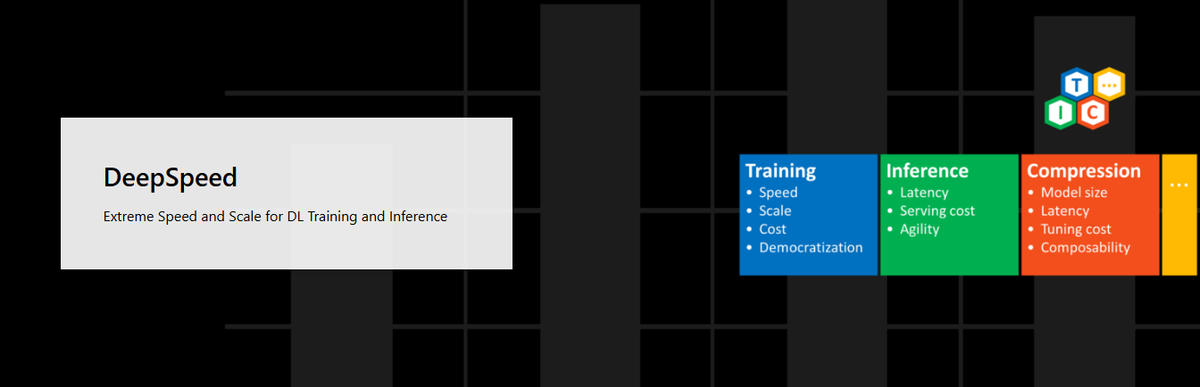

12. DeepSpeed MII

The DeepSpeed Model Implementation for Inference (MII) aims to make low-latency, low-cost inference of powerful models feasible and easily accessible.

13. RayLLM with RayServe

Built on Ray Serve, RayLLM benefits from a distributed computing framework that provides specialized libraries for data streaming, training, fine-tuning, hyperparameter tuning, and serving, simplifying the development and deployment of large-scale AI models.

RayLLM supports the deployment of multi-model endpoints and provides the server capabilities itself. Engine capabilities such as continuous batching, paged attention, and other optimization techniques are supplied through its TGI and vLLM integrations.

14. TensorRT-LLM

TensorRT-LLM is an open-source library designed to accelerate and optimize inference on the latest LLMs using NVIDIA Tensor Core GPUs. It integrates TensorRT’s Deep Learning Compiler, optimized FasterTransformer kernels, pre- and post-processing, and multi-GPU/multi-node communication into a streamlined Python API for defining, optimizing, and executing LLMs in production.

TensorRT-LLM leverages tensor parallelism to enable efficient inference across multiple GPUs and servers with minimal developer intervention. It includes highly optimized, ready-to-run versions of leading LLMs like:

- Meta Llama 2

- OpenAI GPT-2/GPT-3

- Falcon

- Mosaic MPT

The library also offers a C++ runtime for executing LLM engines, featuring token sampling, KV cache management, and in-flight batching (continuous batching). This technique reduces queue wait times, eliminates the need for padding requests, and maximizes GPU utilization.

TensorRT-LLM simplifies LLM deployment, providing peak performance and flexibility without requiring deep C++ or NVIDIA CUDA expertise.

15. Text Generation Inference (TGI)

TGI is a Rust, Python, and gRPC server that powers HuggingChat, the Inference API, and Inference Endpoints at HuggingFace. It utilizes tensor parallelism (Accelerate) for faster inference on multiple GPUs.

It supports continuous batching for increased throughput, quantization, Paged and FlashAttention, token streaming using Server-Sent Events (SSE), and more. It also provides logit warpers (parameters such as temperature, repetition penalty, top-k, and top-p) and optimized implementations for a specific set of LLMs. TGI's license has changed over time, so check the current terms before using it commercially.

Which LLM Serving Model to Use?

Model Performance: The Big Picture

When choosing a large language model for serving tasks, you'll want to pick one that performs well for your specific use case. Naturally, performance can vary greatly based on the task at hand, so it helps to be specific about your needs and pick a model that has been benchmarked on similar tasks. Several benchmarks have been published, as well as constantly updated rankings.

Dataset Quality: What Was the Model Trained On?

It also matters what data the model was trained on.

- Was it curated or just raw data from anywhere?

- Does it contain NSFW material?

- What’s the license?

Some datasets are provided for research only or non-commercial use, while others are more permissive and allow for all sorts of applications.

Model License: What Can You Actually Do With It?

Models themselves also have licenses, which can be quite different from the data licenses mentioned above. Some are fully open-source, while others claim to be. They may be free to use in most cases, but have some restrictions attached to them (looking at you, Llama 2).

Model Size: Does It Fit Your Hardware?

The size of the model may be the most restrictive factor in your choice. The model simply must fit on the hardware you have at your disposal, or on the hardware you are willing to pay for.
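A quick estimate helps here. The sketch below computes the memory needed for the weights (parameters times bytes per parameter) and for the KV cache. The example configuration (32 layers, 32 KV heads, head dimension 128) is an assumption chosen to resemble a typical 7B-parameter model, and the result excludes activations and framework overhead, so treat it as a lower bound.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


def kv_cache_memory_gb(
    num_layers: int,
    num_kv_heads: int,
    head_dim: int,
    seq_len: int,
    batch_size: int = 1,
    bytes_per_value: int = 2,
) -> float:
    """Keys and values (hence the factor 2) for every layer, head, and token."""
    values = 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size
    return values * bytes_per_value / 1e9


# A 7B-parameter model at different precisions.
print(weight_memory_gb(7e9, 2))    # 14.0 GB at 16-bit
print(weight_memory_gb(7e9, 0.5))  # 3.5 GB at 4-bit quantization

# KV cache for one 4,096-token sequence with the assumed configuration.
kv = kv_cache_memory_gb(num_layers=32, num_kv_heads=32, head_dim=128, seq_len=4096)
print(round(kv, 2))                # 2.15 GB at 16-bit
```

The KV cache grows with both sequence length and the number of concurrent sequences, so a model whose weights fit comfortably can still run out of memory under load.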

Start Building with $10 in Free API Credits Today!

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLM models, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.