Mar 15, 2025

A Practical Guide to AWS SageMaker Inference for AI Model Efficiency

Inference Research

Large language models have taken artificial intelligence to new heights. However, deploying these models for real-world use can be challenging despite their exciting capabilities. For instance, a chatbot powered by LLM inference may provide detailed and nuanced responses to user queries in a staging environment. Once the model receives a substantial influx of traffic, such as during a product launch, performance may degrade drastically. Knowing how AI inference differs from training is crucial to understanding these challenges. AWS SageMaker Inference can help organizations overcome these hurdles by enabling them to deploy and scale AI models for high performance, cost efficiency, and low-latency predictions. This article will help you get started with this tool.

SageMaker Inference offers AI inference APIs, a vital solution for deploying LLMs to production. These APIs help organizations achieve their objectives by managing the complexities of LLM inference to reduce costs, maximize performance, and enable low-latency predictions.

What is the AWS SageMaker Inference Model?

An inference model is the end product of the machine learning (ML) lifecycle. After you train a model, you use it to make predictions on new data. In the context of Amazon SageMaker, inference refers to how ML models make those predictions.

An inference model can be considered a “snapshot” of the ML model at a particular time that contains all the artifacts to make predictions. In AWS SageMaker, inference models are deployed within a managed environment, allowing you to serve and make predictions on your trained model.

Amazon SageMaker Makes Inference Easy

Amazon SageMaker is a managed service that allows developers and data scientists to quickly build, train, and deploy machine learning (ML) models. SageMaker removes the heavy lifting from each step of the machine learning process, making it easier to develop high-quality models.

It provides a broad set of capabilities, such as:

- Preparing and labeling data

- Choosing an algorithm

- Training and tuning a model

- Deploying it to make predictions

Benefits of SageMaker Inference

Amazon SageMaker AI lets you deploy models in production for inference for any use case. SageMaker AI caters to a wide range of inference requirements, from low latency (a few milliseconds) and high throughput (millions of transactions per second) scenarios to long-running inference for use cases such as:

- Multilingual text processing

- Text-image processing

- Multi-modal understanding

- Natural language processing

- Computer vision

SageMaker AI provides a robust and scalable solution for all your inference needs.

Cost-Effective Inference Performance Optimization

Achieve optimal inference performance and cost with Amazon SageMaker AI. Amazon SageMaker AI offers more than 100 instance types with varying levels of compute and memory to suit different performance needs. To better utilize the underlying accelerators and reduce deployment cost, you can deploy multiple models to the same instance.

To further optimize costs, you can utilize autoscaling, which automatically adjusts the number of instances based on traffic volume. It shuts down instances when there is no usage, thereby reducing inference costs.
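As a rough illustration of the autoscaling setup described above, the sketch below builds the two request payloads that Application Auto Scaling expects for a SageMaker endpoint variant: a scalable target and a target-tracking policy. The endpoint name, variant name, capacity bounds, target value, and cooldowns are all hypothetical placeholders, not recommendations.

```python
def build_autoscaling_requests(endpoint_name, variant_name="AllTraffic",
                               min_instances=1, max_instances=4,
                               target_invocations_per_instance=100.0):
    """Return (scalable_target, scaling_policy) request dictionaries.

    All default values are illustrative assumptions; tune them for your workload.
    """
    common = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    }
    scalable_target = {
        **common,
        "MinCapacity": min_instances,
        "MaxCapacity": max_instances,
    }
    scaling_policy = {
        **common,
        "PolicyName": f"{endpoint_name}-invocations-target",  # hypothetical name
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Scale so each instance handles roughly this many invocations per minute
            "TargetValue": target_invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
            "ScaleInCooldown": 300,   # seconds, assumed value
            "ScaleOutCooldown": 60,   # seconds, assumed value
        },
    }
    return scalable_target, scaling_policy


# Usage with Boto3 (not executed here, requires AWS credentials):
#   aas = boto3.client("application-autoscaling")
#   target, policy = build_autoscaling_requests("my-endpoint")
#   aas.register_scalable_target(**target)
#   aas.put_scaling_policy(**policy)
```

Keeping the payloads in a plain function makes them easy to review and unit test before anything is applied to a live endpoint.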

Simplify Inference Management With MLOps

Reduce operational burden using SageMaker MLOps capabilities. As a fully managed service, Amazon SageMaker AI handles setting up and managing instances, ensuring software version compatibility, and patching versions. With built-in integration of MLOps features, it helps offload the operational overhead of:

- Deploying

- Scaling

- Managing ML models

This enables teams to reach production faster.

Four Diverse Inference Options

Amazon SageMaker AI offers a wide range of inference options to meet diverse business needs.

- Real-Time Inference: Real-time, interactive, and low-latency predictions for use cases with steady traffic patterns. You can deploy your model to a fully managed endpoint that supports autoscaling.

- Serverless Inference: Low latency and high throughput for use cases with intermittent traffic patterns. Serverless endpoints automatically launch and scale compute resources as needed, eliminating the need to select instance types or manage scaling policies manually.

- Asynchronous Inference: Low latency for use cases with large payloads (up to 1 GB) or long processing times (up to one hour), and near real-time latency requirements. Asynchronous Inference helps save costs by autoscaling the instance count to zero when there are no requests to process.

- Batch Transform: Offline inference on data batches for use cases with large datasets. With Batch Transform, you can preprocess datasets to remove noise or bias, and associate input records with inferences to help with result interpretation.
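The four options above can be read as a simple decision procedure. The sketch below encodes that reading in a small helper; the function name and its three boolean inputs are hypothetical simplifications, and a real decision would also weigh cost and the concrete service limits.

```python
def choose_inference_option(*, offline: bool, large_or_slow: bool,
                            intermittent_traffic: bool) -> str:
    """Map workload traits to one of the four SageMaker inference options.

    offline: results are not needed interactively (large datasets, scheduled jobs)
    large_or_slow: large payloads or long processing times per request
    intermittent_traffic: traffic arrives in bursts with idle periods in between
    """
    if offline:
        return "Batch Transform"
    if large_or_slow:
        return "Asynchronous Inference"
    if intermittent_traffic:
        return "Serverless Inference"
    # Interactive, low-latency requests with steady traffic
    return "Real-Time Inference"


print(choose_inference_option(offline=False, large_or_slow=False,
                              intermittent_traffic=True))
```

The order of the checks is a design choice: it favors the cheaper offline and asynchronous paths whenever the workload tolerates them.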

Scale Up or Down With Ease

SageMaker Model Deployment can support any scale of operation, including models that require rapid response in milliseconds or need to handle millions of transactions per second. It also integrates with SageMaker MLOps tools, making managing models throughout the machine learning pipeline possible.

Overview of AWS SageMaker Inference Options

Real-Time Inference: Speedy Predictions When You Need Them Most

Real-time inference enables users to generate predictions from their machine learning models instantaneously, making it ideal for applications that require immediate responses to events as they unfold. For example, a fraud detection system can leverage real-time inference to flag fraudulent transactions as they occur.

Real-time inference enhances user experience personalization by dynamically adapting to individual behaviors. A streaming service, for instance, could utilize real-time inference to recommend new shows or movies based on a user’s viewing history, ensuring that personalized content suggestions are delivered promptly.

Serverless Inference: Automatic Scaling for Variable Workloads

Serverless Inference is another option offered by AWS SageMaker Inference. With this option, you don't have to worry about managing servers or scaling your applications. AWS handles all of this for you, allowing you to focus on developing your machine learning models.

Serverless Inference can be particularly useful for applications with variable workloads. For instance, a retail business might see a surge in traffic during the holiday season. With Serverless Inference, the company can handle this increased traffic without provisioning additional resources.

Batch Transform: Efficiently Process Large Datasets

Batch Transform is an AWS SageMaker Inference option that allows users to process large volumes of data in batches. This can be useful for applications that don't need real-time predictions. For instance, a business might use Batch Transform to analyze its sales data at the end of each day.

Batch Transform can also preprocess large volumes of data before feeding it into a machine learning model. This can help improve the performance of your models, as they can be trained on clean, well-structured data.

Asynchronous Inference: Handling Long-Term Predictions with Ease

Asynchronous Inference is an AWS SageMaker Inference option that enables users to make requests for predictions and receive the results later. This can be beneficial for applications that can afford to wait for the results. For instance, an application might use Asynchronous Inference to analyze user behavior over time.
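To make the request-now, result-later flow concrete, here is a minimal sketch. It assumes an endpoint configuration that carries an `AsyncInferenceConfig` block and a runtime client exposing `invoke_endpoint_async`; the configuration name, variant name, and S3 locations are hypothetical placeholders.

```python
def build_async_endpoint_config(config_name, model_name, instance_type,
                                output_s3_uri, instance_count=1):
    """Build a create_endpoint_config request for an asynchronous endpoint."""
    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [
            {
                "VariantName": "AllTraffic",  # assumed variant name
                "ModelName": model_name,
                "InstanceType": instance_type,
                "InitialInstanceCount": instance_count,
            }
        ],
        # Results are written to S3 instead of being returned in the response
        "AsyncInferenceConfig": {"OutputConfig": {"S3OutputPath": output_s3_uri}},
    }


def submit_async_request(runtime_client, endpoint_name, input_s3_uri):
    """Queue a request whose payload already sits in S3.

    Returns the S3 location where the result will appear once processing ends.
    """
    response = runtime_client.invoke_endpoint_async(
        EndpointName=endpoint_name,
        InputLocation=input_s3_uri,
    )
    return response["OutputLocation"]


# Usage (not executed here, requires AWS credentials):
#   runtime = boto3.client("sagemaker-runtime")
#   submit_async_request(runtime, "my-async-endpoint", "s3://my-bucket/input/req.json")
```

Passing the client in as an argument keeps the helper easy to test with a stand-in object.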

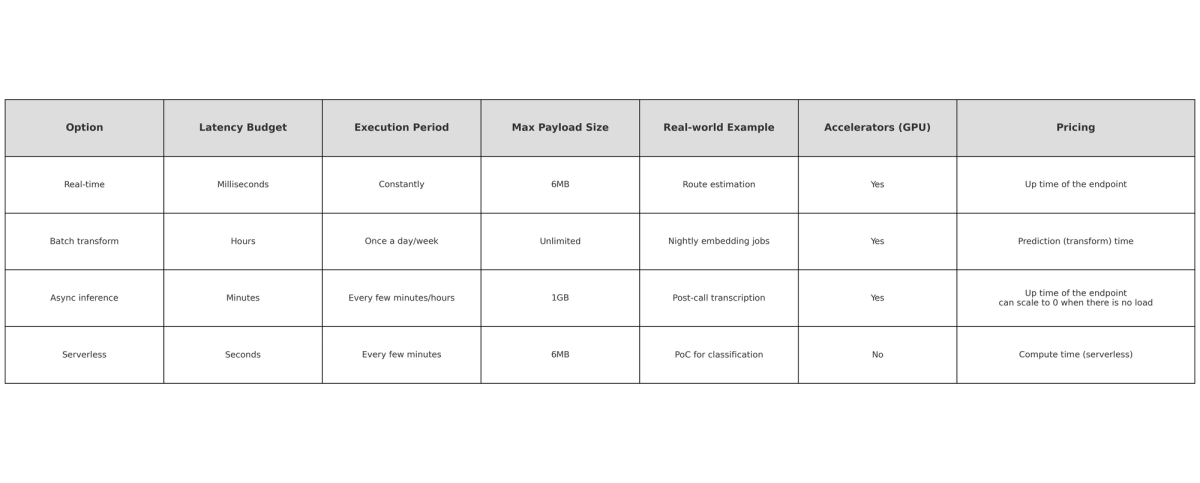

Each of these inference options has different characteristics and use cases. We have created a table to compare the current SageMaker inference options in terms of latency, execution period, payload size, and pricing, along with getting-started examples for each option.

Comparison table

How to Use AWS SageMaker Model Deployment for Inference

Create a Model in SageMaker Inference

The first step to deploying a machine learning model for inference using AWS SageMaker is to create a model in SageMaker Inference. This process involves specifying the location of your model artifacts stored in Amazon S3 and associating it with a container image.

The container image can be either one you created or a pre-built one provided by AWS. Once you create a model in SageMaker Inference, you can deploy it to an endpoint for real-time inference or use it with batch transform for batch inference.
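A minimal sketch of this step: the helper below assembles the request for the Boto3 `create_model` call from the two pieces described above, the S3 location of the model artifacts and the container image. The model name, image URI, and role ARN in the usage comment are hypothetical placeholders.

```python
def build_create_model_request(model_name, image_uri, model_data_url, role_arn):
    """Assemble the arguments for sagemaker_client.create_model(**request)."""
    if not model_data_url.startswith("s3://"):
        raise ValueError("model_data_url must be an S3 URI such as s3://bucket/key")
    return {
        "ModelName": model_name,
        "PrimaryContainer": {
            "Image": image_uri,              # your own or an AWS pre-built image
            "ModelDataUrl": model_data_url,  # model.tar.gz stored in S3
        },
        "ExecutionRoleArn": role_arn,
    }


# Usage (not executed here, requires AWS credentials):
#   sm_client = boto3.client("sagemaker")
#   sm_client.create_model(**build_create_model_request(
#       "my-model", "<image-uri>", "s3://my-bucket/model/model.tar.gz", "<role-arn>"))
```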

Choose Your Inference Option

Next, you need to choose an inference option. The two main choices are real-time inference and batch transform; serverless and asynchronous inference, described above, are also served from endpoints. Real-time inference (online inference) is ideal for applications requiring immediate predictions. With this option, you deploy your model to an endpoint and send requests to the endpoint to receive predictions in real time.

On the other hand, Batch transform is suitable for scenarios where you need to process large volumes of data and can tolerate some latency. With this option, SageMaker outputs your inferences to a specified location in Amazon S3.

Configure Your Endpoint

In this step, you set up a SageMaker Inference endpoint configuration. You also need to decide on the type of instance and the number of instances you need to run behind the endpoint.

To make an informed decision, you can use the Amazon SageMaker Inference Recommender, which offers suggestions based on the size of your model and your memory requirements. In the case of Serverless Inference, the only requirement is to provide your memory configuration.
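The difference between an instance-based and a serverless configuration shows up in the production variant of the endpoint configuration. The sketch below builds either form; the variant name and the default concurrency value are hypothetical, and note that the `ServerlessConfig` block takes a `MaxConcurrency` setting alongside the memory size.

```python
def build_production_variant(model_name, *, instance_type=None, instance_count=1,
                             serverless_memory_mb=None, serverless_max_concurrency=5):
    """Build one ProductionVariants entry for create_endpoint_config."""
    variant = {"VariantName": "AllTraffic", "ModelName": model_name}
    if serverless_memory_mb is not None:
        # Serverless: no instance type or count, only memory and concurrency
        variant["ServerlessConfig"] = {
            "MemorySizeInMB": serverless_memory_mb,
            "MaxConcurrency": serverless_max_concurrency,  # assumed default
        }
    elif instance_type is not None:
        # Instance-based: pick the instance type and how many run behind the endpoint
        variant["InstanceType"] = instance_type
        variant["InitialInstanceCount"] = instance_count
    else:
        raise ValueError("Provide instance_type or serverless_memory_mb")
    return variant


realtime_variant = build_production_variant("my-model", instance_type="ml.c5.xlarge",
                                            instance_count=2)
serverless_variant = build_production_variant("my-model", serverless_memory_mb=2048)
print(realtime_variant)
print(serverless_variant)
```

Either dictionary would then be passed as an element of `ProductionVariants` in the endpoint configuration request.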

Create an Endpoint in SageMaker Inference

Now you’re ready to create an endpoint in SageMaker Inference. An endpoint is a live instance of your model ready to serve requests. Once you create an endpoint, you can invoke it to receive inferences as a response.

Invoke Your Endpoint to Receive Inferences

After creating your endpoint, you can invoke it to receive inferences. The deployment workflow, from creating the model to invoking the endpoint, can be driven through several AWS interfaces, such as:

- The AWS console

- The AWS SDKs

- The SageMaker Python SDK

- AWS CloudFormation

- The AWS CLI
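As one example of invoking an endpoint through the AWS SDK for Python, the sketch below wraps the `invoke_endpoint` call of the `sagemaker-runtime` client. It assumes the model accepts and returns JSON; the endpoint name and payload in the usage comment are hypothetical.

```python
import json


def invoke_json_endpoint(runtime_client, endpoint_name, payload):
    """Send a JSON payload to a real-time endpoint and decode the JSON reply."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",  # must match what the inference script expects
        Body=json.dumps(payload),
    )
    # The response body is a stream; read it fully before decoding
    return json.loads(response["Body"].read())


# Usage (not executed here, requires AWS credentials and a live endpoint):
#   runtime = boto3.client("sagemaker-runtime")
#   result = invoke_json_endpoint(runtime, "my-endpoint", {"inputs": "Hello"})
```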

Batch Inference with the Batch Transform

Performing batch inference with the batch transform feature is another way to deploy a machine learning model for inference in AWS SageMaker. With this approach, you point to your model artifacts and input data and then establish a batch inference job.

Unlike hosting an endpoint for inference, Amazon SageMaker outputs your inferences to a designated Amazon S3 location.
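A batch inference job of this kind can be sketched as a single request to the Boto3 `create_transform_job` call. The helper below builds that request; the job name, S3 prefixes, content type, and instance choice are hypothetical placeholders.

```python
def build_transform_job_request(job_name, model_name, input_s3_uri, output_s3_uri,
                                instance_type="ml.m5.xlarge", instance_count=1,
                                content_type="text/csv"):
    """Assemble the arguments for sagemaker_client.create_transform_job(**request)."""
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,  # a model already created in SageMaker
        "TransformInput": {
            "DataSource": {
                "S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_s3_uri}
            },
            "ContentType": content_type,
        },
        # Inferences are written here rather than returned by an endpoint
        "TransformOutput": {"S3OutputPath": output_s3_uri},
        "TransformResources": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,
        },
    }


request = build_transform_job_request(
    "nightly-scoring", "my-model", "s3://my-bucket/input/", "s3://my-bucket/output/"
)
print(request["TransformOutput"])
```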

Use Model Monitor to Track Model Metrics Over Time

One of the critical aspects of managing machine learning models is monitoring their performance over time. Models can experience drift as the data they were trained on becomes less relevant or the environment changes. This is where SageMaker Model Monitor comes in. The Model Monitor provides detailed insights into your model's performance using metrics.

It tracks various aspects, such as input-output data, predictions, and more, allowing you to understand how your model performs over time. This helps you decide when to retrain or update your models. With the Model Monitor, you can set up alerts to notify you when your model's performance deviates significantly from the established baseline.
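Monitoring of this kind depends on the endpoint recording its traffic. As a hedged sketch, the helper below builds the `DataCaptureConfig` block that can be added to an endpoint configuration so that request inputs and prediction outputs are saved to S3 for later analysis; the destination URI and sampling rate are hypothetical.

```python
def build_data_capture_config(destination_s3_uri, sampling_percentage=100):
    """Build the DataCaptureConfig section of a create_endpoint_config request."""
    if not 0 <= sampling_percentage <= 100:
        raise ValueError("sampling_percentage must be between 0 and 100")
    return {
        "EnableCapture": True,
        "InitialSamplingPercentage": sampling_percentage,
        "DestinationS3Uri": destination_s3_uri,
        # Capture both what the model received and what it predicted
        "CaptureOptions": [{"CaptureMode": "Input"}, {"CaptureMode": "Output"}],
    }


capture_config = build_data_capture_config("s3://my-bucket/data-capture/", 50)
print(capture_config)
```

Sampling less than 100 percent of requests is a common way to limit storage cost on high-traffic endpoints, at the price of a coarser picture of the data.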

Use SageMaker MLOps to Automate Machine Learning Workflows

Automation plays a vital role in optimizing machine learning operations, and AWS SageMaker MLOps offers comprehensive tools to automate key stages of the ML workflow. From data preparation and model training to deployment and monitoring, MLOps helps streamline your processes, minimize manual errors, and ensure that your models remain up-to-date with the latest data.

SageMaker MLOps seamlessly integrates with CI/CD pipelines and version control systems, enabling the automation of model training and deployment. You can configure pipelines to automatically retrain and deploy models based on new data or code updates, ensuring continuous model improvement and delivering the most accurate, relevant predictions for your applications.

Use an Inf1 Instance Real-Time Endpoint for Large-Scale Models

Inf1 instances are powered by AWS Inferentia chips, which are designed specifically for machine learning inference tasks. They offer high performance at a low cost, making them ideal for large-scale applications. In addition, you can use a real-time endpoint with your Inf1 instance.

This allows you to make real-time inference requests to your models, which is especially useful for applications that require immediate predictions. By leveraging Inf1 instances and real-time endpoints, you can run your large-scale applications more efficiently and cost-effectively.

Use SageMaker’s Pre-Built Models to Optimize Inference Performance

AWS SageMaker offers a collection of pre-built models optimized for various inference tasks. These models can significantly speed up the deployment process and improve inference performance. Using pre-built models, you can avoid the time and resources required to develop and train a model from scratch.

These pre-built models cover various applications, such as image and video analysis, natural language processing, and predictive analytics. They are optimized for performance on AWS infrastructure, ensuring fast and efficient inference. Additionally, you can customize these models with your data to better fit your specific use case.

How to Use Amazon SageMaker Inference Recommender

As a data scientist, after a long time spent on model training and development, you are finally ready to deploy your model to a SageMaker endpoint. However, you may not know which instance type to choose to balance cost and latency. Traditionally, finding out would involve deploying and testing the model on multiple instance types and sizes.

Evaluating Deployment Efficiency

For each deployment, you collect metrics such as latency, cost, and the maximum number of invocations each deployment can handle (Locust and JMeter are two standard open-source load testing tools). Then, you will need to review each deployment and aggregate the data to compare.
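When aggregating these metrics by hand, a useful derived number is the cost per inference at full load. The sketch below computes it from an hourly instance price and the maximum invocations per minute measured in a load test; the figures in the example are made up for illustration only.

```python
def cost_per_inference(cost_per_hour, max_invocations_per_minute):
    """Cost of one inference when the deployment runs at its measured maximum rate."""
    if max_invocations_per_minute <= 0:
        raise ValueError("max_invocations_per_minute must be positive")
    invocations_per_hour = max_invocations_per_minute * 60
    return cost_per_hour / invocations_per_hour


# Hypothetical load-test result: $0.24 per hour, 1,000 invocations per minute
example_cost = cost_per_inference(0.24, 1000)
print(f"{example_cost:.8f} USD per inference")
```

This is a best-case figure: an endpoint that runs below its maximum rate pays the same hourly price for fewer inferences.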

Streamlining Deployment

This iterative process is time-consuming and sometimes takes weeks of experimentation. Released in December 2021, Amazon SageMaker Inference Recommender can help you solve this problem and decide which instance to use. It utilizes load testing to help optimize cost and performance, enabling you to select the best instance and configuration for deploying your model.

This blog walks through an example of how to use Amazon SageMaker Inference Recommender in your machine learning workflow and shares tips we learned along the way.

Leveraging Existing Endpoints

If you have already deployed an endpoint for your model successfully, this article is for you. This article assumes that you have a packaged model with inference scripts in S3 from a previous model deployment.

Code Walkthrough

Inference Recommender works with SageMaker Model Registry to test and compare instance types for a specific model package version in Model Registry. So, before running Inference Recommender, you need to use SageMaker Model Registry to create a model package group and register your model version to that package group.

Then, the Amazon Resource Name (ARN) of the registered model version is used when creating an Inference Recommender job.

1. Importing Packages and Creating a Model Package Group

The code below shows the packages that need to be imported. Since Amazon SageMaker Inference Recommender was just released at the end of 2021, you should make sure the SageMaker version is up to date, so it provides the functionality of creating Inference Recommender jobs.

In this code walkthrough example, the version for SageMaker is 2.94.0 and Boto3 is 1.24.27. We also create a SageMaker session and establish a Boto3 client for SageMaker.

```python
import boto3
import sagemaker
from sagemaker import Session, get_execution_role

region = boto3.Session().region_name
role = get_execution_role()
sm_client = boto3.client("sagemaker", region_name=region)
sagemaker_session = Session()

# check the sagemaker version
print(sagemaker.__version__)
```

If the SageMaker version is not correct, use the code below to install the specified version. After running the command, remember to restart the kernel to activate the new version.

```python
!pip install --upgrade "sagemaker==2.94.0"
```

The code below shows how to create a model package group:

```python
model_package_group_name = "inference-recommender-model-registry"
model_package_group_description = "testing for inference recommender"

model_package_group_input_dict = {
    "ModelPackageGroupName": model_package_group_name,
    "ModelPackageGroupDescription": model_package_group_description,
}

create_model_package_group_response = sm_client.create_model_package_group(
    **model_package_group_input_dict
)

# This is the ARN of the group, not of a model package version
model_package_group_arn = create_model_package_group_response["ModelPackageGroupArn"]
print("ModelPackageGroup ARN : {}".format(model_package_group_arn))
```

2. Preparing URL for Model Artifacts in S3

SageMaker models need to be packaged in a .tar.gz file. When your SageMaker Endpoint is provisioned, the files in the archive will be extracted and put in /opt/ml/model/ on the Endpoint. To bring your own Deep Learning model, SageMaker expects a single archive file in .tar.gz format, containing a model file (.pth) and the script (.py) for inference.
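If you need to build such an archive yourself, the standard library is enough. The sketch below packs a model file and an inference script into a single `.tar.gz`; the file names and the layout inside the archive are assumptions for illustration, so check what your container expects.

```python
import tarfile
from pathlib import Path


def package_model(files, archive_path="model.tar.gz"):
    """Create a gzip-compressed tar archive.

    files maps each local path to the name it should have inside the archive.
    """
    with tarfile.open(archive_path, "w:gz") as tar:
        for local_path, archive_name in files.items():
            tar.add(local_path, arcname=archive_name)
    return Path(archive_path)


def list_archive(archive_path):
    """Return the sorted member names of the archive, useful as a sanity check."""
    with tarfile.open(archive_path, "r:gz") as tar:
        return sorted(tar.getnames())


# Usage (hypothetical local files):
#   package_model({"model.pth": "model.pth", "inference.py": "code/inference.py"})
#   print(list_archive("model.tar.gz"))
```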

Post-Deployment Retrieval

If you have deployed your model or used it to do a batch transformation job, your model has already been packaged in this way. From a deployed endpoint, you can find the packaged model files by clicking on the deployed endpoint and navigating to "Endpoint configuration settings" > "Production variants" and clicking on the model name.

From this page, you can find the S3 location of the model package in the section "Model data location." Below, the code assigns a variable with the path to the model tar file stored in S3. This should be updated to point to the S3 path of your model artifacts.

```python
model_url = "s3://sagemaker-us-east-2-*******/model/model.tar.gz"
```

3. Prepare Sample Payload Files

After the model artifact is ready, a payload archive needs to be prepared. It contains the input files that Inference Recommender uses to invoke your endpoint. Inference Recommender will randomly sample files from this payload archive to call the endpoint, so make sure it includes a distribution of input payloads similar to what you expect in production.

Note that your inference code must be able to read in the file formats from the payload archive, so the input format would be the same as what you would use when you invoke the endpoint from a notebook cell directly. Below are steps to zip this payload file. In this example, the payload folder contains six different testing images in png format.

- Create a local folder “sample_payload” in your working directory on the SageMaker instance, identify a variety of sample payload input files, and upload them to this folder. Then change into the folder:

  ```python
  %cd ./sample_payload/
  ```

- Run the command below to create the payload tar file in the parent directory, then return to the working directory:

  ```python
  payload_archive_name = "payload_images.tar.gz"
  !tar czvf ../{payload_archive_name} *
  %cd ..
  ```

- Upload this payload tar file to S3:

  ```python
  sample_payload_url = sagemaker_session.upload_data(
      path=payload_archive_name, key_prefix="test"
  )
  print("payload uploaded to: {}".format(sample_payload_url))
  ```

4. Register Model in the Model Registry

As mentioned above, Inference Recommender works with a specific model package version in your Model Registry. This next section walks through creating a new model package version. We will use the Boto3 SageMaker client we created above to submit our model package version to the Model Registry. Inference Recommender requires some additional parameters to be defined in your model package version. You can see a list of the parameters necessary to register a model package version in the Boto3 documentation for create_model_package.

Beyond Required Parameters

In addition to the parameters listed as required in that documentation, using Inference Recommender also requires that the following parameters be defined:

- Domain - the ML domain of your model package. Domains supported by Inference Recommender:

  - COMPUTER_VISION

  - NATURAL_LANGUAGE_PROCESSING

  - MACHINE_LEARNING

- Task - the task your model accomplishes. Tasks supported by Inference Recommender:

  - IMAGE_CLASSIFICATION

  - OBJECT_DETECTION

  - TEXT_GENERATION

  - IMAGE_SEGMENTATION

  - FILL_MASK

  - CLASSIFICATION

  - REGRESSION

  - OTHER

- SamplePayloadUrl - the payload S3 URL from step 3.

- Framework - the framework used by your model package.

- FrameworkVersion - the version of the framework used by your model package.

Preparing for Registry Submission

In the code sections below, we prepare an input dictionary that contains all the required parameters, then submit it to the Model Registry using Boto3. Note: Select the task that is the closest match to your model. Choose OTHER if none apply.

Let's start with some of the basic details of our model package. Here we are using a PyTorch model running on PyTorch version 1.8.0. It is an image segmentation model, so we will use the parameters below.

```python
framework = "pytorch"
framework_version = "1.8.0"
model_name = "my-pytorch-model"
ml_domain = "COMPUTER_VISION"
ml_task = "IMAGE_SEGMENTATION"

create_model_package_input_dict = {
    "ModelPackageGroupName": model_package_group_name,
    "Domain": ml_domain.upper(),
    "Task": ml_task.upper(),
    "SamplePayloadUrl": sample_payload_url,
    "ModelPackageDescription": "{} {} inference recommender".format(framework, model_name),
    "ModelApprovalStatus": "PendingManualApproval",
}
```

The following steps set up the inference configuration specifications for your model package. This will provide information on how the model should be hosted.

Matching Framework and Instance Type

First, we need to retrieve the container image, which we will use to host our model. Here, we take the model image provided by SageMaker for PyTorch 1.8.0, the framework we have defined above. We also need to select an instance type here, so that SageMaker knows whether we need a container image that uses a GPU or CPU.

```python
instance_type = "ml.c4.xlarge"

image_uri = sagemaker.image_uris.retrieve(
    framework=framework,
    region=region,
    version=framework_version,
    py_version="py36",
    image_scope="inference",
    instance_type=instance_type,
)
```

Next, we provide the other arguments for the inference specification, format the information in the required dictionary structure, and add it to the request dictionary. Some parameters of note:

- SupportedContentTypes - defines the expected MIME type for requests to the model. This should match the Content-Type header used when invoking your endpoint. You can find more information in the SageMaker documentation on the data formats used for inference.

- SupportedRealtimeInferenceInstanceTypes - an optional, user-provided list of instance types that the model can be deployed to. Inference Recommender will test and compare these instance types; if the list is not provided, Inference Recommender will automatically select some instance types.

```python
modelpackage_inference_specification = {
    "InferenceSpecification": {
        "Containers": [
            {
                "Image": image_uri,  # from above
                "ModelDataUrl": model_url,
                "Framework": framework.upper(),  # required
                "FrameworkVersion": framework_version,
                "NearestModelName": model_name,
            }
        ],
        "SupportedContentTypes": ["application/x-image"],  # required
        "SupportedResponseMIMETypes": [],
        "SupportedRealtimeInferenceInstanceTypes": [
            "ml.c4.xlarge",
            "ml.c5.xlarge",
            "ml.m5.xlarge",
            "ml.c5d.large",
            "ml.m5.large",
            "ml.inf1.xlarge",
        ],  # optional
    }
}

# add it to our input dictionary
create_model_package_input_dict.update(modelpackage_inference_specification)
```

Finally, the code below is used to register the model version using the above-defined specification.

```python
create_model_package_response = sm_client.create_model_package(
    **create_model_package_input_dict
)
model_package_arn = create_model_package_response["ModelPackageArn"]
print("ModelPackage Version ARN : {}".format(model_package_arn))
```

5. Create a SageMaker Inference Recommender Job

Now, with your model package version in Model Registry, you can launch a 'Default' job to get instance recommendations. This only requires your ModelPackageVersionArn and creates a recommendation job, which will provide recommendations within one to two hours. The result is a list of instance types and associated environment variables, with the cost, throughput, and latency metrics as observed by the inference recommendation job.

```python
import time

default_job_name = model_name + "-instance-" + str(round(time.time()))
job_description = "{} {}".format(framework, model_name)
job_type = "Default"

rv = sm_client.create_inference_recommendations_job(
    JobName=default_job_name,
    JobDescription=job_description,  # optional
    JobType=job_type,
    RoleArn=role,
    InputConfig={"ModelPackageVersionArn": model_package_arn},
)
```

6. Check the Instance Recommendation Results

By running the code below, you can see the performance metrics comparison table, including:

- MaxInvocations - The maximum number of InvokeEndpoint requests sent to an endpoint per minute. Units: None

- ModelLatency - The interval of time taken by a model to respond as viewed from SageMaker. Units: Milliseconds

- CostPerHour - The estimated cost per hour for your real-time endpoint. Units: US Dollars

- CostPerInference - The estimated cost per inference for your real-time endpoint. Units: US Dollars

Depending on how many instance types are specified for running Inference Recommender, the whole process can take hours.

In this example, it takes about 1 hour. There are two ways to view the job's progress. The first method is to go to “SageMaker resources” -> “Inference Recommender Jobs”, where you can view the status of the job created, as shown in the screenshot below. The second way is to run the code block below.

```python
import pandas as pd

finished = False
while not finished:
    inference_recommender_job = sm_client.describe_inference_recommendations_job(
        JobName=str(default_job_name)
    )
    if inference_recommender_job["Status"] in ["COMPLETED", "STOPPED", "FAILED"]:
        finished = True
    else:
        print("In progress")
        time.sleep(300)

if inference_recommender_job["Status"] == "FAILED":
    print("Inference recommender job failed ")
    print("Failed Reason: {}".format(inference_recommender_job["FailureReason"]))
else:
    print("Inference recommender job completed")
```

The code below is used to extract the comparison metric results for different instances.

```python
data = [
    {**x["EndpointConfiguration"], **x["ModelConfiguration"], **x["Metrics"]}
    for x in inference_recommender_job["InferenceRecommendations"]
]
df = pd.DataFrame(data)
df.drop("VariantName", inplace=True, axis=1)
pd.set_option("display.max_colwidth", 400)
df.head()
```

The figure below shows an example of the comparison table, which helps you choose which instance type to use for endpoint deployment.
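Once the comparison data is available, picking an instance can be automated with a simple rule. The sketch below chooses the instance type with the lowest cost per inference among those that meet a latency budget. It assumes each recommendation carries `EndpointConfiguration` and `Metrics` entries as in the code above; the sample values are invented for illustration and are not real benchmark results.

```python
def pick_instance(recommendations, max_latency_ms):
    """Return the cheapest instance type whose latency fits the budget, or None."""
    eligible = [
        r for r in recommendations
        if r["Metrics"]["ModelLatency"] <= max_latency_ms
    ]
    if not eligible:
        return None
    best = min(eligible, key=lambda r: r["Metrics"]["CostPerInference"])
    return best["EndpointConfiguration"]["InstanceType"]


# Hypothetical recommendations (made-up numbers)
sample_recommendations = [
    {"EndpointConfiguration": {"InstanceType": "ml.c5.xlarge"},
     "Metrics": {"CostPerInference": 4e-6, "ModelLatency": 40}},
    {"EndpointConfiguration": {"InstanceType": "ml.m5.large"},
     "Metrics": {"CostPerInference": 3e-6, "ModelLatency": 90}},
    {"EndpointConfiguration": {"InstanceType": "ml.inf1.xlarge"},
     "Metrics": {"CostPerInference": 2e-6, "ModelLatency": 30}},
]

print(pick_instance(sample_recommendations, max_latency_ms=50))
```

Other selection rules are equally valid, for example maximizing `MaxInvocations` under a cost ceiling; the right one depends on whether latency or budget is the harder constraint.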

Additional Tips

Here are some additional tips and recommendations when using Inference Recommender:

- Your inference script that is deployed with the model should not need any changes to work with SageMaker Inference Recommender. You can point directly to the model.tar.gz file with the packaged inference script that you use to do batch transformation or deploy an endpoint.

- When zipping sample payload files, do it on SageMaker instead of in your local environment. Differences in operating systems may result in a different format when zipping, which can make the file unreadable when running the inference recommender.

- When you retrieve the image URI from SageMaker, you must provide an instance type. This is used to determine whether the inference instances will be using a GPU, so that AWS knows whether you want the GPU image or the CPU image. AWS also maintains a GitHub repository that provides details on the deep learning container images it offers.

- The example illustrated in this blog uses the default load testing built into Inference Recommender. Inference Recommender also allows you to do customized load testing, for example to tune environment variables.
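For the customized load testing mentioned in the last tip, the job type changes from 'Default' to 'Advanced' and the request carries a traffic pattern, resource limits, and stopping conditions. The sketch below builds such a request under the assumption that the field names follow the Boto3 `create_inference_recommendations_job` API; every value shown (instance type, users, durations, thresholds, the environment variable) is a hypothetical placeholder.

```python
def build_advanced_job_request(job_name, role_arn, model_package_arn,
                               instance_type="ml.c5.xlarge",
                               latency_threshold_ms=100):
    """Assemble an 'Advanced' Inference Recommender job request (illustrative values)."""
    return {
        "JobName": job_name,
        "JobType": "Advanced",
        "RoleArn": role_arn,
        "InputConfig": {
            "ModelPackageVersionArn": model_package_arn,
            "JobDurationInSeconds": 7200,
            "EndpointConfigurations": [
                {
                    "InstanceType": instance_type,
                    # Environment variables to try, assumed example
                    "EnvironmentParameterRanges": {
                        "CategoricalParameterRanges": [
                            {"Name": "OMP_NUM_THREADS", "Value": ["2", "4"]}
                        ]
                    },
                }
            ],
            # One load phase: start with 1 user, add 1 user per second for 120 seconds
            "TrafficPattern": {
                "TrafficType": "PHASES",
                "Phases": [
                    {"InitialNumberOfUsers": 1, "SpawnRate": 1, "DurationInSeconds": 120}
                ],
            },
            "ResourceLimit": {"MaxNumberOfTests": 2, "MaxParallelOfTests": 1},
        },
        "StoppingConditions": {
            "MaxInvocations": 300,
            "ModelLatencyThresholds": [
                {"Percentile": "P95", "ValueInMilliseconds": latency_threshold_ms}
            ],
        },
    }


# Usage (not executed here, requires AWS credentials):
#   sm_client.create_inference_recommendations_job(
#       **build_advanced_job_request("my-advanced-job", role, model_package_arn))
```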
