Apr 22, 2025

What is Machine Learning Inference? A Guide to Smarter AI Predictions

Inference Research

Training a machine learning model is only half the journey. Getting it to make accurate predictions on new data is where real impact happens. This process is known as machine learning inference, and it turns a trained model into a functional AI system. Whether you're building AI for healthcare risk assessment, fraud detection, or content recommendations, inference is the key to applying machine learning in the real world. In this article, we’ll explain how machine learning inference works, why it’s essential for AI deployment, and how to optimize it for speed and accuracy. You’ll also learn how AI inference APIs can streamline this process, helping you deploy models that make fast, reliable predictions at scale. Additionally, understanding the difference between AI inference and training is crucial to optimizing model performance and ensuring smooth deployment.

AI inference APIs are a valuable tool for achieving your inference objectives. These application programming interfaces can reduce the time and costs of deploying machine learning models and help you get the accurate AI predictions you need faster.

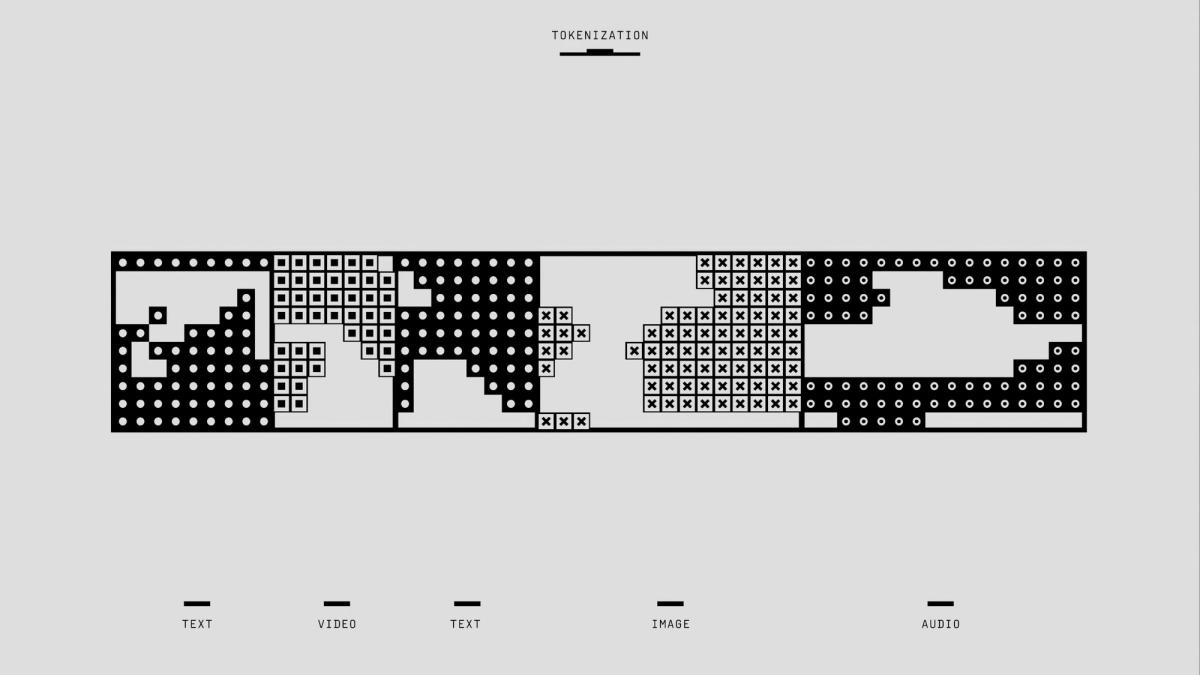

What is Machine Learning Inference?

Machine learning inference involves feeding live data points into a machine learning model (or ML model) to generate an output, such as a numerical score. This process is also called "operationalizing an ML model" or "deploying an ML model into production." Once the model is in production, it is often referred to as artificial intelligence (AI) due to its ability to perform tasks that mimic human thinking and analysis.

Machine learning inference typically involves deploying a software application into a production environment. The ML model is a set of code that executes a mathematical algorithm. This algorithm processes data based on specific characteristics, or “features,” critical to making accurate predictions or decisions.

The Two Parts of Machine Learning Inference

An ML lifecycle can be divided into two distinct parts.

- The first is the training phase, in which an ML model is created or “trained” by running a specified subset of data through it.

- ML inference is the second phase, in which the model is put into action on live data to produce actionable output. The data processing by the ML model is often referred to as “scoring,” so one can say that the ML model scores the data, and the output is a score.
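
The two phases can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pattern: the data is made up, and the `train` and `score` names and the choice of a one-feature linear model are hypothetical choices for the example.

```python
# Hypothetical example: a one-feature linear model, y = slope * x + intercept.

def train(xs, ys):
    """Training phase: fit slope and intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return {"slope": slope, "intercept": intercept}


def score(model, x):
    """Inference phase: apply the frozen parameters to a new data point."""
    return model["slope"] * x + model["intercept"]


# Training uses historical (made-up) data where the outcome is known.
history_x = [1.0, 2.0, 3.0, 4.0]
history_y = [3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
model = train(history_x, history_y)

# Inference scores a live input the model has never seen.
print(score(model, 10.0))
```

Note that `score` never touches the training data. Only the learned parameters travel into production, which is why the two phases can run on different systems.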

The Deployment of Machine Learning Inference

DevOps engineers or data engineers typically handle the deployment of ML or AI inference. Sometimes, the data scientists who trained the models are asked to manage the inference process. This can create significant challenges, as data scientists may lack expertise in deploying systems.

Successful ML deployments often require close coordination between teams, and newer software technologies are increasingly being used to streamline the process. An emerging field called "MLOps" is helping to bring more structure and resources to the deployment and ongoing maintenance of ML models, ensuring they can be updated and adapted as needed.

The Process of Machine Learning Inference

Machine learning (ML) inference involves applying a machine learning model to a dataset and generating an output or “prediction”. This output might be a numerical score, a text string, an image, or structured or unstructured data.

Generally, a machine learning model is software code implementing a mathematical algorithm. The machine learning inference process deploys this code into a production environment, making it possible to generate predictions for inputs provided by actual end users.

Importance of Inference in the Machine Learning Workflow

Fuels Machine Learning Performance

Inference is a critical component of the machine learning workflow, as it enables the deployment and usage of trained models in real-world scenarios. Once a model has been trained on historical data, its primary purpose during inference is to make predictions or classifications on new data.

This phase is essential for various applications, including:

- Image recognition

- Natural language processing

- Recommendation systems

Efficient and accurate inference is essential for deploying machine learning models effectively and achieving desired outcomes in practical settings.

Supervised Learning

In supervised learning, inference refers to using a trained model to make predictions or classify new data points. During the training phase, supervised learning models learn patterns and relationships from labeled data, where each data point is associated with a known outcome or label.

Once trained, the model can generalize this knowledge to predict unseen or future data. Inference involves passing new input data through the trained model to obtain predictions or classifications based on the learned patterns and relationships encoded in the model’s parameters. The goal of inference in supervised learning is to accurately predict outcomes or classify input data based on the learned patterns from the training data.

Use Cases and Examples of Supervised Learning Inference

In supervised learning, inference finds extensive applications across various domains, including but not limited to:

- Image Classification: Inference is used to classify images into predefined categories or labels, such as identifying objects in photographs or medical images.

- Sentiment Analysis: Supervised learning models can infer the sentiment of text data, such as customer reviews or social media posts, by predicting whether the sentiment is positive, negative, or neutral.

- Disease Diagnosis: Medical professionals use supervised learning models for inferring disease diagnoses based on patient symptoms, medical history, and diagnostic tests.

- Predictive Maintenance: Supervised learning models can infer the likelihood of equipment failure or maintenance needs based on sensor data and historical maintenance records in industrial settings.

- Financial Forecasting: In finance, supervised learning models are employed to infer future stock prices, market trends, and investment opportunities based on historical market data.

Unsupervised Learning

In unsupervised learning, inference refers to extracting meaningful patterns, structures, or relationships from unlabeled data. Unlike supervised learning, where the data is labeled with known outcomes, unsupervised learning models work with unstructured or unlabeled data. They aim to uncover hidden insights or representations within the data itself.

Inference in unsupervised learning involves clustering similar data points, dimensionality reduction, or anomaly detection without explicit guidance from labeled examples. Unsupervised learning models infer underlying structures or groupings in the data, which can provide valuable insights or serve as a basis for further analysis and decision-making.
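
As a rough sketch of the anomaly detection case, the snippet below learns a simple profile (mean and standard deviation) from unlabeled values and then flags new values that fall far from it. The latency figures, the `fit` and `is_anomaly` names, and the z-score threshold of 3 are assumptions chosen for illustration.

```python
import statistics


def fit(values):
    """Learn a profile of 'normal' from unlabeled data: mean and std deviation."""
    return {"mean": statistics.mean(values), "std": statistics.pstdev(values)}


def is_anomaly(profile, value, threshold=3.0):
    """Inference: flag a new value whose z-score exceeds the threshold."""
    z = abs(value - profile["mean"]) / profile["std"]
    return z > threshold


# Made-up request latencies in milliseconds, with no labels attached.
observed = [101, 99, 100, 102, 98, 100, 101, 99]
profile = fit(observed)

print(is_anomaly(profile, 100))  # a typical value
print(is_anomaly(profile, 150))  # far from anything seen so far
```

No example was ever labeled "normal" or "anomalous". The structure comes entirely from the data itself.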

Use Cases and Examples of Unsupervised Learning Inference

Unsupervised learning inference finds applications across various domains, enabling exploratory data analysis, pattern discovery, and data-driven decision-making. Some everyday use cases and examples include:

- Customer Segmentation: Unsupervised learning models can infer distinct customer segments or clusters based on demographic, behavioral, or transactional data, allowing businesses to tailor marketing strategies and personalize customer experiences.

- Anomaly Detection: In cybersecurity, unsupervised learning models infer anomalous patterns or unusual behaviors in network traffic, identifying potential security threats or suspicious activities without prior knowledge of specific attack signatures.

- Topic Modeling: Unsupervised learning techniques such as Latent Dirichlet Allocation (LDA) can infer topics or themes within large text corpora, facilitating document clustering, content recommendation, and sentiment analysis in natural language processing tasks.

- Dimensionality Reduction: Methods like Principal Component Analysis (PCA) or t-distributed Stochastic Neighbor Embedding (t-SNE) infer low-dimensional representations of high-dimensional data, enabling visualization and interpretation of complex datasets in fields like bioinformatics, genomics, and image processing.

- Market Basket Analysis: Unsupervised learning algorithms like Apriori or FP-growth can infer associations or frequent itemsets from transactional data, revealing patterns of co-occurring items in retail sales data and informing inventory management, product placement, and cross-selling strategies.

Reinforcement Learning

In reinforcement learning (RL), inference refers to making decisions or selecting actions based on learned policies and environmental feedback. Unlike supervised and unsupervised learning, where models learn from labeled or unlabeled data, reinforcement learning agents interact with an environment to learn optimal strategies through trial and error.

Inference in RL involves selecting actions that maximize expected rewards or cumulative return over time, given the current state of the environment and the agent’s learned policy. Reinforcement learning models infer action-selection policies by iteratively exploring the environment, observing rewards, and updating their strategies through techniques like:

- Value iteration

- Policy iteration

- Deep Q-learning
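
To make the split between learning and inference concrete, here is a minimal value iteration sketch on a toy environment. The four-state corridor, the reward of 1 for reaching the last state, and the discount factor of 0.9 are all assumptions invented for the example.

```python
GAMMA = 0.9
STATES = [0, 1, 2, 3]  # state 3 is the terminal goal
ACTIONS = ["left", "right"]


def step(state, action):
    """Deterministic toy environment: returns (next_state, reward)."""
    nxt = max(state - 1, 0) if action == "left" else min(state + 1, 3)
    reward = 1.0 if nxt == 3 else 0.0
    return nxt, reward


def value_iteration(sweeps=50):
    """Learning: repeatedly apply the Bellman optimality update."""
    values = {s: 0.0 for s in STATES}
    for _ in range(sweeps):
        for s in STATES[:-1]:  # the terminal state keeps value 0
            values[s] = max(
                reward + GAMMA * values[nxt]
                for nxt, reward in (step(s, a) for a in ACTIONS)
            )
    return values


def act(values, state):
    """Inference: pick the action with the best one-step lookahead value."""
    def lookahead(action):
        nxt, reward = step(state, action)
        return reward + GAMMA * values[nxt]
    return max(ACTIONS, key=lookahead)


values = value_iteration()
print(values)
print([act(values, s) for s in STATES[:-1]])
```

Once `values` has converged, `act` is cheap: it only reads the learned values and never updates them, which mirrors how a trained agent is used in deployment.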

Use Cases and Examples of Reinforcement Learning Inference

Reinforcement learning inference finds applications across various domains, enabling autonomous decision-making, control, and optimization in dynamic environments.

Some everyday use cases and examples include:

- Autonomous Robotics: Reinforcement learning agents control autonomous robots to perform tasks like navigation, object manipulation, or obstacle avoidance in real-world environments. Agents infer optimal actions by learning from sensor inputs, such as camera images or lidar data, and feedback signals, such as collision avoidance or task completion rewards.

- Game Playing: Reinforcement learning algorithms learn to play complex games like chess, Go, or video games by inferring optimal strategies through trial and error. Agents make decisions based on observed game states, available actions, and rewards from winning or achieving game objectives.

- Financial Trading: Reinforcement learning models infer trading strategies for automated trading systems by learning from historical market data and feedback signals, such as profit or loss. Agents make buy/sell decisions based on inferred policies to maximize returns and optimize portfolio performance.

- Healthcare Treatment Planning: Reinforcement learning agents infer personalized treatment plans or dosing regimens for patients with chronic diseases or complex medical conditions. Agents learn from patient data, clinical guidelines, and treatment outcomes to optimize therapy decisions and improve patient outcomes.

- Energy Management: Reinforcement learning models control energy systems, such as smart grids or renewable energy sources, to optimize resource allocation, demand-response actions, and energy storage strategies. Agents infer optimal policies to balance supply and demand, minimize costs, and maximize energy efficiency.

Techniques and Methods for Inference

Inference techniques fall into several families, each with its own characteristics.

Probabilistic Inference

Probabilistic inference is a fundamental technique in machine learning that involves estimating probability distributions over unknown variables given observed data. It allows models to reason under uncertainty about the underlying structure of the data and make predictions based on probabilistic beliefs.

In probabilistic inference, Bayes’ theorem is often used to update prior beliefs with observed evidence, resulting in posterior distributions that capture updated beliefs about the variables of interest. Standard methods for probabilistic inference include:

- Maximum likelihood estimation (MLE)

- Markov chain Monte Carlo (MCMC)

- Expectation-maximization (EM)

Bayesian Inference

Bayesian inference is a principled approach to statistical inference that relies on Bayes’ theorem to update prior beliefs about model parameters with observed data, yielding posterior distributions that quantify updated beliefs.

Bayesian inference combines three components:

- Prior distributions represent initial beliefs about model parameters before observing any data

- Likelihood functions capture the probability of observing the data given the model parameters

- Posterior distributions combine prior beliefs and observed evidence

Bayesian inference provides a flexible framework for incorporating prior knowledge, handling uncertainty, and making probabilistic predictions.
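
A small worked case shows the prior, likelihood, and posterior fitting together. The sketch below assumes a Beta prior over an unknown success rate and Bernoulli observations, a pairing for which the posterior has a closed form. The prior Beta(2, 2) and the 8-out-of-10 outcome are made-up numbers.

```python
def update_beta(prior_alpha, prior_beta, successes, failures):
    """Posterior of a Beta prior after Bernoulli observations (conjugate update)."""
    return prior_alpha + successes, prior_beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Prior: Beta(2, 2), a mild belief that the rate is near 0.5 (an assumption).
alpha, beta = 2.0, 2.0
print(beta_mean(alpha, beta))  # prior mean

# Observed data (made up): 8 successes and 2 failures.
alpha, beta = update_beta(alpha, beta, successes=8, failures=2)

print(alpha, beta)             # posterior parameters
print(beta_mean(alpha, beta))  # posterior mean, pulled toward the data
```

The posterior mean lands between the prior mean and the observed frequency of 0.8, which is the behavior the prose describes: evidence shifts the belief without discarding the prior.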

Variational Inference

Variational inference is a family of methods used to approximate complex posterior distributions, which are often intractable to compute analytically. It involves approximating the true posterior distribution with a simpler, tractable distribution by minimizing the Kullback-Leibler (KL) divergence between the two distributions.

Variational inference seeks the best approximation to the true posterior within a predefined family of distributions, such as Gaussian or neural network-based distributions. It is widely used in Bayesian statistics, deep learning, and probabilistic graphical models.
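
The idea can be illustrated on a one-dimensional grid. In this sketch, a two-bump mixture stands in for an awkward posterior, the approximating family is a Gaussian discretized on the same grid, and a brute-force search replaces the gradient-based optimizers used in practice. All of these are simplifying assumptions, and the function names are hypothetical.

```python
import math

GRID = [i / 10 for i in range(-50, 51)]  # support points from -5.0 to 5.0


def normalize(weights):
    total = sum(weights)
    return [w / total for w in weights]


def gaussian_on_grid(mu, sigma):
    """Member of the approximating family: a Gaussian discretized on GRID."""
    return normalize([math.exp(-0.5 * ((x - mu) / sigma) ** 2) for x in GRID])


def kl_divergence(q, p):
    """KL(q || p) for two discrete distributions on the same support."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)


# Stand-in for a hard posterior: a lopsided mixture of two bumps.
target = normalize([
    0.7 * math.exp(-0.5 * (x - 1.0) ** 2)
    + 0.3 * math.exp(-0.5 * ((x + 1.0) / 0.5) ** 2)
    for x in GRID
])

# Variational step: search the family for the member closest to the target.
candidates = [
    (mu / 10, sigma / 10) for mu in range(-30, 31) for sigma in range(3, 31)
]
best_mu, best_sigma = min(
    candidates, key=lambda c: kl_divergence(gaussian_on_grid(*c), target)
)
best_kl = kl_divergence(gaussian_on_grid(best_mu, best_sigma), target)
print(best_mu, best_sigma, best_kl)
```

The remaining divergence is not zero because no single Gaussian can match a two-bump target. That gap is the price of restricting the search to a tractable family.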

Monte Carlo Methods

Monte Carlo methods are computational techniques for estimating numerical quantities by simulating random sampling from probability distributions. In machine learning, Monte Carlo methods are commonly used for approximating integrals, computing expectations, and sampling from complex probability distributions.

Markov chain Monte Carlo (MCMC) algorithms, such as Metropolis-Hastings and Gibbs sampling, are particularly popular for sampling from posterior distributions in Bayesian inference. Other Monte Carlo techniques, such as importance sampling, rejection sampling, and particle filtering, are used for various inference tasks, including probabilistic modeling and uncertainty estimation.
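
Below is a minimal random-walk Metropolis-Hastings sampler, written as a sketch rather than a tuned implementation. The target is a standard normal chosen so the answer is easy to check, and the step size, seed, and sample count are arbitrary assumptions.

```python
import math
import random


def log_target(x):
    """Unnormalized log-density to sample from: a standard normal."""
    return -0.5 * x * x


def metropolis_hastings(n_samples, step_size=1.0, seed=0):
    rng = random.Random(seed)
    x = 0.0
    samples = []
    for _ in range(n_samples):
        # Symmetric random-walk proposal around the current point.
        proposal = x + rng.gauss(0.0, step_size)
        log_accept = log_target(proposal) - log_target(x)
        # Accept with probability min(1, target(proposal) / target(x)).
        if rng.random() < math.exp(min(0.0, log_accept)):
            x = proposal
        samples.append(x)  # a rejected proposal repeats the current point
    return samples


samples = metropolis_hastings(20000)
mean = sum(samples) / len(samples)
variance = sum((s - mean) ** 2 for s in samples) / len(samples)
print(round(mean, 2), round(variance, 2))
```

The sampler only ever evaluates the unnormalized density, which is why MCMC suits Bayesian posteriors whose normalizing constant is unknown. The sample mean and variance should come out close to 0 and 1 for this target.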

How Inference Techniques Power Smarter Predictions and Data-Driven Decisions

These techniques and methods for inference are essential in various machine learning applications. They can be used to make predictions, estimate uncertainties, and reason about complex probabilistic relationships in data. Whether in probabilistic modeling, Bayesian inference, or deep learning, these techniques provide powerful tools for extracting valuable insights from data and making informed decisions.


How Does Machine Learning Inference Work?

The inference process starts with preprocessing the input data, where it is transformed into a format that matches the model’s requirements. The machine learning model processes the input data to generate outputs.

Preprocessing Input Data

Machine learning models have specific requirements for the data used during inference. For instance, a model may expect a certain number of input features or require the input data to be normalized within a specific range.

A preprocessing phase transforms the input data to meet these requirements before being fed into the model. This process helps to ensure smooth operations during inference and that the model performs accurately.
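
A preprocessing step of this kind might look like the following sketch, which checks the feature count and applies min-max scaling using ranges recorded at training time. The feature names, the sample rows, and the decision to clip out-of-range values are assumptions for the example.

```python
def fit_scaler(training_rows):
    """Record the per-feature minimum and maximum seen in the training data."""
    columns = list(zip(*training_rows))
    return [(min(col), max(col)) for col in columns]


def preprocess(row, scaler):
    """Validate the feature count, then scale each feature into [0, 1]."""
    if len(row) != len(scaler):
        raise ValueError(f"expected {len(scaler)} features, got {len(row)}")
    scaled = []
    for value, (lo, hi) in zip(row, scaler):
        x = (value - lo) / (hi - lo) if hi > lo else 0.0
        scaled.append(min(max(x, 0.0), 1.0))  # clip values outside the training range
    return scaled


# Hypothetical training data: [square_metres, bedrooms]
training_rows = [[50.0, 1.0], [100.0, 3.0], [150.0, 5.0]]
scaler = fit_scaler(training_rows)

print(preprocess([125.0, 2.0], scaler))
```

The scaler is fitted once on training data and reused unchanged at inference time. Recomputing the ranges from live inputs would silently change what the model sees.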

Generating Outputs

Once the input data is prepared, the machine learning model processes the information to create outputs. Depending on the complexity of the model, this process can take anywhere from milliseconds to several seconds.

For example, if you have a simple linear regression model that predicts house prices, it might take only a few milliseconds to process a new data sample and generate an output. In contrast, a large natural language processing model can take several seconds to generate a text output.

An Example of ML Inference in Action

Machine learning inference can be illustrated with an example of image recognition. Suppose you have a trained model that can identify different types of animals in photos. First, the inference pipeline preprocesses the input data, which in this case is an image file of a cat.

This step transforms the image to meet the model’s requirements, such as a specific size and color format. The model then processes the image to generate outputs. In this case, the output might be that there is an 85% chance the image contains a cat.
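
A probability such as the 85% above typically comes from converting the model’s raw scores into a distribution over classes. The sketch below uses the softmax function for that conversion. The class names and raw scores are invented, chosen so the top probability lands near the figure in the example.

```python
import math


def softmax(scores):
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["cat", "dog", "rabbit"]  # hypothetical class names
raw_scores = [3.2, 1.1, 0.4]       # hypothetical outputs of the model's last layer

probabilities = softmax(raw_scores)
best = max(range(len(labels)), key=lambda i: probabilities[i])
print(labels[best], round(probabilities[best], 2))
```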

The Components of Machine Learning Inference

To deploy machine learning inference, you need three main components:

Data Source

A data source captures real-time data from an internal source managed by the organization, external sources, or application users. Common examples of data sources for ML applications are log files, transactions stored in a database, or unstructured data in a data lake.

Host System

The ML model’s host system receives data from data sources and feeds it into the ML model. It provides the infrastructure on which the ML model’s code can run. After the ML model generates outputs (predictions), the host system sends these outputs to the data destination.

Common examples of host systems are an API endpoint accepting inputs through a REST API, a web application receiving inputs from human users, or a stream processing application processing large volumes of log data.

Data Destination

The data destination is where the host system delivers the ML model’s outputs. It can be any data repository, such as a database, a data lake, or a stream processing system that feeds downstream applications.

For example, a data destination could be the database of a web application, which stores predictions and allows them to be viewed and queried by end users. In other scenarios, the data destination could be a data lake, where predictions are stored for further analysis by big data tools.
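
The three components can be wired together in a few lines. In this sketch a generator plays the data source, a plain function plays the host system, and a list stands in for the destination database. The record fields and the scoring rule are placeholders, not a real model.

```python
def data_source():
    """Data source: yields incoming records (hard-coded here for illustration)."""
    yield {"id": 1, "amount": 20.0}
    yield {"id": 2, "amount": 950.0}


def model(record):
    """Stand-in for a trained model: a made-up rule that returns a risk score."""
    return min(record["amount"] / 1000.0, 1.0)


def host_system(source, destination):
    """Host system: pulls from the source, runs the model, writes the output."""
    for record in source:
        destination.append({"id": record["id"], "score": model(record)})


predictions = []  # data destination: a list standing in for a database table
host_system(data_source(), predictions)
print(predictions)
```

Because the host system depends only on "something iterable" and "something with `append`", the source and destination can be swapped for a queue or a database client without touching the model code.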

What Is a Machine Learning Inference Server?

Machine learning inference servers, or engines, execute your model’s algorithm and return the inference output. These servers accept input data, pass it to a trained ML model, process it, and generate the output.

For the server to load a model correctly, the tools used to create the model must export it in a file format that the ML inference server can read.

Ensuring Model Portability

For instance, Apple’s Core ML inference server only reads models stored in its own format, such as .mlmodel. If your model is created using TensorFlow, you can convert it to that format using a conversion tool such as Apple’s Core ML Tools (coremltools).

To improve interoperability across different ML inference servers and model training environments, you can use the Open Neural Network Exchange (ONNX) format. ONNX is an open format for representing deep-learning models, enabling better portability between different ML inference servers and supporting tools from various vendors.

Hardware for Deep Learning Inference

The following hardware systems are commonly used to run machine learning and deep learning inference workloads.

Central Processing Unit (CPU)

A CPU can process instructions for performing a sequence of requested operations. It contains billions of transistors and powerful cores to handle massive operations and memory consumption. CPUs can support any operation without customized programs.

Here are the four building blocks of CPUs:

- Control unit (CU): directs the processor’s operations and informs other components how to respond to instructions sent to it.

- Arithmetic logic unit (ALU): performs bitwise logical operations and integer arithmetic.

- Address generation unit (AGU): calculates the addresses used to access the main memory.

- Memory management unit (MMU): translates virtual addresses to physical addresses and manages the CPU’s access to memory.

The universality of CPUs means they include logic and checks that deep learning workloads do not need. As a result, CPUs do not fully exploit deep learning’s parallelism opportunities.

Graphical Processing Units (GPU)

GPUs are specialized hardware designed to perform many simple operations simultaneously. While GPUs and CPUs share similar spatial architectures, they differ significantly in structure and function. CPUs typically consist of a few Arithmetic Logic Units (ALUs) optimized for sequential processing, while GPUs feature thousands of ALUs that enable the parallel execution of many tasks simultaneously.

This parallel processing capability makes GPUs particularly well-suited for deep learning applications. GPUs are energy-intensive, which limits their use on edge devices that may not have sufficient power to support them.

Field Programmable Gate Array (FPGA)

FPGAs (Field-Programmable Gate Arrays) are specialized hardware that users can configure after manufacturing.

They consist of:

- An array of programmable logic blocks

- A hierarchy of configurable interconnections that allow for flexible wiring of blocks in different configurations

Challenges in Converting High-Level Programming Languages to FPGA Code

Users can write code in hardware description languages (HDLs) like VHDL or Verilog to define the connections and how digital components are implemented. FPGAs are highly efficient for performing numerous multiply-and-accumulate operations, making them ideal for implementing parallel circuits.

HDLs, which define hardware components like counters and registers, are not traditional programming languages, posing challenges, especially when converting a Python library into FPGA code.

Custom AI Chips (SoC and ASIC)

Custom AI chips provide hardware built especially for artificial intelligence (AI), such as systems on chip (SoCs) and application-specific integrated circuits (ASICs) for deep learning. Companies worldwide are developing custom AI chips, dedicating many resources to creating hardware that can perform deep learning operations faster than existing hardware, like GPUs.

AI chips are designed for different purposes: some are built for training, while others are customized for inference. Notable solutions include:

- Google’s TPU

- NVIDIA’s NVDLA

- Amazon’s Inferentia

- Intel’s Habana Labs

Optimizing AI Workflows: Leveraging Serverless Inference for High-Performance, Cost-Effective Language Models

Inference delivers OpenAI-compatible serverless inference APIs for top open-source LLM models, offering developers the highest performance at the lowest cost in the market. Beyond standard inference, Inference provides specialized batch processing for large-scale async AI workloads and document extraction capabilities designed explicitly for RAG applications.

Start building with $10 in free API credits and experience state-of-the-art language models that balance cost-efficiency with high performance.


Machine Learning Inference vs Training

In machine learning, training and inference are separate phases with distinct objectives. Training focuses on developing a model using data and algorithms, much like a kitchen preparing a recipe. Inference, resembling a restaurant's dining area, emphasizes delivering accurate and timely predictions to users.

Understanding the difference between these phases is crucial. Cassie Kozyrkov's restaurant analogy effectively illustrates this: a valuable product (pizza) requires a well-defined recipe (model) and quality ingredients (data), prepared with appropriate appliances (algorithms).

Integrating Training and Inference

Just as a restaurant needs a functioning kitchen and a pleasant dining area, machine learning projects require both effective training and effective inference. There is no service without a reliable kitchen (the data science team), and a kitchen is only valuable if customers appreciate its output.

The training and inference processes must be seamlessly integrated and continuously optimized for optimal customer experience and return on investment.

What Happens in Machine Learning Training?

Training a machine learning model requires the use of training and validation data. The training data is used to develop the model, whereas the validation data is used to tune the model’s settings and make it as robust as possible. This means that at the end of the training phase, the model should be able to make predictions on new data with fewer errors. We can consider this phase as the kitchen side.

Our comprehensive guide, What Is Machine Learning, explains machine learning, how it differs from AI and deep learning, and why it is one of the most exciting fields in data science.

What Happens in Machine Learning Inference?

Dishes can only be served when ready to be consumed, just as a machine learning model needs to be trained and validated before it can be used to make predictions. In the restaurant analogy, inference is the dining area, where the finished product reaches the customer. Both sides require attention to deliver better, more accurate results, and therefore customer and business satisfaction.

Why Understanding the Differences Is Important

Knowing the difference between machine learning inference and training is crucial because it helps you allocate compute resources appropriately, both for training and for inference once the model is deployed into the production environment. Model performance often degrades in production as live data drifts away from the training data. A proper understanding of this difference can help in adopting the right industrialization strategies for the models and maintaining them over time.

Key Considerations When Choosing Between Inference and Training

Whether to use an existing pre-trained model for inference or to train a brand new model depends on the type of problem, the end goal, and the existing resources. The key considerations include, but are not limited to:

- Time to market: It is essential to consider how much time is available when choosing between training a model and using an existing one. Using a pre-trained model requires less time and may give a business team a competitive advantage.

- Resource constraints or development cost: Training a model can require significant data and compute resources, depending on the use case. Using a pre-trained model usually requires fewer resources, which makes it easier to reach good performance in a short amount of time.

- Model performance: Training a machine learning model is an iterative process that does not always guarantee a robust model. A pre-trained model can provide better performance than an in-house model. Model explainability and bias mitigation are now crucial, and pre-trained models may need to be updated to meet those requirements.

- Team expertise: Building a robust machine learning model requires strong expertise in model training and industrialization. That expertise is not always available in-house, so relying on pre-trained models can be the best alternative.

Best Practices for Inference

Model Evaluation and Validation

Effective model evaluation and validation are crucial for ensuring the reliability and generalization of inference results. This involves splitting the dataset into training, validation, and testing sets to assess the model’s performance on unseen data. Metrics such as accuracy, precision, recall, F1-score, and area under the curve (AUC) are commonly used to evaluate classification models.

For regression tasks, metrics such as mean squared error (MSE), mean absolute error (MAE), and R-squared are commonly used.

Cross-validation techniques such as k-fold cross-validation and stratified cross-validation can provide more robust estimates of model performance by mitigating the impact of data variability.
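
The classification metrics and the k-fold split can both be written out by hand, which makes clear what each one measures. This is a teaching sketch with made-up labels. The function names are hypothetical, and a real project would normally use a tested library instead.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


y_true = [1, 0, 1, 1, 0, 0, 1, 0]  # made-up ground truth
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]  # made-up predictions
print(precision_recall_f1(y_true, y_pred))

for train, validation in k_fold_indices(n_samples=6, k=3):
    print(train, validation)
```

Every sample appears in exactly one validation fold, so each data point is used once for evaluation and `k - 1` times for training.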

Hyperparameter Tuning

Hyperparameters are crucial in determining machine learning models’ performance and generalization ability. Through systematic experimentation and optimization, hyperparameter tuning involves selecting the optimal values for parameters such as:

- Learning rate

- Regularization strength

- Tree depth

- Batch size

Techniques such as grid search, random search, and Bayesian optimization are commonly used for hyperparameter tuning to find the best configuration that maximizes model performance while avoiding overfitting.
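
Grid search is the simplest of these to sketch: train once per candidate value and keep the one with the lowest validation error. The example below tunes the regularization strength of a one-feature ridge regression. The data points and the grid values are made up, and the closed-form fit without an intercept is a simplification.

```python
def fit_ridge(xs, ys, strength):
    """One-feature ridge regression without intercept: closed-form weight."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + strength)


def mse(weight, xs, ys):
    """Mean squared error of the prediction weight * x."""
    return sum((weight * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up data, split into a training set and a validation set.
train_x, train_y = [1.0, 2.0, 3.0], [2.5, 3.5, 7.0]
valid_x, valid_y = [4.0, 5.0], [8.0, 10.0]

grid = [0.0, 0.1, 1.0, 10.0]  # candidate regularization strengths
results = {
    s: mse(fit_ridge(train_x, train_y, s), valid_x, valid_y) for s in grid
}
best_strength = min(results, key=results.get)
print(best_strength, results[best_strength])
```

With no regularization the model overshoots on the noisy training points, and with too much it shrinks the weight too far. The validation set is what reveals the middle value as the best trade-off on this data.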

Interpretability and Explainability

Interpretability and explainability are essential to inference, especially in domains where model decisions have significant real-world consequences, such as healthcare, finance, and criminal justice. Interpretability refers to the ability to understand and explain how a model makes predictions or classifications, while explainability involves providing transparent insights into the factors and features that influence model outputs.

Techniques such as feature importance analysis, model-agnostic methods (e.g., SHAP, LIME), and surrogate models can help improve the interpretability and explainability of machine learning models, enabling stakeholders to trust and understand the underlying mechanisms driving inference results.
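
SHAP and LIME need their own libraries, so the sketch below swaps in permutation importance, a simpler model-agnostic form of feature importance analysis: shuffle one feature column and measure how much the error grows. The stand-in model, the data, and the function names are all invented for the example.

```python
import random


def model(row):
    """Stand-in for a trained model: depends on feature 0, ignores feature 1."""
    return 3.0 * row[0]


def mean_squared_error(rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, feature, seed=0):
    """Increase in error after shuffling the values of one feature column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(rows, targets)
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    shuffled = [
        r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)
    ]
    return mean_squared_error(shuffled, targets) - baseline


rows = [[float(i), float(i % 3)] for i in range(20)]  # made-up inputs
targets = [3.0 * r[0] for r in rows]                  # driven by feature 0 only

print(permutation_importance(rows, targets, feature=0))
print(permutation_importance(rows, targets, feature=1))
```

Shuffling the feature the model relies on breaks its predictions, while shuffling the ignored feature changes nothing. The method only calls the model as a black box, which is what makes it model-agnostic.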

3 Primary Challenges & Solutions When Setting Up ML Inference

1. Infrastructure Cost: Managing the Cost of Inference

Inference can be costly. Machine learning models require significant computational resources to produce outputs. For this reason, very large models can be expensive to run, and the cost grows with every request. Organizations pay to run ML inference workloads in data centers or cloud environments that charge for the underlying compute resources.

It’s essential to ensure that inference workloads fully utilize the available hardware infrastructure, minimizing the cost per inference. One way to do this is to run queries concurrently or in batches.

For example, when an ML model is deployed on a server, it often receives multiple incoming queries at nearly the same time. Instead of answering each request individually, the server can group the queries into a batch and process them together, which improves hardware utilization and reduces the cost per inference.
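
Batching can be sketched as grouping pending requests before each model call. Here a counter stands in for the per-call overhead that batching amortizes. The `CountingModel` class, the batch size of 4, and the doubling "prediction" are placeholders.

```python
def batched(items, batch_size):
    """Split a list of requests into consecutive batches."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


class CountingModel:
    """Stand-in model that records how many times it is invoked."""

    def __init__(self):
        self.calls = 0

    def predict(self, batch):
        self.calls += 1
        return [2 * x for x in batch]  # placeholder computation


requests = list(range(10))  # ten pending requests (made up)
model = CountingModel()
outputs = []
for batch in batched(requests, batch_size=4):
    outputs.extend(model.predict(batch))

print(model.calls, outputs)
```

Ten requests are served with three model calls instead of ten. The trade-off is that a request may wait briefly for its batch to fill, so batch size is usually tuned against the latency budget.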

2. Latency: Reducing Inferencing Delay

Like any other software application, machine learning models require time to produce output when called upon. This delay is known as latency, and it can significantly impact the performance of inference systems. A common requirement for inference systems is a maximum latency bound: the lower the latency, the better.

Mission-critical applications often require real-time inference. Examples include autonomous navigation, critical material handling, and medical equipment. Other use cases can tolerate higher latency. For example, some big data analytics use cases do not require an immediate response. You can run these analyses in batches based on the frequency of inference queries.
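
Before setting a latency requirement, it helps to measure what the system does today. The sketch below times each call and reports percentiles using the nearest-rank method. The `predict` function is a dummy workload, and the choice of p50 and p95 is an assumption about which figures matter.

```python
import math
import time


def measure_latency(predict, inputs):
    """Return the latency of each call in milliseconds."""
    latencies = []
    for item in inputs:
        start = time.perf_counter()
        predict(item)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def percentile(values, pct):
    """Nearest-rank percentile of a list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def predict(item):
    """Dummy workload standing in for a model call."""
    return sum(i * i for i in range(1000)) + item


latencies = measure_latency(predict, range(200))
p50 = percentile(latencies, 50)
p95 = percentile(latencies, 95)
print(f"p50={p50:.3f} ms, p95={p95:.3f} ms")
```

Tail percentiles such as p95 are usually more informative than the average, because a real-time system is judged by its slowest responses.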

3. Interoperability: Simplifying the Model Deployment Process

When developing ML models, teams use frameworks like:

- TensorFlow

- PyTorch

- Keras

Different teams may use various tools to solve their specific problems. When running inference in production, these different models must play well together. Models may need to run in diverse environments, including on client devices, at the edge, or in the cloud. Containerization has become a common practice that can ease the deployment of models to production.

Many organizations use Kubernetes to deploy large-scale models and organize them into clusters. Kubernetes makes it possible to deploy multiple instances of inference servers and scale them up and down as needed across public clouds and local data centers.

Start building with $10 in Free API Credits Today!

Inference, in machine learning, is the process of making predictions on new data using a previously trained model. Inference is crucial because it is how machine learning models create value. For example, a model may predict which customers will likely churn.

The model creates no value until it is deployed and someone runs inference on it with data about the current customer base. When that process can be completed quickly, the business can act on the results and mitigate churn. Slow inference speeds can hinder the decision-making process and drastically reduce the value of a machine learning model.

